Can you create a sci-fi movie using Metahuman in Unreal Engine 5?

MetaHuman characters in Unreal Engine 5 (UE5) enable indie teams to create cinematic-quality sci-fi movies using real-time rendering and photorealistic, fully rigged digital humans suited to close-ups and emotional performances. Examples include the 2024 sci-fi short Firmware and Mandatory, a 2.5-minute short created by a solo artist in three months using UE5.3, MetaHumans, Lumen, and ray tracing. MetaHumans feature 8K skin materials and strand-based hair, supporting complex actions and dialogue. UE5’s real-time feedback accelerates iteration for fully animated or hybrid live-action/CG films, lowering barriers for high-quality sci-fi storytelling.

What tools do I need to create a sci-fi movie using Metahuman and UE5?

Essential tools include:

- Unreal Engine 5: Free platform for scene assembly, animation, VFX, lighting, and rendering, including the MetaHuman framework and Sequencer cinematic editor.

- MetaHuman Creator: Free cloud-based app to customize photorealistic characters’ faces, bodies, and features, downloadable via Quixel Bridge into UE5.

- Quixel Bridge: Tool integrated in UE5 to import MetaHumans and assets like Megascans for environments, ensuring seamless asset integration.

- Capable PC with GPU: Requires multi-core CPU, 32GB+ RAM, and Nvidia RTX 3070/4070 or better GPU with 8–16GB VRAM to handle MetaHumans’ high-poly assets and dynamic lighting.

- Motion Capture Hardware (Optional): Inertial mocap suits (e.g., Rokoko, Xsens) or iPhone (12+ for MetaHuman Animator, Live Link Face app) for body/facial capture to enhance animation realism.

- Audio Recording Equipment: High-quality microphone, audio interface, and DAW for recording and editing dialogue to ensure professional lip-sync and production value.

- 3D Content Creation Software (Optional): Blender, Maya, or similar for modeling custom props, costumes, or tweaking animations, with MetaHuman rigs exportable to Maya.

- Source Control and Storage: Git, Perforce, or backups and ample disk space (dozens of gigabytes) for managing large Unreal projects with high-quality assets.

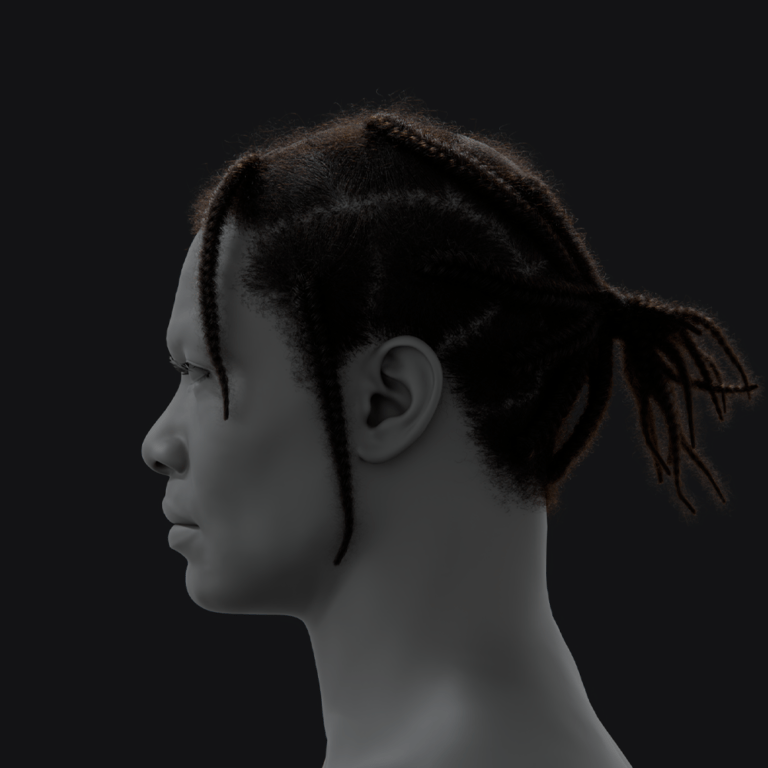

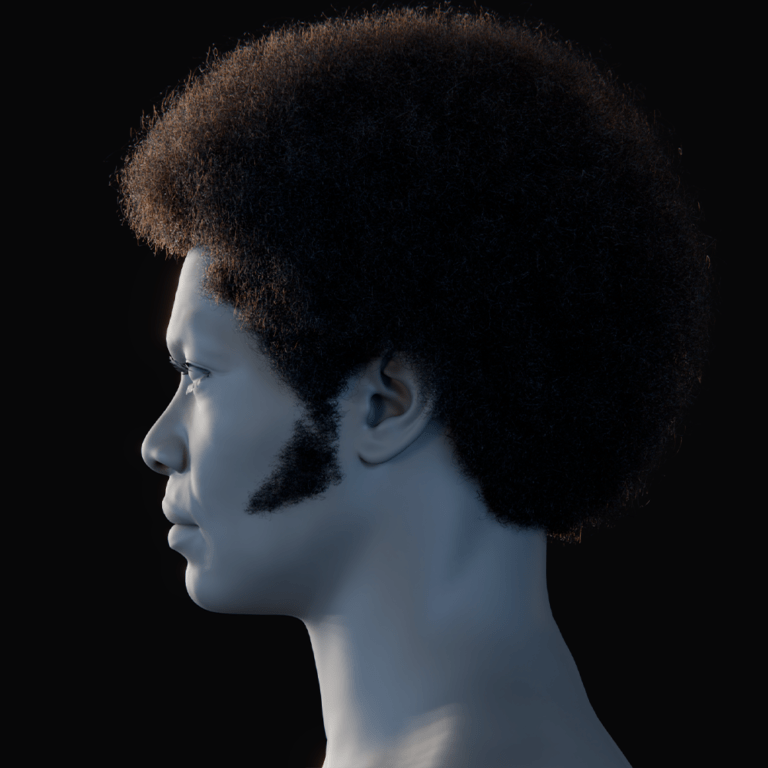

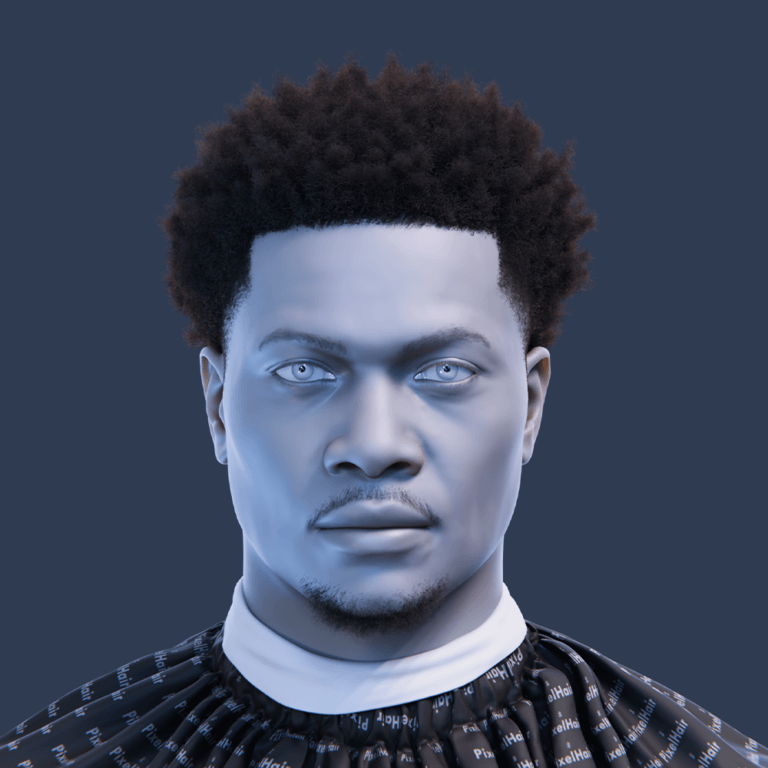

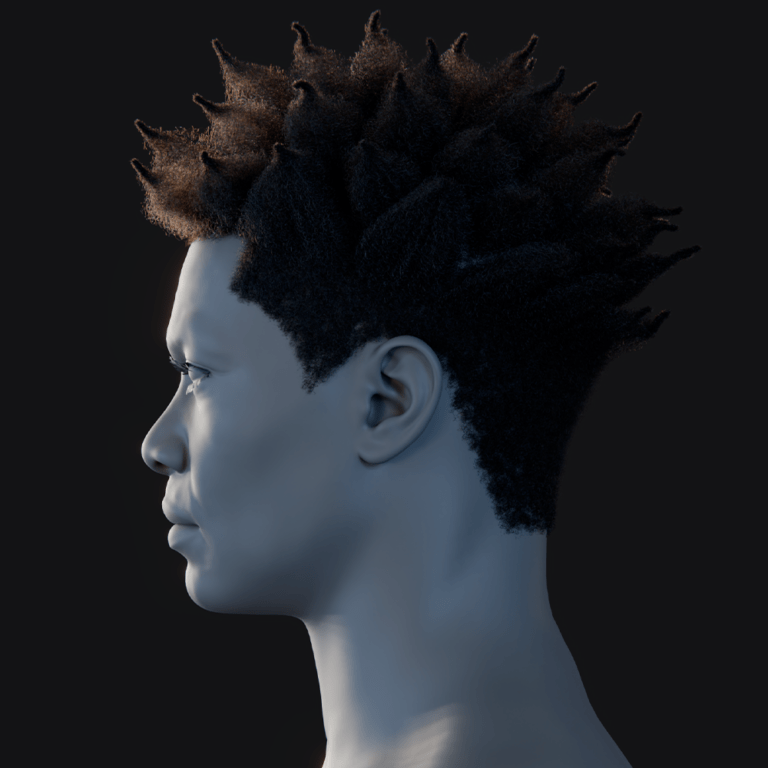

- Asset Libraries: Unreal Marketplace and Quixel Megascans for sci-fi props, vehicles, and environments, plus specialized packs like PixelHair or space suit kits.

Keep the engine and plugins up to date through the Epic Games Launcher, and test hardware compatibility (e.g., iPhone 12+ for facial capture) before production begins.
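To put a number on the disk space mentioned in the storage bullet, here is a rough sketch in Python that estimates the size of a rendered image sequence. It assumes 4 channels at 16 bits each and a 24 fps frame rate, and it ignores file compression and headers, so treat the result as an upper bound rather than a measured figure. The function names are illustrative only.

```python
def frames_for(duration_seconds, fps):
    """Whole number of frames needed to cover a duration at a given frame rate."""
    return round(duration_seconds * fps)


def uncompressed_sequence_bytes(width, height, frames, channels=4, bytes_per_channel=2):
    """Upper-bound size of an image sequence, ignoring compression and headers."""
    return width * height * channels * bytes_per_channel * frames


if __name__ == "__main__":
    # A 2.5-minute short at an assumed 24 fps, rendered at 4K.
    frames = frames_for(150, 24)
    size = uncompressed_sequence_bytes(3840, 2160, frames)
    print(f"{frames} frames, about {size / 1e9:.1f} GB before compression")
```

Compressed formats will come in well below this estimate, but it is a useful ceiling when sizing drives for final renders on top of the project itself.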

What is the best workflow for making a sci-fi short film with Metahuman?

Key stages include:

- Pre-Production: Develop a script/storyboard, identifying MetaHumans, environments (e.g., spaceship, alien planet), and VFX-heavy sequences, using animatics in Sequencer for camera planning.

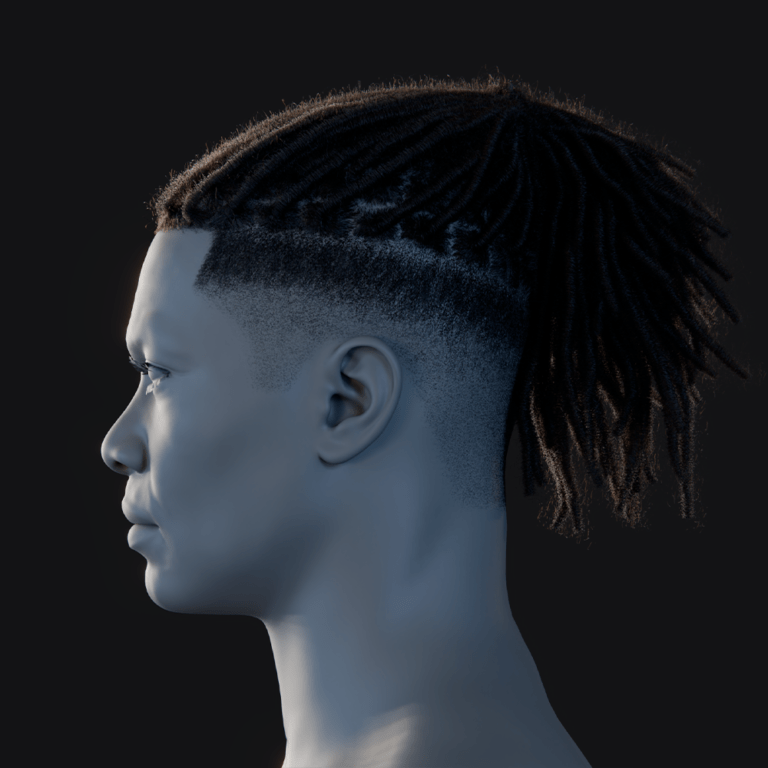

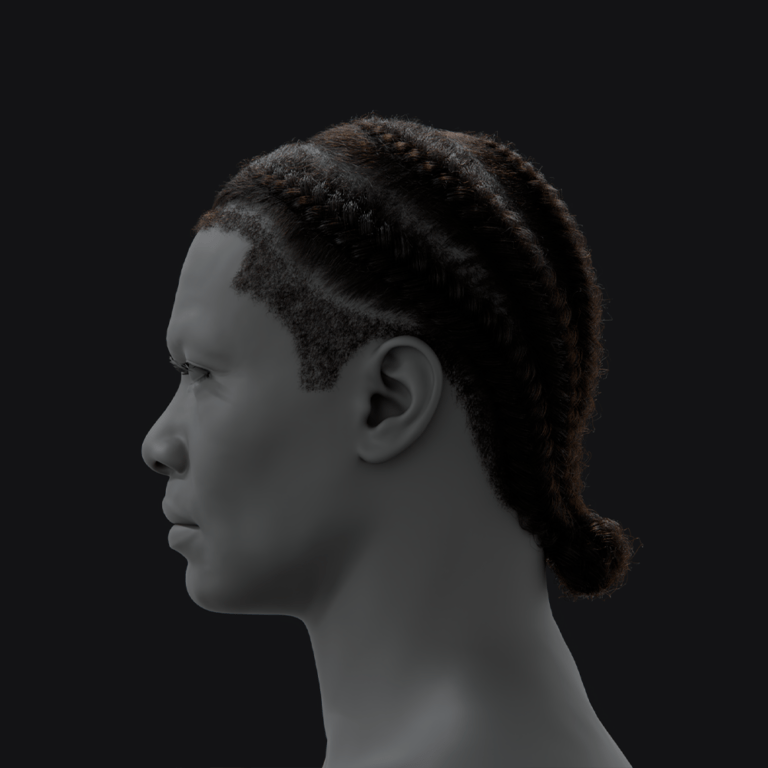

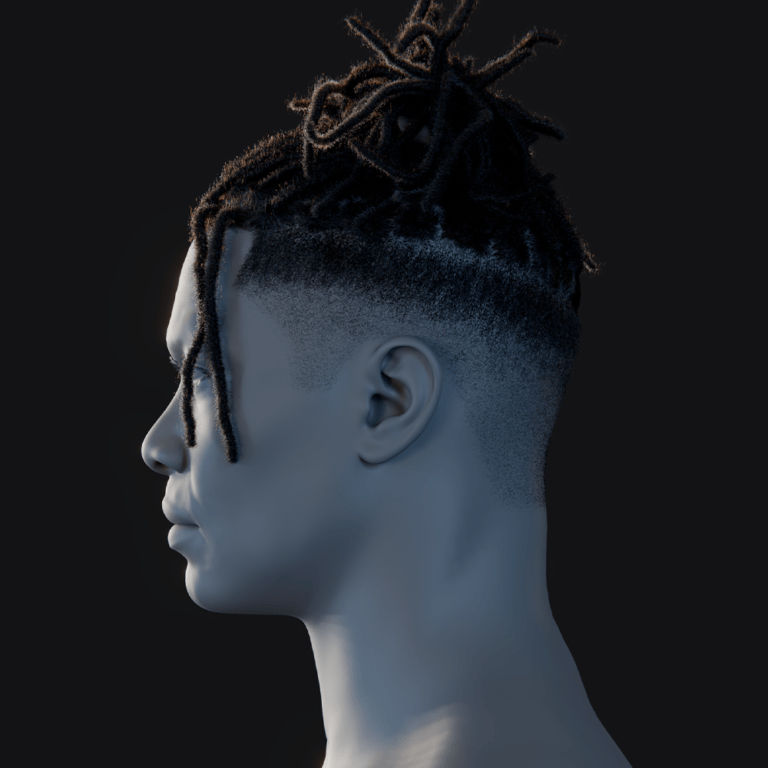

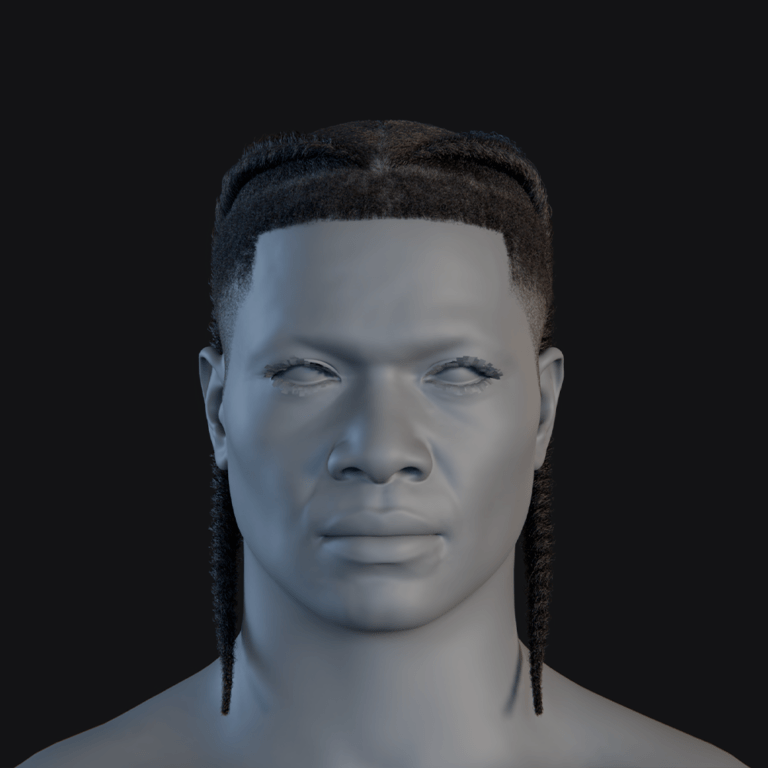

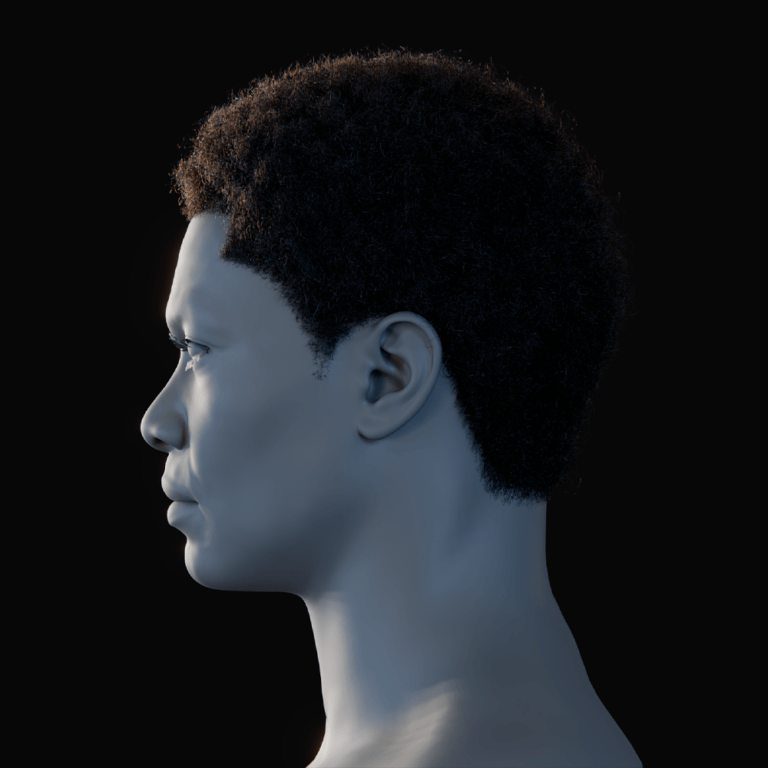

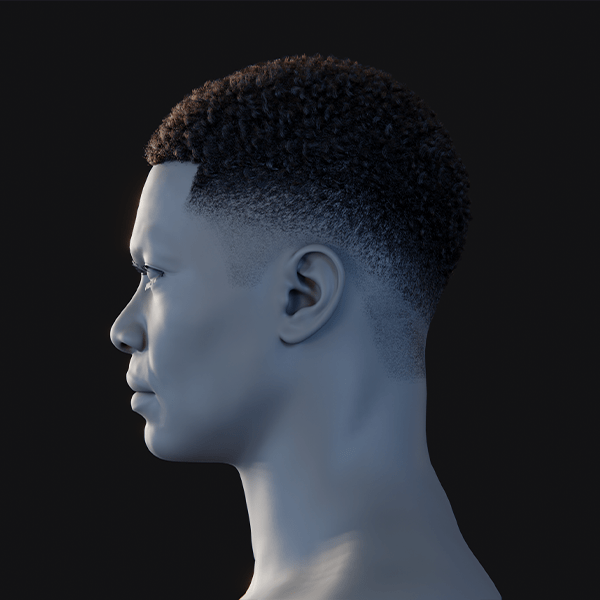

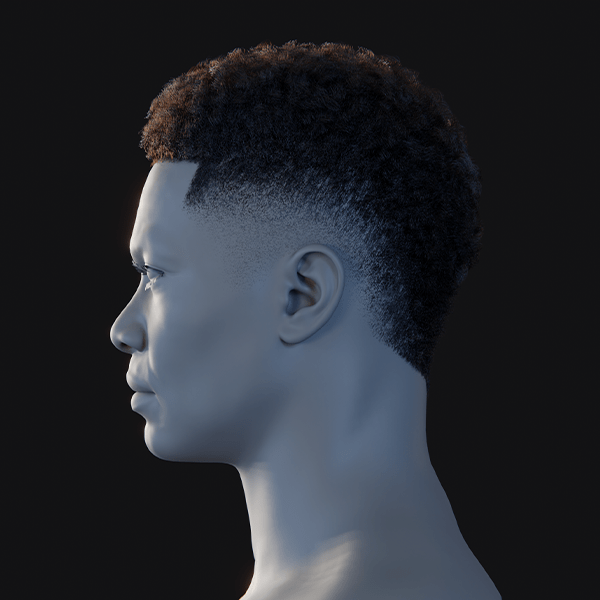

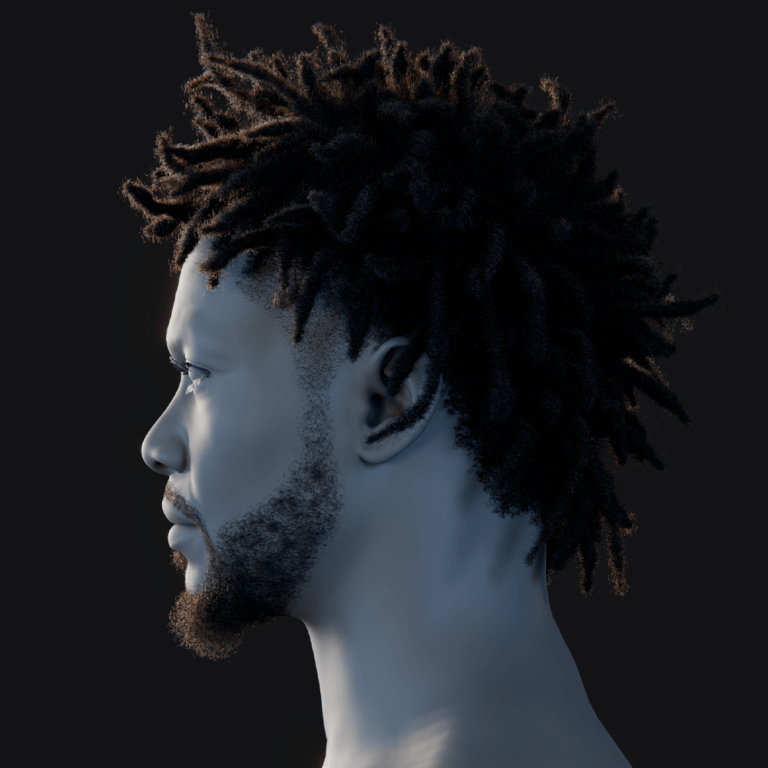

- Designing MetaHuman Characters: Customize actors in MetaHuman Creator, adjusting facial features, hairstyles, and clothing for sci-fi roles, importing via Quixel Bridge and tweaking in UE5.

- Creating/Importing Costumes and Props: Use marketplace assets or model custom gear (e.g., space suits) in DCC tools, attaching to MetaHumans, and importing props like weapons or spacecraft.

- Building the Environment: Block out sets with simple shapes, populate with modular assets or Megascans, and use UE5’s Landscape system for planetary terrain and Sky Atmosphere for skies.

- Lighting Setup: Use Lumen for real-time global illumination, point/spotlights for interiors, or sky systems for exteriors, enabling ray-traced reflections/shadows for metallic surfaces.

- Animation & Performance:

- Body Animation: Combine keyframe animation, mocap, or Marketplace assets retargeted to MetaHumans, using Control Rig for manual posing.

- Facial Animation & Lip Sync: Use MetaHuman Animator or Live Link Face for expressions, with audio-to-face plugins for lip sync, focusing on emotional nuances.

- Timing & Blocking: Arrange animations in Sequencer for storyboard pacing, creating separate sequences for scenes to ensure narrative flow.

- Cinematography: Place cameras in Sequencer with real-world lens settings for wide, medium, or close-up shots, animating for dynamic movements.

- VFX Integration: Add sci-fi effects like holograms or lasers via Niagara, ensuring MetaHuman interactions (e.g., triggering holograms) and compositing live-action if needed.

- Audio: Record dialogue, sync in Sequencer, and add sci-fi sound effects (e.g., engines, weapons) with spatialized sound for immersion.

- Post-Processing and Color Grading: Apply bloom, lens flares, or color grading (e.g., blue for labs) via post-process volumes to enhance mood and simulate camera imperfections.

- Rendering: Export via Movie Render Queue in 1080p/4K with anti-aliasing, using Lumen or Path Tracer for hero shots to optimize quality.

- Editing: Assemble shots in Premiere or Resolve, adding overlays, subtitles, or sound mixes, with UE5’s real-time iteration for quick adjustments.

- Review and Iteration: Adjust lighting, timing, or animations and re-render as needed, leveraging UE5’s real-time feedback for fast refinements.

This workflow balances creative and technical execution with flexibility for iteration.

How do I set up Metahuman characters for a cinematic sci-fi scene?

After importing MetaHumans via Quixel Bridge, configure them for sci-fi scenes:

- Import and Verify Assets: Import MetaHuman into UE5’s Content Browser (Body, Face, Materials, Clothes sub-folders), dragging the Blueprint into the scene, initially in T-pose/A-pose.

- Quality Settings: Set Forced LOD = 0 in the MetaHuman Blueprint’s LOD Sync component for maximum detail (LOD 0) during close-ups, ensuring high-quality face, skin, and hair rendering.

- Configure Materials: Adjust material instances for sci-fi appearances (e.g., holographic or slimy skin) by tweaking shininess or color for narrative effects (e.g., pale for space-lost characters).

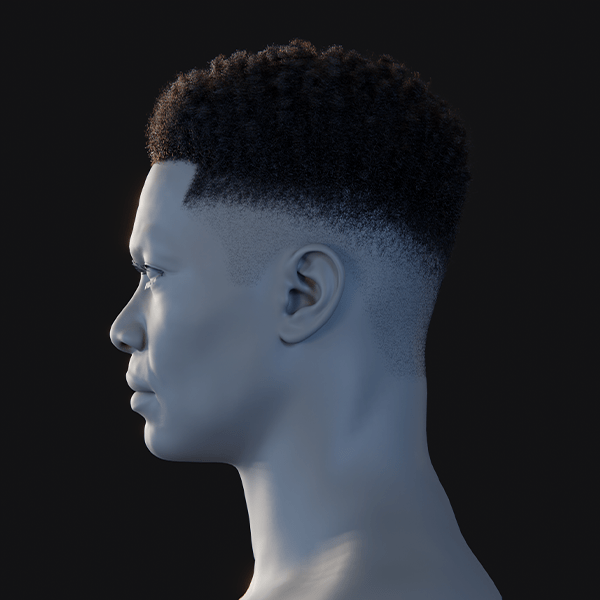

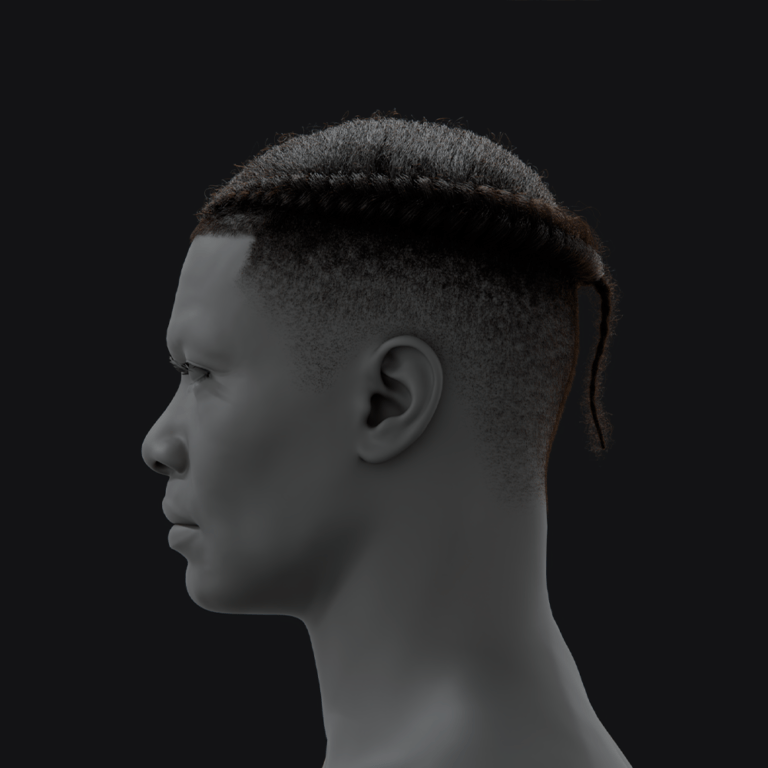

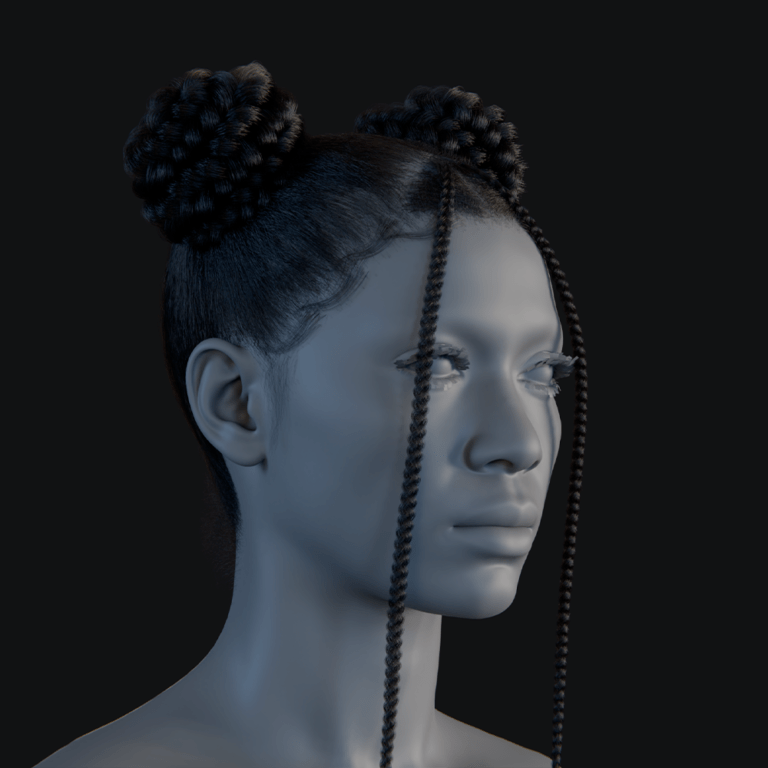

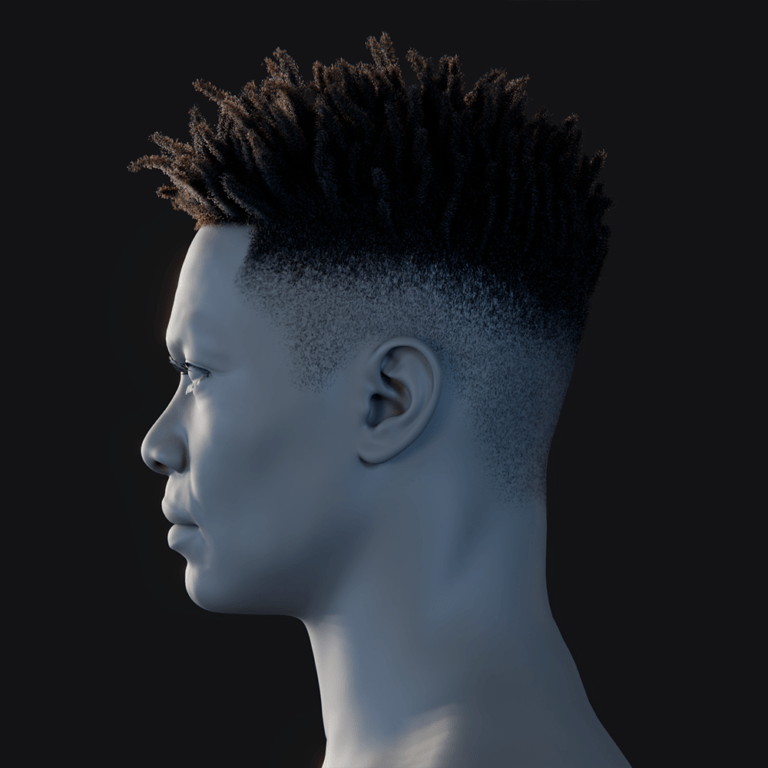

- Attach Hair Grooms: Ensure strand-based hair grooms are attached in the Blueprint, testing physics simulation (gravity, sway) for realistic movement in cinematics.

- Clothing and Gear Setup:

- Assign custom sci-fi clothing (e.g., space suits) to the Body > Torso slot in the Blueprint, replacing default garments per asset instructions.

- Hide underlying body parts to prevent clipping, ensuring a polished look.

- Attach gear (e.g., helmets) to sockets like the “Head” bone, positioning for fit.

- Animation Setup: Add a Control Rig track in Sequencer for keyframe animation or use Live Link/AnimBP for mocap, retargeting UE5 mannequin animations to the MetaHuman skeleton.

- Testing and Calibration: Test facial animations via the Face Subsystem with Live Link, ensuring correct eye blinks and mouth movements, calibrating eye gaze for natural target tracking.

- Optimizations:

- Lower LOD for distant characters while editing, raising for render.

- Disable hair physics during Sequencer scrubbing, re-enabling for rendering.

- Selectively disable cast shadows on hair/eyelashes for FPS gains while working, enabling for final render.

- Use levels/sub-levels to isolate characters for editing, enhancing scalability.

This ensures MetaHumans are cinematic-ready with custom sci-fi appearances and optimized performance.
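The editing-versus-render toggles in the optimization list are easy to forget before a final render. The sketch below, in plain Python rather than the Unreal API, records the two states as presets and lists what still needs restoring. The class, the preset names, and the editing LOD value of 2 are assumptions for illustration; only Forced LOD = 0 for final quality comes from the steps above.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CharacterPreset:
    forced_lod: int           # 0 is the highest-detail LOD
    hair_physics: bool        # groom simulation on or off
    hair_casts_shadows: bool  # shadow casting on hair and eyelashes


# Hypothetical working preset: lighter settings for smooth scrubbing.
EDITING = CharacterPreset(forced_lod=2, hair_physics=False, hair_casts_shadows=False)
# Final preset: everything restored for the render.
FINAL_RENDER = CharacterPreset(forced_lod=0, hair_physics=True, hair_casts_shadows=True)


def settings_to_restore(current, target=FINAL_RENDER):
    """Names of settings whose values differ from the target preset."""
    return [
        field.name
        for field in fields(current)
        if getattr(current, field.name) != getattr(target, field.name)
    ]


if __name__ == "__main__":
    print(settings_to_restore(EDITING))
```

An empty list means the character matches the final-render preset; anything else is a reminder of what to switch back on.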

How do I create space suits or futuristic costumes for Metahuman characters?

To outfit MetaHumans in sci-fi attire:

- Pre-made Assets: Use Marketplace assets like “MetaHuman Astronaut Space Suit,” weighted to the MetaHuman skeleton, and apply per instructions for quick integration.

- Custom Clothing:

- Export MetaHuman body (FBX via Quixel Bridge or Unreal) for accurate proportions.

- Model outfits in Marvelous Designer for realistic cloth folds, rigging to the MetaHuman skeleton.

- Import into Unreal, assign to MetaHuman, and hide covered body parts to prevent clipping.

- Kitbashing: Combine existing assets (e.g., astronaut suit with custom emblems or armor pieces), attaching accessories (e.g., jetpacks) to bones via Unreal’s Attach to Component.

- Clothing Physics: Apply Chaos Cloth to dynamic parts (e.g., capes, coat flaps), painting weights for simulation to ensure natural movement.

- Material Customization: Adjust material instances for narrative (e.g., shiny new or scuffed suits), adding decals like logos for storytelling.

- Proportions: Stick to standard MetaHuman body types for asset compatibility, adjusting costumes for non-standard proportions if needed.

- Hair and Helmets: Use short or no hair under helmets to avoid clipping, switching hairstyles or hiding hair meshes as needed.

These methods create unique sci-fi costumes that move naturally with MetaHumans.

How do I build sci-fi environments in Unreal Engine for Metahuman use?

To create immersive sci-fi environments:

- Concept: Use concept art to define the visual style (e.g., metallic corridors, neon cities, barren planets), guiding asset and lighting choices.

- Block Out: Use simple shapes or UE5 modeling tools to define structures (e.g., spaceship rooms, city layouts), ensuring MetaHuman scale.

- Modular Assets: Assemble environments with Marketplace sci-fi kits (panels, pipes) and Megascans for details (rusted metal, industrial props).

- Terrain and Sky: Sculpt alien planet terrain with UE5’s Landscape system, adding Megascans foliage/rocks, and use Sky Atmosphere for alien skies (e.g., reddish Martian sky).

- Buildings and Structures: Use City Sample or Kitbash3D assets for cities, with static backdrops or skyboxes for distant skylines, detailing camera-visible interiors.

- Lighting and Atmospherics: Use Lumen for real-time lighting, emissive materials for glowing panels, and volumetric fog for depth, with placeholder lights to define form.

- Optimization: Use Nanite for high-poly meshes (cities, terrain) and Virtual Textures for large surfaces, prioritizing quality for cinematic rendering.

- Interactive Elements: Add Blueprint-triggered doors or Media Texture UI screens for realism, ensuring MetaHuman interactions.

- Set Dressing: Place props (chairs, cables) and decals (grime, scorch marks) in camera-framed areas for detail, including exotic plants for alien planets.

- Real-World Reference: Blend real designs (pipes, trusses) with futuristic elements (advanced doors) for credibility, referencing submarines or megacities.

- Testing: Test with MetaHumans and cameras to adjust scale, composition, and lighting, ensuring backgrounds enhance characters without distraction.

These steps create cinematic sci-fi environments that complement MetaHumans, focusing on visual coherence and narrative immersion.
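For the scale check in the block-out step, a quick sanity test can help before dressing a set. This sketch assumes Unreal's default of 1 unit = 1 cm; the opening names, heights, and the 20 cm headroom are made-up illustration values.

```python
UNREAL_UNITS_PER_CM = 1.0  # Unreal's default world scale: 1 unit = 1 cm


def tight_openings(openings_uu, character_height_cm, headroom_cm=20.0):
    """Return names of openings lower than the character height plus headroom."""
    required = (character_height_cm + headroom_cm) * UNREAL_UNITS_PER_CM
    return [name for name, height in openings_uu.items() if height < required]


if __name__ == "__main__":
    # Hypothetical block-out measurements, in Unreal units.
    blockout = {"airlock": 210.0, "vent": 90.0, "hangar": 600.0}
    print(tight_openings(blockout, character_height_cm=180.0))
```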

What lighting techniques work best for sci-fi scenes with Metahuman?

Lighting is pivotal for cinematic quality in sci-fi scenes with MetaHumans, enhancing mood and realism. UE5’s Lumen provides real-time global illumination, ideal for indoor scenes with bouncing light, like a spaceship’s metallic floor illuminating a character’s face. Ray-traced reflections add crispness to shiny surfaces, while Path Tracer offers physically accurate results for key shots.

- Leverage Lumen for Global Illumination: Lumen provides real-time global illumination for indoor scenes. A ceiling light bounces off surfaces, softly lighting MetaHumans. It’s enabled in project settings and works with key lights. Adjust emissive details for optimal light contribution.

- Augment with Ray Tracing or Path Tracing: Ray-traced reflections enhance shiny surfaces like visors. Combined with Lumen, it ensures crisp reflections. Path Tracer delivers physically correct lighting for hero shots. Use these for high-quality cinematic renders.

- Cinematic Lighting Principles: Use three-point lighting for close-ups: key (e.g., monitor glow), fill (ambient bounce), and rim (overhead glow). Environmental sources motivate lighting. This separates characters from backgrounds. It highlights MetaHumans’ features effectively.

- Color and Mood: Bold colors like red or blue convey sci-fi moods. Use colored rim lights for neon vibes. Balance strong colors with neutral fill to maintain realistic skin tones. This enhances narrative atmosphere.

- Volumetrics and Fog: Exponential Height Fog with volumetric fog creates light rays. Strong sources show beams in dusty or hazy scenes. Adjust density to avoid washing out visuals. This adds depth to sci-fi settings.

- Practical Light Sources: Place UE5 light objects at lamps or screens for realistic illumination. Use IES profiles for shaped light patterns. Attach spotlights to flashlights for dynamic effects. This grounds lighting in the environment.

- High Dynamic Range and Exposure: Disable auto-exposure for fixed, high-contrast shots. Manual exposure allows dark shadows and bright highlights. Keyframe exposure for effects like silhouetting. This suits sci-fi’s dramatic lighting.

- Reflections and Light on MetaHumans: Position lights for eye catchlights to bring faces to life. Ensure fill light reveals expressions. Soft lights prevent shadowed faces. This maintains performance visibility.

- Mix of Static and Dynamic Lighting: Use static lights for efficiency in non-moving scenes. Stationary lights offer high-quality shadows. Limit dynamic lights to key sources. This balances quality and performance.

- Lighting Shifts as Storytelling: Animate lights in Sequencer for narrative shifts, like dimming to red in battle mode. MetaHumans respond naturally to changes. This enhances storytelling. Maintain consistency within shots.

- Reference Cinematic Lighting: Study films like Star Trek or Blade Runner for lighting cues. Mimic multiple sources or neon backlighting. Epic’s cinematographer presets optimize MetaHuman lighting. This ensures professional results.

- Test in Engine and Iterate: Adjust lights in real-time for perfect highlights. Use Movie Render Queue for stills to compare setups. Real-time feedback speeds up refinements. This perfects the cinematic look.

These techniques combine UE5’s real-time features with cinematic principles, ensuring MetaHumans appear realistic and emotionally engaging in bold, stylized sci-fi lighting.
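When auto-exposure is disabled, it helps to reason about exposure in stops. The sketch below uses the standard photographic EV100 formula, EV100 = log2(N² / t) − log2(ISO / 100), where N is the f-stop and t is the shutter time in seconds. It is a standalone calculation, not a call into Unreal, and the sample camera values are arbitrary.

```python
import math


def ev100(f_stop, shutter_seconds, iso=100.0):
    """Exposure value normalized to ISO 100 for the given camera settings."""
    return math.log2(f_stop ** 2 / shutter_seconds) - math.log2(iso / 100.0)


def stops_between(ev_a, ev_b):
    """How many stops brighter the image exposed at ev_b is than at ev_a.

    A lower EV100 setting lets in more light, so the result is positive
    when ev_b is lower than ev_a.
    """
    return ev_a - ev_b


if __name__ == "__main__":
    day = ev100(4.0, 1 / 64, iso=100)
    night = ev100(4.0, 1 / 64, iso=400)
    print(day, night, stops_between(day, night))
```

Raising ISO from 100 to 400 at the same aperture and shutter lowers the EV100 setting by two stops, which is the kind of shift you might keyframe for a silhouette or blackout beat.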

How do I record dialogue and lip sync with Metahuman in UE5?

- Recording Dialogue Audio: First, record your dialogue with human voice actors (or yourself). This should be done in a quiet environment with a good microphone to ensure clarity. You might have a script reading session where actors perform the lines with the intended emotion. Save the audio files (preferably as uncompressed WAV for quality).

- Importing Audio into Unreal: Import your dialogue .wav files into the Content Browser in UE5. Each line can stay a Sound Wave asset or be wrapped in a Sound Cue. In Sequencer, add an Audio track and drop the file in at the desired time so the audio plays during your cinematic sequence.

- Lip Sync Methods: There are a few ways to lip-sync the MetaHuman to the audio:

- MetaHuman Animator (Facial capture): Record an actor’s face with an iPhone or head-mounted camera. MetaHuman Animator converts video into high-fidelity facial animation, capturing lips and expressions. It uses audio to drive tongue movements. Results are production-ready in minutes.

- Manual Keyframe or Pose Asset Animation: Animate the face rig manually in Sequencer with controls for jaw, lips, and tongue. Create pose assets for phonemes and blend them. This is time-consuming but precise. Control Rig simplifies the process.

- Third-party Lip Sync Tools: Plugins like Replica Studios generate lip sync from audio. These output animation curves for mouth movements. Evaluate for feasibility if capture isn’t possible. They save time but may need tweaks.

- Aligning Audio and Animation with Timecode: MetaHuman Animator and Live Link Face support timecode sync. Facial animation aligns with audio and body mocap via shared timelines. In Sequencer, place animation and audio tracks at the same timecode. This ensures perfect mouth-word alignment.

- Fine-Tuning the Lip Sync: After a first pass, check for mismatches in mouth movements. Adjust plosives (“B”, “P”) for closed lips and vowels (“EE”) for stretched shapes. Tweak timing or exaggerate shapes for clarity. Add head nods or blinks for realism.

- Scrub and Preview in Real-Time: Scrub the timeline with audio enabled to pinpoint issues. Zoom frame-by-frame to align keyframes with phonemes. Display audio waveforms in Sequencer for syllable matching. This ensures precise synchronization.

- Consider Dialog Context: Close-ups need perfect lip sync; wide shots can be less detailed. Focus effort on visible scenes. MetaHumans’ fidelity shines in close-ups. This optimizes animation workload.

- Voice Performance and MetaHuman Performance: Use video references of actors’ faces to guide animation. Capture unique mannerisms like smirks or frowns. MetaHumans’ rig supports micro-expressions. This adds character authenticity.

- Additional Facial Animation Layers: Add expressions beyond lip sync, like furrowed brows for anger. Use Control Rig to blend animations. This enhances emotional weight. MetaHumans support layered performances.

- Testing Playback with Sound: Play sequences at full speed to check audio-visual sync. Slight mismatches are jarring. Mouth movements should align or precede sound slightly. This ensures natural dialogue.

- Tools for Polish: Use Sequencer’s Curve Editor to smooth animation curves. Override eye movements for scene-specific focus. Adjust blinks or smiles for effect. This refines the final performance.

These steps ensure realistic lip sync, leveraging MetaHuman Animator for efficiency or manual methods for control, making dialogue scenes engaging and believable.
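Timecode alignment comes down to simple frame arithmetic. Here is a minimal sketch that converts non-drop-frame `HH:MM:SS:FF` timecode to a frame count and works out where a clip lands relative to the start of a sequence. It assumes an integer frame rate and non-drop-frame timecode; the function names are illustrative, not part of any Unreal API.

```python
def timecode_to_frames(timecode, fps):
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def clip_offset_frames(clip_start, sequence_start, fps):
    """Frame offset of a clip relative to the start of the sequence."""
    return timecode_to_frames(clip_start, fps) - timecode_to_frames(sequence_start, fps)


if __name__ == "__main__":
    # A take that starts 10 seconds and 12 frames into a sequence at 24 fps.
    print(clip_offset_frames("01:00:10:12", "01:00:00:00", fps=24))
```

If the audio, facial take, and body mocap all report the same offset, they can sit at the same position in the sequence.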

Can I use Metahuman Animator for realistic facial performances in sci-fi films?

MetaHuman Animator is ideal for realistic facial performances in sci-fi films, capturing an actor’s expressions with high fidelity in minutes.

- Overview: Introduced in UE 5.2, it uses iPhone (12+) video or head-mounted camera (HMC) rigs to generate facial animations, analyzing video and depth data for nuanced expressions, from jaw movements to subtle eye/lip twitches.

- Ease of Use: Accessible with an iPhone and PC, using the Live Link Face app’s TrueDepth sensor for depth data, or stereo HMC for higher detail, reducing the need for expensive mocap stages.

- Quality: Reproduces micro-expressions and slight movements accurately, delivering clean, editable animation data for dramatic sci-fi scenes like monologues, with smooth rig controls.

- Examples: Epic’s Blue Dot demo showcased emotional performances, and indie film The Last Wish used it for lifelike expressions, proving its capability for demanding acting.

- Workflow: Record actor’s face (optionally with body mocap), process video in MetaHuman Animator to create an animation asset, apply in Sequencer, with minimal manual tweaks needed due to audio-driven tongue animation.

- Real-Time Feedback: Live Link provides real-time MetaHuman facial movement during capture, allowing directors to adjust performances instantly for iterative refinement.

- Sci-Fi Use: Captures human performances for human-like characters or retargets to alien rigs, ensuring authentic emotion in close-up scenes like speeches.

- Limitations: Requires good lighting and clear video; facial accessories may interfere, and non-humanoid aliens need rig adjustments.

- Manual Tweaks: Animators can polish captured animations, adjusting smiles or blinks for effect, using editable curves for artistic enhancement.

MetaHuman Animator democratizes high-quality facial capture, enabling indie filmmakers to focus on performance direction.

How do I use Sequencer to direct a sci-fi movie with Metahuman in UE5?

Sequencer in Unreal Engine 5 acts as a virtual film studio for directing sci-fi movies with MetaHumans, combining animation, cinematography, and editing.

- Organization: Create a separate Level Sequence asset for each scene (e.g., IntroScene, BattleScene), using a Master Sequence to stitch sub-sequences or rendering them separately for post-editing.

- Adding Characters/Objects: Add MetaHumans to Sequencer tracks by dragging from the World Outliner, creating tracks for Transform and Animation.

- Animation:

- Premade Animations: Assign existing clips (e.g., walk) to Animation tracks, trimming for continuous performances.

- Control Rig: Use MetaHuman Control Rig for keyframing custom gestures, allowing precise in-engine adjustments.

- Live Input: Record mocap via Take Recorder with Live Link, saving takes as keyframes for selecting the best performance.

- Constraints: Animate constraints to attach props (e.g., gadgets) to hands using Constraint tracks or blending for dynamic interactions.

- Camera Work:

- Transform: Keyframe pans, tilts, or dollies for dynamic shots.

- Focal Length/Focus: Animate zooms or rack focus to shift between subjects for cinematic depth.

- Aperture: Use low f-stop for blurry backgrounds, animating focus for approaching characters.

- Editing/Timing: Rearrange and trim clips in Sequencer for pacing and narrative flow, editable in-engine or exported to video editors.

- Layering/Blending: Layer animation tracks (e.g., walking plus head turn) with weighted blending for nuanced performances.

- Visibility/Events: Animate visibility for effects like teleportation and use Event Tracks to trigger particle systems (e.g., explosions) for precise VFX timing.

- Managing Complexity: Use folders, clear track names, playback ranges, and markers to organize tracks and focus editing.

- Previewing: Play sequences in-editor for real-time review, lowering settings for smooth playback or using a new window for heavy scenes.

- Adjusting: Reflect lighting or costume changes instantly, running through sequences to ensure continuity.

- Master Sequencer (Optional): Arrange sub-sequences with cross-fades or transitions for final assembly.

- Rendering: Use Movie Render Queue to render sequences with cinematic settings for high-quality video output.

- Directing Tips: Use wide shots to establish scenes, close-ups for emotion, ensure prop continuity, experiment with duplicate sequences for angles, and frame in Cinematic Viewport.

Sequencer enables complex camera moves and effects for sophisticated sci-fi sequences.
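When choosing focal lengths for wide, medium, and close-up shots, it can help to see how focal length maps to field of view. The sketch below uses the standard pinhole relation FOV = 2·atan(sensor width / (2·focal length)). The 36 mm sensor width is an assumption standing in for a full-frame filmback; use whatever your Cine Camera is actually set to.

```python
import math


def horizontal_fov_degrees(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view for a lens and sensor width, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


if __name__ == "__main__":
    # Arbitrary sample lenses, from wide to telephoto.
    for focal_length in (18.0, 35.0, 50.0, 85.0):
        print(f"{focal_length:.0f} mm -> {horizontal_fov_degrees(focal_length):.1f} degrees")
```

Shorter lenses widen the view for establishing shots, while longer lenses narrow it for close-ups on a MetaHuman's face.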

Can Metahuman characters interact with sci-fi VFX like holograms or lasers?

MetaHumans can interact with sci-fi VFX like holograms or lasers in UE5’s integrated environment using Niagara, Blueprints, and Sequencer.

- VFX as Scene Actors: Implement holograms or lasers as Niagara particle systems or Blueprint-driven meshes, controlled via Sequencer or Blueprints for precise timing.

- Timing/Sync: Animate MetaHuman actions (e.g., hand press) in Sequencer to trigger VFX (e.g., hologram activation) at exact frames, ensuring cause-and-effect alignment.

- Physical Interaction: Use collision systems for VFX like laser projectiles to trigger MetaHuman reactions (e.g., hit animations, ragdoll for explosions), with manual timing for cinematics or physics for realism.

- Hologram Example: Animate hand motions to “touch” hologram buttons, syncing with VFX changes like ripples or particle bursts for convincing activation.

- Laser Shootout Example: Animate trigger pulls to emit Niagara laser beams with muzzle flash and impact effects (e.g., sparks), syncing with target reaction animations.

- Blueprints for Complexity: Use Blueprints with Sequencer to trigger multiple VFX based on MetaHuman actions, simplifying complex cinematic sequences.

- Environmental VFX: Integrate ambient effects like smoke or rain with material tweaks (e.g., wet surfaces) and MetaHuman reactions (e.g., stumbling against force fields).

- Post-Process Effects: Apply shaders for holographic MetaHumans with transparency and scanlines, animating parameters in Sequencer for interaction effects.

- VFX Sequencing: Control Niagara emitters in Sequencer to animate hologram stages (e.g., off, booting), adjusting spawn rates or materials for precise timing.

- Compositing (If Needed): Use in-engine VFX for consistency, with placeholders for external compositing in After Effects, matching lighting/perspective for 2D effects like lens flares.

Careful planning and testing ensure seamless, cinematic VFX interactions with MetaHumans.
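The hologram stages described under VFX sequencing behave like stepped keyframes: a state holds until the next key. This is a plain-Python sketch of that idea, useful for planning on paper before building the Sequencer tracks. The frame numbers and the "active" stage are invented for illustration, and nothing here calls Niagara or Sequencer.

```python
from bisect import bisect_right

# Hypothetical stepped keys: (frame, state), sorted by frame.
HOLOGRAM_KEYS = [(0, "off"), (48, "booting"), (72, "active")]


def state_at(frame, keys=HOLOGRAM_KEYS):
    """Return the state held at a frame, using the most recent key at or before it."""
    key_frames = [key_frame for key_frame, _ in keys]
    index = bisect_right(key_frames, frame) - 1
    if index < 0:
        raise ValueError(f"frame {frame} is before the first key")
    return keys[index][1]


if __name__ == "__main__":
    # If the hand press lands on frame 48, the hologram starts booting there.
    for frame in (0, 47, 48, 100):
        print(frame, state_at(frame))
```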

How do I render cinematic-quality visuals for a Metahuman sci-fi film?

Steps for high-quality rendering in UE5:

- Movie Render Queue (MRQ): Enable MRQ plugin, add sequences via Sequencer’s Render Movie option for high-fidelity output.

- Image Sequence: Output PNG or EXR sequences for lossless quality, enabling frame fixes and HDR for post-processing.

- Resolution/Aspect Ratio: Use 4K (3840×2160) or widescreen (e.g., 3840×1634), matching Cine Camera’s aspect ratio for detailed visuals.

- Anti-Aliasing/Temporal Samples: Set Temporal Sample Count to 8-16 or use Path Tracing with high samples for smooth edges, enabling Motion Blur and Depth of Field.

- High-Quality Features: Enable Cinematic Quality, disable Texture Streaming, and use High Resolution or extra Spatial Samples for noise-free Lumen/ray-traced reflections.

- Render Passes (Optional): Capture depth or ambient occlusion passes for compositing, ensuring motion blur/bloom render correctly with warm-up frames.

- Sound: Render visuals separately, syncing audio in a video editor, or use OBS for preview renders with sound, reserving MRQ for final visuals.

- Performance vs. Quality: Adjust sample counts or resolution for slow renders, splitting frame ranges across machines for long sequences.

- Color Output: Use 16-bit PNG/EXR in linear space for grading flexibility or 8-bit PNG in sRGB for simpler workflows, preserving HDR details.

- Verify Frames: Check for glitches (e.g., hair, lighting), re-rendering specific frames using image sequences.

- Compile Video: Import sequences into a video editor, add audio, apply grading, and export as MP4/ProRes for distribution.

- Output Gamma/Color: Use Unreal’s filmic tonemapper or apply LUTs in post for cinematic looks (e.g., teal-orange), rendering neutral images for grading flexibility.

Unreal’s real-time rendering with MRQ’s super sampling and path tracing delivers near-offline-render quality for filmic visuals.
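Two of the steps above involve a little arithmetic: picking a widescreen height for a given aspect ratio, and splitting a frame range across machines. The following is a small planning sketch in plain Python. The helper names are illustrative, the 2.35:1 ratio is an assumption, and rounding the height to an even pixel count is a common convention rather than an Unreal requirement.

```python
def widescreen_height(width, aspect_ratio):
    """Height for a given width and aspect ratio, rounded to an even pixel count."""
    return round(width / aspect_ratio / 2) * 2


def split_frame_range(first_frame, last_frame, machines):
    """Split an inclusive frame range into contiguous chunks, one per machine."""
    total = last_frame - first_frame + 1
    if machines < 1 or total < machines:
        raise ValueError("need at least one frame per machine")
    base, extra = divmod(total, machines)
    chunks, start = [], first_frame
    for index in range(machines):
        # The first `extra` machines take one additional frame each.
        length = base + (1 if index < extra else 0)
        chunks.append((start, start + length - 1))
        start += length
    return chunks


if __name__ == "__main__":
    print(widescreen_height(3840, 2.35))
    print(split_frame_range(0, 3599, 4))
```

Because the output is an image sequence, each machine can render its own chunk and the frames can be merged into one folder afterward.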

What are the best practices for storytelling using Metahuman in sci-fi projects?

Key practices for compelling sci-fi storytelling with MetaHumans:

- Character and Script: Develop a strong script with clear motivations, testing narrative flow via table reads or story reels before MetaHuman production.

- Visual Storytelling (Pre-vis): Use Unreal to block out scenes, experimenting with camera angles and lighting to amplify narrative moments like sci-fi silhouettes.

- MetaHuman Emotions: Animate subtle facial expressions for show-don’t-tell moments (e.g., sorrowful looks), making sci-fi stakes personal and relatable.

- Feedback Iteration: Show early cuts to viewers for clarity/pacing feedback, using Unreal’s real-time editing to adjust shots or sequences.

- Purposeful VFX: Ensure VFX like holograms advance the plot or reveal character traits, avoiding flashy distractions from emotional arcs.

- Continuity/World-Building: Maintain consistent MetaHuman designs and use dialogue to reference off-screen events, reinforcing the sci-fi world’s depth.

- Avoid Uncanny Valley: Use realistic animations, depth of field, and micro-movements (e.g., breathing) to ensure natural, lifelike performances.

- Practical Techniques: Apply dynamic blocking to convey power dynamics or emotional distance, using environments for subtext in MetaHuman interactions.

- Strategic Sound: Plan sound design to enhance dramatic moments, syncing MetaHuman reactions with music swells or silences for emotional impact.

- Resource Management: Focus on key scenes, implying larger events off-screen with dialogue/reactions to manage scope for small teams.

- Learn from Others: Analyze indie projects like Firmware for pacing/visual insights, studying their making-of content for practical storytelling approaches.

Focus on a resonant narrative prioritizing character and emotional engagement, using MetaHumans as digital actors to enhance storytelling.

Are there real sci-fi films or shorts made using Metahuman?

Yes, there are already several real-world sci-fi short films and projects that have been created using MetaHumans in Unreal Engine. These serve as excellent examples of what’s possible and often provide inspiration and lessons for new creators. Here are a few notable ones:

- “Firmware” (2024) – Sci-Fi Short Film on DUST: A 20-minute solo project by Calvin Romeyn, featuring MetaHumans and mocap, about a woman and a droid, with cinematic lighting and emotional depth, premiered on DUST. Firmware showcases a solo creator’s ability to craft a compelling narrative using MetaHumans. Its high-quality visuals and mocap-driven performances highlight Unreal’s cinematic potential. The film’s success on DUST, with strong audience reception, proves MetaHumans can carry a story. Romeyn’s course on its production offers valuable workflow insights.

- “The Well” (2021) – Animated Horror/Sci-Fi Short by Treehouse Digital: A stylized horror/sci-fi short using MetaHumans, created in Unreal by a small studio, showing kids in a spooky tale with real-time rendering. The Well demonstrates MetaHumans in a stylized, non-realistic context, blending horror and sci-fi. Treehouse Digital’s shift to CG during the pandemic highlights Unreal’s accessibility. The short’s real-time rendering showcases production efficiency. It proves MetaHumans suit diverse narrative styles.

- “Mandatory” (2024) – Sci-fi Animated Short: A 2.5-minute indie short by Grossimatte, created in three months, focusing on a remote worker’s secret, using MetaHumans with Lumen for character-driven storytelling. Mandatory’s compact narrative emphasizes MetaHuman performance in a short format. Its quick production shows solo creators can achieve polish. Lumen and ray tracing enhance its visual quality. Shared on Unreal forums, it inspires accessible sci-fi storytelling.

- “The Last Wish” (2024, in progress): A 15-minute cinematic short using MetaHumans, Move.AI, and marketplace assets, noted as award-winning, showcasing team-driven ambition in Unreal. The Last Wish exemplifies a team leveraging MetaHumans for high-quality output. Move.AI and MetaHuman Animator streamline mocap and facial animation. Its festival recognition suggests strong execution. The project highlights Unreal’s role in ambitious indie filmmaking.

- “CARGO” (in production): An upcoming short inspired by Love, Death & Robots, using customized MetaHumans for alien characters, showing the tool’s flexibility for non-human designs. CARGO’s use of modified MetaHumans for aliens expands creative possibilities. Its high-profile inspiration indicates ambitious storytelling goals. The project underscores MetaHumans as a versatile base for unique designs. Behind-the-scenes insights reveal advanced customization techniques.

- Epic’s Tech Demos: Demos like the MetaHuman Creator sample, The Matrix Awakens, and Blue Dot showcase MetaHumans in scripted sci-fi scenes, proving cinematic fidelity. Epic’s demos, though not full films, validate MetaHumans in narrative contexts. The Matrix Awakens features MetaHumans in dynamic city scenes. Blue Dot’s emotive monologue highlights facial animation quality. These tech showcases inspire full-fledged sci-fi productions.

These projects, from solo shorts like Firmware to team efforts like The Last Wish, demonstrate MetaHumans’ viability in sci-fi filmmaking, with positive audience reception and community-shared insights guiding new creators.

How do I mix practical VFX and Metahuman animation in a sci-fi movie?

Mixing practical effects or live-action footage with MetaHuman animation involves compositing Unreal-rendered MetaHuman scenes with real-world elements, similar to traditional VFX workflows. Key approaches include:

- Mix Method: Composite CG MetaHumans into live-action plates, use in-camera VFX with LED screens for real-time integration, or blend practical elements like explosions into Unreal renders.

- Shooting Live Plates: Use static or tracked cameras, match lighting with HDRI captures, and employ greenscreen for layering MetaHumans, ensuring consistent resolution and motion blur.

- Rendering for Compositing: Output MetaHuman renders with alpha channels, shadow passes, or lighting layers to facilitate integration with live footage in post-production.

- Integrating Practical Elements: Overlay practical effects like explosion stock footage onto Unreal renders, matching lighting and color grading, or light MetaHumans in-engine for interactive effects.

- Mixing Live Actors with MetaHumans: Animate MetaHumans to sync with live actors’ actions, using 3D tracking for integration and avoiding complex physical contact to simplify compositing.

- Composure/OCIO Pipeline: Use Unreal’s Composure plugin for real-time compositing of greenscreen actor feeds with MetaHumans, though offline compositing is simpler for films.

- Color Grading: Apply a final color grade or film LUT to unify live and CG elements, masking color or black level differences for a cohesive sci-fi look.

- Example: Composite a MetaHuman into a filmed miniature cockpit using transparent background renders, tracked camera moves, and post-added reflections for realism.

- Real-World Usage: MetaHumans are royalty-free for films per Epic’s EULA, as shown by ActionVFX’s demos integrating them with practical backplates, proving VFX compatibility.

This hybrid approach combines practical effects’ tactile quality with MetaHuman flexibility, requiring careful matching of lighting and perspective for seamless sci-fi visuals.
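Most of the approaches above come down to the standard "over" operation, which layers a render with an alpha channel on top of a plate. The sketch below shows that operation for a single pixel in plain Python. It assumes linear-light color values in the 0 to 1 range, and the `premultiplied` flag is an assumption to verify against your own render output; the function name `over` is illustrative and not part of any Unreal or compositing-package API.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB, premultiplied: bool = True) -> RGB:
    """Composite one foreground pixel over one background pixel.

    Colors are linear-light floats in [0, 1]. When premultiplied is True,
    the foreground color is assumed to be already multiplied by alpha.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if premultiplied:
        # out = fg + bg * (1 - alpha)
        return tuple(f + b * (1.0 - alpha) for f, b in zip(fg, bg))
    # Straight (unassociated) alpha: out = fg * alpha + bg * (1 - alpha)
    return tuple(f * alpha + b * (1.0 - alpha) for f, b in zip(fg, bg))


# A half-transparent red MetaHuman edge pixel over a blue plate pixel.
edge = over((0.5, 0.0, 0.0), 0.5, (0.0, 0.0, 1.0))
```

Applying the wrong variant typically shows up as dark or bright fringes around hair and soft edges, so it is worth testing on one frame before compositing a whole shot.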

What are the limitations of using Metahuman in sci-fi filmmaking?

While MetaHumans are a powerful tool, it’s important to be aware of their limitations so you can plan around them in your sci-fi project:

- Realism vs. Stylization: MetaHumans are designed for realistic humans, limiting their use for non-human aliens or stylized characters, requiring custom models for exaggerated features. The MetaHuman Creator restricts designs to plausible human ranges, unsuitable for cartoonish or alien forms. Sci-fi projects needing unique creatures demand external modeling and rigging. Some artists modify MetaHumans in DCC tools, but this requires expertise. Custom solutions bridge this gap for diverse character needs.

- Limited Out-of-the-Box Customization: The Creator offers finite face and body blends, preventing exact real-person recreations or unique skin textures without exporting to tools like Maya. Predefined blends limit specific likenesses, intentional to avoid copying real faces. Skin texture options are preset, requiring external edits for variety. Exporting to DCC tools enables material tweaks, but adds workflow complexity. This constraint impacts highly specific character designs.

- Clothing Variety: Limited built-in clothing options necessitate custom sci-fi outfits like space suits, requiring modeling skills or marketplace assets to meet genre needs. The Creator’s casual clothing doesn’t suit sci-fi’s futuristic aesthetics. Custom outfits demand rigging and modeling expertise. Marketplace assets offer solutions but increase costs. Planning for this ensures MetaHumans fit the sci-fi narrative visually.

- Hair and Accessories: Restricted hairstyle options and lack of sci-fi accessories like helmets require custom grooms or manual additions, increasing asset creation workload. Exotic sci-fi hairstyles or cybernetic implants aren’t included, needing external tools like Blender. Custom grooms add production time and complexity. Marketplace assets can supplement but require integration. This limitation demands proactive asset planning.

- Performance and Resources: High-poly MetaHumans with 8K textures strain GPUs, slowing scenes with multiple characters, requiring LOD adjustments for large casts. Resource-heavy MetaHumans challenge real-time performance, risking crashes. LOD syncing helps but demands manual optimization. Background characters need lower LODs to manage memory. This impacts scene complexity and production efficiency.

- Facial Animation Nuances: MetaHuman Animator captures faces well but may need tweaks for subtle expressions, with limits on non-human or exaggerated animations. Subtle smirks or unique expressions require manual refinement to avoid jitters. The rig’s realistic anatomy restricts cartoony exaggerations. Eye animations need care to prevent a “dead-eye” look. These adjustments ensure natural, engaging performances.

- Uncanny Valley and Repetition: Similar source data risks generic-looking MetaHumans without customization, and repeated animations can appear unnatural, needing varied behaviors. Insufficient tweaking makes characters feel repetitive, triggering uncanny valley. Custom scars or hair add uniqueness but take effort. Varied animations prevent robotic uniformity. This demands careful design to maintain character distinctiveness.

- Licensing/Engine Constraints: MetaHumans are restricted to Unreal Engine, preventing use in other renderers, and Creator requires an internet connection, limiting offline work. The EULA ties MetaHumans to Unreal, blocking Blender or Unity use. Internet dependency for Creator access can hinder remote workflows. These constraints lock projects into Unreal’s ecosystem. Workarounds are limited, requiring pipeline alignment.

- Learning Curve and Workflow: Effective MetaHuman use requires Unreal, Control Rig, and DCC tool knowledge, posing a learning barrier for beginners despite Epic’s resources. Unreal’s complexity demands time to master for MetaHuman integration. Control Rig and animation workflows add technical layers. Epic’s tutorials help, but proficiency isn’t instant. This learning investment is necessary for polished results.

- Heavy Scenes and Lighting: Large sci-fi scenes with reflections and multiple MetaHumans slow performance, needing optimization like disabling hair grooms during editing. Reflective surfaces and hair grooms tank frame rates in complex scenes. Manual optimization, like LOD toggling, mitigates slowdowns. Editing with simplified assets preserves workflow speed. This requires technical management alongside creative direction.

- No Alien Facial Rig (Yet): Non-human facial structures, like snouts, aren’t supported, requiring custom rigs for alien faces while using MetaHuman body skeletons. MetaHuman’s human-centric rig limits alien facial animations. Custom rigs in external tools address this but add complexity. Body skeletons remain compatible for hybrid use. Sci-fi creature designs demand significant rigging investment.

- Updates and Compatibility: Engine updates may alter shaders or rigs, risking look inconsistencies, so stick to one Unreal version during production to maintain stability. Updates can improve features but disrupt ongoing projects. Shader or rig changes may affect character appearance. Testing upgrades on project copies prevents issues. Version consistency ensures visual continuity throughout production.

Awareness of these limitations allows proactive planning, like budgeting for custom assets or optimizing scenes, while Epic’s ongoing updates and community workarounds mitigate many challenges.
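One of the limitations above, staying on a single Unreal version during production, is easy to check automatically. A `.uproject` file is JSON and normally records the engine it was last opened with in its `EngineAssociation` field. The sketch below compares that field with a pinned version; the helper name `engine_version_matches` and the sample project text are hypothetical, and source-built engines may store an identifier there instead of a version number, in which case this simple comparison would not apply.

```python
import json


def engine_version_matches(uproject_text: str, pinned: str) -> bool:
    """Return True if the project's EngineAssociation equals the pinned version."""
    project = json.loads(uproject_text)
    # A missing field is treated as a mismatch rather than an error.
    return project.get("EngineAssociation", "") == pinned


# Hypothetical, trimmed-down .uproject contents for illustration.
sample = '{"FileVersion": 3, "EngineAssociation": "5.3", "Modules": []}'

if not engine_version_matches(sample, "5.3"):
    print("Warning: project was saved with a different engine version")
```

Running a check like this before each work session, or as a source-control hook, would flag an accidental upgrade before shaders or rigs are resaved.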

Where can I find tutorials on creating sci-fi movies with Metahuman and Unreal Engine?

There is a wealth of resources available to learn how to create sci-fi movies using MetaHumans and Unreal Engine 5. Here are some of the best places to find tutorials, courses, and community insights:

- Official Unreal Engine Learning Portal: Epic’s free Unreal Online Learning offers courses on MetaHumans, Sequencer, and cinematic techniques, with documentation and forums providing detailed guides. Courses like “Cinematic Production Techniques” teach Sequencer and lighting for storytelling. “Animating MetaHumans” covers control rig workflows. Epic’s documentation details MetaHuman setup and tools. Forums offer community-driven solutions for specific challenges.

- Epic’s YouTube Channel (Inside Unreal): Inside Unreal livestreams on YouTube cover MetaHuman workflows, cinematic lighting, and animation, featuring expert demos and Q&A sessions. Sessions like “Cinematic Lighting for MetaHumans” provide practical setups. Experts demonstrate real-time techniques with MetaHumans. Q&A segments address common issues. These recordings are accessible for self-paced learning.

- YouTube Tutorials by Creators: Channels like JSFilmz, William Faucher, and UE5 Pros offer tutorials on MetaHuman animation, Movie Render Queue, and sci-fi cinematic techniques in UE5. JSFilmz covers Sequencer-based MetaHuman animation workflows. William Faucher details MRQ settings for film quality. UE5 Pros and Unreal Sensei tackle specific sci-fi VFX. A video like “How to make a movie with Metahumans” offers a quickstart guide.

- Written Tutorials and Blogs: Sites like 80.lv and ActionVFX provide breakdowns and how-tos, such as CARGO’s production insights or MetaHuman VFX integration guides. 80.lv’s interviews, like CARGO’s, reveal professional workflows. ActionVFX’s “Getting Started with MetaHuman” suits VFX-focused sci-fi. YelzKizi.org offers niche guides, like PixelHair integration. These resources provide actionable steps for filmmakers.

- Community Forums and Reddit: Unreal Engine forums and r/unrealengine subreddits feature user tips, project showcases, and AMAs, like a 10-minute MetaHuman short’s workflow discussion. Forums host MetaHuman-specific threads for troubleshooting. Reddit’s r/unrealengine5 shares short film breakdowns. AMAs from creators offer direct insights. These platforms foster peer learning and problem-solving.

- Udemy and Online Courses: Udemy’s “Make Short Films in Unreal Engine 5 with Metahumans” course covers character design and animation, with CGMA and FXPHD offering related virtual production training. Udemy’s structured course teaches MetaHuman Animator and Sequencer. CGMA’s virtual production courses may include MetaHuman techniques. FXPHD explores Unreal’s cinematic applications. These paid options provide comprehensive, guided learning.

- Virtual Production Communities: Virtual Production and Unreal Engine Discord channels connect users with professionals, offering real-time advice and tutorial links for MetaHuman projects. Discord’s Virtual Production channels host industry pros sharing tips. Unreal Engine Discord includes MetaHuman-focused discussions. Users can ask specific questions for quick responses. These communities bridge beginners and experts.

- Example Project Files: Epic’s MetaHuman sample, Matrix City Sample, and DoubleJump Academy’s Firmware course provide project files to study MetaHuman cinematic setups. Epic’s sample projects demonstrate MetaHuman animation and crowds. Matrix City shows MetaHumans in large scenes. Firmware’s course includes production breakdowns. These files offer hands-on learning opportunities.

- Documentation for Tools: Move.ai, Rokoko, and MetaHuman Animator documentation detail integration with UE5, providing step-by-step setup for mocap and facial animation. Move.ai’s docs guide mocap workflows for MetaHumans. Rokoko explains suit integration with Unreal. MetaHuman Animator’s Epic documentation is essential for facial capture. These resources ensure proper tool setup for sci-fi films.

Start with a small practice project, like a 30-second scene, to apply tutorial lessons, using these resources to troubleshoot and refine your sci-fi filmmaking skills.

FAQ Questions and Answers

- Do I need a high-end computer to make a MetaHuman sci-fi film?

A powerful PC with a modern GPU (RTX 30/40-series), 32GB+ RAM, and multi-core CPU is ideal for Unreal Engine 5 with MetaHumans and Lumen. Mid-range PCs can work with lower settings during editing, ramping up for final renders. High-end setups ensure smooth real-time workflows with multiple MetaHumans and effects. Performance optimization is key for complex scenes.

- Is MetaHuman completely free to use for movies?

MetaHuman Creator and MetaHumans are free within Unreal Engine, with no royalties for films under the Unreal license. Crediting Epic is optional. Use MetaHumans in UE or rendered output, but don’t export raw assets to other engines or sell models. This supports cost-free film production.

- Can I use other software like Maya or Blender with MetaHumans?

MetaHumans can be exported to Maya via Quixel Bridge for custom edits or animation. Blender lacks official support, but community workflows enable FBX exports. Animate in Maya/Blender and return to UE for rendering, where MetaHuman shaders work best. External DCCs aid customization, but UE is central.

- How long does it take to create a short film with MetaHumans?

A 1-2 minute short may take weeks for a solo creator using existing assets. A polished 2.5-minute film took 3 months part-time, while a 10-minute short took 5 months. Mocap and asset reuse speed production; new users face a learning curve. Unreal’s real-time rendering generally shortens iteration compared with traditional offline CG pipelines.

- Can I mix real footage or actors with MetaHuman characters?

Real actors can be composited with MetaHumans using greenscreen, matching lighting and camera tracking. Animate MetaHumans to sync with live footage, using post-production tools like After Effects or Unreal’s Composure for real-time mixing. Careful planning ensures seamless CG-real integration. Tests refine the composite’s believability.

- How many MetaHumans can I have in one scene?

Multiple MetaHumans are possible, but 5-10 high-quality ones strain performance, depending on hardware. Use lower LODs or proxy characters for crowds. Composite layers or optimize hair/streaming for heavy scenes. Reserve high-detail MetaHumans for close-ups, using simpler models for distant figures.

- Do I need motion capture to animate MetaHumans?

Motion capture isn’t required; hand animation via Sequencer or keyframes works. Mocap (e.g., Rokoko, Move.ai, or iPhone with MetaHuman Animator) enhances realism and speed. Without mocap, preset animations or manual keyframing suffice. Mocap is a time-saving, realism-boosting option.

- Can MetaHumans be aliens or non-human characters?

MetaHumans are human-focused, unsuitable for non-human anatomy like four arms. Modify in external software, sculpting alien features and using Mesh to MetaHuman for rigging. Create separate alien rigs or swap heads/textures for humanoid aliens. Advanced skills are needed for non-human designs.

- How can I make MetaHumans more unique and avoid them looking similar?

Customize extensively with facial sliders, hairstyles, eye/skin colors, and accessories like scars or glasses. Vary body types, postures, and clothing. Use Mesh to MetaHuman for unique scans. Treat character design like casting and styling to ensure distinct, diverse appearances.

- Should I use Unreal Engine 5 instead of traditional 3D software for my sci-fi film?

Unreal Engine 5 is ideal for small teams, offering real-time feedback, integrated tools, and MetaHuman support. It rivals traditional CGI (Maya/Blender) for indie films, with Lumen and fast iteration. Traditional pipelines may edge out for hyper-realism but require more resources. UE5 suits efficient, cinematic sci-fi production.

With these FAQs covered, we’ve addressed a broad range of concerns, from technical to creative, that come up when making a sci-fi movie with MetaHumans in Unreal Engine 5.
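The crowd advice in the FAQ (full-detail MetaHumans for close-ups, lower LODs for distant figures) can be planned per shot with a small lookup. The sketch below maps camera distance to a MetaHuman LOD index from 0 (highest detail) to 7 (lowest). The distance thresholds are hypothetical placeholders to tune per project, and the resulting index still has to be applied in the engine, which is not shown here.

```python
from bisect import bisect_right

# Hypothetical camera distances (meters) at which to step down one LOD.
# Seven thresholds give LOD indices 0 through 7.
LOD_THRESHOLDS_M = [2.0, 4.0, 8.0, 12.0, 18.0, 25.0, 40.0]


def pick_lod(distance_m: float) -> int:
    """Return a LOD index for a character at the given camera distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # Count how many thresholds the character has reached or passed.
    return bisect_right(LOD_THRESHOLDS_M, distance_m)


# Example shot: one close-up hero, one mid-ground character, one distant extra.
shot = {"hero": 1.5, "copilot": 5.0, "extra": 60.0}
plan = {name: pick_lod(dist) for name, dist in shot.items()}
```

A table like `plan` makes it easier to see, before rendering, which characters in a shot actually need full-detail hair and textures.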

Conclusion

Using MetaHumans in Unreal Engine 5, creators can craft photorealistic sci-fi films, blending animated or hybrid live-action narratives with real-time tools. This guide details the workflow: story planning, character setup, environment creation, lighting, animation via MetaHuman Animator and Sequencer, and high-quality rendering. It addresses mixing live footage, narrative focus, and cites indie projects like Firmware and The Well as proof of solo or small-team success. MetaHumans offer cost-effective, expressive digital actors, while Unreal’s real-time features enable rapid iteration, freeing creators to explore bold stories and worlds. This empowers indie developers, filmmakers, and 3D artists to produce cinematic content, blurring the line between studio and independent capabilities. The growing Unreal community and resources further accelerate this storytelling revolution.

A few key takeaways:

- Plan meticulously: A strong script and clear visual plan (storyboards/pre-vis) will guide your use of MetaHuman and Unreal’s tools effectively, preventing tech overwhelm.

- Embrace the technology: Utilize features like Lumen GI, Control Rig, and Movie Render Queue to their fullest. They are your allies in achieving cinematic quality. At the same time, be mindful of limitations (realism range, performance) and creatively work around them (custom assets, LOD management).

- Iterate creatively: Unreal’s real-time nature lets you experiment with camera angles, lighting moods, and even edit sequences on the fly. Use that to refine your storytelling – something traditionally very costly to change in CG.

- Leverage community and resources: You’re not alone. There’s a growing community of Unreal filmmakers. Tutorials, forums, and shared assets can accelerate your process. Don’t hesitate to learn from others’ experiences (and our FAQ answers compiled some of that communal wisdom).

Now, armed with knowledge and inspiration, it’s time to bring your sci-fi story to life. Lights, camera, Unreal! Good luck on your filmmaking journey – we can’t wait to see the worlds and characters you create.

Sources and Citation

- Epic Games – MetaHuman documentation and blog: Provided technical details on MetaHuman capabilities and MetaHuman Animator, and insights from Epic’s own short film experiments (Epic Developer Community – MetaHuman Documentation; Unreal Engine – MetaHuman Creator Sneak Peek).

- ActionVFX Blog: “Why VFX Will Change Forever with MetaHuman Creator” – Background on MetaHuman’s impact and features (ActionVFX – Why VFX Will Change Forever With MetaHuman Creator).

- Unreal Engine Forums – User posts and moderator replies: e.g., grossimatte’s Mandatory short film post gave a creator’s perspective on workflow and lighting (Lumen + RT); The Last Wish project post highlighted the use of Move AI and MetaHuman Animator in an indie production (Unreal Engine Forums – Character & Animation).

- 80.lv Interview with Marcelo Vaz – Creating Realistic Alien for Short Film CARGO: Discussed customizing MetaHumans for an alien character and general production notes (80.lv – Creating Realistic Alien for Short Film CARGO).

- YelzKizi Tutorials – Guides on using PixelHair with MetaHumans, exporting MetaHumans to Maya/Blender, etc., which informed our sections on hair and cross-software workflows (Yelzkizi – Modifying MetaHumans: An Easy 3D Comprehensive Guide; e.g., MetaHuman Animation Tutorial).

- Reddit (r/unrealengine) – Community experiences like the “5 months for 10 min short film using MetaHumans” post provided anecdotal evidence of production time and feasibility (Reddit – r/unrealengine).

- Unreal Official Documentation – Sequencer and cinematic guides that describe using the tool for animation and camera work, as well as technical guides on Lumen, performance, etc., to support our recommendations (Epic Developer Community – Sequencer Overview; Lumen Technical Documentation).

- Unreal Engine Spotlight – “MetaHumans star in Treehouse Digital’s The Well”: Gave context on a fully CG short film made with Unreal (Unreal Engine – MetaHumans Star in Treehouse Digital’s The Well).

- Forum Q&A – Licensing clarification (MetaHumans for VFX production) indicating it’s allowed and intended for use in film (Epic Games – MetaHuman License FAQ).
