Modern creators are witnessing a surge in AI-powered visual effects (VFX) tools. Wonder Dynamics’ Wonder Studio platform introduced an AI solution that automates animating and compositing CG characters into live-action scenes, but it is not the only player in this space. This guide covers the best Wonder Dynamics alternatives, from motion capture solutions to AI animation tools, and compares their features, usability, pricing, and integrations. It also addresses how these platforms cater to indie filmmakers, their learning curves, and what the community is saying about them.

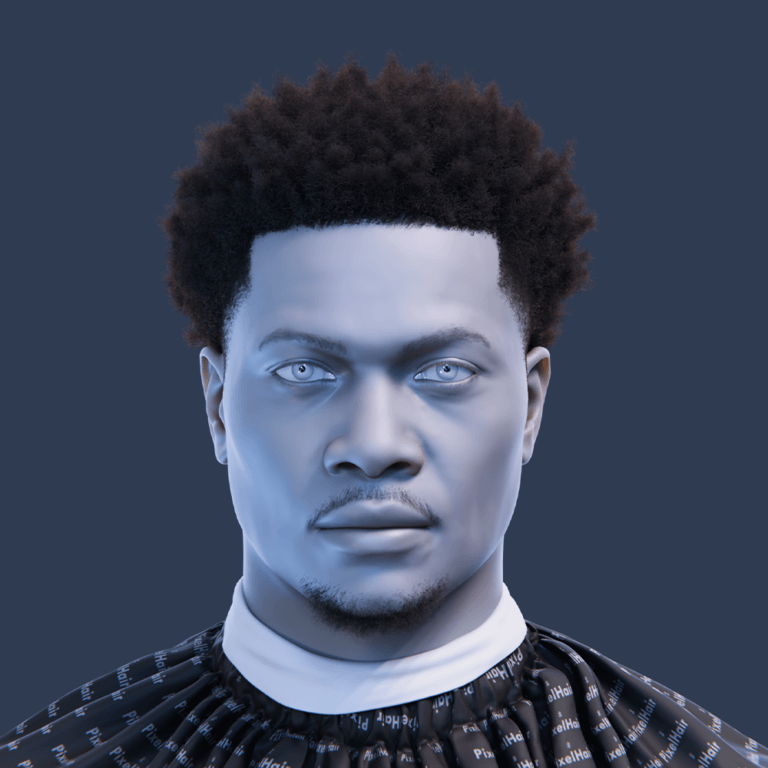

The dashboard of Wonder Studio (Wonder Dynamics) provides a user-friendly web interface with templates and character assets. Competing AI VFX platforms offer similarly accessible interfaces via the web, lowering the barrier for creators.

What are the best alternatives to Wonder Dynamics for AI-driven VFX?

Wonder Studio by Wonder Dynamics automates CG character replacement in live-action footage, but several AI-driven alternatives offer unique VFX and animation solutions. These tools vary in focus, from video editing to motion capture, catering to filmmakers, game developers, and creators seeking accessible VFX workflows.

Below are the top five alternatives, each leveraging AI to streamline animation and VFX processes:

- Runway ML: A versatile AI suite for video editing, offering generative video and background removal. It excels in rotoscoping and effects for post-production. Complements VFX workflows but lacks 3D character replacement. Ideal for editors needing flexible AI tools.

- DeepMotion (Animate 3D): Converts 2D video into 3D skeletal animations for realistic movement. Outputs data for games or films, requiring manual scene integration. Suits developers needing flexible mocap solutions. Affordable with a free trial for indie creators.

- Kinetix: AI-driven 3D avatar animations from video, with Unity/Unreal SDKs. Focuses on game development and interactive media. Automates rigging for quick emotes. Best for real-time animation in games or metaverse projects.

- Move.ai: Markerless motion capture using multiple cameras for high-fidelity data. Ideal for VFX and game pipelines needing animation libraries. Offers accuracy for complex motions. Requires more setup than Wonder Studio’s single-camera approach.

- Plask: Web-based motion capture and animation editing via webcam or video. Accessible for indie creators and developers with an online editor. Exports to game engines for versatile use. Simplifies animation with minimal hardware needs.

Other tools like Radical, Cascadeur, Mixamo, and Krikey AI also offer AI-assisted animation, but the five listed align closely with Wonder Studio’s accessibility goals. These alternatives empower creators to produce high-quality VFX efficiently, often at lower costs or with flexible workflows.

How does Runway ML compare to Wonder Dynamics in terms of features and usability?

Runway ML and Wonder Studio approach AI in video production differently, with Runway being a general AI toolkit and Wonder Studio specializing in 3D character animation and compositing. Here are the key comparisons:

- Features: Runway ML offers AI tools like Green Screen, text-to-video, and color grading for rotoscoping and effects, complementing post-production. Wonder Studio automates 3D character integration, tracking actors for motion, lighting, and facial expressions, focusing on VFX shots. Runway lacks 3D animation but supports generative video. Wonder Studio excels in CG character replacement.

- Usability: Runway’s web-based interface is simple, with drag-and-drop AI effects like Green Screen requiring minimal skills. Wonder Studio’s clear, automated interface allows non-experts to upload footage and select characters for processing. Both prioritize ease of use for non-VFX artists. Runway suits editors, while Wonder Studio caters to VFX workflows.

- Output & Workflow: Runway outputs video files or alpha mattes for editing software, ideal for compositing tasks. Wonder Studio provides composited videos or 3D scene data for Unreal Engine, supporting VFX tweaks. Runway complements broad post-production needs. Wonder Studio automates end-to-end character insertion for VFX shots.

Runway ML is a versatile tool for AI-assisted video editing, while Wonder Studio excels in automated character animation for VFX. Runway’s broader feature set suits creators needing multiple AI tools, but it doesn’t match Wonder Studio’s specialized CG integration.

What makes DeepMotion a viable alternative for motion capture and animation?

DeepMotion’s Animate 3D excels in AI-driven motion capture, offering a strong alternative to Wonder Studio for animating 3D characters. Here’s why it stands out:

- Markerless Motion Capture: Generates 3D animations from standard video with physics-based tracking for natural motion. Reduces jitter for accurate results, similar to Wonder Studio’s actor capture. Ideal for realistic character movements in various projects. Suits creators needing reliable mocap without gear.

- Animation Output: Outputs FBX/BVH files for Blender, Maya, Unity, or Unreal, providing raw animation data. Unlike Wonder Studio’s scene-integrated composites, it’s tool-agnostic for custom projects. Flexible for game or film pipelines. Requires manual integration for VFX shots.

- Ease of Use: Web-based platform with minimal setup, where users upload video for AI processing. Shallow learning curve for indie creators and professionals. Praised for requiring little technical expertise. Streamlines animation workflows for accessibility.

- Viability and Use Cases: Suits game development, AR/VR, and indie films with multi-person tracking support. Offers a free trial for budget-conscious creators. Ideal for animating game characters or CGI figures. More manual than Wonder Studio but highly flexible.

While DeepMotion focuses on raw animation data rather than compositing, it pairs well with 3D software for realistic character animation. Its affordability and flexibility make it a compelling choice for creators needing motion capture without Wonder Studio’s full VFX pipeline.

How does Kinetix offer AI-powered animation solutions for creators?

Kinetix provides AI-driven 3D animation for games and interactive media, offering an alternative to Wonder Studio’s VFX focus. Here’s how it serves creators:

- Video to Animation (Emotes): Converts video performances into 3D avatar animations (emotes) for games. Automates rigging and retargeting for quick, game-ready clips. Similar to Wonder Studio’s performance capture process. Ideal for fast character animation needs.

- Integration with Game Engines: Offers Unity/Unreal SDKs for real-time animation generation in games or apps. Enables user-generated content, unlike Wonder Studio’s offline process. Streamlines interactive workflows for developers. Supports dynamic game or metaverse experiences.

- Platform and Features: Cloud-based with a user-friendly web app and asset library for non-coders. Supports real-time team collaboration via the cloud. Simplifies animation creation with templates. Emphasizes accessibility and speed for creators.

- Who it’s for: Targets indie game developers and metaverse creators for interactive media. Unlike Wonder Studio’s filmmaker focus, it prioritizes games and VR. Suits projects needing quick animation clips. Less suited for producing final VFX shots.

Kinetix’s usage-based pricing suits sporadic use, making it cost-effective for interactive content creators. It prioritizes animation clips over final VFX shots, offering developers flexible integration.

What are the key differences between Move.ai and Wonder Dynamics in VFX workflows?

While both Move.ai and Wonder Studio capture human performances for digital characters, their workflows and outputs differ significantly. Here are the key differences:

- Capture Method: Move.ai uses 2-6 cameras for high-fidelity markerless motion capture, mimicking a virtual mocap stage. Requires more setup than Wonder Studio’s single-camera approach for scene footage. Offers greater accuracy for complex motions like stunts. Ideal for detailed animation libraries.

- Output and Integration: Move.ai delivers FBX motion data for manual integration in 3D software like Maya or Blender. Wonder Studio outputs composited videos or 3D scenes with CG characters. Move.ai requires traditional compositing tools for VFX. Wonder Studio automates the full VFX pipeline.

- Workflow Use Cases: Move.ai suits traditional pipelines needing animation libraries or complex stunts with high fidelity. Wonder Studio streamlines replacing actors with CG characters in post-production. Move.ai is modular for custom projects. Wonder Studio is ideal for automated VFX shots.

- Real-time vs Offline: Move.ai’s live sync (beta) enables previews in Unreal Engine for on-set use. Wonder Studio processes offline, requiring footage upload for results. Move.ai supports director previews better. Wonder Studio focuses on post-production workflows.

- Facial and Hands: Wonder Studio animates faces and hands automatically using blendshapes for expressions. Move.ai focuses on body motion, requiring separate facial solutions. Wonder Studio covers full performance capture. Move.ai prioritizes skeletal accuracy for body movements.

Move.ai offers customizable, high-fidelity motion data for modular workflows, while Wonder Studio provides a faster, automated solution for simpler VFX shots. Professionals might combine both for complex projects.

How does Plask provide AI motion capture and animation tools for developers?

Plask offers a web-based, developer-friendly platform for AI motion capture and animation, ideal for indie creators and small teams. It provides tools in the following ways:

- Web-Based Motion Capture: Captures motion via webcam or uploaded video in the browser for real-time tracking. No software installation needed, aligning with Wonder Studio’s accessibility. Ideal for lightweight workflows or web apps. Suits developers with minimal resources.

- Built-in Animation Editing: Includes an online editor for refining and retargeting motion with a drag-and-drop interface. Streamlines iteration without external tools like Unity or Blender. Lowers skill barriers for developers. Simplifies testing animations on 3D models.

- Developer-Friendly Outputs: Exports FBX, BVH, and JSON for game engines or custom pipelines. JSON supports web animations for unique applications. Ensures flexible integration with standard formats. Aligns with animation workflows for developers.

- Collaboration and Cloud Storage: Cloud-based platform enables project sharing for remote team collaboration. Reduces pipeline friction for small studios or distributed teams. Enhances accessibility for group workflows. Simplifies animation project management.

- Target Audience: Targets indie animators, educators, and developers for apps or prototypes. Suits workshops or hackathons needing quick motion capture. Offers accessible solutions for animation. Less focused on VFX compositing than Wonder Studio.

- No Specialized Hardware Needed: Requires only a basic camera, like Wonder Studio, for high accessibility. Supports webcam-based real-time capture for ease of use. Simplifies motion capture setup for resource-limited developers. Aligns with indie-friendly ethos.

Plask’s freemium model and focus on general-purpose animation make it a versatile alternative for developers, though it lacks Wonder Studio’s VFX compositing.

What are the pricing models of top Wonder Dynamics alternatives like Runway ML and DeepMotion?

Pricing is a crucial factor when choosing an AI VFX platform. Here’s a breakdown of how some top alternatives are priced (as of 2025), especially compared to Wonder Studio’s subscription model:

- Runway ML: Credit-based subscription with a Free tier (125 credits for testing). Standard ($15/mo) provides monthly credits for video/image generation. Pro ($28–$35/mo) adds features, while Unlimited ($95/mo) removes most limits. Flexible for occasional use, and cheaper than Wonder Studio’s Pro plan.

- DeepMotion (Animate 3D): Freemium with a trial; Starter ($9/mo) offers basic animation seconds. Innovator ($17/mo) and Professional ($39/mo) increase output and features. Business/Unlimited ($79–$83/mo) suits high-volume needs. The Starter and Innovator tiers undercut Wonder Studio’s Lite plan.

- Kinetix: Usage-based model with free integration and ~$0.10–$0.15 per emote after a free quota. No traditional tiers; costs accrue with usage volume. Cost-effective for sporadic animation needs in games. Suits developers with variable output requirements.

- Move.ai: Professional pricing at ~$100–$150/mo or $1200+/year for basic plans, often custom for studios. Pricier but cost-efficient for high-fidelity mocap with multi-camera support. Less accessible for indie creators than other options. Suits serious, high-throughput projects.

- Plask: Freemium model with premium plans (~$20–$30/mo) for priority processing and storage. Indie-friendly pricing, comparable to streaming services for basic needs. Aligns with Wonder Studio’s accessibility ethos. Suits creators needing affordable animation tools.

In comparison, Wonder Studio (Wonder Dynamics) now has:

- Lite: $29.99 monthly (~150 seconds of footage processing, limited resolution).

- Pro: $149.99 monthly (more credits, 1080p+ support, more storage).

- Annual Discounts: Lite ~$16.99/mo, Pro ~$84.99/mo when paid yearly.

- Free Tier: In beta with very limited usage.

Runway and DeepMotion offer affordable entry points with free tiers, unlike Wonder Studio’s limited beta access. Move.ai is pricier but cost-efficient for professional mocap, while Kinetix and Plask provide flexible, low-cost options for specific use cases.
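To make the numbers above easier to compare, here is a minimal Python sketch that works through the arithmetic. It uses only prices quoted in this guide. The function names are illustrative, the 150-second figure for Lite is approximate, and because the size of Kinetix's free quota isn't stated here, `free_quota` is a hypothetical parameter that defaults to 0.

```python
# Prices quoted in this guide (USD, as of 2025).
PLANS = {
    "Wonder Studio Lite": {"monthly": 29.99, "annual_per_month": 16.99},
    "Wonder Studio Pro": {"monthly": 149.99, "annual_per_month": 84.99},
}


def annual_savings(monthly, annual_per_month):
    """Return (dollars saved per year, fraction saved) when billed annually."""
    full_year = monthly * 12
    discounted_year = annual_per_month * 12
    saved = full_year - discounted_year
    return round(saved, 2), round(saved / full_year, 3)


def cost_per_second(monthly_price, seconds_included):
    """Rough price per second of processed footage on a given plan."""
    return round(monthly_price / seconds_included, 3)


def usage_cost(emotes, price_per_emote, free_quota=0):
    """Usage-based bill: only emotes beyond the free quota are charged.

    free_quota is a hypothetical parameter; the guide gives no figure for it.
    """
    billable = max(0, emotes - free_quota)
    return round(billable * price_per_emote, 2)


if __name__ == "__main__":
    for name, plan in PLANS.items():
        saved, fraction = annual_savings(plan["monthly"], plan["annual_per_month"])
        print(f"{name}: save ${saved:.2f}/year ({fraction:.1%}) on annual billing")

    # Lite includes roughly 150 seconds of footage per month.
    print("Lite cost per second:", cost_per_second(29.99, 150))

    # Kinetix at the low and high ends of the quoted per-emote range.
    print("100 emotes:", usage_cost(100, 0.10), "to", usage_cost(100, 0.15))
```

On these figures, annual billing trims a little over 40% from either Wonder Studio plan, and 100 Kinetix emotes a month lands in the same range as DeepMotion's Starter and Innovator tiers.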

How do these alternatives integrate with popular 3D software like Blender and Maya?

AI VFX platforms aim to integrate seamlessly with popular 3D software like Blender, Maya, and game engines like Unreal Engine and Unity, ensuring compatibility with existing workflows. Each tool supports standard file formats or direct integrations to slot into professional pipelines, allowing creators to import, refine, or render assets as needed.

- Wonder Studio: Exports animation data, camera tracks, and clean plates as FBX, USD, or native scene files for Blender, Maya, and Unreal Engine. Direct Unreal Engine scene export includes camera and lighting for immediate refinement. Blender users can import FBX or scene files to tweak animations or composites. Supports seamless integration for final polishing in 3D software.

- DeepMotion: Outputs animations as FBX or BVH, importable into Blender, Maya, Unity, or Unreal. Provides retargeting profiles to match common rigs, simplifying integration. Users import FBX into Blender or Maya to attach to custom models. API available for developers to automate pipeline integration.

- Kinetix: Offers Unity and Unreal SDKs for direct animation integration during development or runtime. Exports FBX or GLB for Blender and Maya, requiring manual import. Strongest in game engines, with asset library animations usable directly in Unity/Unreal. Blender/Maya integration involves standard file import steps.

- Move.ai: Outputs FBX motion capture data, importable into Blender, Maya, Unity, or Unreal. Unreal plugin enables live-preview with MetaHuman rigs. Integration scripts support Blender and Maya for applying mocap to custom rigs. Comparable to importing Vicon/OptiTrack data, requiring retargeting in 3D software.

- Plask: Exports FBX, BVH, and JSON, compatible with Blender, Maya, Unity, and Unreal. Optimized for Blender and Unreal with matching skeleton structures. JSON supports custom web pipelines (e.g., Three.js). Browser-based workflow allows quick drag-and-drop into Blender for animation application.

- Runway ML: Outputs video files or image sequences, not 3D assets, for use in After Effects or Premiere. Can provide textures or backplates for Blender/Maya scenes. Python SDK enables pipeline automation for batch processing. Primarily integrates with 2D editing, not direct 3D software workflows.

These tools prioritize open formats (FBX, BVH) and plugins/SDKs to ensure compatibility. Blender and Maya users benefit from standard imports, while Unreal/Unity users gain from direct integrations, enhancing workflow flexibility.
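BVH, one of the formats mentioned above, is plain text, so a downloaded clip can be sanity-checked before it is imported into Blender or Maya. The sketch below is a deliberately minimal reader, not a full BVH parser: it lists joint names and reads the frame count and frame time. The function name and the tiny sample clip are made up for illustration and are not output from any of the tools discussed.

```python
def summarize_bvh(text):
    """Return joint names, frame count, and frame time from BVH text.

    Reads only ROOT/JOINT declarations and the MOTION header lines;
    offsets, channels, and per-frame values are ignored.
    """
    joints = []
    frames = None
    frame_time = None
    for raw_line in text.splitlines():
        tokens = raw_line.split()
        if not tokens:
            continue
        if tokens[0] in ("ROOT", "JOINT") and len(tokens) >= 2:
            joints.append(tokens[1])
        elif tokens[0] == "Frames:" and len(tokens) >= 2:
            frames = int(tokens[1])
        elif tokens[:2] == ["Frame", "Time:"] and len(tokens) >= 3:
            frame_time = float(tokens[2])
    return {"joints": joints, "frames": frames, "frame_time": frame_time}


# A hand-written two-joint clip, used only to exercise the function.
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0.0 10.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 5.0 0.0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.033333
0.0 90.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0
0.0 90.5 0.0 1.0 0.0 0.0 2.0 0.0 0.0
"""

if __name__ == "__main__":
    info = summarize_bvh(SAMPLE_BVH)
    print("Joints:", info["joints"])
    print("Clip length (s):", info["frames"] * info["frame_time"])
```

A check like this helps confirm that the exported skeleton's joint names match the target rig before you spend time on retargeting.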

What are the system requirements for using AI-powered VFX platforms similar to Wonder Dynamics?

Most AI VFX platforms are cloud-based, offloading heavy computation to servers, resulting in minimal local system requirements. Users need a stable internet connection and a modern browser to handle video uploads and results, with some tools requiring modest hardware for previews or integration.

- Wonder Studio / Autodesk Flow Studio: Requires a modern browser (Chrome/Edge) and stable internet for uploading large video files. No specific hardware needed; the cloud handles all processing. A modest PC (8GB RAM) handles the interface and previews. Input video may need to meet a minimum resolution (e.g., HD).

- Runway ML: Runs via web interface or lightweight desktop app using cloud compute. Needs Chrome/Edge and 8GB RAM for smooth browser previews. No GPU required; cloud handles AI tasks. Local Renderer (optional) may need a GPU for specific effects.

- DeepMotion: Web-driven; minimal requirements for video upload/download. Any device with a browser suffices. 3D software (Blender/Maya) for results requires capable PC. Stable internet needed for processing large video inputs.

- Kinetix: Cloud-based; needs internet access for processing. SDK integration requires Unity/Unreal-compatible dev PC. Browser interface works on typical laptops. Good connection ensures efficient animation generation and uploads.

- Move.ai: Cloud processing; requires recent iPhones or cameras for multi-angle video capture. Needs storage for large video files and fast internet for uploads. Web-based interface runs on standard PCs. On-premises option (rare) demands strong hardware.

- Plask: Browser-based; needs Chrome and webcam for capture. Modest laptop handles 720p webcam feed. Cloud processes motion data, requiring minimal local specs. Stable internet supports video uploads and result downloads.

General Requirements: 64-bit OS (Windows 10+, macOS, Linux), 8GB+ RAM, high-speed internet for large files, and storage for videos/animations. GPU aids local previews but isn’t mandatory. Cloud reliance ensures accessibility on standard hardware.

How do user reviews rate the performance and reliability of these alternatives?

User feedback highlights the performance and reliability of AI VFX tools, emphasizing time-saving capabilities and occasional limitations. Reviews from platforms like G2, Futurepedia, and community forums provide insights into real-world usage.

- Wonder Studio: Rated 4.6/5; praised for innovative automation and ease. Users call it “game-changing” for simple shots but note beta-like glitches in complex scenes. Reliable for straightforward composites; may struggle with fast motions. Community forums like r/vfx discuss workarounds for limitations.

- Runway ML: Scores ~4.5/5 on G2; lauded for versatility and intuitive interface. Green Screen and editing tools are reliable and fast, though credit limits frustrate some. Stable, with occasional peak-time slowdowns. Broad user base shares tips on Discord and r/runwayml.

- DeepMotion: Rated 4.8/5; valued for accurate body motion capture. Users report significant time savings, though jittery outputs need cleanup. Reliable for standard movements; input video quality critical. Active Discord and forum support enhance usability.

- Kinetix: Limited ratings but praised for game dev integration. Users appreciate efficiency but note slow processing for heavy tasks. Learning curve for SDK integration reported. Developer-focused Discord and Unity forums provide growing community support.

- Move.ai: High fidelity praised in pro forums; best for complex motions with multi-camera setups. Reliable but sync issues with phones possible. Costly for casual use; strong for production. Unreal forums and Reddit offer setup tips and comparisons.

- Plask: Near 5/5 on Product Hunt; loved for accessibility and browser-based ease. Decent accuracy for single-camera; shaky outputs need smoothing. Reliable for hobby projects; improving features. Vibrant Discord and YouTube tutorials aid beginners.

Users find these tools reliable for reducing grunt work, with cleanup often needed for polished results. Active communities enhance performance through shared best practices.
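The cleanup reviewers mention often starts with simple smoothing of jittery channels. As a rough illustration, here is a centered moving average applied to one per-frame value, such as a joint's height. This is a generic filter sketched under simple assumptions, not the method any of these tools uses internally, and the function name is illustrative. Rotation channels that wrap around at ±180° would need extra care.

```python
def moving_average(values, window=5):
    """Smooth a per-frame channel with a centered moving average.

    Near the start and end of the clip, the window shrinks to the frames
    that exist, so the output has the same length as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


if __name__ == "__main__":
    # A made-up jittery channel: the value flickers between 0 and 10.
    jittery = [0, 10, 0, 10, 0]
    print(moving_average(jittery, window=3))
```

A wider window removes more jitter but also softens fast, intentional motion, so animators typically tune it per clip or rely on the filtering tools built into their 3D software.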

What are the strengths and weaknesses of each Wonder Dynamics alternative?

Each alternative to Wonder Dynamics’ Wonder Studio has unique strengths and weaknesses, catering to specific project needs. Below is a summarized analysis of their capabilities and limitations.

- Runway ML: Highly versatile platform offering video editing, image generation, and effects, with an intuitive interface for fast iteration, ideal for creators needing multiple tools quickly. It enables quick subject isolation and clip generation, outpacing manual methods significantly. However, it lacks 3D character integration or CG replacement, and its credit system can make long projects costly. Text-to-video output isn’t photorealistic, making it a complementary tool rather than a direct VFX substitute.

- DeepMotion (Animate 3D): Excels at capturing accurate body motion from video, integrating into any 3D pipeline, saving days of keyframing with quick outputs, and offering a free tier for indie users. It achieves high accuracy for standard movements, enhancing animator efficiency. Outputs may need cleanup for jitter or sliding feet and rely heavily on input video quality. Complex stunts or interactions can confuse the system, and it lacks facial animation capture.

- Kinetix: Provides real-time animation with seamless Unity/Unreal integration, automating rigging to make 3D animation accessible to non-animators, with collaboration features and new AI models for game developers. It’s ideal for games and interactive content creation. Usage-based pricing may deter creators, and its developer focus is less approachable for artists. Film VFX requires manual tweaks for pixel-perfect results, lacking nuanced facial or finger motion capture.

- Move.ai: Delivers high-quality motion capture rivaling suit-based systems, capturing complex motions without markers using multi-camera setups, reliable for small studio production work, and suitable for professional-grade projects. Multi-camera setups are logistically challenging and costly for casual users. It’s primarily body-focused, requiring separate tools for face and hand capture, and needs skilled artists for scene integration, unlike Wonder Studio’s all-in-one approach.

- Plask: Browser-based simplicity enables quick, free motion capture on modest hardware, with a user-friendly interface for immediate animation editing, supporting multi-person capture for group scenes, and accessible to beginners without expensive software. Accuracy lags behind high-end mocap systems, with shaky outputs needing smoothing. It’s limited to human motion, excluding non-human subjects, and lacks an SDK for automated integration, with complex motions requiring refinement.

In summary, user reviews are generally positive across these AI VFX tools. They all deliver on the promise of saving time and effort:

- Runway: praised for ease and versatility.

- DeepMotion/Plask/Move.ai: praised for freeing users from mocap suits and giving solid results, with the caveat that some cleanup or careful use is needed for perfect results.

- Wonder Studio: praised as a revolutionary concept that works “like magic” on simple scenes, but criticized for occasional glitches on complex shots (e.g., losing track on fast motions or heavy occlusion).

Importantly, many reviewers emphasize that these tools, while powerful, don’t completely eliminate the expert’s touch: they greatly reduce grunt work, but polished results can still require cleanup or refinement by an artist or animator. Reliability is improving with each update, and the communities around each tool often share tips for getting the best performance (such as how to film for the best DeepMotion results).

How do these platforms handle real-time rendering and compositing?

AI VFX tools vary in their approach to rendering and compositing, with none offering full real-time frame-by-frame output like game engines. Most prioritize fast processing or live previews, with compositing handled to varying degrees.

- Wonder Studio: Offline cloud processing; shots take minutes based on complexity. Automates compositing with clean plates and matched lighting. Produces near-final composites but lacks real-time rendering. Complex effects like water interaction require external tools.

- Runway ML: Near real-time feedback for 2D effects (e.g., Green Screen matte in seconds). Cloud GPUs enable fast previews; final video generation takes longer. Outputs alpha masks for external compositing. No 3D rendering; suits 2D video workflows.

- DeepMotion: Offline processing; short clips process in minutes. Outputs animation data (FBX/BVH) without compositing. Rendering done in external 3D software (Unity/Unreal for real-time). Focuses on motion, not scene integration.

- Kinetix: Near real-time animation application via Unity/Unreal SDKs. Cloud processes animations in seconds to minutes. In-engine rendering handles real-time visuals; no video compositing. Suits game and interactive contexts over film VFX.

- Move.ai: Offers real-time mocap preview in Unreal with slight latency. High-quality solving is offline; compositing done externally. Unreal’s real-time rendering can combine motions with scenes. Best for virtual production with post-production compositing.

- Plask: Real-time webcam mocap preview in browser; shows simplified character motion. Final data refined offline; no compositing features. External tools (Blender/Unreal) handle rendering. Ideal for quick motion tests or live demos.

Runway and Plask offer the fastest feedback, while Move.ai and Kinetix support real-time mocap previews. Wonder Studio excels in automated compositing but requires processing time.

What support and community resources are available for users of these alternatives?

Support and community resources are crucial for mastering AI VFX tools. Each platform offers documentation, tutorials, and communities, with varying levels of engagement and accessibility.

- Wonder Studio: Official Help Center with FAQs and in-app tutorials (e.g., “Character Creation Rules”). Active Discord community from beta phase; r/vfx forum discussions. Autodesk forums may support Flow Studio. Paid tiers offer email/ticketing support.

- Runway ML: Extensive help guide and Discord server with team presence. Active r/runwayml subreddit and YouTube tutorials. Courses available for content creation. Paying users get email support; enterprise offers dedicated assistance.

- DeepMotion: Knowledge base, tutorial videos, and active Discord channel. Community forum (forums.deepmotion.com) for user Q&A. Team responds on YouTube and forums. Priority email support for paying customers; indie-focused community.

- Kinetix: Developer documentation for SDKs/API; Discord for creators/devs. Blog and newsletters highlight projects and tips. Unity forums and gamedev subreddits discuss usage. Enterprise users get account managers; indies use community support.

- Move.ai: Help center with camera setup guides; Slack/Discord for users (invite on signup). Unreal forums and Reddit (r/vfx) share tips. Team active at conferences (GDC/SIGGRAPH). Paid users receive direct, one-on-one support.

- Plask: Tutorial section, documentation, and responsive Discord community. YouTube reviews and how-to videos by users. Team adds features based on feedback. Pro subscribers get email support; free users rely on community.

In practice, the choice depends on whether you need live output or fast offline turnaround:

- If you need a live interactive session (such as motion capture for a live event), combine Move.ai for body capture with an engine like Unreal for rendering; Wonder Studio can’t do that live.

- If you need rapid turnaround for VFX shots on a deadline, Wonder Studio, Runway, and the other AI tools cut the time dramatically compared to traditional methods, though you still wait for processing (minutes rather than days).

Communities on Discord, Reddit (r/vfx, r/animation), and YouTube provide shared tips and workarounds. Official documentation is robust, ensuring users can troubleshoot and learn efficiently.

How do these AI VFX tools cater to indie filmmakers and small studios?

AI VFX tools democratize advanced effects for indie filmmakers and small studios by reducing costs, simplifying workflows, and offering scalable solutions tailored to limited budgets and resources.

- Lowering Cost and Barrier to Entry: Wonder Studio requires only a camera, replacing costly mocap stages. Plask offers free mocap, bypassing $50k suit costs. Runway provides affordable editing tools. DeepMotion’s free tier suits short projects, making VFX accessible.

- Ease of Use and Speed: Wonder Studio’s automated pipeline allows directors to handle VFX alone. Runway’s intuitive interface simplifies editing for generalists. Plask’s browser-based mocap is instant. DeepMotion speeds up animation, saving days for tight deadlines.

- Scalability and On-Demand Usage: Subscription models (e.g., Wonder Studio, DeepMotion) allow use only when needed. Kinetix’s pay-per-use fits sporadic game dev needs. Freemium tiers (Plask, Runway) support testing and small projects. No permanent software costs required.

- Community and Learning: Wonder Studio’s beta films inspire indies; Runway and DeepMotion spotlight user projects. Plask’s YouTube tutorials aid beginners. Active Discords (all tools) and r/vfx share workflows, helping indies learn fast and gain exposure.

- Limitations: Complex scenes (e.g., heavy occlusion) may need workarounds in Wonder Studio. Runway lacks 3D integration. Move.ai’s multi-camera setup is costly. Indies must plan shots to maximize AI capabilities, avoiding overambitious sequences.

These tools empower indies to achieve high production value, closing the gap with blockbusters. Strategic shot planning mitigates limitations, enhancing output quality.

What are the learning curves associated with adopting these Wonder Dynamics alternatives?

AI VFX tools have gentler learning curves than traditional VFX workflows, but each varies based on interface simplicity, required 3D knowledge, and setup complexity. Community resources help flatten these curves.

- Wonder Studio: Simple interface; basic results achievable on day one. Learning nuances (e.g., shot selection, 3D model prep) takes days. Requires basic 3D concepts (rigs, blendshapes) for pro use. Tutorials and Discord ease adoption for beginners.

- Runway ML: Intuitive like a video editor; basic tasks (Green Screen) learned in hours. Crafting effective text prompts for generative tools needs practice. Advanced features (API, custom models) require technical skills. YouTube and r/runwayml accelerate learning.

- DeepMotion: Straightforward web interface; upload and download is easy. Learning curve lies in applying FBX to 3D software (Blender/Maya). Beginners need days to master retargeting; 3D artists find it plug-and-play. Guides and forums simplify 3D integration.

- Kinetix: Web interface is easy for non-devs; SDK integration needs Unity/Unreal knowledge. Exploring features (asset library, collaboration) takes days. Developers spend days on SDK setup; artists learn faster. Documentation and Discord support full utilization.

- Move.ai: Steepest curve due to multi-camera setup and calibration. Filming and uploading require careful documentation study. Applying mocap data is standard but needs 3D skills. Trial runs and Unreal/Reddit tips help small studios master it.

- Plask: Easiest; webcam mocap is like a Zoom call, learned in hours. Applying to custom rigs requires basic rigging knowledge. Editor GUI simplifies tweaks for beginners. Discord, YouTube tutorials make full use achievable in a day.

In essence, all these tools shorten the learning curve considerably compared with traditional methods: learning keyframe animation in Maya takes far longer than learning to capture motion in Plask. For a user starting with no background:

- Plask and Runway are easiest (very little 3D knowledge needed).

- Wonder Studio is also easy to get basic results, slightly more to master.

- DeepMotion and Kinetix require some knowledge of 3D or coding respectively to use fully, but are still easy in their core usage.

- Move.ai is the most involved, but still easier than setting up an optical mocap stage from scratch!

Each platform’s community also helps flatten the curve: tutorial videos and step-by-step guides mean you rarely have to learn by trial and error alone.

How do these platforms ensure data security and privacy for users?

When uploading videos of performers or using cloud AI services, privacy and data security are important. All platforms leverage secure cloud infrastructure (e.g., AWS, Google Cloud) with encryption in transit (HTTPS) to protect data during uploads and downloads. Account security includes standard protections like passwords and two-factor authentication (2FA) where offered, with no reported breaches. Privacy policies ensure user control over content, allowing deletion of sensitive footage, and most platforms commit to not using uploads for model training without consent.

- Cloud Infrastructure & Encryption: Platforms use secure cloud providers like AWS or Google Cloud for data storage. Encryption in transit (HTTPS) safeguards uploads and downloads from interception. Privacy statements confirm secure data handling practices. No reported vulnerabilities have compromised user data.

- Data Usage Policies: Uploaded content remains user-owned, with policies preventing unauthorized model training. Users can typically remove videos via account dashboards. Content moderation (e.g., Kinetix) ensures compliance with service terms. Encourages trust through transparent data practices.

- Account Security: Account creation requires email and password, with 2FA available on some platforms. Standard security measures protect personal information. No known data breaches reported across these services. Users are advised to secure their accounts diligently.

- Deletion and Retention: Users can delete projects or footage from cloud storage via dashboards. Limited storage plans incentivize data removal after use. Critical for protecting sensitive unreleased content. Ensures user control over data retention.

- Privacy Statements: Autodesk Flow (Wonder Studio) follows enterprise-grade Autodesk trust guidelines. Other platforms provide clear privacy policies outlining data use. Enterprise tiers may offer on-premise options for sensitive projects. Builds confidence in data handling.

- Exported Data and Local Security: Downloaded files (e.g., FBX) require local security by users. Platforms provide secure, expiring download links. Enterprise users may access VPN-secured data. Shifts responsibility to users post-download.

- Anonymization: Motion capture outputs (skeletal data) are not personally identifiable. Original videos remain private within user accounts. Wonder Studio avoids facial recognition in CG character mapping. Reduces privacy risks for actor likeness.

- Content Moderation and Terms: Automated moderation (e.g., Kinetix) detects illicit or copyrighted uploads. Protects services from misuse without human viewing unless flagged. Ensures compliance with platform policies. Enhances community and service security.

These platforms prioritize secure data handling and user control, making them suitable for most indie creators. Reviewing specific Terms of Service is recommended for sensitive projects to ensure compliance with privacy needs.
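Once an export has been downloaded, keeping it safe is the user's job. As a minimal sketch of that local housekeeping, assuming nothing about any platform's API, the Python below checks that a download link uses HTTPS and records a SHA-256 fingerprint of a downloaded file so later copies can be compared against it. The function names and the example URL are illustrative only and do not come from any of the tools discussed.

```python
import hashlib
from urllib.parse import urlparse


def is_https(url: str) -> bool:
    """Return True if the link uses HTTPS (encryption in transit)."""
    return urlparse(url).scheme == "https"


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read in chunks so large exports (FBX, MOV) do not fill memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Hypothetical link, used only to show the check.
print(is_https("https://example.com/exports/shot_010.fbx"))
```

Storing the digest alongside the project notes gives a simple way to confirm that a file shared with collaborators is the same one that was originally downloaded.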

What are the export options and file formats supported by these AI VFX tools?

Export capabilities are crucial as they determine how you can use the results in other software. Here’s what each platform offers:

- Wonder Studio (Wonder Dynamics): Exports CG character animation, camera tracking, clean plates, and composites as FBX, USD, or Unreal Engine scenes. Supports Blender project files and MP4/MOV videos for composites. Includes mask sequences for custom compositing. Enables integration with 3D software and game engines.

- Runway ML: Exports videos as MP4 or image sequences as PNG for editing tasks. Green Screen tool provides foreground video with alpha or matte sequences. Generated images are downloadable as PNGs. Suits 2D workflows with no 3D model exports.

- DeepMotion (Animate 3D): Outputs motion capture data as FBX or BVH for 3D software compatibility. May support USD or motion JSON for developers. Includes MP4 video previews of animated characters. Focused on animation data for pipeline integration.

- Kinetix: Exports animations as FBX or GLB/GLTF for 3D software and web use. SDK integration delivers animations directly in Unity/Unreal projects. Web platform provides downloadable FBX and video previews. Primarily supports game engine workflows.

- Move.ai: Exports skeletal animation as FBX for 3D software and Unreal projects. Unreal plugin enables MetaHuman retargeting or animation assets. May support Maya .MA/.MB files with baked animation. Focused on universal mocap data compatibility.

- Plask: Exports motion data as FBX, BVH, or JSON for 3D software and custom pipelines. JSON supports web apps with per-frame joint data. No rendered video output; provides browser-based motion previews. Suits animation integration across platforms.
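The export options listed above can be collected into a small lookup table when planning a pipeline. The Python sketch below transcribes only the formats this guide states outright; formats described as "may support" are left out, as are engine scenes and project files. The names `EXPORTS`, `tools_exporting`, and `common_formats` are illustrative.

```python
# Export formats per tool, transcribed from the list above.
# Hedged entries ("may support ...") are deliberately omitted.
EXPORTS = {
    "Wonder Studio": {"FBX", "USD", "MP4", "MOV"},
    "Runway ML": {"MP4", "PNG"},
    "DeepMotion": {"FBX", "BVH", "MP4"},
    "Kinetix": {"FBX", "GLB", "GLTF"},
    "Move.ai": {"FBX"},
    "Plask": {"FBX", "BVH", "JSON"},
}


def tools_exporting(fmt: str) -> list[str]:
    """Return the tools (sorted by name) that list the given format."""
    wanted = fmt.upper()
    return sorted(tool for tool, formats in EXPORTS.items() if wanted in formats)


def common_formats(*tools: str) -> set[str]:
    """Return the formats shared by every named tool."""
    sets = [EXPORTS[tool] for tool in tools]
    return set.intersection(*sets) if sets else set()


print(tools_exporting("bvh"))  # ['DeepMotion', 'Plask']
```

A query such as `common_formats("DeepMotion", "Plask")` shows which formats two mocap tools share, which helps when a project mixes captures from both.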

Some tools export metadata like mask sequences or T-pose skeletons, enhancing compositing flexibility. Interoperability via standard formats (FBX, MP4) ensures practical use in production environments.
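Because BVH is a plain-text format, an exported skeleton can be inspected without opening a 3D package. The sketch below is a minimal reader, not a full parser: it collects joint names from the `ROOT` and `JOINT` lines and reads the frame count and frame time from the `MOTION` header. The sample data is hand-written for illustration and is not output from any of the tools discussed.

```python
def read_bvh_summary(text: str) -> dict:
    """Collect joint names, frame count, and frame time from BVH text."""
    joints = []
    frames = None
    frame_time = None
    for raw in text.splitlines():
        parts = raw.split()
        if not parts:
            continue
        if parts[0] in ("ROOT", "JOINT") and len(parts) > 1:
            joints.append(parts[1])
        elif parts[0] == "Frames:" and len(parts) > 1:
            frames = int(parts[1])
        elif parts[:2] == ["Frame", "Time:"] and len(parts) > 2:
            frame_time = float(parts[2])
    return {"joints": joints, "frames": frames, "frame_time": frame_time}


# Tiny hand-written BVH clip: two joints, two frames.
SAMPLE = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0.0 10.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 5.0 0.0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.0333333
0 0 0 0 0 0 0 0 0
0 1 0 0 0 0 0 5 0
"""

summary = read_bvh_summary(SAMPLE)
print(summary["joints"], summary["frames"])  # ['Hips', 'Spine'] 2
```

A quick check like this confirms that a capture contains the joints a target rig expects before any time is spent on retargeting.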

How do these alternatives handle facial animation and lip-syncing?

Facial animation and lip-sync are critical for character realism. The AI tools vary in their support for this:

- Wonder Studio (Wonder Dynamics): Wonder Studio transfers actor facial performances to CG characters with blendshapes, capturing eye movements and dialogue lip-sync. Quality depends on character setup and footage clarity, excelling in broad expressions. It’s performance-based, requiring actors to speak lines for accurate lip-sync. Subtleties may need manual tweaks for close-ups.

- DeepMotion: DeepMotion focuses on body animation, with limited facial tracking via SayMotion for basic head/jaw movements. It lacks detailed facial capture or lip-sync, requiring third-party tools like FaceCap. Users combine it with external solutions for facial animation. Body motion remains its primary strength.

- Kinetix: Kinetix prioritizes body animation for emotes/dances, with no facial or lip-sync support. Facial animation isn’t included, focusing on body motion and head orientation. External tools like Oculus Lipsync are needed for lip-sync. Future updates may add facial capabilities.

- Move.ai: Move.ai captures body motion only, with no current facial or lip-sync support. Face tracking is possible with dedicated cameras but isn’t output. Separate methods like ARKit are required for facial capture. Body animation is the core focus.

- Plask: Plask captures body motion, excluding facial animation or lip-sync. It doesn’t generate face rig animation curves, so a separate facial capture tool is required. Designed for body dynamics, it needs external facial solutions. Hand capture is under development.

- Other Tools: Specialized AI tools like Lalamu Studio provide lip-sync for facial animation. Users combine these with body capture tools for complete animation. No single tool covers both except Wonder Studio. Ecosystem integration meets diverse needs.

- Lip-sync from audio vs performance: Only Wonder Studio uses actor performance for lip-sync; others, except DeepMotion’s SayMotion, don’t generate it from audio. Separate AI tools like Papagayo-NG are needed for audio-based lip-sync. Wonder Studio excels with spoken dialogue capture.

- Quality: Wonder Studio’s facial animation is decent but varies, requiring manual tweaks for dramatic close-ups. It handles expressions and lighting well in wide shots. Subtle lip-sync issues are less noticeable in creature animations. Fine-tuning enhances close-up quality.

In summary:

- Full body + face: Wonder Studio is the only one in this list doing both out-of-the-box (and even fingers). It automates a lot of facial acting but within limits.

- Body only: DeepMotion, Move.ai, Plask, Kinetix are body-centric. They require complementary solutions for detailed face animation and lip movements if your project needs character dialogue or emotive facial performance.

Wonder Studio uniquely supports body and facial animation, though quality varies for close-ups. Other tools focus on body motion, requiring external solutions for facial and lip-sync needs.

What are the latest updates and features introduced in these platforms?

AI platforms are rapidly evolving. As of 2024-2025, here are some of the notable recent updates for each:

- Wonder Dynamics (Wonder Studio): Introduced Unreal Engine scene export with cameras, animation, and lighting in 2024. Wonder Animation beta reconstructs full 3D scenes from video. Acquired by Autodesk in 2024 and later rebranded as Autodesk Flow Studio, with tighter tool integration. Shifted from free beta to paid Lite/Pro tiers with a credit system.

- Runway ML: Launched the Gen-3 Alpha video model in 2024, followed by Gen-4 in 2025, along with a multimodal AI editor. Added Infinite object removal, Motion Tracking AI, and improved Green Screen accuracy. Introduced an iOS mobile app and enhanced timeline editing. Increased resolution and length limits for Gen-2 videos.

- DeepMotion: Added multi-person tracking and hand tracking for finger motions in 2024. Improved physics filtering to reduce jitter and foot slide. Introduced SayMotion for text-to-animation and API/SDK for app integration. Enhanced capture quality with stabilization toggles.

- Kinetix: Launched AI Emote Creator SDK and improved character retargeting in 2023-2024. Developing Video Generation Model for motion-controlled video. Started $1M SuperGrant for game devs. Expanded asset library and creator-friendly UI for broader accessibility.

- Move.ai: Supported iPhone footage and diverse cameras (GoPros, webcams) in 2024. Released Unreal Engine plugin for MetaHuman retargeting. Improved processing speeds and teased real-time capture for 2025. Enhanced accessibility for non-studio setups.

- Plask: Introduced multi-person capture and animation style filters (stability vs. accuracy) in 2023. Added glTF/GLB exports for web/USD workflows. Launched community gallery for shared animations. Continuously refined AI to reduce capture noise.

These tools evolve quickly, addressing user demands and expanding capabilities. Regular checks of official blogs ensure users stay updated on new features.

Where can users find tutorials and documentation for getting started with these alternatives?

Getting started resources are abundant for these platforms:

- Official Documentation:

- Wonder Studio (Autodesk Flow): Official Help Center provides Getting Started guides, export instructions, and FAQs. Autodesk’s site offers Flow Studio overviews and basics. YouTube channel features tutorials like “How to animate a character.” Videos cover workflows and character setup.

- Runway ML: Help Center details features with examples and tutorial videos on YouTube. Blog posts include step-by-step creative technique guides. Videos cover Green Screen, Gen-2, and more. Interface guides support quick learning for new users.

- DeepMotion: Offers tutorial videos on YouTube and written API/best practices docs. Forum includes staff-answered Q&A on motion alignment. Guides explain optimal filming techniques. Resources focus on practical Animate 3D usage.

- Kinetix: Developer docs with code samples for SDK integration on portal. YouTube and blog provide creator tutorials on video-to-animation. Unity SDK guides available for developers. Content emphasizes accessible animation workflows.

- Move.ai: Website hosts setup guides for camera calibration and uploads. Webinars and YouTube videos cover iPhone recording. Documentation details camera placement requirements. Official resources are critical due to limited community tutorials.

- Plask: Tutorial page on website explains recording and editing animations. YouTube channel offers short “MoCap in 1 minute” videos. Interface includes tooltips and guided tours. Blog features user case studies for inspiration.

- Community and Third-Party Tutorials:

- YouTube & Blogs: Creators share guides like “Wonder Studio tutorial” by Hugo’s Desk, “Runway ML for beginners,” and “Plask mocap tutorial” by Blender users. Videos cover DeepMotion, Kinetix, and Move.ai workflows. Real-world usage and troubleshooting are highlighted. Search yields abundant step-by-step content.

- Reddit & Forums: Subreddits r/vfx, r/animation, and r/gameDev host threads with user answers. Discussions provide mini-tutorials on tool-specific issues like DeepMotion foot sliding. Community insights complement official resources. Searching uncovers practical tips and comparisons.

- Official Communities: Discord servers for Runway, Kinetix, and others have #tutorials or #tips channels. Users share techniques, e.g., Runway’s Discord showcases creative looks. Kinetix’s Discord pins getting-started messages. Community interaction accelerates learning.

- Academic/Professional Guides: Sites like 80.lv and fxguide offer tutorial-like articles on tool usage. GDC/SIGGRAPH talks, uploaded online, serve as expert tutorials. Presentations reference AI mocap applications. Resources provide advanced insights for professionals.

Users should start with official docs for setup, watch YouTube tutorials for workflows, and practice with test projects. Community resources enhance learning with practical tips.

Having covered all these points in detail, we can now proceed to some frequently asked questions to further clarify how these Wonder Dynamics alternatives stack up in practice.

FAQ Questions and Answers

- Is there a free alternative to Wonder Studio (Wonder Dynamics) for AI character animation?

Free alternatives like Plask offer browser-based mocap, while DeepMotion provides a trial for limited animations. Runway ML’s free plan includes video editing credits, though less comprehensive than Wonder Studio. Combining these tools can replicate AI animation with more manual effort. They enable cost-effective animation for budget-conscious creators.

- How does Runway ML’s output quality compare to Wonder Studio’s results?

Wonder Studio delivers film-quality CG character composites with integrated lighting, ideal for VFX. Runway ML produces stylized AI videos or high-quality masks, requiring extra work for CG integration. Wonder Studio excels in polished 3D shots, while Runway suits creative video tasks. Runway’s masks match Wonder Studio’s actor isolation quality.

- Can DeepMotion or Move.ai capture face and fingers like Wonder Studio does?

Wonder Studio captures facial expressions and finger movements, unlike DeepMotion and Move.ai, which focus on body motion. DeepMotion needs separate facial tools; Move.ai may add finger tracking later. Users combine iPhone-based facial mocap with these for full animation. Only Wonder Studio offers integrated face and hand capture.

- Is Wonder Dynamics’ Wonder Studio better than hiring a VFX artist for character animation?

Wonder Studio automates most animation tasks, ideal for indie creators and simple shots. Complex scenes with occlusions require an artist’s polish for nuanced results. It saves significant time and cost but may need tweaks. Studios use it to assist, not replace, professional artists.

- Can I use Wonder Studio or its alternatives without special hardware or a high-end PC?

These cloud-based tools require no high-end hardware, running on standard laptops with internet access. Wonder Studio, Runway ML, DeepMotion, and Plask process AI computations server-side. Move.ai uses iPhones, Plask a webcam, for capture. Adequate bandwidth supports video uploads and downloads.

- Is Wonder Studio or an alternative like Kinetix better for game development?

Kinetix is tailored for game development, offering Unity/Unreal SDKs for real-time animations. Wonder Studio focuses on film VFX, producing pre-rendered composites unsuitable for games. Kinetix, DeepMotion, or Plask provide game-ready animation data. Wonder Studio suits cinematic cutscene pre-visualization.

- How does Wonder Studio’s cost compare to alternatives like Runway ML or DeepMotion?

Wonder Studio’s plans ($30-$150/month) are costlier than Runway ML ($15-$35/month, free tier) and DeepMotion ($9-$17/month, free trial). Plask’s free/Pro (~$20) and Kinetix’s usage-based pricing are cheaper options. Wonder Studio’s comprehensive features may justify costs for specific projects. Budget creators benefit from exploring affordable alternatives first.

- How can I access Wonder Dynamics’ Wonder Studio, and are alternatives easier to access?

Wonder Studio is accessible through its website: you sign up for an account, choose a plan (Lite or Pro), and use it via the web interface. During early beta it was invite-only, but it’s now open to the public as Autodesk Flow Studio (formerly Wonder Studio), so anyone can sign up and start a subscription (or possibly a trial if offered). Alternatives are generally very easy to access:

- Runway ML: Go to runwayml.com, create a free account, and you can start using it immediately in your browser (no install needed, though there’s an optional desktop app).

- DeepMotion: Go to deepmotion.com, sign up (free trial available), and use the web portal to upload videos.

- Plask: Visit plask.ai. You can try a demo without signing up, but saving or exporting requires a free account. It runs in-browser.

- Kinetix: Head to kinetix.tech and create an account. The animation tool is web-based. If you want the SDK, you download it from their developer portal, but for just trying it out, it’s all online.

- Move.ai: Sign up on their site to get an account. Move.ai is a bit more involved because you also need their app on iPhones (if using phones for capture) before uploading footage. Signing up is straightforward, and they often grant a trial if you request one.

In summary, alternatives are often one sign-up away with free trials, whereas Wonder Studio now requires picking a paid plan for full access (though it might have some demo mode). From an ease-of-access perspective, Runway and Plask are the easiest (instant free use in the browser), DeepMotion comes next (free but with limited usage until you pay), and Wonder Studio requires committing to a subscription earlier in the process. All these services are cloud-based, so you don’t have to download large software packages or worry about installation conflicts, which makes trying them quite convenient.

- Will AI tools like Wonder Studio replace traditional motion capture suits?

AI markerless mocap like Wonder Studio and Move.ai is cost-effective for indie and basic work, reducing suit reliance. High-end productions still use suits for precise, subtle motion capture. AI struggles with fast motions or occlusions, but its quality is improving. A hybrid approach persists, with AI closing the gap.

- Are there open-source alternatives to Wonder Studio for AI VFX?

No mature open-source tool fully replicates Wonder Studio’s AI VFX pipeline. Blender with Mixamo or OpenPose/Mediapipe enables manual motion capture but lacks automation. These require technical effort and yield less polished results. Proprietary tools remain superior for user-friendly, turnkey solutions.

Conclusion

AI-powered VFX platforms like Wonder Studio are transforming animation and visual effects, with alternatives like Runway ML for video editing, DeepMotion, Move.ai, and Plask for markerless mocap, and Kinetix for game animations. Wonder Studio excels at replacing actors with CGI, while creators combine tools like DeepMotion for motion capture, Blender for polishing, and Runway for background removal to achieve similar results. Indie filmmakers and small studios benefit from cost-effective, time-saving VFX solutions. Game developers leverage AI for rapid animation production. Project needs, budget, quality targets, and skills determine tool choice, supported by gentle learning curves and strong communities. These tools empower creators to integrate high-quality CGI and VFX with unprecedented ease.


Recommended

- Top 20 Ugliest Video Game Characters: A Celebration of Unconventional Design

- How do I set up a VR camera in Blender?

- How do I set a camera as the active camera in Blender?

- The View Keeper Add-on: Why Every Blender Artist Needs It

- Improving Blender Rendering: How The View Keeper Makes Rendering a Fun Experience

- Mesh to Metahuman: How to Scan Yourself and Create a Realistic Digital Double in Unreal Engine 5

- How to Create a 3D Environment Like Arcane: A Step-by-Step Guide

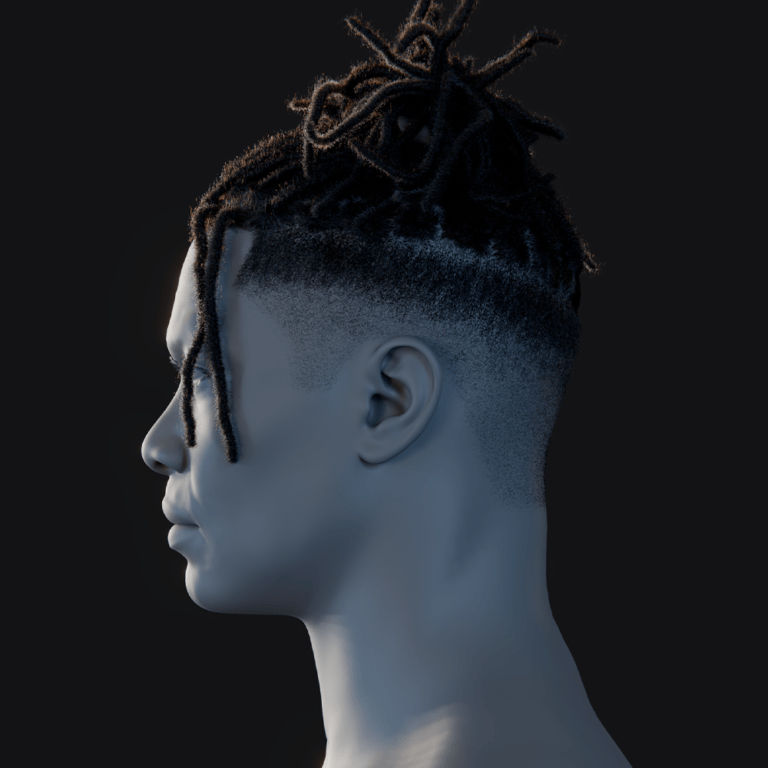

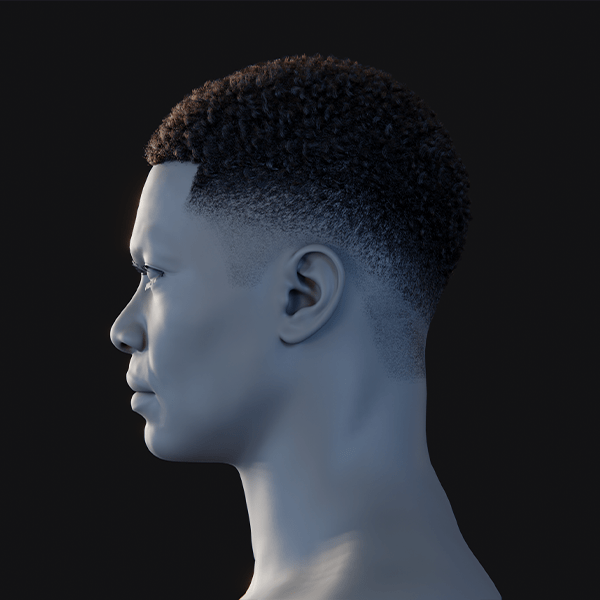

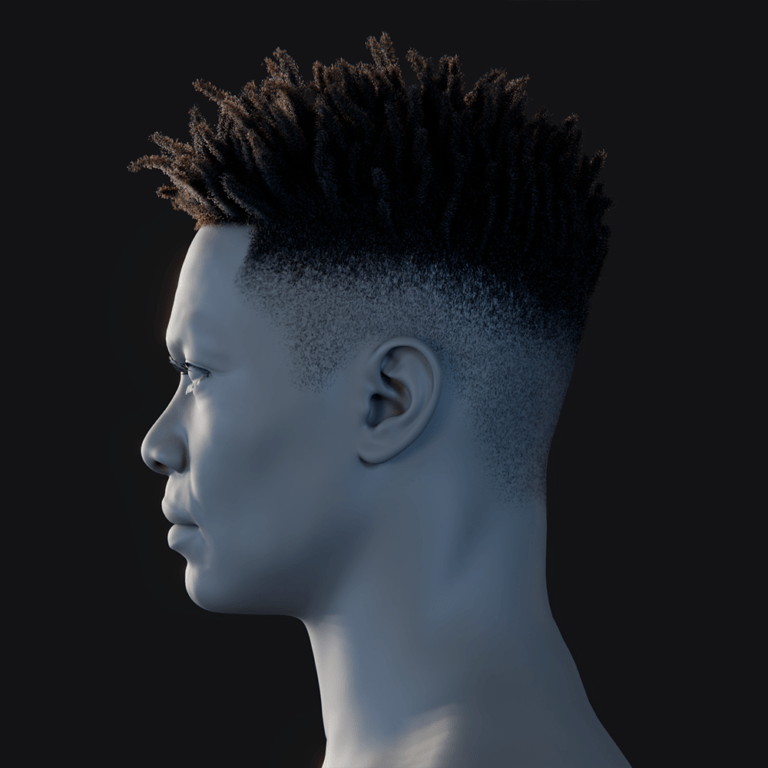

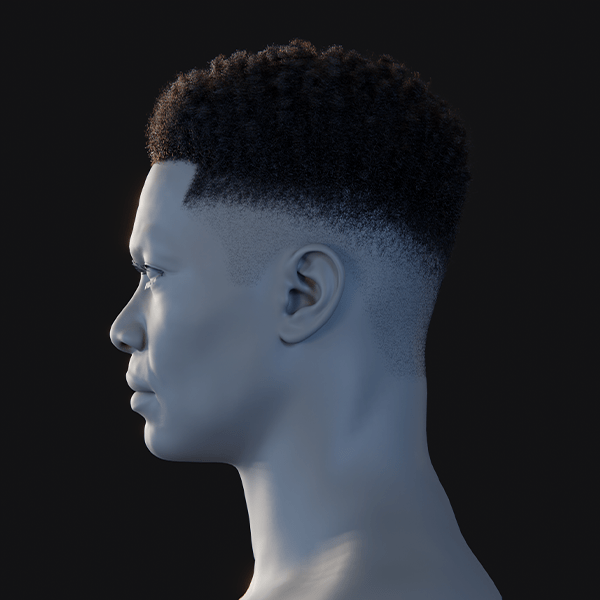

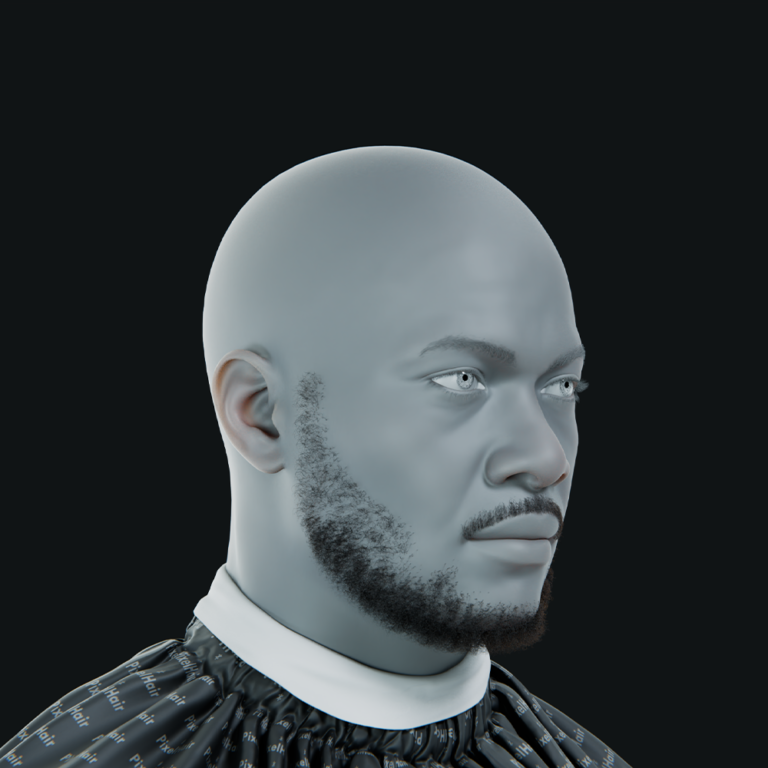

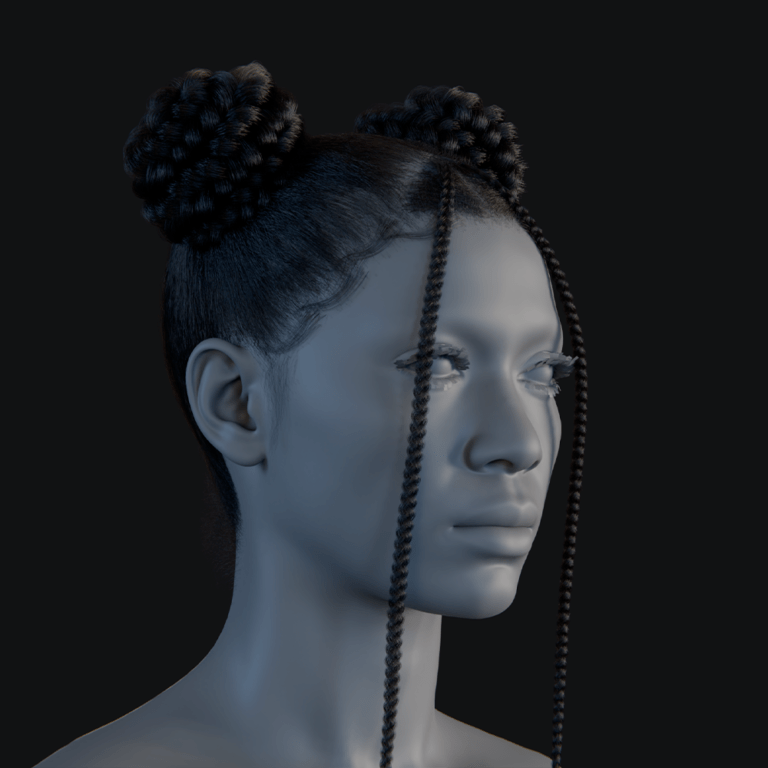

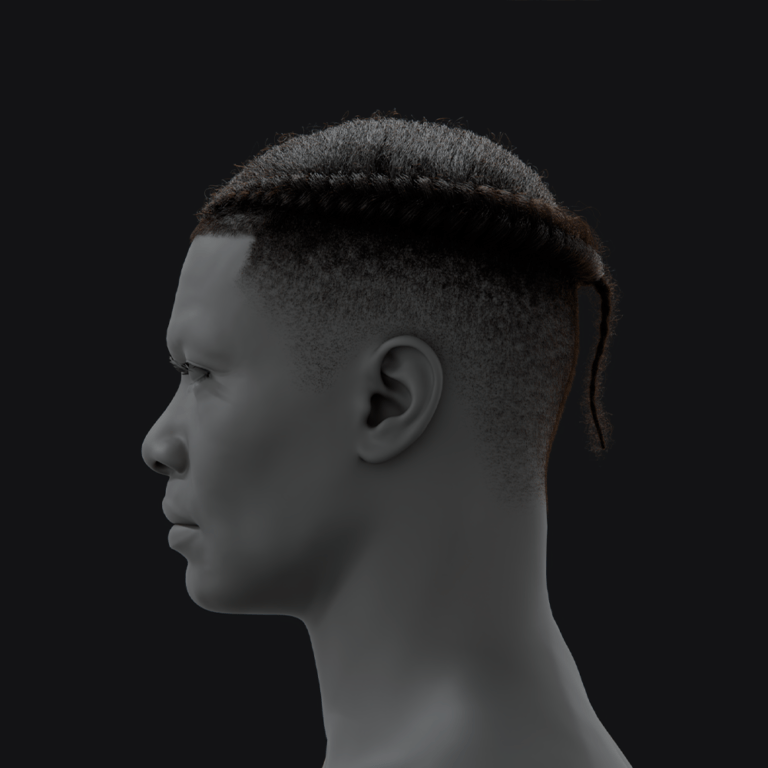

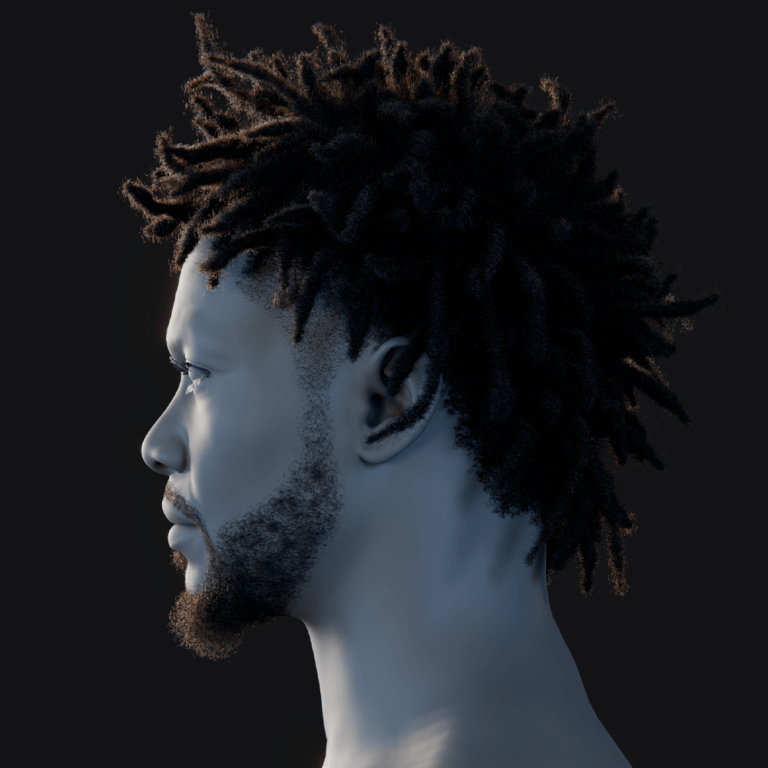

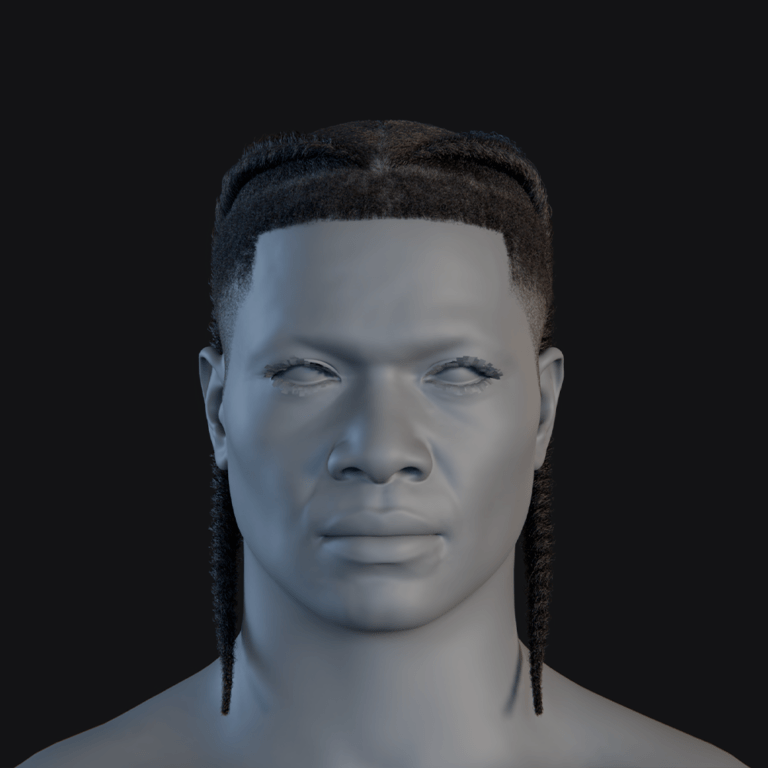

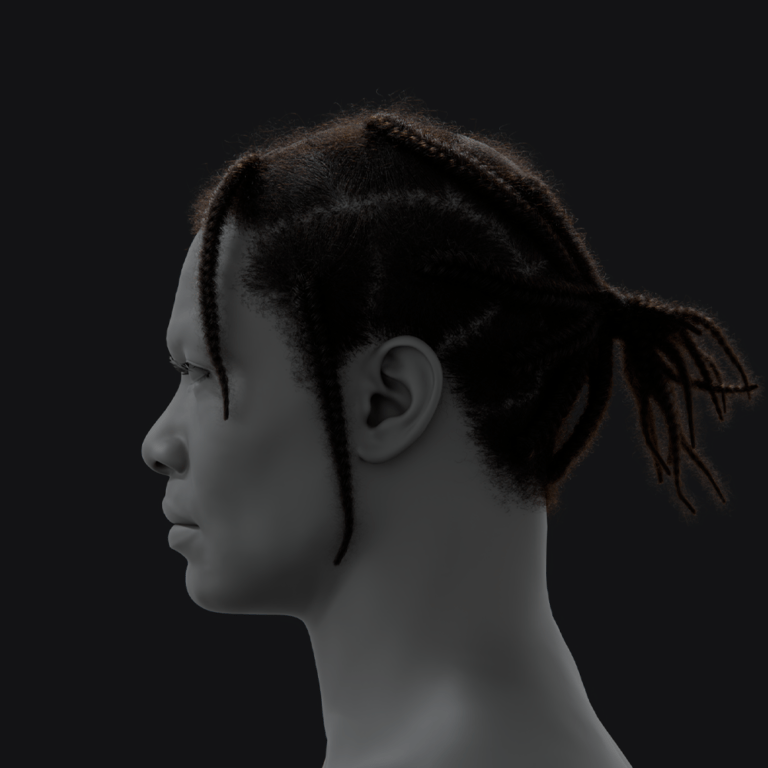

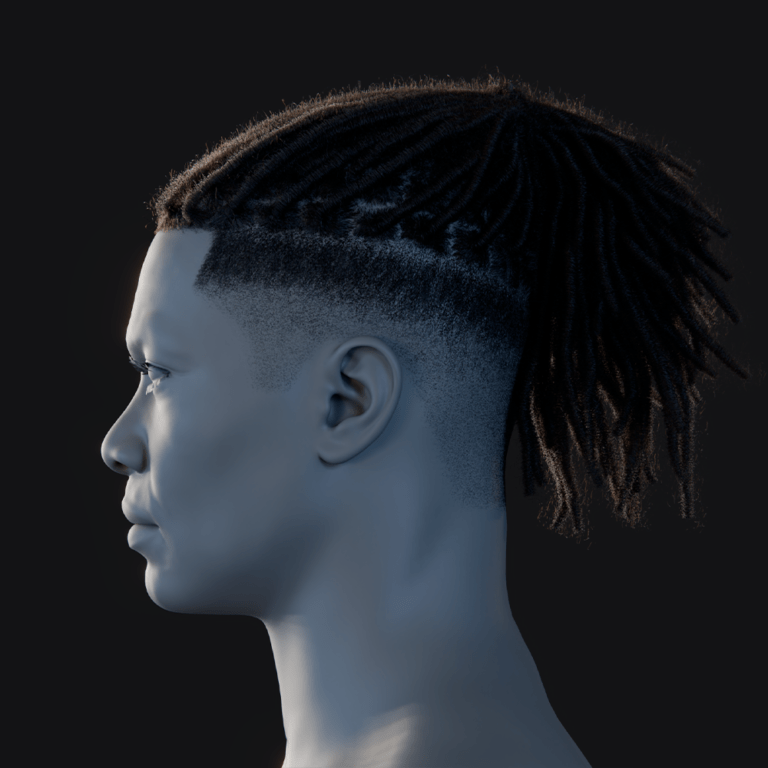

- The Ultimate Guide to Hair for Games: Techniques, Tools, and Trends

- How to Make a Character Follow a Path in Blender: A Complete Beginner’s Guide

- How to Retarget Any Animation in Unreal Engine: Mixamo, CC3, Daz3D, and Metahuman Workflow Guide