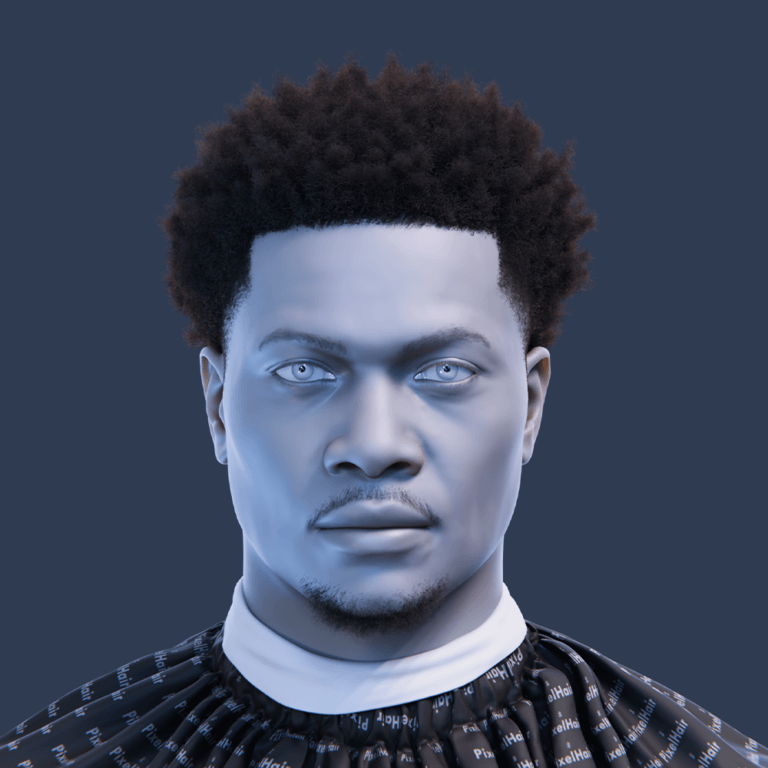

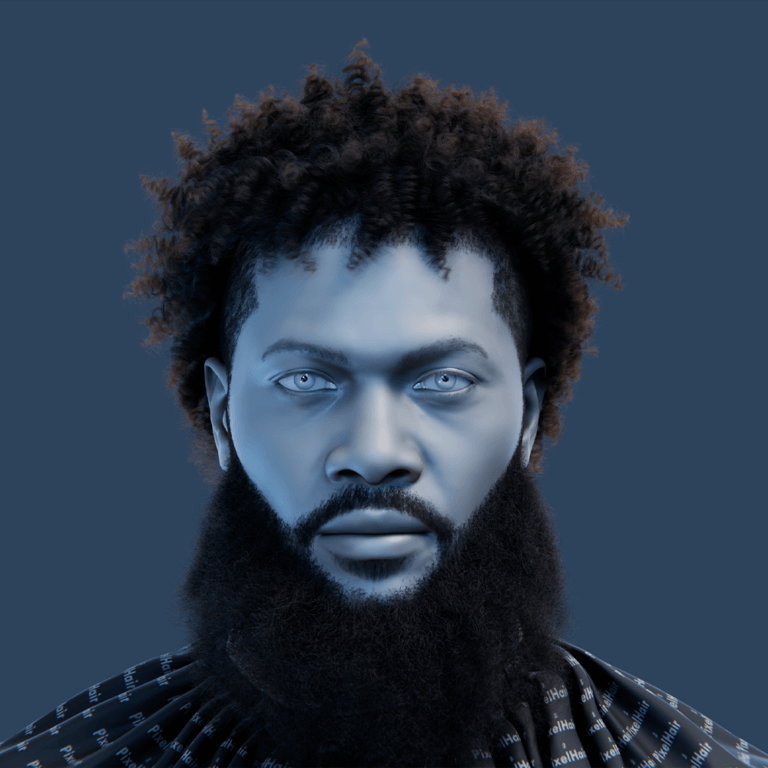

Creating a digital alter ego for the Metaverse is now more achievable than ever. With Epic Games’ MetaHuman Creator and Unreal Engine 5, anyone can design a lifelike virtual avatar that represents their identity or creativity in immersive worlds. In this comprehensive guide, we’ll explore what a metaverse alter ego is, how to build one using MetaHuman, and tips for customizing and deploying your digital self across virtual platforms. We’ll cover the workflows, tools (including PixelHair for custom hairstyles), animation techniques, compatibility considerations, and future trends – all in an easy-to-follow format for beginners and experienced creators alike. Let’s dive into bringing your virtual identity to life!

What is an alter ego in the Metaverse and how is it used?

In the Metaverse, an alter ego is a virtual avatar representing a user in 3D digital worlds, enabling interaction in VR spaces, games, and social platforms. Unlike simple usernames, these avatars can reflect personality and style, fostering immersive engagement. Alter egos allow users to socialize, work, or explore creative identities, often anonymously, in virtual environments like concerts, meetings, or role-playing games. Realistic avatars enhance authenticity by conveying facial expressions and body language, bridging the gap between virtual and real interactions.

These digital personas extend beyond gaming characters, forming emotional connections with users and enhancing social presence. For instance, detailed avatars can act as virtual influencers or embody idealized or fantastical personas. By narrowing psychological distances, alter egos make virtual communication feel more genuine, supporting diverse applications from entertainment to professional settings in the Metaverse.

How do I create a digital alter ego using MetaHuman in Unreal Engine?

To create a photorealistic digital alter ego, use Epic’s MetaHuman Creator, a cloud-based app integrated with Unreal Engine 5. The process is user-friendly and requires no advanced 3D modeling skills. Here’s the workflow:

- Access MetaHuman Creator: Sign up for a free Epic Games account and launch the Creator in a supported browser with a stable internet connection. Because it runs via cloud streaming, it needs no costly local software, and it’s free to use for Unreal Engine projects. Its intuitive interface keeps the process approachable for beginners.

- Choose a Starting Model: Pick a preset from a library of human faces spanning diverse ethnicities and genders, then blend in features from other presets to create a unique base. This gives you a personalized starting point for customization without any manual modeling.

- Customize Facial Features: Adjust details such as the nose, jaw, and eyes with intuitive controls. Because the system draws on real human scans, it keeps changes within plausible human features, which simplifies the design process. A wide range of skin tones and eye colors supports diverse representation, so you can build a character that resembles you or an imagined persona.

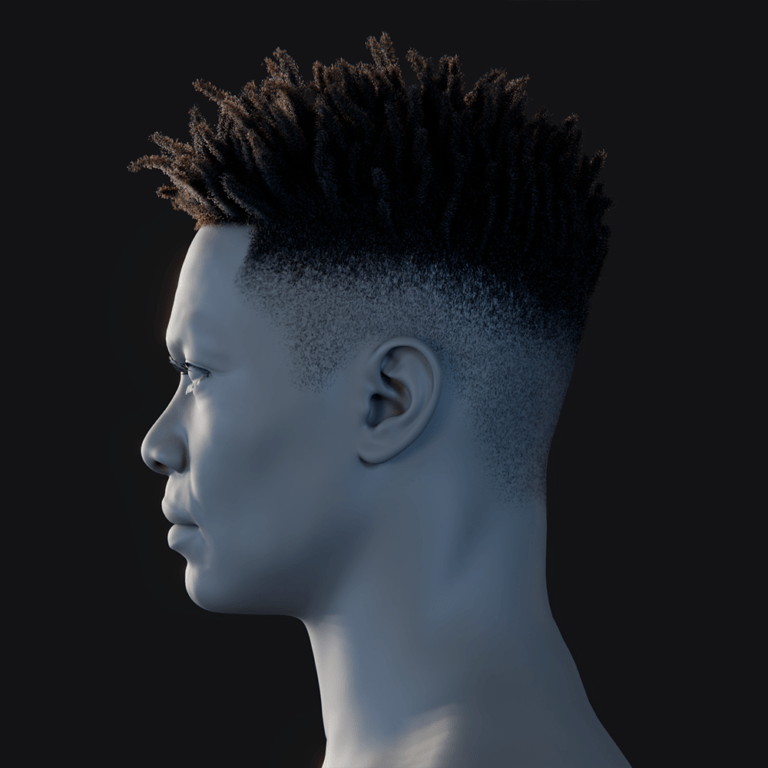

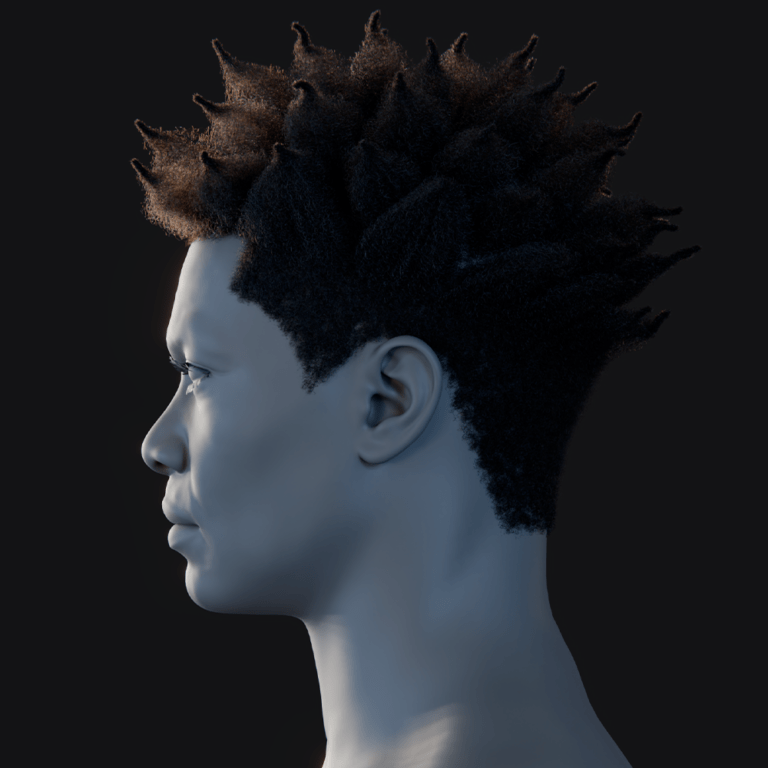

- Select Hair and Body Details: Choose from a library of realistic strand-based hairstyles and facial hair, body types, and preset clothing. The hair grooms are highly realistic, but clothing choices are limited. Pick styles that reflect your alter ego’s personality to give the avatar its visual identity.

- Refine and Save: Tweak skin complexion, makeup, and iris texture, then save the named MetaHuman to the Epic cloud library. Fine details like blemishes or freckles enhance character depth. The cloud storage ensures easy access for future edits. This finalizes the avatar’s appearance before integration.

- Import into Unreal Engine 5: Download the MetaHuman into Unreal Engine 5 via Quixel Bridge, which imports the mesh, rig, and animation controls for real-time use. The fully rigged character ships with eight Levels of Detail (LODs), which swap in lighter meshes as the character moves farther from the camera. From there you can animate, customize, or place the character in a virtual world.

MetaHuman Creator takes the complexity out of modeling and yields a production-ready avatar in minutes. Inside Unreal Engine, the character can be animated or integrated into metaverse projects, and optional features like Mesh to MetaHuman let you import a custom face.
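To make the eight Levels of Detail mentioned above more concrete, here is a minimal Python sketch of screen-size-based LOD selection, the general technique engines use to swap in lighter meshes for distant characters. The thresholds are hypothetical placeholders, not MetaHuman’s shipped settings, and `select_lod` is an illustrative helper rather than an Unreal API.

```python
from typing import Sequence

# Hypothetical minimum screen-size fractions for LOD0..LOD7 (eight LODs).
# 0.5 means the character's bounds cover roughly half the screen height.
# These are placeholders for illustration, not MetaHuman's real settings.
LOD_SCREEN_SIZES: Sequence[float] = (0.5, 0.35, 0.25, 0.15, 0.1, 0.06, 0.03, 0.0)


def select_lod(screen_size: float,
               thresholds: Sequence[float] = LOD_SCREEN_SIZES) -> int:
    """Return the most detailed LOD whose minimum screen size is met."""
    for lod, minimum in enumerate(thresholds):
        if screen_size >= minimum:
            return lod
    # Only reachable if the last threshold is above zero: use the coarsest LOD.
    return len(thresholds) - 1


if __name__ == "__main__":
    for size in (0.8, 0.3, 0.05, 0.001):
        print(f"screen size {size:.3f} -> LOD{select_lod(size)}")
```

Ordering thresholds from largest to smallest means the loop naturally returns the highest-detail LOD the current screen size justifies.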

Can MetaHuman be used to build realistic avatars for the Metaverse?

Absolutely. MetaHumans are among the most realistic avatars available today, which makes them ideal for lifelike metaverse experiences. They are built from a database of high-fidelity 3D scans of real people, and the Creator only allows natural-looking modifications, so the results are highly photorealistic. The detail in skin, hair, and facial animation is comparable to CGI characters in films, so you can embody a virtual self that looks and moves like a real human, greatly enhancing immersion.

For example, MetaHumans capture subtle facial expressions (eye movements, smirks, frowns) that many simpler avatars cannot. Each MetaHuman face rig exposes a dense set of controls for replicating human expressions, which can translate into more meaningful social interactions in the Metaverse. These hyper-realistic avatars help create emotional connection: users respond to them almost as they would to real faces, which is valuable in virtual meetings, education, or any scenario where non-verbal expression matters.

What tools do I need to design a MetaHuman alter ego for virtual worlds?

Designing a MetaHuman alter ego relies on free tools from Epic that require minimal 3D expertise. Here’s what you need:

- MetaHuman Creator (Cloud App): This browser-based tool lets you design your avatar’s appearance using cloud computing, so you only need a supported browser and an internet connection. No local installation is required, and its sliders make character creation approachable at any skill level.

- Unreal Engine 5: This free game engine is where you import your MetaHuman (via Quixel Bridge), animate it, and place it in virtual worlds. It also handles advanced edits such as material adjustments or custom clothing, and it scales to professional-grade projects.

- MetaHuman Plugin for UE: This optional plugin adds features like Mesh to MetaHuman and MetaHuman Animator for deeper customization and animation. It’s installed through the Epic Games Launcher, and MetaHuman Animator requires UE 5.2 or later. It suits users who want advanced workflows such as importing custom meshes.

- 3D Modeling & Content Tools (Optional): Tools like Blender or Maya can create custom clothing or accessories, imported into Unreal for unique designs. Blender is popular for its free access and compatibility with UE5. These tools are unnecessary for basic avatars but enhance personalization. They support advanced users crafting bespoke assets.

- Hardware for Mocap (Optional): Devices like an iPhone with TrueDepth camera or motion capture suits animate your avatar in real time. These are optional for design but enable lifelike movements. Basic webcams can also provide simple tracking. Such hardware elevates interactive experiences.

The MetaHuman Creator and Unreal Engine 5 form the core pipeline, eliminating the need for expensive software or rigging skills. Optional tools like Blender or mocap hardware offer advanced customization, making the process flexible for creators of all levels.

How do I customize facial features, hair, and clothing for my MetaHuman avatar?

MetaHuman Creator offers robust customization for your avatar’s appearance. Here’s how to personalize facial features, hair, and clothing:

- Facial Features: Adjust head, eyes, nose, and more using sliders, ensuring realistic results from real-human data. Add skin details like freckles or makeup for personality. The system supports diverse complexions and plausible modifications. This creates a unique face, resembling you or a new persona.

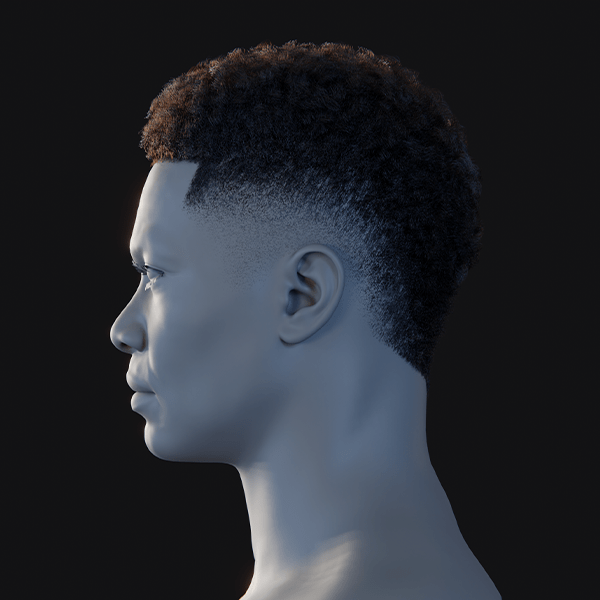

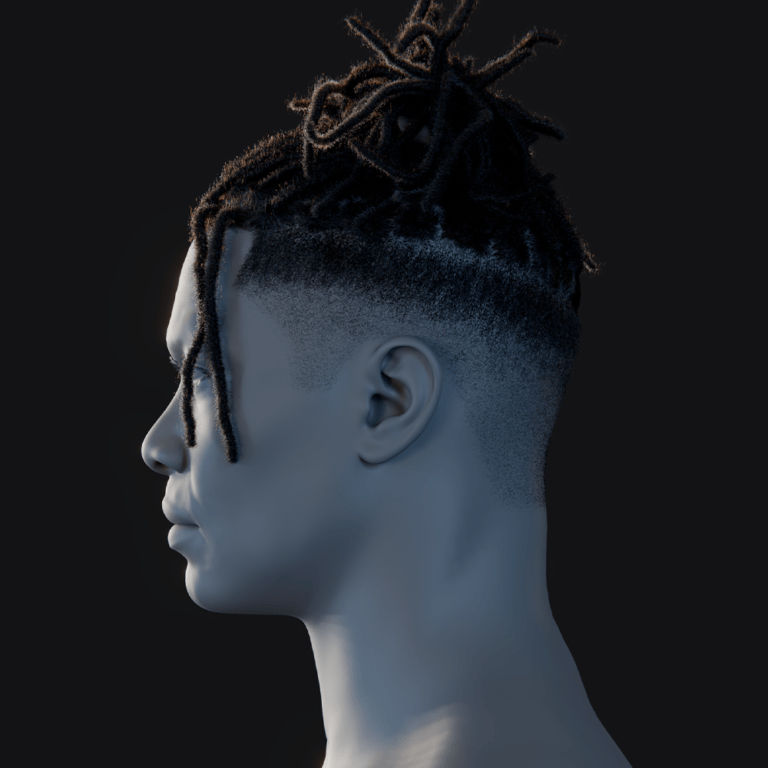

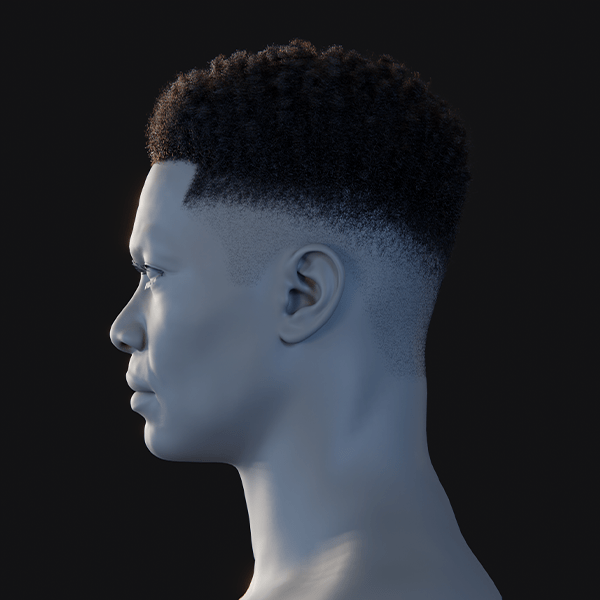

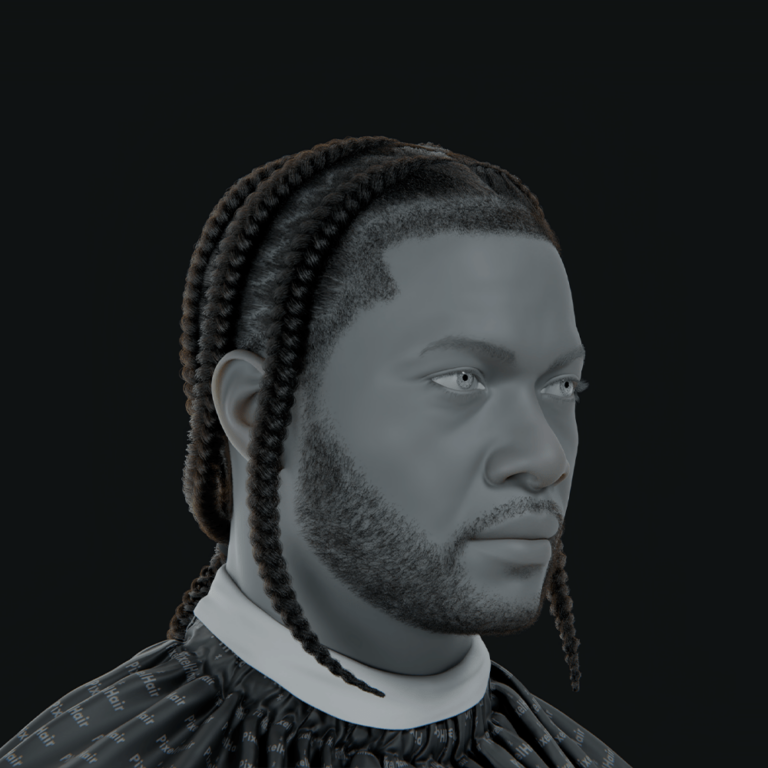

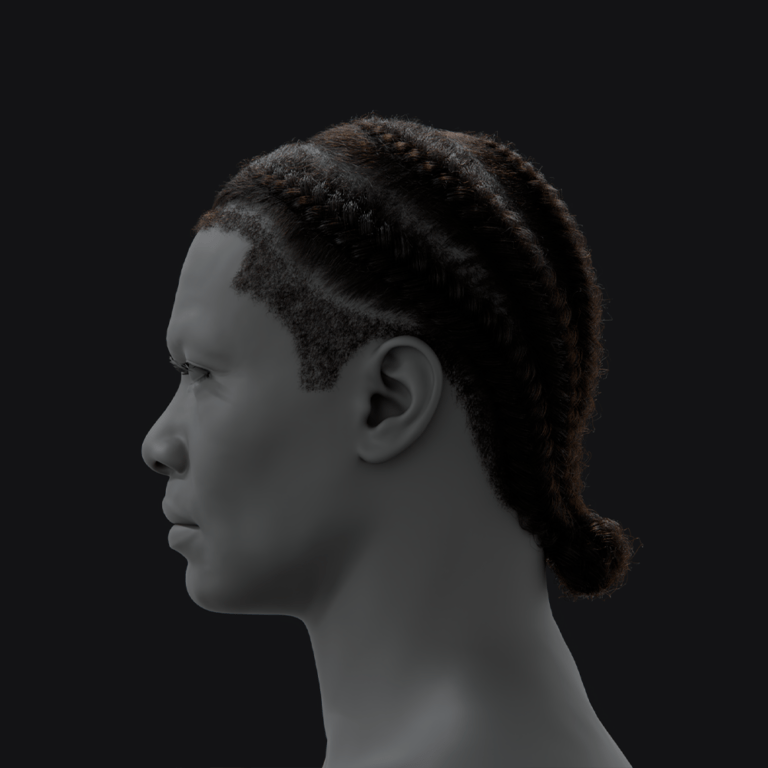

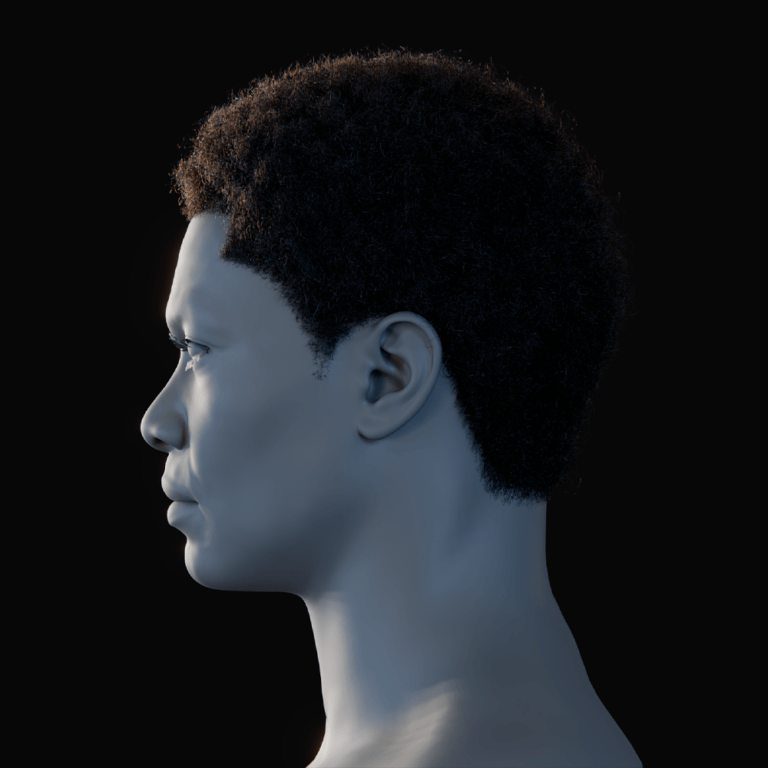

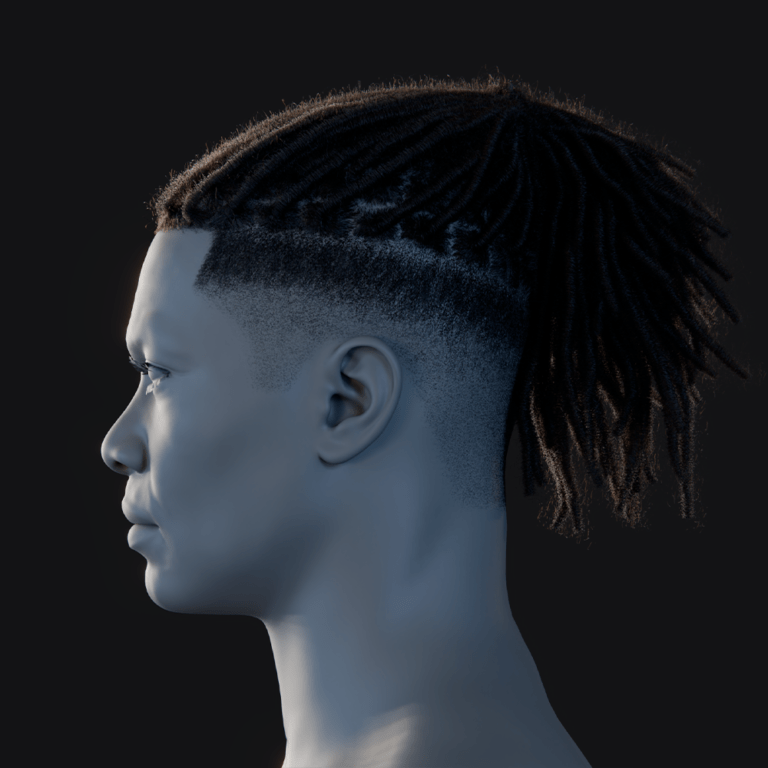

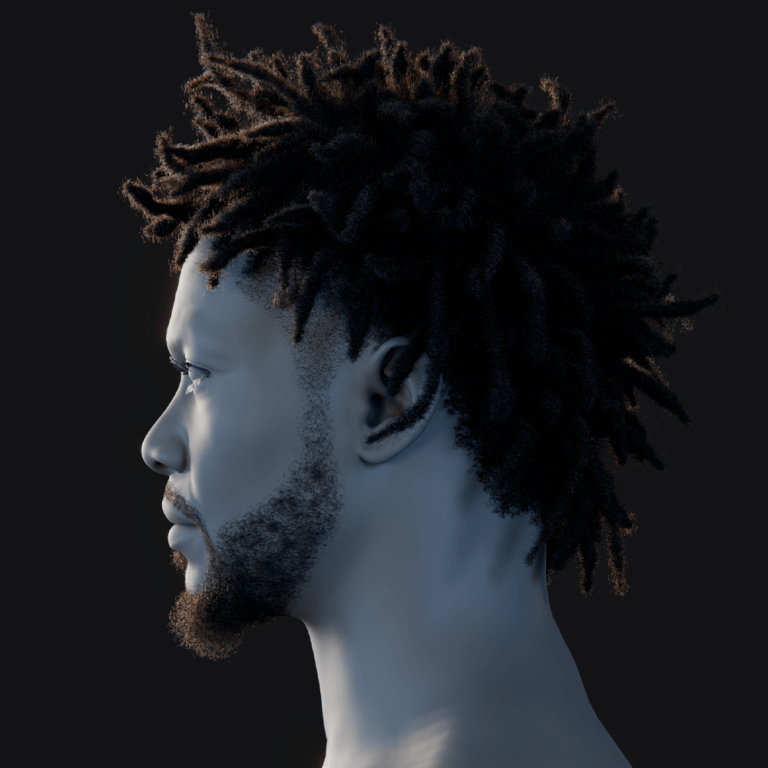

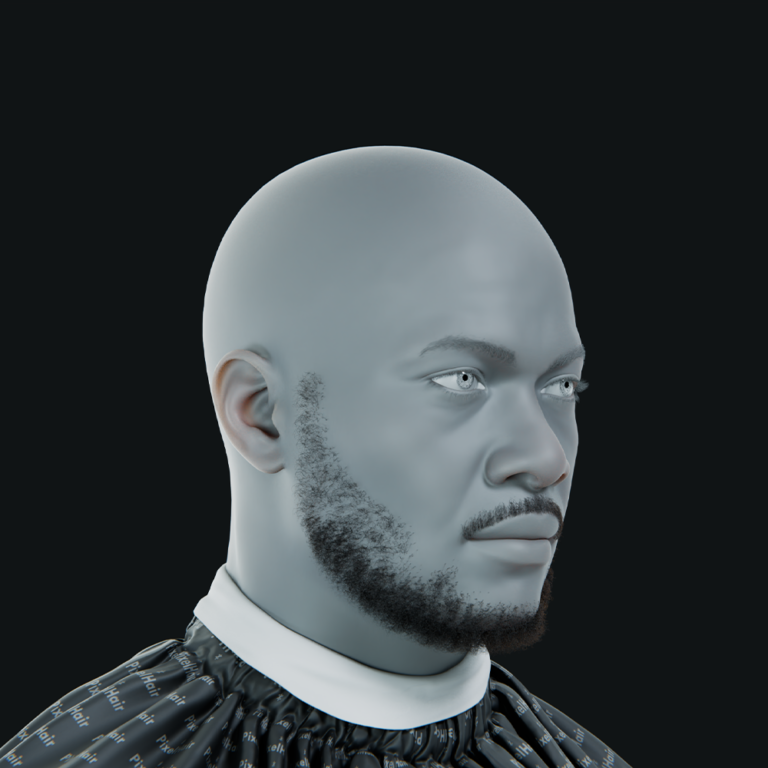

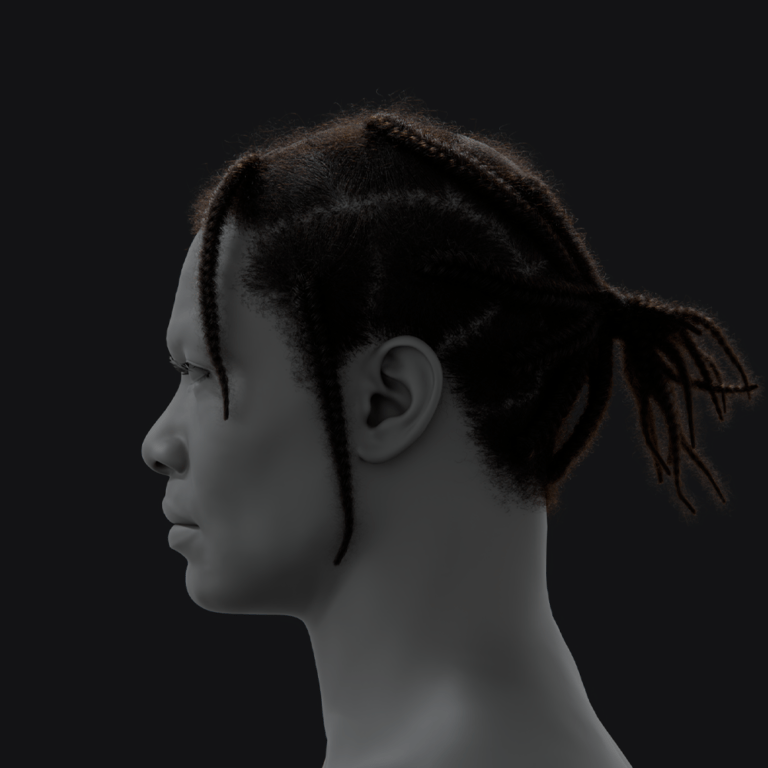

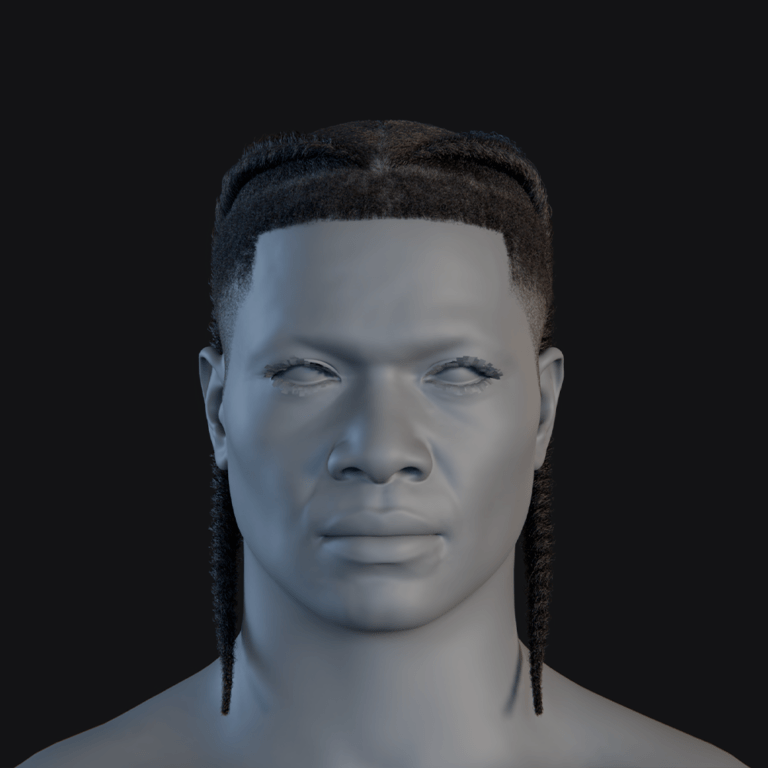

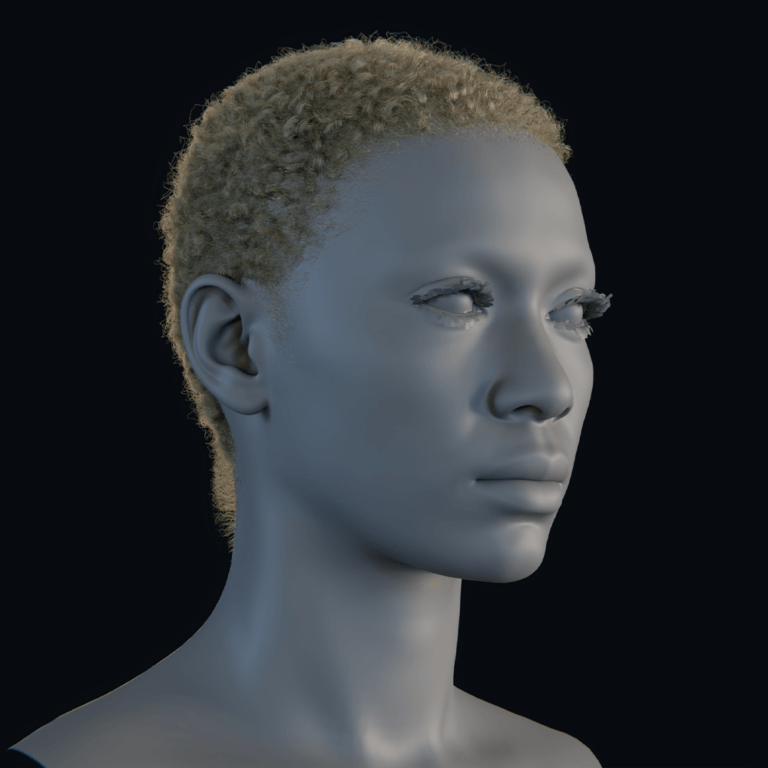

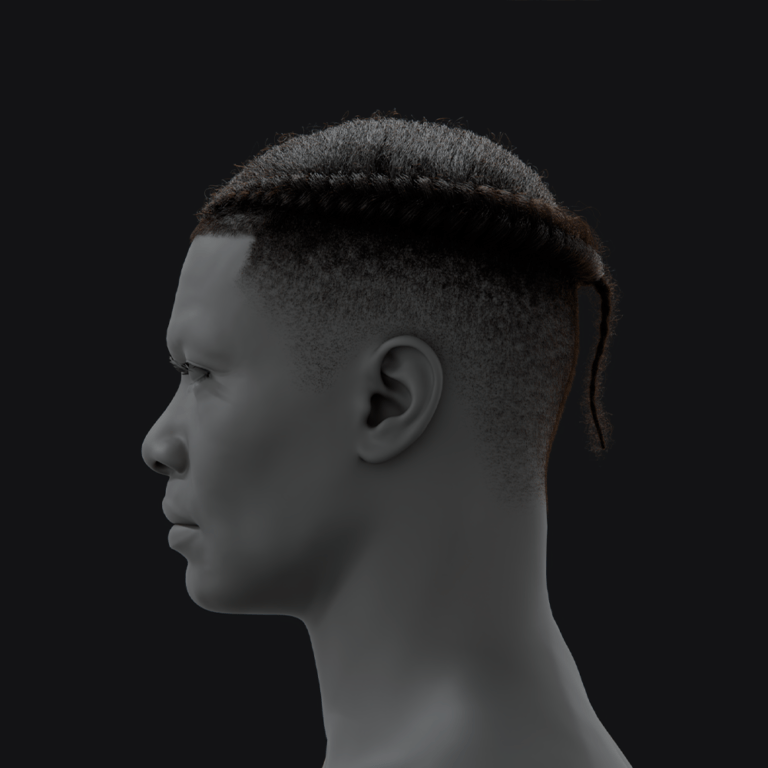

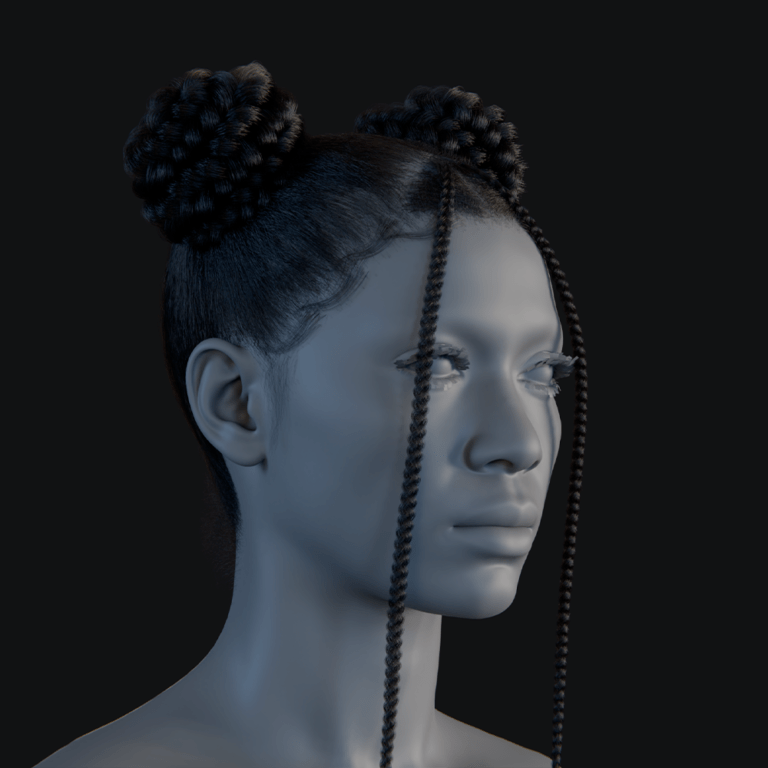

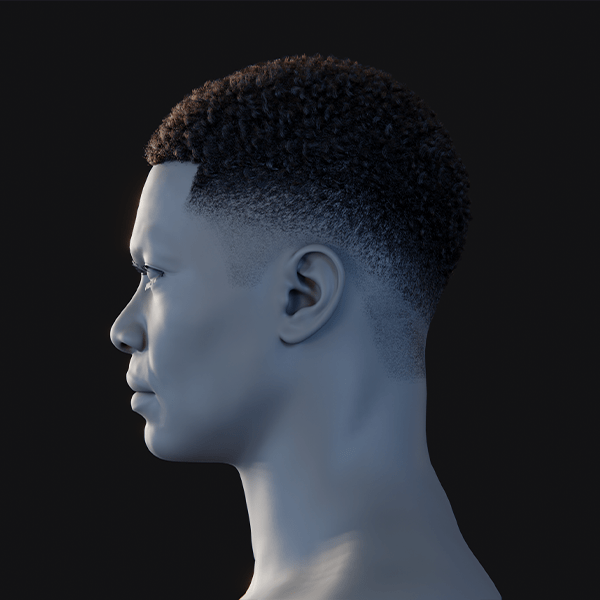

- Hair: Select from preset hairstyles, including various textures and lengths, with realistic strand-based grooms. Customize colors and add highlights for flair. Built-in options cover many styles, though advanced users can use tools like PixelHair. Eyebrows and eyelashes complement the look.

- Clothing: Choose from preset modern outfits like t-shirts or suits, with color options. For unique styles, import custom clothing modeled in Blender or Marvelous Designer into Unreal Engine. Preset wardrobes are limited but functional. Custom designs elevate your avatar’s distinctiveness.

Body proportions can be tweaked within preset human ranges. While clothing customization may require extra steps, Unreal Engine’s ecosystem supports unique designs, ensuring your alter ego’s appearance is fully personalized.
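Preset blending combined with a “plausible range” constraint can be illustrated with a small Python sketch: a weighted average of face parameters followed by clamping. MetaHuman Creator does not expose its internals this way. The parameter names, ranges, and helper functions below are hypothetical and serve only to show the idea.

```python
from typing import Dict, List, Tuple

Params = Dict[str, float]

# Hypothetical normalized face parameters and their plausible ranges.
PLAUSIBLE_RANGES: Dict[str, Tuple[float, float]] = {
    "nose_width": (0.2, 0.8),
    "jaw_width": (0.25, 0.85),
    "eye_spacing": (0.3, 0.7),
}


def blend_presets(presets: List[Params], weights: List[float]) -> Params:
    """Weighted average of preset parameters; weights are normalized to sum to 1."""
    if not presets or len(presets) != len(weights):
        raise ValueError("need one weight per preset")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return {
        key: sum(w * preset[key] for preset, w in zip(presets, weights)) / total
        for key in presets[0]
    }


def clamp_to_plausible(params: Params,
                       ranges: Dict[str, Tuple[float, float]] = PLAUSIBLE_RANGES) -> Params:
    """Clamp each parameter into its plausible range (default range is [0, 1])."""
    clamped: Params = {}
    for key, value in params.items():
        low, high = ranges.get(key, (0.0, 1.0))
        clamped[key] = min(max(value, low), high)
    return clamped


if __name__ == "__main__":
    preset_a = {"nose_width": 0.3, "jaw_width": 0.9, "eye_spacing": 0.5}
    preset_b = {"nose_width": 0.7, "jaw_width": 0.8, "eye_spacing": 0.4}
    face = clamp_to_plausible(blend_presets([preset_a, preset_b], [0.75, 0.25]))
    print(face)  # jaw_width is clamped to 0.85
```

Clamping after blending, rather than before, guarantees the final face stays in range even when extreme presets are mixed.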

How can PixelHair be used to create unique hairstyles for your MetaHuman alter ego in the Metaverse?

PixelHair enhances MetaHuman hairstyles with unique, realistic options via Blender and Unreal Engine. Here’s how it works:

- Apply PixelHair in Blender: Start from one of PixelHair’s premade hairstyles, which are fitted to a base head, and adjust it to match your MetaHuman’s scalp. The included hair cap helps the groom sit closely on the head. Styles include braids, afros, and more, with customizable strands. Export the result as an Alembic groom file for Unreal.

- Import to Unreal Engine: Attach the exported groom to your MetaHuman’s head via the Groom component. PixelHair’s realistic, physics-enabled hair adds variety not found in MetaHuman’s library. It’s optimized for performance with LOD options. This creates a signature look for your avatar.

PixelHair’s versatility allows culturally specific or stylized hairstyles, enhancing your alter ego’s identity. While performance considerations apply, its optimized assets ensure compatibility, making it ideal for distinctive metaverse avatars.
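Hair LODs are commonly built by dropping a share of the strands and thickening the rest so the hair still reads as full at a distance; Unreal’s groom assets offer LOD settings along these lines. The Python sketch below shows the idea on a hypothetical `Strand` type. It is not PixelHair’s or Unreal’s implementation, just an illustration of strand decimation with width compensation.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Strand:
    points: List[Point]  # control points along the strand (hypothetical type)
    width: float         # strand width; units depend on the source asset


def decimate_strands(strands: List[Strand], keep_ratio: float) -> List[Strand]:
    """Keep roughly `keep_ratio` of the strands and widen the survivors.

    Scaling widths by (original count / kept count) keeps the summed strand
    width, a rough proxy for visual coverage, approximately constant.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    step = max(1, round(1.0 / keep_ratio))
    kept = strands[::step]  # uniform decimation: keep every `step`-th strand
    if not kept:
        return []
    scale = len(strands) / len(kept)
    return [Strand(points=s.points, width=s.width * scale) for s in kept]


if __name__ == "__main__":
    hair = [Strand(points=[(0.0, 0.0, 0.0), (0.0, 0.0, 1.0)], width=0.01)
            for _ in range(100)]
    lod1 = decimate_strands(hair, 0.25)
    print(len(lod1), lod1[0].width)
```

Uniform decimation is the simplest choice; a production tool might instead pick strands by screen coverage or curvature.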

Can I create a stylized or fantasy alter ego using MetaHuman Creator?

MetaHuman Creator focuses on realistic human avatars, limiting stylization to human-like features. Creating cartoonish or non-human characters (e.g., elves, animals) isn’t directly possible, but you can achieve some stylization:

- Pushing the Presets: Blend presets and use extreme slider values for unique, human-like looks. Apply metallic makeup or fantasy eye colors for flair. This keeps the face realistic but distinctive. It’s ideal for subtle stylization within human bounds.

- Custom Mesh Import (Mesh to MetaHuman): Import a moderately stylized humanoid mesh (e.g., a caricatured face) and let Mesh to MetaHuman fit it to the MetaHuman rig. Non-humanoid meshes may fail or look odd. Sculpting the source mesh requires an external tool such as ZBrush, so this route suits advanced users.

- Stylized Rendering: Apply cel-shading or custom materials in Unreal Engine for a cartoon or anime-like appearance. The underlying model remains realistic, but rendering alters the style. This achieves artistic looks without changing proportions. It’s effective for visual stylization.

For non-human fantasy characters, use separate 3D modeling tools. MetaHuman excels for realistic alter egos, but future updates may support broader stylization.
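Cel-shading usually works by quantizing the diffuse lighting term into a few flat bands. In Unreal this logic would live in a material or post-process material. The Python sketch below only demonstrates the math, and the `toon_intensity` helper with its default of three bands is an illustrative choice.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    if length == 0:
        raise ValueError("zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def toon_intensity(normal: Vec3, light_dir: Vec3, bands: int = 3) -> float:
    """Quantize Lambertian diffuse (N dot L) into `bands` flat levels in [0, 1]."""
    if bands < 2:
        raise ValueError("need at least two bands")
    n, l = normalize(normal), normalize(light_dir)
    lambert = max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])
    # floor() produces hard-edged steps instead of a smooth gradient.
    band = min(math.floor(lambert * bands), bands - 1)
    return band / (bands - 1)


if __name__ == "__main__":
    up = (0.0, 0.0, 1.0)
    for light in [(0.0, 0.0, 1.0), (3 ** 0.5, 0.0, 1.0), (1.0, 0.0, 0.0)]:
        print(light, toon_intensity(up, light))
```

Because the surface geometry is untouched, the same MetaHuman can switch between realistic and stylized looks purely through shading.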

How do I export my MetaHuman character for use in Metaverse platforms?

Exporting a MetaHuman for non-Unreal platforms is possible but restricted by technical and licensing challenges. Here’s the process and limitations:

- Technical Export Process: Export MetaHuman components (mesh, skeleton) from Unreal Engine as FBX files for use in other software or engines. The export can include animation data and blendshapes. The process itself is straightforward, but the result needs further optimization, and compatibility varies by platform.

- Optimization for Other Platforms: Reduce polygon count by using lower-LOD meshes, cut down material slots, and trim the bone count to fit each platform’s performance budget (VRChat, for example, ranks avatars partly by bone count). Convert facial rigs to simpler blendshapes, and retarget to a standard humanoid rig where needed. These steps keep the avatar usable on resource-constrained platforms.

- Platform SDKs: Some platforms like Spatial or VRChat allow custom avatar imports via Unity SDKs, but MetaHumans require heavy optimization. Most users opt for platform-native avatar systems. The process is complex and not officially supported. Platform-specific requirements add challenges.

- Licensing and Restrictions: Epic’s MetaHuman license has historically required MetaHumans to be used with Unreal Engine, which rules out deploying them in other engines like Unity or on platforms like VRChat. Exporting to other tools for editing is allowed, but not for final deployment elsewhere. Terms can change, so always check Epic’s current license and FAQ.

- Practical Workarounds: Recreate a similar avatar using cross-platform tools like Ready Player Me for non-Unreal platforms. Alternatively, stream Unreal’s MetaHuman render as a video feed to comply with licensing. This maintains a consistent identity. It’s a legal and practical solution.

MetaHumans are best used in Unreal-based metaverse projects, where they integrate seamlessly. For other platforms, technical and legal barriers make alternative avatar systems more practical.
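Before attempting a port, it helps to check an exported avatar’s stats against the target platform’s performance budget. This Python sketch shows such a check. The `AvatarStats` type and the budget numbers are placeholders for illustration, not any platform’s published limits, so substitute the figures from the platform’s own documentation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AvatarStats:
    triangles: int
    bones: int
    material_slots: int


# Placeholder budgets for illustration only; replace with real platform limits.
BUDGETS: Dict[str, AvatarStats] = {
    "desktop_vr": AvatarStats(triangles=70_000, bones=256, material_slots=8),
    "mobile_vr": AvatarStats(triangles=20_000, bones=150, material_slots=2),
}


def over_budget(stats: AvatarStats, budget: AvatarStats) -> List[str]:
    """List every stat that exceeds the budget, e.g. 'bones: 300 > 256'."""
    problems: List[str] = []
    for field in ("triangles", "bones", "material_slots"):
        actual, limit = getattr(stats, field), getattr(budget, field)
        if actual > limit:
            problems.append(f"{field}: {actual} > {limit}")
    return problems


if __name__ == "__main__":
    exported = AvatarStats(triangles=60_000, bones=300, material_slots=10)
    for platform, budget in BUDGETS.items():
        print(platform, over_budget(exported, budget) or "within budget")
```

Running the check per platform makes it clear which optimization steps (LOD reduction, bone trimming, material merging) each target actually needs.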

Is MetaHuman compatible with Metaverse-ready environments like VRChat or Spatial?

MetaHumans are optimized for Unreal Engine, not natively compatible with platforms like VRChat or Spatial due to technical and licensing issues. Here’s the breakdown:

- VRChat (Unity-based): VRChat’s Unity-based avatar system and strict performance limits make MetaHumans impractical, Epic’s license prohibits use outside Unreal, and optimization is complex. Most users choose avatars built for VRChat’s pipeline, such as converted VRoid models or Ready Player Me avatars. MetaHumans are neither legally nor technically supported there.

- Spatial (Web/Unity-based): Spatial supports custom avatars via Unity, but MetaHumans require significant optimization and violate Epic’s license. Spatial’s default Ready Player Me avatars are preferred for ease. High-fidelity MetaHumans are impractical for web/mobile. Alternative avatar systems are recommended.

Other platforms like Mozilla Hubs or Decentraland use simpler avatar formats, incompatible with MetaHumans’ complexity. For Unreal-based environments, MetaHumans excel, but for broader metaverse compatibility, use platform-specific avatars or emerging cross-platform solutions.

Can I animate my MetaHuman alter ego with real-time facial tracking or mocap?

MetaHumans support real-time animation via motion capture (mocap) for lifelike performances. Here’s how to animate your alter ego:

- Facial Tracking (Live): Use Epic’s Live Link Face app on an iPhone with a TrueDepth camera to stream facial movements to Unreal Engine. Webcam-based alternatives such as Faceware also exist. For recorded performances, MetaHuman Animator processes captured footage into high-fidelity facial animation. These options enable expressive performances, live or recorded.

- Body Motion Capture: Retarget mocap data from systems such as Xsens suits or Vive trackers to MetaHuman’s standard skeleton. Unreal’s Control Rig and IK tools help keep movements accurate, and pre-recorded or VR-based tracking also works. This captures full-body actions.

- Hands and Fingers: Devices like Leap Motion or data gloves animate MetaHuman hands for detailed interactions. Finger animations can be predefined if not captured. This adds realism to close-up gestures. It’s optional but enhances immersion.

- Audio-to-Facial (Speech Animation): Tools like Nvidia’s Audio2Face or Oculus Lipsync generate lip-sync from audio input, animating expressions for pre-recorded or real-time speech. This suits scripted or automated dialogue and avoids the need for live capture.
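At its simplest, retargeting starts with a bone-name map from the mocap skeleton to the target skeleton. The Python sketch below assumes BVH-style source names such as `Hips` and `LeftUpLeg` and UE5-mannequin-style target names such as `pelvis` and `thigh_l`; verify both sides against your actual skeletons. It only renames per-bone rotations, whereas a real retarget, such as Unreal’s IK Retargeter, also compensates for rest-pose and proportion differences.

```python
from typing import Dict, List, Tuple

Rotation = Tuple[float, float, float]  # e.g. Euler angles in degrees

# Illustrative mapping: BVH-style source names -> UE5-mannequin-style names.
BONE_MAP: Dict[str, str] = {
    "Hips": "pelvis",
    "Spine": "spine_01",
    "Neck": "neck_01",
    "Head": "head",
    "LeftUpLeg": "thigh_l", "LeftLeg": "calf_l", "LeftFoot": "foot_l",
    "RightUpLeg": "thigh_r", "RightLeg": "calf_r", "RightFoot": "foot_r",
    "LeftArm": "upperarm_l", "LeftForeArm": "lowerarm_l", "LeftHand": "hand_l",
    "RightArm": "upperarm_r", "RightForeArm": "lowerarm_r", "RightHand": "hand_r",
}


def retarget_frame(frame: Dict[str, Rotation],
                   bone_map: Dict[str, str] = BONE_MAP
                   ) -> Tuple[Dict[str, Rotation], List[str]]:
    """Rename one frame of per-bone rotations; report source bones with no mapping."""
    retargeted: Dict[str, Rotation] = {}
    unmapped: List[str] = []
    for source_bone, rotation in frame.items():
        target = bone_map.get(source_bone)
        if target is None:
            unmapped.append(source_bone)
        else:
            retargeted[target] = rotation
    return retargeted, unmapped


if __name__ == "__main__":
    frame = {"Hips": (0.0, 0.0, 0.0), "LeftHand": (10.0, 0.0, 0.0), "Tail": (1.0, 2.0, 3.0)}
    print(retarget_frame(frame))
```

Reporting unmapped bones instead of silently dropping them makes gaps in the map easy to spot during setup.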

Combining facial and body mocap creates fully expressive avatars for streaming or virtual events. Even without gear, manual animations or marketplace assets work, leveraging MetaHuman’s industry-standard rigging for versatile, realistic performances.
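Live facial tracking such as Live Link Face delivers per-frame blendshape weights (ARKit names like `jawOpen` or `eyeBlinkLeft`), and raw values can jitter. A common remedy is an exponential moving average, sketched below in Python per blendshape. The `BlendshapeSmoother` class and its `alpha` default are illustrative and not part of Live Link; in practice you might apply similar filtering inside the engine.

```python
from typing import Dict


class BlendshapeSmoother:
    """Exponential moving average over per-frame blendshape weights in [0, 1]."""

    def __init__(self, alpha: float = 0.5) -> None:
        # Higher alpha follows new values faster; lower alpha smooths more.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Dict[str, float] = {}

    def update(self, frame: Dict[str, float]) -> Dict[str, float]:
        """Blend one frame of raw weights into the running state and return it."""
        for name, raw in frame.items():
            value = min(max(raw, 0.0), 1.0)  # clamp tracker noise into range
            previous = self._state.get(name)
            if previous is None:
                self._state[name] = value  # first sample: no history yet
            else:
                self._state[name] = previous + self.alpha * (value - previous)
        return dict(self._state)


if __name__ == "__main__":
    smoother = BlendshapeSmoother(alpha=0.5)
    for raw in (0.0, 1.0, 1.0, 1.0):
        print(smoother.update({"jawOpen": raw})["jawOpen"])
```

The trade-off is latency: stronger smoothing hides jitter but makes the avatar react a few frames later.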

How do I bring my MetaHuman alter ego into a multiplayer Metaverse experience?

MetaHumans can be used in multiplayer Unreal Engine projects but face limitations in existing metaverse platforms. Here’s how:

- Your Own Multiplayer Project (Unreal Engine): Import MetaHumans as player characters and use Blueprints with Unreal’s replication system to network movement and animations. Use LODs to keep performance scalable. This works for custom virtual worlds, and Fortnite’s UEFN integration suggests the approach is feasible.

- Existing Multiplayer Metaverse Platforms: Platforms like VRChat or Horizon Worlds don’t support MetaHumans due to avatar system restrictions and Epic’s licensing. Workarounds include streaming MetaHuman renders as video. Unreal-based platforms are the best fit. Alternative avatars are needed elsewhere.

For Unreal projects, MetaHumans integrate like standard characters, supporting multiplayer with optimized performance. For external platforms, use platform-native avatars, as MetaHumans are restricted by technical and legal barriers until interoperable standards emerge.
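When replicating a MetaHuman’s facial state over the network, sending every curve as a 32-bit float is wasteful. One common technique is to quantize each weight to a single byte, as in the Python sketch below. The curve list and helper functions are hypothetical; an Unreal project would implement the equivalent in its replicated properties or RPCs.

```python
from typing import Dict, List

# Hypothetical fixed curve order that sender and receiver agree on.
CURVE_ORDER: List[str] = [
    "jawOpen", "eyeBlinkLeft", "eyeBlinkRight", "mouthSmileLeft", "mouthSmileRight",
]


def pack_curves(values: Dict[str, float], order: List[str] = CURVE_ORDER) -> bytes:
    """Quantize each weight in [0, 1] to one byte (0..255); missing curves send 0."""
    return bytes(
        round(min(max(values.get(name, 0.0), 0.0), 1.0) * 255) for name in order
    )


def unpack_curves(payload: bytes, order: List[str] = CURVE_ORDER) -> Dict[str, float]:
    """Restore approximate weights; the error per curve is at most 1/255."""
    if len(payload) != len(order):
        raise ValueError("payload length does not match curve order")
    return {name: byte / 255 for name, byte in zip(order, payload)}


if __name__ == "__main__":
    payload = pack_curves({"jawOpen": 1.0, "eyeBlinkLeft": 0.5})
    print(len(payload), "bytes:", unpack_curves(payload))
```

Here five curves cost 5 bytes instead of 20, and the one-byte precision is usually imperceptible on a face viewed by other players.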

Can I use voice AI or speech synthesis with my MetaHuman alter ego?

MetaHumans can use AI voice or speech synthesis for convincing dialogue, ideal for virtual influencers or NPCs. Here’s how:

- AI Voice Generation: Services like Replica Studios generate natural-sounding voices from text and integrate with MetaHuman for accurate lip-sync, while other TTS tools such as Amazon Polly require you to handle the sync yourself. This creates distinct voices without recording sessions and streamlines scripted content.

- Real-time Voice AI: Combine text generation (e.g., GPT-4) with TTS for interactive chatbot-like MetaHumans. Lip-sync may have slight delays but is feasible. This enables dynamic conversations. It’s advanced but achievable with plugins.

Voice AI allows flexible voice characteristics, from warm to authoritative, or even cloned voices (used only with the speaker’s consent). Replica’s MetaHuman integration provides realistic lip-sync, so your alter ego can host videos or converse in multiple languages without a voice actor.
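When you handle lip-sync yourself, a typical step is converting timed phonemes from the TTS service into visemes (mouth shapes). The Python sketch below uses a simplified ARPAbet-to-viseme table with the 15 viseme labels of Oculus Lipsync. The table is deliberately incomplete, unknown phonemes fall back to silence, and the timing input is assumed to come from your TTS service.

```python
from typing import Dict, List, Tuple

# Simplified, incomplete ARPAbet -> viseme table (Oculus Lipsync viseme labels).
PHONEME_TO_VISEME: Dict[str, str] = {
    "P": "PP", "B": "PP", "M": "PP",
    "F": "FF", "V": "FF",
    "TH": "TH", "DH": "TH",
    "T": "DD", "D": "DD",
    "K": "kk", "G": "kk",
    "CH": "CH", "JH": "CH", "SH": "CH", "ZH": "CH",
    "S": "SS", "Z": "SS",
    "N": "nn", "L": "nn",
    "R": "RR",
    "AA": "aa", "AE": "aa", "AH": "aa",
    "EH": "E",
    "IH": "ih", "IY": "ih",
    "AO": "oh", "OW": "oh",
    "UH": "ou", "UW": "ou",
}

Timed = Tuple[str, float, float]  # (label, start_seconds, end_seconds)


def phonemes_to_visemes(phonemes: List[Timed]) -> List[Timed]:
    """Map timed phonemes to visemes, merging back-to-back identical visemes."""
    visemes: List[Timed] = []
    for phoneme, start, end in phonemes:
        # Strip ARPAbet stress digits (e.g. "AA1" -> "AA") before lookup.
        key = phoneme.upper().rstrip("012")
        viseme = PHONEME_TO_VISEME.get(key, "sil")
        if visemes and visemes[-1][0] == viseme and visemes[-1][2] == start:
            visemes[-1] = (viseme, visemes[-1][1], end)
        else:
            visemes.append((viseme, start, end))
    return visemes


if __name__ == "__main__":
    print(phonemes_to_visemes([("M", 0.0, 0.1), ("AA1", 0.1, 0.25), ("M", 0.25, 0.35)]))
```

Merging adjacent identical visemes avoids restarting the same mouth shape, which otherwise reads as a flutter on camera.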

What are the benefits of using MetaHuman for identity and expression in the Metaverse?

Using a MetaHuman avatar for your digital identity offers several significant benefits in terms of realism, expressiveness, and personal branding in the Metaverse:

- Unparalleled Realism and Emotional Expressiveness: MetaHumans capture subtle expressions like smiles or frowns, enhancing emotional connections in virtual interactions. This realism makes your avatar’s body language and facial cues feel natural, ideal for meetings or therapy. It outperforms cartoon avatars by making conversations more lifelike and engaging. The technology narrows the gap between physical and digital communication effectively.

- Personalization and Identity Authenticity: MetaHumans can mirror your real self or a desired identity with detailed customization of features. This authenticity aids recognition across platforms and boosts confidence through true representation. You can reflect heritage, style, or gender accurately in a virtual space. It empowers users to express their ideal selves authentically.

- High-Quality Content Creation: MetaHumans offer cinematic-quality models for creators, perfect for videos or streams. They enable storytelling with realistic visuals using mocap and voice tools, all while preserving privacy. This allows you to become a virtual influencer with professional-grade output. Their realism rivals top virtual influencers, enhancing creative potential.

- Professional and Brand Opportunities: MetaHumans serve as virtual ambassadors or service reps, opening doors for collaborations. Their lifelike presence enhances credibility at virtual events or conferences over cartoon avatars. Brands already use similar models for campaigns, signaling growing opportunities. It’s like a polished digital suit for professional settings.

- Enhanced Self-Expression: MetaHumans let you design appearances unbound by real-world limits, like neon hair. This freedom supports creativity, gender expression, and cultural identity realistically. The avatar becomes a tangible canvas for personal exploration. It liberates users to express hidden facets believably.

- Interoperability of Identity (Future Potential): MetaHumans could standardize realistic avatars across platforms, aided by blockchain. Early adoption prepares your identity for future portability and consistency. It positions you for when interoperability becomes widespread. This foresight ensures a cohesive virtual presence long-term.

In essence, MetaHumans provide realistic expression and creative freedom, making interactions authentic and impactful. They merge real-life emotional depth with virtual versatility, fueling excitement for digital humans in the Metaverse.

How does MetaHuman compare to Ready Player Me and other Metaverse avatar tools?

There are many avatar creation tools out there, each with different strengths. MetaHuman vs. Ready Player Me is a common comparison, since Ready Player Me (RPM) is a popular platform for cross-game avatars. Here’s a breakdown of how MetaHuman stacks up against RPM and others:

- Realism and Style: MetaHuman delivers near-photographic realism and excels at emotional nuance. RPM offers a stylized, cartoonish look suited to casual gaming. VRoid favors anime styles, while Mozilla Hubs avatars are simpler still. MetaHuman’s realism can tip into the uncanny valley, a risk stylized options avoid.

- Customization and Diversity: MetaHuman provides precise facial sculpting for realistic humans but no non-human options. RPM has fewer presets yet easily supports fantastical styles like cyberpunk. VRoid excels at anime detail, and Reallusion’s Character Creator matches MetaHuman’s realism for a price. MetaHuman’s focus limits extreme diversity compared to the others.

- Cross-Platform Compatibility: RPM integrates across many apps, which makes it well suited to roaming the metaverse. MetaHuman is bound to Unreal, which significantly restricts its portability. Sansar and Second Life avatars are platform-specific, while the VRM avatar format offers style flexibility. RPM’s interoperability outshines MetaHuman’s confined ecosystem.

- Ease of Use: RPM creates avatars fast from selfies, ideal for casual users. MetaHuman needs more time and Unreal skills, targeting creators. Its workflow is professional, unlike RPM’s simple web interface. Apps like Zepeto also prioritize quick, stylized creation.

- Capability and Animation: MetaHumans support advanced mocap and subtle expressions for film-quality work, while RPM provides basic rigs for simpler, less detailed animation. MetaHuman’s complexity hampers portability but enables richer performances, whereas RPM suits lightweight multiplayer environments better.

- Content and Accessories: RPM includes a growing library of NFT and branded outfits. MetaHuman’s options are limited but customizable in Unreal with effort. RPM caters to casual fashion fans; MetaHuman needs manual work. IMVU and Second Life offer similar built-in variety.

MetaHuman prioritizes high realism and quality within Unreal, while RPM excels in simplicity and cross-platform use. Your choice depends on valuing depth or convenience, with potential to use both strategically.

Can I use Unreal Engine to build Metaverse spaces for my MetaHuman alter ego?

Yes – Unreal Engine 5 is a powerful tool not just for avatars but for creating entire Metaverse spaces and experiences. In fact, many of the most visually impressive “metaverse” demos and projects have been built with Unreal. If you have a MetaHuman alter ego, you can absolutely build a virtual world or environment in Unreal for that avatar to inhabit and for others to join.

Unreal Engine 5 empowers you to craft detailed Metaverse spaces like nightclubs or fantasy worlds for your MetaHuman, using its top-tier rendering and physics. You can create multiplayer experiences with full control over lighting and interactivity, beyond what most web-based platforms allow. MetaHumans integrate natively, ensuring a seamless, high-quality look across the environment. Virtual events can be hosted via cloud rendering or Pixel Streaming, so attendees can join without installing Unreal. Plugins add social features like voice chat, enhancing immersion.

The engine’s flexibility supports unique mechanics and blockchain integration for NFT assets, though it demands development skills. Tools like UEFN hint at future ease, already using MetaHumans as NPCs. Unreal’s realism sets your alter ego’s world apart from simpler platforms. Its use in pioneering projects ensures a visually stunning, immersive experience for your MetaHuman.

How do I integrate my MetaHuman with blockchain or NFT-based identity systems?

Integrating a MetaHuman avatar with blockchain or NFTs is an emerging area in the metaverse space, combining digital identity with decentralized ownership. While MetaHumans themselves are not inherently blockchain-enabled, you can use external systems to link your avatar or its attributes to blockchain assets. Here are some ways and considerations:

- Avatar as an NFT (Digital Asset): Minting your MetaHuman as an NFT creates an on-chain ownership record tied to its unique design data. The token does not stop the underlying files from being copied, but platforms that support NFT avatars could use it to verify and import your avatar. Support is limited today, though NFT avatars are a growing concept aimed at a portable, verifiable digital identity.

- Wearables and Accessories as NFTs: Attach NFT clothing to your MetaHuman, verified by blockchain ownership. This enables a portable wardrobe across experiences with custom Unreal logic. Brands like Nike issue such wearables, enhancing your avatar’s style. It’s already seen in other platforms’ ecosystems.

- Identity Tokens and Login: Link your MetaHuman to a decentralized identity, such as a DID or wallet address, for verification. A platform could then auto-load your avatar or customizations when you log in with your wallet. This back-end approach secures and personalizes platform access.

- Selling and Trading Avatars or Looks: You could mint and sell your MetaHuman as an NFT, subject to Epic’s license terms. This mirrors avatar trading in VRChat or Second Life with added transparency, and the rising value of 3D avatar art offers potential economic benefits.

Integrating MetaHumans with blockchain is possible but not seamless, aligning with trends toward owned digital identities. NFTs and blockchain enhance ownership and portability, promising a persistent avatar presence.
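One way to tie an NFT to a specific avatar design is to store a cryptographic hash of the design data in the token’s metadata. The Python sketch below hashes a canonical JSON encoding with SHA-256 and builds metadata in the ERC-721 style (`name`, `description`, `image`), plus an `attributes` list following a common marketplace convention. The design fields and image URL are placeholders, and a matching hash only shows the data is unchanged; it does not by itself establish ownership or licensing rights.

```python
import hashlib
import json
from typing import Any, Dict


def design_fingerprint(design: Dict[str, Any]) -> str:
    """SHA-256 of a canonical JSON encoding, so key order does not change the hash."""
    canonical = json.dumps(design, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_token_metadata(name: str, design: Dict[str, Any], image_uri: str) -> Dict[str, Any]:
    """ERC-721-style metadata with the design fingerprint as an attribute."""
    return {
        "name": name,
        "description": "Avatar design record",
        "image": image_uri,
        "attributes": [
            {"trait_type": "design_sha256", "value": design_fingerprint(design)},
        ],
    }


if __name__ == "__main__":
    design = {"preset": "A", "hair": "braids", "eye_color": "amber"}  # placeholder data
    meta = build_token_metadata("My Alter Ego", design, "https://example.com/avatar.png")
    print(json.dumps(meta, indent=2))
```

Canonicalizing before hashing matters: without `sort_keys=True`, the same design serialized in a different key order would produce a different fingerprint.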

What is the role of digital fashion for MetaHuman avatars in the Metaverse?

Digital fashion is vital for personalizing your MetaHuman, reflecting identity through clothing as in real life, and leveraging their realism as fashion models. It allows outfit changes for various virtual occasions, unrestricted by physical limits, boosting creativity. Brands like Gucci create digital NFTs, making MetaHuman outfits status symbols in virtual events. Virtual fashion shows feature MetaHumans, like Eva Herzigová’s digital twin, showcasing apparel effortlessly.

This fashion bridges real and virtual, letting you preview styles or collect wardrobes, including rare NFT drops, for a unique avatar style. Subcultures use it to signal identity, aided by realistic cloth tools such as Marvelous Designer and Unreal’s Chaos Cloth simulation. Examples like Balenciaga’s digital models show its growing value. For your MetaHuman, digital fashion offers versatile, low-stakes expression and positions it as a key player in the metaverse fashion scene.

How can I use my MetaHuman alter ego in social media or virtual influencer content?

Your MetaHuman alter ego can become a social media persona, rendered in Unreal for striking Instagram posts or TikTok videos, mimicking virtual influencers like Lil Miquela. You can animate it for short clips or livestreams via OBS, offering privacy and engagement on platforms like Twitch. It opens brand collaboration opportunities, as seen with fashion partnerships, leveraging its controlled appeal. Blend it with real-world content or build a narrative to deepen audience connection.

This approach requires creativity but taps into a growing virtual influencer trend, with examples like AI Angel showing audience potential. A MetaHuman offers high-fidelity uniqueness, enhancing privacy and flexibility. It’s an artistic way to extend your digital presence innovatively across social platforms.

Can MetaHuman avatars be used for livestreaming or virtual meetings?

Yes, MetaHuman avatars can be used in livestreams and virtual meetings – essentially as a real-time stand-in for your live video feed. This is a growing practice often referred to as “Virtual YouTubing” (VTubing) or simply avatar-based telepresence. The idea is that instead of people seeing your real face on Zoom or Twitch, they see your animated MetaHuman.

You can livestream as your MetaHuman using Unreal and OBS, capturing real-time animations for Twitch, offering a novel, expressive persona. For meetings, it feeds into Zoom via virtual camera tools, reducing fatigue with a futuristic flair. It requires a strong PC and setup, with slight latency manageable for interaction. Benefits include privacy and a human-like presence, though acceptance varies.

The trend is gaining traction, with Microsoft exploring simpler avatars, suggesting a future of blended virtual-real meetings. Using a MetaHuman now showcases innovation, hinting at evolving remote engagement with meaningful avatar-driven interaction.

Where can I see examples of MetaHuman alter egos being used in Metaverse projects?

Several projects showcase MetaHumans in metaverse scenarios, inspiring their use as alter egos:

- Virtual K-pop Band MAVE:: MAVE:, a South Korean virtual group, uses MetaHumans for realistic performances in music videos. Their debut garnered millions of views, engaging fans via social media and TV. The choice highlights MetaHuman’s animation fidelity for entertainment. They rival human performers in audience impact.

- AYAYI – Chinese Virtual Influencer: AYAYI, a lifelike virtual influencer, partners with brands like Porsche to build her digital persona. With a huge following, she has attended events via hologram, showing what a MetaHuman-based persona could achieve. She exemplifies digital marketing and community engagement, and her success suggests MetaHumans are viable for influencer work.

- MetaHuman NPCs in Fortnite Creative: Epic’s UEFN uses MetaHumans as NPCs in Fortnite for storytelling. Demos like Jokenite show their cinematic integration in games. This blends realism into a cartoonish metaverse effectively. It hints at future player avatar use.

- Somnium Space and High-Fidelity Avatars: Somnium demos detailed avatars, with users testing MetaHumans in VR unofficially. This aligns with realistic alter ego goals in social VR. It shows enthusiast interest in high-fidelity applications. MetaHumans could expand here officially.

- Virtual Events and Concerts: MetaHumans appear in virtual concerts, like Epic’s song demo, enabling performances. Platforms like Wave XR use similar tech for VR shows. They offer a stage for your alter ego to perform. This taps into entertainment’s digital shift.

- Corporate Training and Simulation: Unreal simulations use MetaHumans as role-play characters, like medical patients. They enhance training realism over other digital people solutions. Your alter ego could instruct in such setups. It’s a practical metaverse application.

- Indie Metaverse Projects: Indie demos feature MetaHumans, like VR bartenders or ChatGPT hybrids. These experiments populate YouTube with interactive concepts. They extend to Unreal short films as narrative actors. It’s a grassroots metaverse exploration.

- Media and Advertising: MetaHumans have hosted TV segments and starred in ads as brand ambassadors. Although these are mostly one-off projects, they show the technology spreading beyond games and suggest more ways an alter ego could appear in media.

To see these examples, watch MAVE:'s videos on YouTube, follow news coverage of AYAYI, or browse Epic's showcases of MetaHumans in entertainment and games. Adopting the technology early puts you at the leading edge of this trend.

What are the future trends for digital alter egos and virtual identity using MetaHuman?

The landscape of digital alter egos and virtual identity is rapidly evolving, and MetaHuman is at the forefront of this movement. Looking to the future, several trends are emerging:

- Greater Realism and Ubiquity of Virtual Identities: Virtual alter egos may become as common as social media profiles, with MetaHumans approaching human realism. AI-driven conversation could make interactions feel lifelike, and avatars could act as digital twins, blurring the line between human and digital. Everyday 3D personas may become the norm.

- Standardization and Interoperability: Shared standards, possibly blockchain-based, may one day make MetaHumans portable across platforms, keeping identity and ownership consistent wherever you go. Companies are already pushing NFT avatar protocols toward this goal. If these efforts succeed, persistent identities could dominate metaverse use as soon as 2026.

- Blending of Virtual and Real (Mixed Reality Identities): AR glasses could project MetaHumans as holograms into physical spaces or meetings. This “holoportation” would strengthen presence across virtual and real spaces and lower interaction barriers as AR and VR converge, letting your alter ego move between both realms seamlessly.

- AI-Driven Autonomous Avatars: AI could let MetaHumans mimic you or handle tasks on your behalf, such as attending meetings or acting as relatable companions. This points toward independent virtual beings in the metaverse that blend human and artificial social roles.

- Integration with Personal Data (Quantified Self): Avatars may reflect your health or mood using data from wearables, adapting to context. A market for enhancements such as animation packs could personalize them further. Tying your digital self to real data in this way would deepen the bond between user and avatar.

- Virtual Society and Identity Governance: Verification systems may confirm avatar identities to build trust, or formally legitimize pseudonyms. Laws may address impersonation and content rights in 3D spaces, shaping the legal framework for digital personhood and opening a new philosophical frontier.

- Improved Creation Tools and Accessibility: Smartphone scans could simplify MetaHuman creation and broaden the diversity of avatars. Faster creation would boost adoption, and non-human options may eventually emerge, moving toward avatars that are accessible to everyone.

- Entertainment and Transmedia: Your MetaHuman could star in media and earn royalties across platforms, with NFT-based portability supporting this transmedia presence. Avatars would become licensed story characters, fusing personal identity with creative output.

- Social Acceptance and Evolution of Self-Image: As avatars become normal in daily life, people may feel freer to change their appearance. Trying on diverse appearances could foster empathy and challenge fixed notions of identity, leaving creative expression unbound.

In this future metaverse, realistic MetaHumans would become everyday extensions of personal identity. With support from AI and blockchain, the shift could redefine self-expression.

Frequently Asked Questions

- Is MetaHuman Creator free to use?

MetaHuman Creator is free for anyone with an Epic Games account. You can design and download MetaHumans at no cost for Unreal Engine projects, and there is no subscription or upfront fee for the Creator tool itself. However, characters are licensed only for rendering in Unreal Engine; using them in other engines violates the EULA.

- What do I need to run MetaHuman Creator and Unreal Engine?

MetaHuman Creator runs via cloud streaming in Chrome, Edge, Firefox, or Safari on Windows or macOS with a good internet connection and an Epic Games account. To import and use your MetaHuman, you need Unreal Engine 4.26.2 or later (UE5 recommended) installed locally. A relatively powerful PC with a capable GPU and sufficient disk space is required for real-time animation and high-res textures. For live facial capture, an iPhone X or newer (TrueDepth camera) gives the best results.

- Can I use my MetaHuman avatar in Unity, VRChat, or other non-Unreal apps?

Officially, MetaHumans are licensed only for Unreal Engine use; exporting to Unity or VRChat violates the EULA. While you can technically export an FBX and import it elsewhere, this risks account penalties and often exceeds other platforms' polygon and bone limits. Hobbyists have done personal tests, but public use is prohibited. For non-Unreal avatars, recreate your design using tools like Ready Player Me or Unity-compatible workflows.

- How can I make my MetaHuman look like me or a specific person?

Start in Creator with the preset closest to your reference, then adjust sliders (eye spacing, nose, jawline) while comparing side-by-side photos. For a photorealistic likeness, scan a face via photogrammetry or an iPhone app, import the mesh into UE5, and run the Mesh to MetaHuman workflow. This transfers the scan onto a rigged head you can fine-tune (skin, hair, texture). Use a neutral expression and even lighting when scanning for best results.

- Can I create a non-human or highly stylized character with MetaHuman?

Not directly. Creator is constrained to realistic human proportions and features. You might rig a custom alien or creature head via Mesh to MetaHuman, but results vary and require extensive manual fixes. Alternatively, apply post-process effects (such as cel-shading) to a human base for a stylized look. For true non-humans or extreme stylization, use specialized avatar tools (e.g., VRoid, custom modeling).

- How do I add custom clothes or accessories to my MetaHuman?

In Unreal Engine, import or model clothing and accessory meshes (jackets, hats, glasses) and weight-paint them to the MetaHuman skeleton. Simple items such as glasses can be attached as static meshes to head bones; full outfits may require hiding the underlying body meshes. Epic's MetaHuman Scene sample, the Marketplace, and Quixel Bridge offer compatible clothing assets you can adjust in Blender or Maya. Switch outfits at runtime by enabling or disabling meshes in your Blueprint.

- Can I animate my MetaHuman if I don't have an iPhone or mocap gear?

Yes, you have multiple options:

- Manual keyframe animation: Use Sequencer or external tools (Maya, Blender) to animate the rig by hand.

- Pre-recorded libraries: Apply body animations from Marketplace or Mixamo and preset facial curves.

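- Audio-driven lip-sync: Generate lip-sync from recorded speech with tools like Daz Mimic Live, Oculus Lip Sync, NVIDIA Audio2Face, Papagayo, or FaceFX.

- Webcam tracking: Use webcam-based face trackers such as VSeeFace and route the tracking data into Unreal via Live Link or a compatible plugin.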

- Keyboard/MIDI puppeteering: Map keys or MIDI to blendshape triggers for live expression control.

- Pose assets: Create and blend predefined facial poses (smiles, visemes) in Control Rig.
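The keyboard/MIDI puppeteering and pose-asset options above share one idea: a few named facial poses are blended by weight into curve values every frame. The Python sketch below illustrates that logic outside Unreal under stated assumptions: the pose names, curve names, key bindings, and `FacePuppet` class are all hypothetical, and the curve names are placeholders rather than real MetaHuman controls. In a real project, the resulting values would still need to be fed into the face's animation curves (for example through an Anim Blueprint or a Live Link source), which is not shown here.

```python
from dataclasses import dataclass, field

# Hypothetical facial poses: each maps a curve name to its value at full strength.
# The curve names are illustrative placeholders, not real MetaHuman control names.
POSES = {
    "smile": {"mouth_corner_up_L": 1.0, "mouth_corner_up_R": 1.0, "cheek_raise": 0.6},
    "surprise": {"brow_raise": 1.0, "jaw_open": 0.5, "eye_wide": 0.8},
    "viseme_O": {"jaw_open": 0.4, "lips_pucker": 0.9},
}

# Hypothetical bindings: each key (or a MIDI note mapped to a string) toggles one pose.
KEY_BINDINGS = {"1": "smile", "2": "surprise", "3": "viseme_O"}


@dataclass
class FacePuppet:
    """Blends predefined poses into per-curve values, driven by key presses."""

    blend_speed: float = 8.0  # blend_speed * dt = fraction of the remaining gap closed per tick
    weights: dict = field(default_factory=lambda: {name: 0.0 for name in POSES})
    targets: dict = field(default_factory=lambda: {name: 0.0 for name in POSES})

    def on_key(self, key: str) -> None:
        """Toggle the pose bound to `key`; unbound keys are ignored."""
        pose = KEY_BINDINGS.get(key)
        if pose is not None:
            self.targets[pose] = 0.0 if self.targets[pose] > 0.0 else 1.0

    def tick(self, dt: float) -> dict:
        """Ease each pose weight toward its target, then sum weighted poses per curve."""
        step = min(1.0, self.blend_speed * dt)
        for name in self.weights:
            self.weights[name] += (self.targets[name] - self.weights[name]) * step
        curves = {}
        for name, weight in self.weights.items():
            for curve, value in POSES[name].items():
                curves[curve] = curves.get(curve, 0.0) + weight * value
        # Clamp so overlapping poses never push a curve outside [0, 1].
        return {curve: min(1.0, max(0.0, v)) for curve, v in curves.items()}


if __name__ == "__main__":
    puppet = FacePuppet()
    puppet.on_key("1")  # start smiling
    for _ in range(30):  # simulate half a second at 60 frames per second
        curves = puppet.tick(1 / 60)
    print({k: round(v, 2) for k, v in curves.items() if v > 0})
```

Easing toward a target instead of snapping avoids popping between expressions, and the clamp keeps overlapping poses (such as a surprise plus an "O" viseme, which both open the jaw) inside the 0 to 1 range that facial curves typically use.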

- Does using MetaHumans require coding or Unreal Engine expertise?

No coding is needed to design or pose in Creator; the tool is very artist-friendly. Basic use in Unreal (importing, Sequencer) is mostly point-and-click. For interactive or game scenarios, you'll need some Blueprint scripting and familiarity with animation Blueprints or Control Rig. Epic's sample projects, documentation, and community tutorials provide plenty of guidance.

- Can I change my MetaHuman's hair or facial hair after creation?

Yes. Open your character in MetaHuman Creator, swap hairstyles or beards, then re-download the updated version. In Unreal, hair grooms and facial-hair assets are separate components you can mix across MetaHumans. You can also tweak hair color via material parameters in UE. Community packs and PixelHair or other custom grooms extend your styling options.

- How does MetaHuman compare to other avatar creation options like Ready Player Me or Character Creator?

MetaHuman offers unmatched realism: detailed skin, hair, and micro-expression rigs, ideal for film or high-end games. However, its assets are heavy and locked to Unreal. Ready Player Me is lightweight, stylized, and cross-platform but less realistic; Character Creator (CC4) is paid and multi-engine but may require extra work to reach high fidelity. Other tools (Daz3D, MakeHuman) serve still renders or lower-fidelity pipelines. Choose based on your needs: cinematic quality, broad compatibility, or stylization.

Conclusion

Digital alter egos are central to virtual identity, and Epic’s MetaHuman in Unreal Engine 5 offers a cutting-edge way to design, animate, and deploy hyper-realistic avatars across metaverse platforms.

In this guide, we covered creating and customizing your MetaHuman alter ego (including hair with PixelHair), using it in virtual worlds and live interactions, and animating it without specialized capture gear.

We addressed compatibility and performance considerations, explained alternative animation methods, and compared MetaHuman avatars to other solutions for stylized or cross-platform uses.

With MetaHuman, users gain unprecedented fidelity and emotional expressiveness, enabling personal branding as virtual influencers, VR event participants, game characters, or professional avatars in meetings.

Despite current engine exclusivity and high-end hardware requirements, workflows are rapidly simplifying, making the vision of a seamless, connected metaverse, where your digital self travels with you, increasingly achievable.

By following these workflows and tips, you can harness MetaHuman’s blend of artistry and technology to build a rich, dynamic virtual identity that blurs the boundary between real and virtual.

Sources and Citations

- Epic Games – MetaHuman Official Documentation and FAQs (Unreal Engine): MetaHuman overview, requirements, and license details (unrealengine.com).

- Epic Games Blog – “Delivering high-quality facial animation in minutes, MetaHuman Animator is now available!” (June 2023): Announcement of MetaHuman Animator for facial capture (unrealengine.com).

- Epic Games Blog – “MetaHuman comes to UEFN, dynamic clothing with Marvelous Designer and more” (March 2024): GDC news on MetaHumans in Fortnite Creative and CLO clothing integration (unrealengine.com).

- Epic Games Spotlight – “Meet MAVE: the virtual K-pop stars created with Unreal Engine and MetaHuman” (June 2023): Case study of the virtual K-pop group MAVE: and why MetaHuman tech was used (unrealengine.com).

- Epic Developer Community Forums – MetaHuman License Q&A: Clarification that MetaHuman assets must be rendered in Unreal, not in Unity or VRChat (forums.unrealengine.com).

- YORD Studio Blog – “Expanding Brands in the Metaverse: From Avatars to Metahumans” (Oct 2023): Explains metahuman vs. avatar differences, virtual influencers, and use in video conferencing (yordstudio.com).

- Blender Market – PixelHair for Blender & UE5 Product Page: Details on PixelHair features and usage with MetaHumans (blendermarket.com).

- VentureBeat – “Replica Studios goes early access with AI voice actors for Unreal’s MetaHuman Creator” (July 2021): Article on using Replica’s AI voices with MetaHumans for automatic lip-sync (venturebeat.com).

- GLG Insights – “Hyper-Realistic MetaHuman: The ‘Bridge’ to the Metaverse in Web 3.0” (Interview, 2022): Discusses digital humans’ development and mentions virtual influencer AYAYI’s impact and brand collaborations (glginsights.com).

- Ready Player Me Docs – “Ready Player Me – Cross-game Avatar Platform”: Highlights RPM’s cross-platform integration in 300+ apps, in contrast to MetaHuman’s UE-only use (docs.readyplayer.me).

- LinkedIn (Emergen Research) – “Metaverse, Digital Human Avatar, and NFT Markets” (Nov 2023): Industry analysis mentioning how NFTs safeguard avatar identity and efforts towards interoperability (linkedin.com).

- VirtualHumans.org – Coverage on Virtual Influencers & MetaHuman Creator: Notes how tools like MetaHuman lower the barrier to creating realistic virtual influencers (pebblestudios.co.uk).

- Reddit (Epic Forums repost) – User discussion on Mesh to MetaHuman stylized use cases: Community insights on using the MetaHuman rig for cartoon characters (medium.com, reddit.com).

- YouTube – Unreal Engine MetaHuman tutorials and demos: e.g., JSFilmz’s “Mesh to MetaHuman” tutorial, showing how to import custom meshes (youtube.com), and CodeMiko/Offworld Live showcases for live MetaHuman streaming.

- NVIDIA AI Tech – Audio2Face: Demonstration of generating facial animation from audio, used in projects like MAVE:, as mentioned in the Epic Spotlight (unrealengine.com).

- Microsoft Tech Community – “Mesh avatars for Microsoft Teams” (2022): Announcement of a cartoon-style avatar feature for meetings, indicating a trend toward avatars in professional settings (techcommunity.microsoft.com).

- Rokoko Blog – “How to use MetaHumans to make epic content” (2021): Discusses using motion capture suits and MetaHumans together (rokoko.com, youtube.com).
