MetaHuman Creator for Unreal Engine is a tool for designing hyper-realistic digital humans with relative ease. This guide walks you through accessing the tool, customizing your character’s features, exporting into Unreal Engine 5, and animating your MetaHuman. It also covers common questions, troubleshooting tips, and ways to extend the tool’s capabilities (for example, using PixelHair for custom hairstyles). Whether you’re a beginner or an advanced user, this step-by-step resource will help you build high-fidelity digital humans with MetaHuman Creator and Unreal Engine.

What is MetaHuman Creator in Unreal Engine?

MetaHuman Creator is a free cloud-based application by Epic Games for designing photorealistic digital people, fully rigged and ready for animation. It runs in a web browser and lets you create unique, high-fidelity human characters (called MetaHumans) by providing an intuitive interface to adjust facial features, skin complexion, teeth, body shape, hair, clothing, and more. In other words, MetaHuman Creator takes care of the complex 3D modeling and rigging in the background, so you can focus on artistic choices. Each MetaHuman you create is truly game-ready – once designed, you can download the character through Quixel Bridge and use it directly in Unreal Engine with a full facial and body rig for animation.

MetaHuman Creator stands out from other character generators by combining an ever-expanding library of real human data with real-time rendering. As you adjust sliders or sculpt features, the tool blends between actual scanned human examples to ensure your creation looks realistic and grounded. The result is that what used to take character artists weeks of labor can now be achieved in under an hour. MetaHuman characters are already used in games, films, and virtual productions for their unparalleled realism. However, it’s important to note that MetaHumans are licensed for use within Unreal Engine projects – you cannot render them in other engines or outside Unreal without permission.

How do I access and use MetaHuman Creator in Unreal Engine 5?

MetaHuman Creator is a cloud-based tool for crafting photorealistic MetaHumans, requiring an Epic Games account and a supported browser. It streamlines character design and integrates with UE5 via Quixel Bridge. Here’s how to start:

- Sign Up or Log In: Visit the MetaHuman Creator portal (metahuman.unrealengine.com) and log in with your Epic Games account. If it’s your first time, you may need to sign up for access – Epic often grants access instantly now that MetaHuman Creator is out of beta.

- Launch in Browser: MetaHuman Creator runs via pixel streaming in-browser. Use Chrome, Edge, Firefox, or Safari on a Windows or macOS computer with a reliable internet connection. The app will stream an interactive 3D viewport to you. (Ensure your connection is stable; the session may time out after around an hour, but you can always reconnect.)

- Choose a Starting Template: Once in the app, you’ll see a gallery of preset characters. Select a preset that you want to use as a starting point and click “Create Selected”. This loads the character into the editor, where you can modify it extensively.

- Use the Editing Controls: The MetaHuman Creator interface is organized into categories (Face, Hair, Body, etc.). You can click and drag on facial features directly or use the parameter sliders provided. Changes are saved in real time to your Epic account.

To import into UE5:

- Install/Enable Quixel Bridge: In Unreal Engine 5, Quixel Bridge is available as a built-in plugin. Make sure the Bridge plugin is enabled (Edit > Plugins > Bridge). You can then access it from the Content menu > Quixel Bridge. Log in with the same Epic account inside Bridge.

- Download Your MetaHuman: In Quixel Bridge, navigate to the MetaHumans section (usually listed as “MetaHumans” in the sidebar, or reachable via search). You should see the MetaHuman(s) you saved. Click on your character, choose a quality level (typically Medium or High to start), and download the assets.

- Export to Unreal: After downloading, click Export. Bridge will then transfer the MetaHuman into your open Unreal Engine project. The first time you do this in a project, Unreal may prompt you to enable some missing plugins or settings (such as the MetaHuman plugin, IK Rig, etc.).

- Use in Project: Drag the MetaHuman Blueprint into your level viewport. The fully rigged character is ready for animation, gameplay, or cinematics. Enable prompted plugins and restart UE5 if required.

MetaHuman Creator simplifies design. Quixel Bridge ensures efficient UE5 integration.

Is MetaHuman Creator free to use with Unreal Engine?

MetaHuman Creator is free for Unreal Engine users, requiring only an Epic Games account, with no fees for creating unlimited MetaHumans. Licensed exclusively for Unreal, characters cannot be used in other engines without Epic’s permission, aligning with Epic’s ecosystem strategy. This accessibility empowers indie developers to produce AAA-quality characters cost-effectively. The Unreal-only restriction ensures deep integration with UE5’s tools, enhancing workflow efficiency.

The free model disrupts traditional character creation costs, benefiting small teams. Staying within Unreal’s licensing terms guarantees high-fidelity results for games, films, or VR projects, leveraging Epic’s optimized pipeline. MetaHuman’s no-cost model supports diverse creators. Its seamless UE5 integration makes it a cornerstone for real-time production.

How do I customize facial features in MetaHuman Creator?

MetaHuman Creator excels at facial customization, using Blend and Sculpt modes with a human scan database for realistic results. Blend Mode allows quick preset blending for macro changes, while Sculpt Mode offers precise marker-based tweaks for detailed features like eyelids or nose shape. Skin texture sliders control aging, pores, or blemishes, and makeup options like blush or eyeliner personalize looks. The system ensures anatomical accuracy, guiding users to realistic outcomes.

Beginners achieve professional results with intuitive controls, ideal for rapid prototyping. Advanced users can match reference images, creating diverse ethnicities or specific likenesses, making MetaHuman Creator versatile for cinematic or game character design. Its database-driven approach enhances realism. The tool supports a wide range of facial expressions for animation.

Can I create stylized characters with MetaHuman Creator?

MetaHuman Creator prioritizes photorealistic humans, constrained by human scan data, limiting cartoonish stylization. Slight stylization is possible via Sculpt Mode for exaggerated features or creative textures like bold makeup. External workflows, such as Mesh to MetaHuman or UE5 toon shaders, enable comic-book aesthetics. Tools like ZBrush or Blender are needed for extreme stylization, integrating with MetaHuman rigs.

Highly stylized characters demand external effort, but MetaHuman excels for realistic or subtly stylized humans. It’s ideal for cinematic styles, with UE5’s rendering enhancing semi-stylized aesthetics. Third-party tools like Character Creator 4 expand stylization options. MetaHuman’s realism remains its core strength for professional projects.

How do I export a MetaHuman character from Creator to Unreal Engine?

Exporting MetaHumans to UE5 uses Quixel Bridge to transfer cloud-saved characters as rigged assets. Here’s the process:

- Open Quixel Bridge in UE5: In Unreal Engine 5, go to Content > Quixel Bridge. (If you don’t see it, ensure the Quixel Bridge plugin is enabled.) The Bridge interface will pop up, usually docked like a panel.

- Log In and Find MetaHumans: Log in to Bridge with your Epic Games account (the same account used in MetaHuman Creator). In Bridge’s left sidebar, navigate to the MetaHumans section. You should see thumbnails for all MetaHumans associated with your account.

- Select and Download Assets: Click on the MetaHuman you want to export. On the MetaHuman’s page in Bridge, choose a quality level (Medium, High, Epic, etc.) and hit the Download button. This fetches necessary files like meshes, textures, and rig data.

- Export to Unreal: Once downloaded, click Export. Bridge will transfer the MetaHuman into your project. Enable prompted plugins (e.g., MetaHuman, IK Rig) and restart UE5 if needed to complete the process.

Quixel Bridge streamlines exports. Its UE5 integration ensures a user-friendly workflow.

What are the system requirements to run MetaHuman Creator effectively?

MetaHuman Creator’s cloud-based design requires minimal local hardware: a Windows or macOS computer with a modern browser, 8 GB RAM, and 10 Mbps broadband for streaming the 3D viewport. Supported browsers include Chrome, Edge, Firefox, and Safari. An Epic Games account links creations to cloud storage. Using MetaHumans in UE5 is more demanding: plan on a gaming-class GPU (e.g., RTX 3060) and ample system memory (e.g., 32 GB RAM) for high-fidelity rendering, especially at LOD0 with strand hair or ray-traced skin.

The cloud setup broadens accessibility, enabling diverse users to create without high-end hardware. UE5 development requires robust systems to handle MetaHuman assets, ensuring smooth performance in real-time applications like games or virtual production. Mesh to MetaHuman is Windows-only. MetaHuman Creator’s low entry barrier supports rapid prototyping across skill levels.

Can I edit MetaHuman body proportions and height in Creator?

MetaHuman Creator offers preset height (Short, Average, Tall) and build (Underweight, Medium, Overweight) options; across male and female bodies, these combine into 18 unique shapes. A Head Scale slider refines proportions to avoid unnatural head sizes after height changes. For reference, short male MetaHumans are about 5’5” and tall females about 5’6”. Detailed control is limited to maintain rig compatibility, so non-standard bodies require external tools like Maya.

Presets cover common human shapes, simplifying design for most projects. Advanced users can modify assets externally, though this involves complex workflows to preserve MetaHuman rigging. The system prioritizes ease of use and animation readiness. It’s effective for realistic character diversity in UE5 projects.
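
As a quick check on the figure of 18 body shapes, the presets can be enumerated in a few lines of Python. This is only a sketch: the height and build labels come from the text above, while treating the total as heights × builds × two base bodies (male and female) is an assumption that happens to match the stated count.

```python
from itertools import product

# Preset labels are taken from the text above. Treating the total as
# heights x builds x two base bodies is an assumption that matches 18.
HEIGHTS = ("Short", "Average", "Tall")
BUILDS = ("Underweight", "Medium", "Overweight")
BASES = ("Male", "Female")


def body_presets():
    """Return every (base, height, build) combination as a list of tuples."""
    return list(product(BASES, HEIGHTS, BUILDS))


if __name__ == "__main__":
    presets = body_presets()
    print(f"{len(presets)} body presets")
    for base, height, build in presets:
        print(f"{base:6} {height:8} {build}")
```

Listing the combinations this way can help when planning a cast, for example to make sure background characters are spread across different presets.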

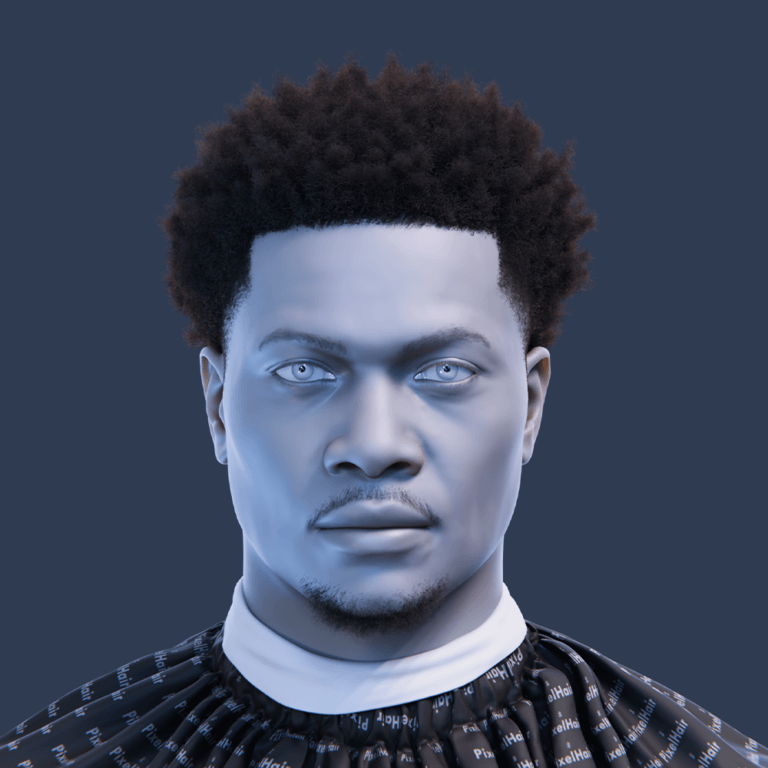

How do I apply clothing and textures in MetaHuman Creator?

MetaHuman Creator provides preset clothing (tops, bottoms, shoes) with customizable colors and patterns, such as hoodies, suits, or sneakers with plaid or solid options. Skin texture controls adjust aging, freckles, or makeup like eyeliner, while hair and eye textures offer further styling. The library is limited, but combinations create distinct looks. Custom clothing requires UE5 or external tools like Blender for advanced designs.

The preset system ensures quick styling for beginners, ideal for rapid character creation. Creative use of colors and external workflows enables specialized appearances, enhancing MetaHuman versatility for professional game or cinematic projects requiring unique character aesthetics.

Does MetaHuman Creator support facial rigging and blendshapes?

MetaHuman characters feature advanced facial rigs (~700 bones and blendshapes) and standard body rigs, supporting ARKit-compatible animations. Fully rigged upon export, they’re ready for realistic performances using MetaHuman Animator or Live Link. The hybrid bone-blendshape system balances efficiency and fidelity, with controls for jaw, brows, and lips. Maya exports include rig setups for external animation workflows.

The sophisticated rig simplifies animation for games, films, or virtual production. It delivers high-fidelity expressions, making MetaHumans ideal for dialogue-heavy scenes or emotive performances, with seamless integration into UE5’s animation pipeline for professional results.

Can I animate MetaHuman characters inside Unreal Engine after creation?

MetaHumans in UE5 support animation via Sequencer for cinematics, Control Rigs for keyframing, and Live Link/MetaHuman Animator for performance capture. Here’s how:

- Sequencer: Add MetaHumans to timeline tracks. Keyframe face/body movements using provided channels. Apply Facial Pose Library for quick visemes or emotions. Ideal for cinematic cutscenes with precise control.

- Control Rig: Use built-in rigs for intuitive keyframing. Adjust brows, jaw, or limbs via GUI controls. Pre-made setups simplify bone animation. This streamlines keyframe workflows for animators.

- Live Link/Animator: Stream facial expressions via Live Link Face for real-time puppeteering. MetaHuman Animator processes iPhone videos for high-fidelity facial animation. Captures micro-expressions effectively.

- Retargeting: Retarget UE4 mannequin animations to MetaHumans using IK Retargeter. Apply existing animation libraries for basic movements. Ensures seamless animation sharing across characters.

Specialized tools streamline MetaHuman animation. They integrate with UE5’s pipeline for professional results.

How does MetaHuman Creator compare to other character creation tools?

MetaHuman Creator excels in photorealistic human creation for Unreal Engine, offering unmatched visual fidelity and ease of use with a browser-based interface. Compared to Character Creator (~$599, multi-engine support) or Daz3D (extensive assets, free base), it’s less flexible for non-human characters but free and UE5-optimized. Its Unreal-only licensing limits versatility, unlike FBX-compatible alternatives. MetaHuman’s advanced rigging streamlines UE5 animation, surpassing generic rigs in other tools.

Combining MetaHuman with ZBrush or Blender addresses stylization needs, balancing realism with flexibility. Its no-cost model and seamless UE5 integration make it ideal for Unreal-focused projects, while community ecosystems like Daz3D offer broader asset variety for diverse creative workflows.

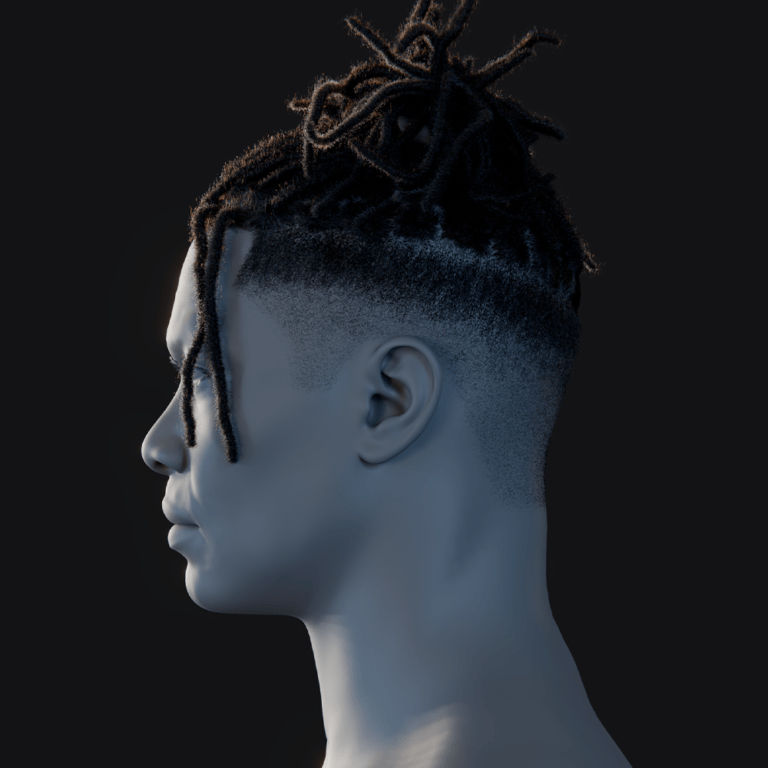

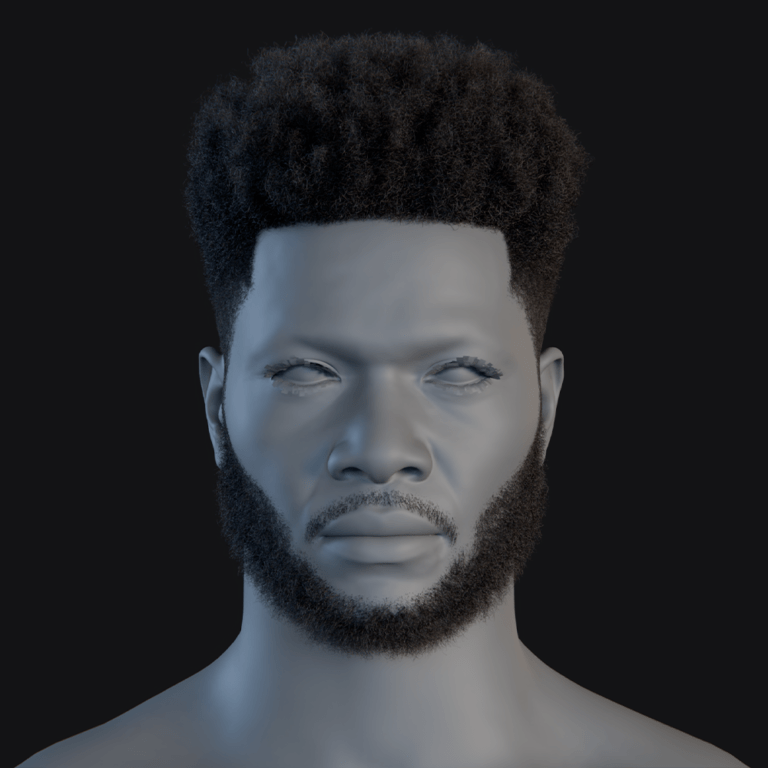

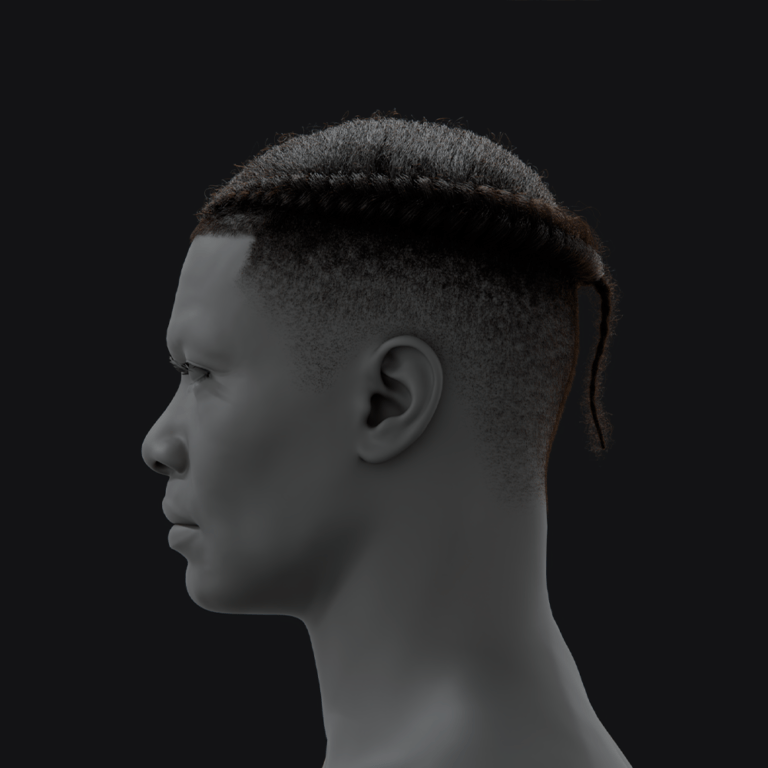

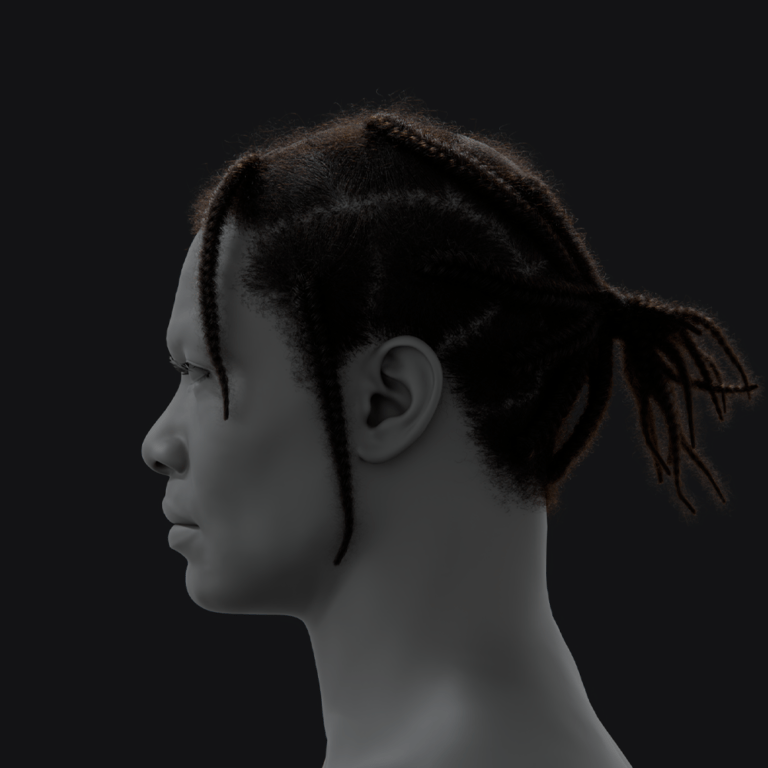

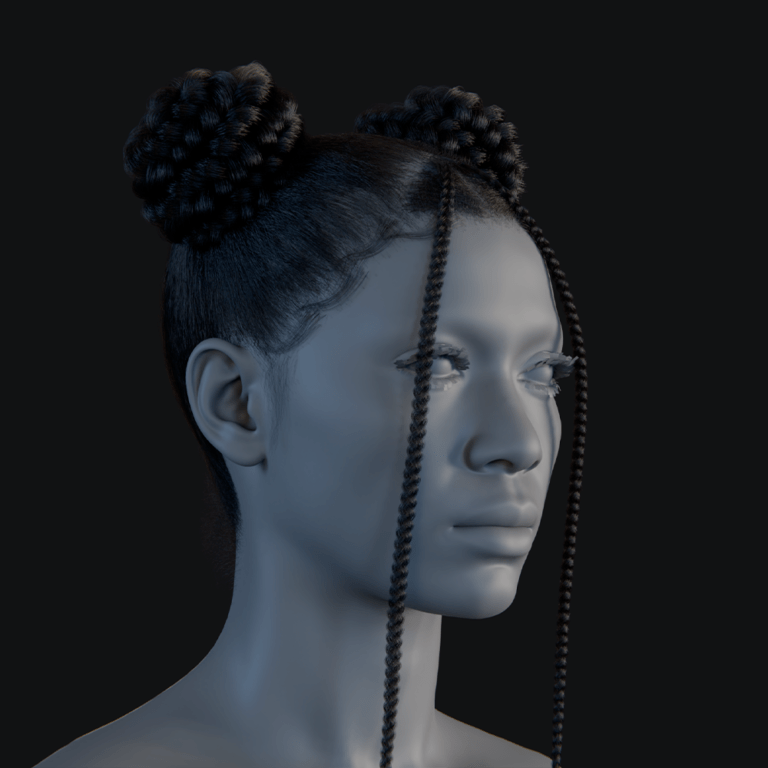

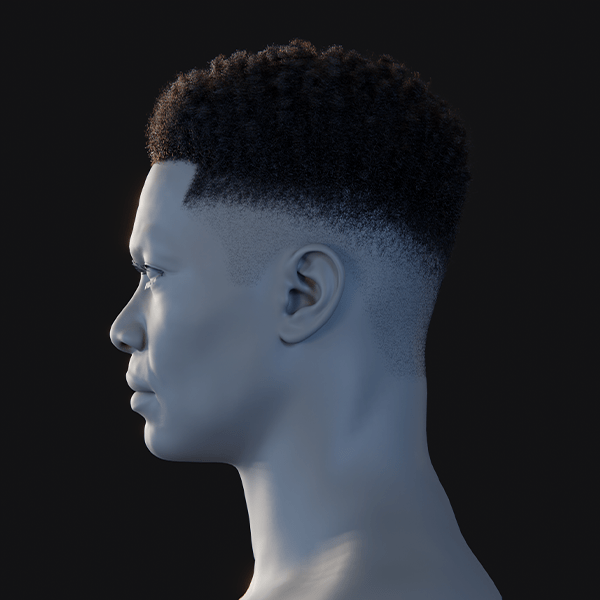

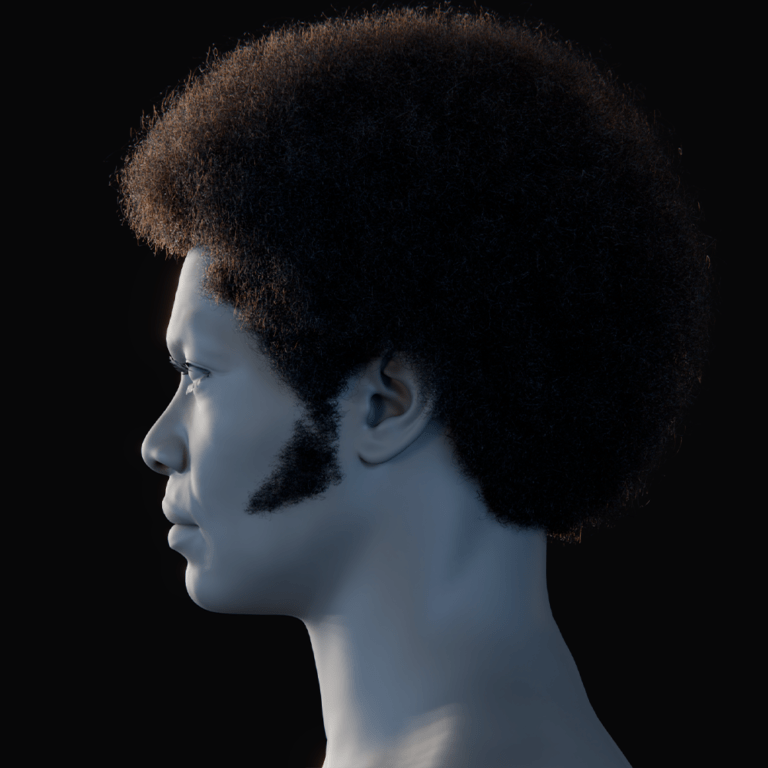

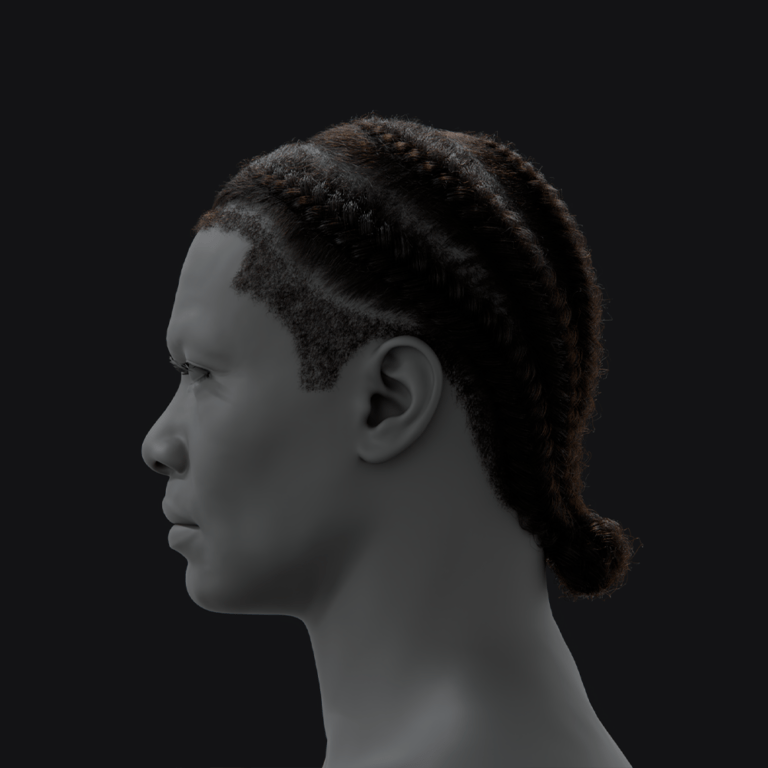

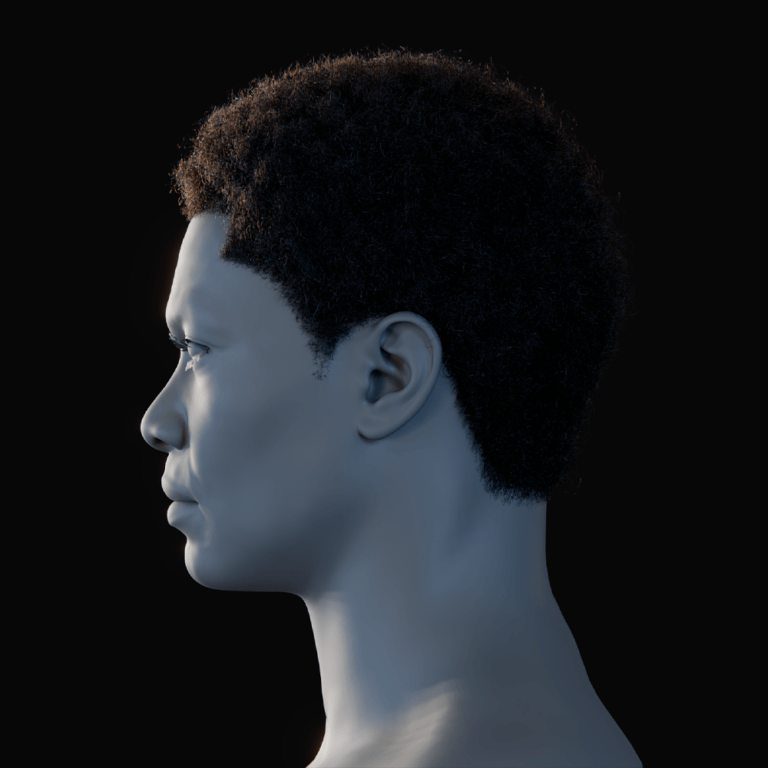

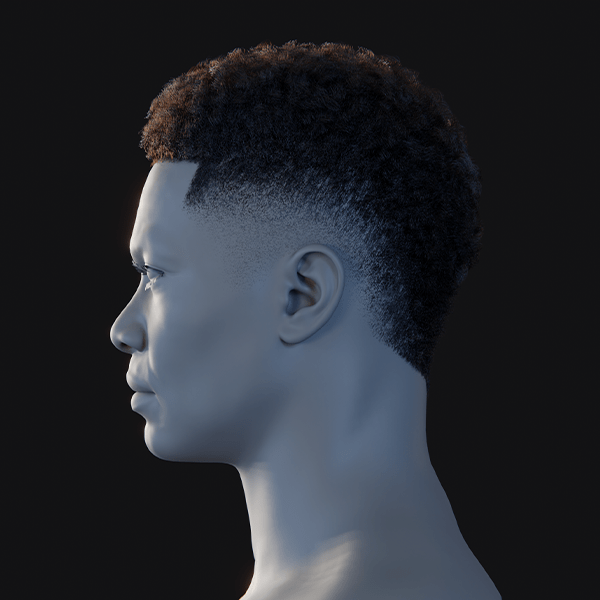

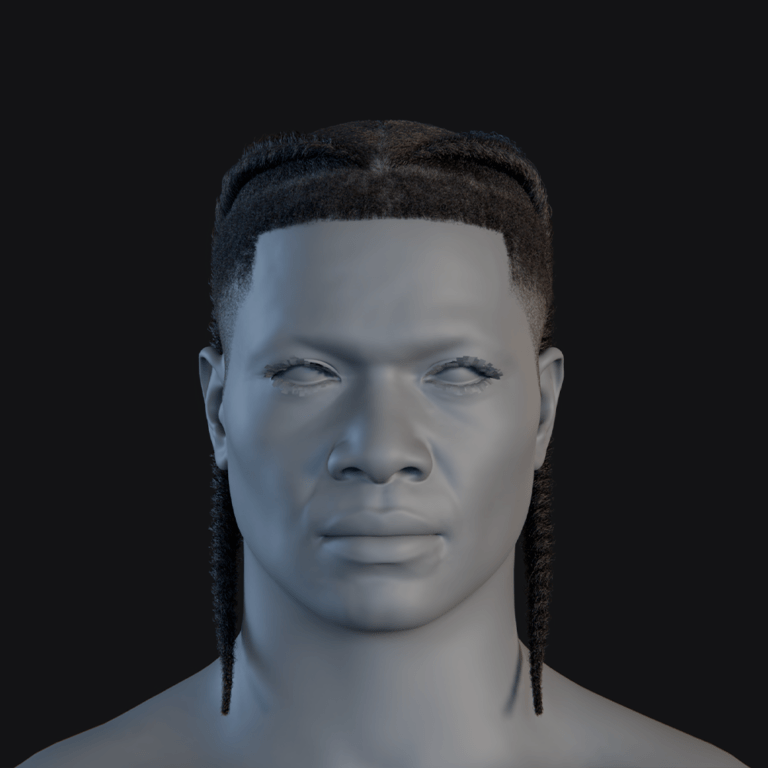

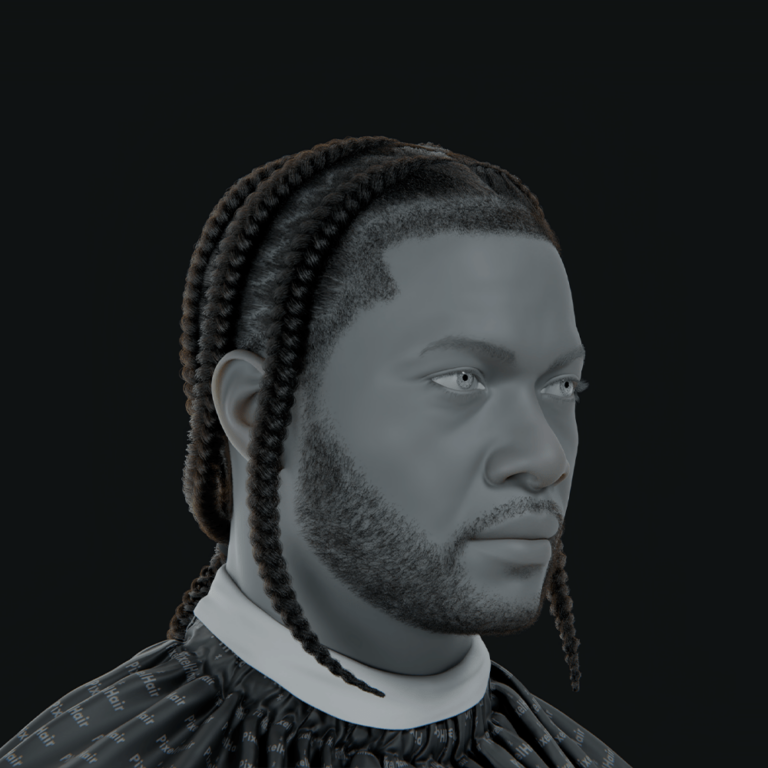

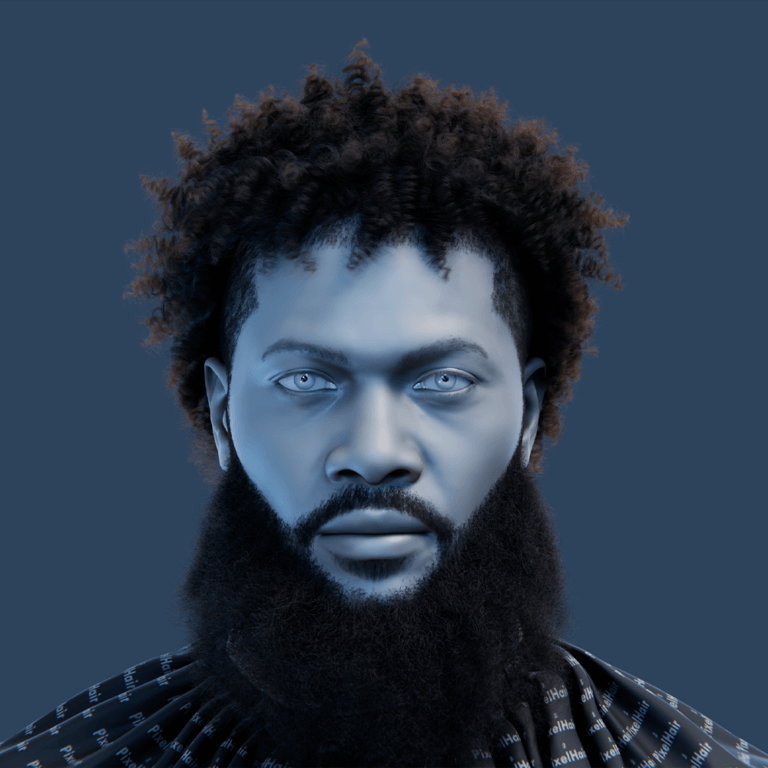

How can PixelHair be used to enhance hairstyles for MetaHuman characters created in Unreal Engine?

PixelHair provides high-quality 3D hair assets to enhance MetaHuman hairstyles. Here’s how:

- Import Assets: Import Alembic Groom files from Blender to Unreal. Use Groom plugins to create assets. Include hair cap meshes for proper scalp fitting.

- Attach to MetaHuman: Open the MetaHuman Blueprint. Swap default hair groom with PixelHair’s. Adjust hair cap to fit head.

- Customize Hair: Tweak strand length, thickness, or material properties. Apply Unreal hair shaders for realism. Enable physics for dynamic movement.

- Optimize Performance: Adjust strand density for real-time use. Create LOD cards for games. High-poly styles suit cinematics.

PixelHair expands hairstyle variety. It delivers realistic, customizable looks for MetaHumans.

Can I Use MetaHuman Creator with Motion Capture and Facial Tracking?

Yes, MetaHuman characters are fully compatible with motion capture (mocap) and facial tracking, designed for production-ready workflows in Unreal Engine (UE). They support both body and facial mocap, with built-in tools for real-time integration. Below are the detailed capabilities:

- Facial Motion Capture: MetaHumans use a facial rig aligned with Apple’s ARKit blendshapes, making them compatible with many facial capture solutions.

  - Live Link Face App: Epic’s Live Link Face app allows real-time facial tracking via an iPhone’s TrueDepth camera, enabling live puppeteering or recording. This app streams facial data directly into Unreal Engine, providing immediate feedback for animators. It’s ideal for quick setups and solo creators. Users can record performances for later refinement.

  - MetaHuman Animator: Available in UE 5.2+, this tool converts video input (from an iPhone or stereo camera) into high-fidelity facial animations, capturing fine details like wrinkles and eye movements. It uses advanced algorithms to interpret subtle expressions accurately. The process simplifies animation workflows significantly. Results rival professional studio outputs.

  - Third-Party Solutions: Tools like Faceware and Dynamixyz are supported for advanced facial capture needs. These integrate seamlessly with MetaHuman’s rig for precise tracking. Studios can leverage existing pipelines with these options. Compatibility broadens professional use cases.

- Body Motion Capture: MetaHumans have a standard human skeleton, compatible with mocap systems like Vicon, Xsens, and Rokoko.

  - Live Link Support: Unreal’s Live Link supports real-time body motion streaming, and captured data can be retargeted to the MetaHuman skeleton. This allows animators to see movements instantly in-engine. It’s perfect for virtual production shoots. Retargeting ensures smooth integration.

  - Full Performance Capture: Combining face and body mocap creates lifelike digital doubles with synchronized animations. This setup is used in high-end productions for realism. It requires multi-device coordination. Results are cinematic-quality performances.

  - Finger Joints: The skeleton includes finger joints for detailed hand animations, enhancing realism. This feature supports intricate gestures like sign language or object interaction. It’s fully compatible with glove-based mocap. Hand detail elevates character expressiveness.

- Calibration and Setup: Some calibration may be needed for actor proportions or facial tracking accuracy.

  - IK Retargeter: Unreal’s IK Retargeter simplifies skeleton mapping between actors and MetaHumans. It adjusts for size and proportion differences effortlessly. This tool saves time in mocap preparation. Animation fidelity remains intact.

  - Live Link Face Mapping: The app auto-maps blendshapes for facial tracking, reducing setup complexity. It aligns ARKit data to MetaHuman rigs quickly. Manual tweaks are rarely needed. This streamlines the process for beginners.

  - Markerless Tracking: Markerless facial tracking is supported, alongside marker-based systems for flexibility. This option lowers equipment barriers for smaller teams. It still delivers reliable results. Documentation guides setup clearly.

MetaHumans excel in mocap-driven projects, offering lifelike animations with minimal manual effort, ideal for games and virtual production.
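
To make the idea of an ARKit-aligned facial rig more concrete, here is a minimal Python sketch of remapping one frame of capture data onto rig controls. The ARKit coefficient names on the left (such as `jawOpen` and `eyeBlinkLeft`) are real ARKit blendshape names; the control names on the right are hypothetical placeholders, not the actual MetaHuman rig controls, and the clamping to the 0 to 1 range is an illustrative choice rather than a description of what Live Link does internally.

```python
# ARKit blendshape names on the left are real ARKit coefficient names.
# The control names on the right are hypothetical placeholders, not the
# actual MetaHuman rig control names.
ARKIT_TO_CONTROL = {
    "jawOpen": "ctrl_jaw_open",
    "eyeBlinkLeft": "ctrl_eye_blink_L",
    "eyeBlinkRight": "ctrl_eye_blink_R",
    "browInnerUp": "ctrl_brow_inner_up",
    "mouthSmileLeft": "ctrl_mouth_smile_L",
    "mouthSmileRight": "ctrl_mouth_smile_R",
}


def remap_frame(arkit_frame):
    """Map one frame of ARKit coefficients onto rig controls, clamped to [0, 1]."""
    controls = {}
    for name, value in arkit_frame.items():
        control = ARKIT_TO_CONTROL.get(name)
        if control is None:
            continue  # ignore coefficients this sketch does not map
        controls[control] = min(1.0, max(0.0, float(value)))
    return controls


if __name__ == "__main__":
    frame = {"jawOpen": 1.4, "eyeBlinkLeft": 0.25, "tongueOut": 0.5}
    print(remap_frame(frame))
```

In practice the Live Link Face app and the MetaHuman rig handle this mapping for you; the sketch only illustrates why a shared blendshape vocabulary makes different capture sources interchangeable.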

How Do I Import My MetaHuman into Sequencer for Cinematic Animation?

To animate a MetaHuman in Unreal Engine’s Sequencer for cinematics, follow these steps:

- Drag the MetaHuman into the Level: Place the MetaHuman Blueprint (BP_[Name]) from the Content Browser into the viewport to set its starting position. This establishes the character’s physical presence in the scene. The Blueprint contains all necessary components. Positioning is adjustable later in Sequencer.

- Open Sequencer (Create a Level Sequence): Right-click in the Content Browser, select Animation > Level Sequence, and open it to access the Sequencer timeline. This creates a new sequence for animation control. The timeline organizes all tracks logically. It’s the foundation for cinematic workflows.

- Add the MetaHuman to Sequencer: Click “+ Track,” choose “Actor to Sequencer,” and select your MetaHuman to add its animation tracks. This links the character to the timeline for animation. Tracks include Transform, Animation, and Control Rig. All are editable within Sequencer.

- Animation Tracks Appear: Tracks for Transform (movement), Animation (body), and Face Control Board (facial) are added automatically. If missing, manually add Control Rig tracks via the track menu. These tracks manage different animation aspects. They ensure full character control.

- Add Keyframes or Animations: Use the Transform track for movement, apply animations to the Animation track, or keyframe facial expressions via the Face Control Board. This step builds the animation sequence dynamically. Keyframes offer precise timing control. Pre-made animations speed up workflows.

- Use the Face Pose Library (optional): Apply predefined expressions (e.g., “Joy”) from the pose library to streamline facial animation. The library provides ready-made emotions for quick application. It’s accessible within the Face Control Board. This simplifies complex expression setups.

- Playback: Scrub or play the timeline to view the animation; ensure all tracks are active and keyed correctly. This tests the sequence in real time. Adjustments can be made on the fly. Final playback confirms the cinematic vision.

For example, keyframe a wave, apply facial expressions, and sync with audio for a complete scene.
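
For readers new to keyframing, the following Python sketch shows the basic idea behind a keyed channel: values are stored at specific frames and the in-between frames are computed. It uses plain linear interpolation and a made-up arm-rotation channel as an illustration; it is not Sequencer’s implementation, and the function name and sample values are assumptions.

```python
from bisect import bisect_right


def sample_channel(keys, frame):
    """Sample a keyed channel at `frame` using linear interpolation.

    `keys` is a list of (frame, value) pairs sorted by frame. Frames before
    the first key or after the last key hold the nearest key's value.
    """
    if not keys:
        raise ValueError("channel has no keyframes")
    frames = [f for f, _ in keys]
    i = bisect_right(frames, frame)
    if i == 0:
        return keys[0][1]
    if i == len(keys):
        return keys[-1][1]
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    return v0 + t * (v1 - v0)


if __name__ == "__main__":
    # Hypothetical "raise arm, then lower it" channel, in degrees.
    arm_rotation = [(0, 0.0), (24, 90.0), (48, 0.0)]
    for frame in (0, 12, 24, 36, 60):
        print(frame, sample_channel(arm_rotation, frame))
```

With these sample keys, frame 12 falls halfway between the first two keyframes and evaluates to 45.0 degrees. Sequencer offers richer interpolation modes, but the principle of keying a few poses and letting the timeline fill the gaps is the same.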

What Are Common Issues When Using MetaHuman Creator with UE5?

Common issues and their solutions are listed below:

- Hair LOD and Bald MetaHumans: Some hairstyles lack lower LODs, causing baldness at distance.

  - Full LOD Hairstyles: Use hairstyles with full LODs to maintain hair visibility across distances. Epic labels these in Creator for easy identification. They ensure consistent character appearance. Performance impact is minimal with optimization.

  - Adjust LOD Settings: Modify LOD settings or use LODSync to force higher LODs, though this may affect performance. This workaround prevents baldness in scenes. It’s adjustable per project needs. Test for balance between quality and speed.

- Export Fails or Not Appearing: Non-alphanumeric names may cause silent export failures.

  - Simple Names: Use names like “Andre01” to avoid export issues from special characters. This ensures successful cloud processing. It’s a simple fix for a common error. Consistency avoids confusion.

  - Plugin Check: Ensure the MetaHuman Plugin is enabled in UE5 for proper integration. Disabled plugins block imports silently. Check under Edit > Plugins. Restart UE5 after enabling.

- MetaHuman Not Moving/Animatable: Mannequin animations need retargeting due to MetaHuman’s unique skeleton.

  - IK Retargeter: Use this tool to map animations to the MetaHuman skeleton accurately. It handles differences from the UE4 mannequin effortlessly. Setup is quick and intuitive. Results are smooth animations.

  - MetaHuman AnimBP: Apply MetaHuman-specific animation blueprints for body and face control. These are designed for MetaHuman rigs specifically. They ensure full functionality. Retargeting aligns external animations.

- Performance Drops/Stutters: Multiple MetaHumans strain resources.

  - Lower LODs: Use lower LODs for distant characters to reduce processing demands. This maintains frame rates effectively. Detail scales with proximity. It’s essential for large scenes.

  - Simplify Materials: Reduce material complexity and disable ray tracing for better performance. Simplified shaders lighten GPU load significantly. Visual quality remains acceptable. Test settings for optimal results.

- Materials Look Different in Engine: Incorrect quality settings affect skin and eye appearance.

  - Cinematic Settings: Set Engine Scalability to Cinematic/Epic for realistic rendering. This enhances shader accuracy noticeably. Subsurface Scattering must be enabled. Skin and eyes regain fidelity.

  - Subsurface Profile: Enable this for proper skin rendering in-engine. It simulates light penetration realistically. Without it, skin looks flat. Adjust eye materials similarly.

- Control Rig Not Appearing in Sequencer: The Control Rig plugin may not be active.

  - Enable Plugin: Activate the Control Rig plugin under Edit > Plugins for facial animation. This is required for Face Control Board functionality. Restart UE5 post-activation. It resolves missing tracks.

  - Manual Assignment: Assign the MH Face Control Board manually in Sequencer if needed. This ensures facial controls appear correctly. It’s a quick fix for plugin issues. Update assets if persistent.

- Mesh to MetaHuman Issues: Improper mesh preparation or platform limitations cause failures.

  - Mesh Prep: Use a neutral-expression mesh with clean topology for best results. Eyes open and mouth closed are critical. Orientation must match documentation. This prevents processing errors.

  - Windows Only: Mesh to MetaHuman works only on Windows, per Epic’s specs. Other platforms aren’t supported yet. Use a Windows machine for this feature. Check system compatibility first.

- Too Many MetaHumans in One Project: Excessive MetaHumans bloat project size.

  - Organize Assets: Keep only necessary characters to manage storage effectively. Unused assets waste space unnecessarily. Regular cleanup maintains efficiency. Monitor disk usage closely.

  - Delete Unused: Remove unneeded MetaHumans to free up space in the project. This keeps file sizes manageable. Back up assets externally if needed. It’s a proactive space-saving step.

Most issues are resolved with settings adjustments, updates, or adherence to best practices, as detailed in Epic’s resources.
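
The naming advice under “Export Fails or Not Appearing” is easy to check before you save a character. The Python sketch below assumes that “simple” means ASCII letters and digits only, in the spirit of the “Andre01” example; that rule is an interpretation of the advice above, not a documented Epic constraint, and the helper names are hypothetical.

```python
import re

# Assumption: a "safe" name contains only ASCII letters and digits.
_SAFE_NAME = re.compile(r"[A-Za-z0-9]+")


def is_safe_name(name):
    """Return True if the name is non-empty and purely ASCII alphanumeric."""
    return bool(_SAFE_NAME.fullmatch(name))


def sanitize_name(name, fallback="MetaHuman"):
    """Strip every character that is not an ASCII letter or digit.

    Falls back to a placeholder if nothing is left after stripping.
    """
    cleaned = re.sub(r"[^A-Za-z0-9]", "", name)
    return cleaned or fallback


if __name__ == "__main__":
    for candidate in ("Andre01", "André #1", "!!!"):
        print(repr(candidate), is_safe_name(candidate), sanitize_name(candidate))
```

Here “Andre01” passes unchanged, while “André #1” is flagged and reduced to “Andr1”. Renaming by hand usually gives a nicer result than automatic stripping, so the sanitizer is best treated as a fallback.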

Can I Use My Own Mesh with Mesh to MetaHuman After Using Creator?

Yes, you can convert a custom 3D head mesh into a MetaHuman using the Mesh to MetaHuman feature. The process includes:

- Prepare Your Mesh: Use a neutral-expression head mesh (eyes open, mouth closed) for compatibility. This can come from scans or sculpts with clean topology preferred. Proper preparation ensures accurate conversion. Misaligned meshes fail processing.

- Use the MetaHuman Plugin in UE5: Enable the plugin, create a MetaHuman Identity asset, import your mesh, and align landmarks. Landmarks (e.g., eyes, nose) guide the retopology process. The plugin handles initial setup intuitively. This starts the conversion workflow.

- Mesh to MetaHuman Process: Process the mesh to generate a rigged MetaHuman, stored in your online library. Cloud processing retopologizes it to MetaHuman standards. The result is a fully rigged character. It integrates seamlessly with UE5.

- Refine in Creator: Open the new MetaHuman in Creator to adjust skin, hair, and features. Mesh to MetaHuman focuses on the face primarily. Creator adds body and detail customization. This completes the character design.

- Download to Unreal: Export via Quixel Bridge for use in your UE5 project. This brings the refined MetaHuman into your workflow. It’s ready for animation and rendering. Original textures need manual adaptation.

This workflow creates precise likenesses with MetaHuman’s rigging benefits.

Are There Limits to How Many MetaHumans I Can Create for a Project?

There’s no strict creation limit, but practical constraints exist:

- Session Time Limit: Creator sessions last about one hour, requiring reconnection to continue. This prevents indefinite single-session use. Reconnection is straightforward via login. Work resumes seamlessly after.

- Cloud Library: All MetaHumans are stored online with no known quota limit reported. Users have created dozens without restrictions. Storage is managed by Epic’s cloud. Access remains reliable long-term.

- Project Size (Disk Space): Each MetaHuman adds hundreds of MB to your project locally. Importing only necessary characters controls disk usage. Large projects need careful management. Optimization prevents bloat.

- Performance Limits (In-Engine): Multiple MetaHumans require optimization like lower LODs for real-time playback. High detail strains GPUs without adjustments. Simplified grooms help maintain frame rates. Test scenes for performance.

- Concurrent Downloads: Team members can create MetaHumans on separate accounts for collaboration. Coordination ensures project consistency. Downloads integrate via Quixel Bridge. This supports multi-user workflows.

Creation is unlimited, but storage and performance need optimization.
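
Since each MetaHuman adds hundreds of MB locally, a rough budget calculation can help before importing a large cast. The Python sketch below is plain arithmetic; the default of 500 MB per character is an assumed placeholder within the “hundreds of MB” range mentioned above, so measure your own project for real figures.

```python
def estimate_project_mb(character_count, mb_per_character=500.0, base_project_mb=0.0):
    """Estimate project size in MB for a given number of imported MetaHumans.

    mb_per_character is an assumed average, not a measured value.
    """
    if character_count < 0 or mb_per_character < 0 or base_project_mb < 0:
        raise ValueError("inputs must be non-negative")
    return base_project_mb + character_count * mb_per_character


def max_characters(budget_mb, mb_per_character=500.0):
    """Return how many whole characters fit into a disk budget."""
    if budget_mb < 0 or mb_per_character <= 0:
        raise ValueError("budget must be >= 0 and per-character size > 0")
    return int(budget_mb // mb_per_character)


if __name__ == "__main__":
    print(estimate_project_mb(4))        # four characters at the assumed size
    print(max_characters(10_000, 800))   # 10 GB budget, 800 MB per character
```

Under these assumptions, four characters come to about 2,000 MB, and a 10,000 MB budget at 800 MB per character fits 12 characters.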

Can MetaHuman Creator Characters Be Used in Games and Virtual Production?

Yes, MetaHumans are designed for both contexts:

- In Games: Use LODs (up to 8 levels) to balance quality and performance.

  - Eight LODs: These ensure scalability across hardware, from high-end to low-spec. Detail adjusts automatically with distance. This supports smooth gameplay. Optimization is key for success.

  - Simplified Materials: Reduce material complexity for better resource efficiency. This lowers GPU load without sacrificing too much quality. It’s adjustable per character. Performance improves noticeably.

- In Virtual Production: Use MetaHumans as digital doubles or live performers with mocap.

  - Mocap Integration: Drive characters in real time for filming with motion capture. This creates interactive performances on set. High-end GPUs handle the load. It’s ideal for dynamic shoots.

  - High LODs: Use high detail for close-ups in cinematic VP workflows. This maximizes visual fidelity for key shots. Real-time rendering supports live adjustments. Quality rivals pre-rendered outputs.

Optimize for games; prioritize fidelity with mocap for VP.
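
The idea that detail adjusts with distance across eight LODs can be pictured with a small Python sketch. The distance cut-offs below are hypothetical values chosen for illustration, and the function is a conceptual model only: Unreal Engine chooses LODs through its own settings, not through this code.

```python
from bisect import bisect_right

# Hypothetical distance cut-offs in metres. Seven cut-offs split the range
# into eight bands, matching LOD0 (closest) through LOD7 (farthest).
LOD_DISTANCES_M = (2.0, 4.0, 8.0, 12.0, 18.0, 25.0, 40.0)


def pick_lod(distance_m, min_lod=0):
    """Return an LOD index (0-7) for a camera distance in metres.

    min_lod forces a coarser LOD floor, e.g. for low-spec hardware.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    lod = bisect_right(LOD_DISTANCES_M, distance_m)
    return max(lod, min_lod)


if __name__ == "__main__":
    for distance in (1.0, 5.0, 20.0, 100.0):
        print(f"{distance:6.1f} m -> LOD{pick_lod(distance)}")
```

A close-up at 1 m maps to LOD0 and a distant character at 100 m maps to LOD7, while passing `min_lod=3` mimics capping detail for a lower-spec platform.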

What Updates and Features Were Added to MetaHuman Creator in Unreal Engine 5?

Key updates include:

- MetaHuman Plugin & Mesh to MetaHuman (UE5.0): Import custom meshes to create unique MetaHumans. This expands customization beyond presets. It’s accessible via the plugin. Results are production-ready.

- MetaHuman Animator (UE5.2): High-fidelity facial capture from video simplifies animation. It captures subtle details like eye twitches. Workflows become faster and easier. Quality matches high-end setups.

- Improved Hair and Clothing: Expanded options include better shaders and LOD support. Hair rendering is more realistic now. Clothing variety suits diverse characters. This enhances visual appeal.

- New Body Types: Diverse proportions and presets cover varied ages and ethnicities. This broadens character representation options. Presets speed up creation. Customization is more inclusive.

- Performance and LOD Improvements: Enhanced optimization with LODSync and ray tracing support. This balances detail and speed effectively. Projects run smoother in-engine. It’s a technical leap forward.

- Audio-to-Facial Animation (UE5.5): Generate lip sync from audio for quick dialogue animation. This automates a tedious process. Results are natural and editable. It saves significant time.

- UEFN Support: Integration with Unreal Engine for Fortnite expands MetaHuman use. Characters can appear in Fortnite projects. This broadens their creative reach. Setup follows standard workflows.

These updates improve versatility and ease of use.

Where Can I Find Tutorials on MetaHuman Creator for Unreal Engine?

Tutorials are available at:

- Official MetaHuman Documentation: Epic’s site offers detailed guides on creation and animation. These cover every step comprehensively. They’re updated with UE5 features. It’s the primary resource hub.

- Unreal Engine Official YouTube Channel: Videos like “Introduction to MetaHuman Creator” teach basics. They’re visual and easy to follow. Official content ensures accuracy. New videos address updates.

- Community Tutorials on Epic Developer Community: User guides and forum tips provide practical advice. These reflect real-world experiences. Solutions to niche issues abound. It’s a collaborative knowledge base.

- 80.lv and Industry Articles: In-depth articles and case studies explore MetaHuman techniques. They offer professional insights and workflows. Examples inspire advanced use. Content is high-quality and detailed.

- YouTube Creators: Channels like Matt Workman and InspirationTuts offer free tutorials. These break down complex tasks simply. They’re ideal for visual learners. Topics range widely.

- Blender/Third-Party Guides: Guides for custom assets (e.g., PixelHair) integrate with MetaHumans. These bridge external tools and UE5. They’re niche but valuable. Check compatibility with UE5.

Start with official sources, then explore community options.

FAQ Questions and Answers

- Do I need Unreal Engine 5 installed to use MetaHuman Creator?

MetaHuman Creator runs entirely in a web browser via cloud streaming, so you don’t need Unreal Engine installed to design a character.

You only need a supported browser and an internet connection to create and tweak your MetaHuman.

However, to deploy or use the character in a game or scene, you must import it into Unreal Engine 5.

- I have no 3D modeling experience – is MetaHuman Creator easy to use?

Yes – it features an intuitive, game-style interface with preset human bases you adjust via sliders and drag controls.

You don’t need to sculpt or model from scratch, and the system maintains realistic results automatically.

Changes appear in real time, so even complete beginners can craft a convincing character quickly.

- Can I use a MetaHuman character outside of Unreal Engine (for example, in Unity or in a rendered video)?

MetaHumans are officially licensed only for use and rendering in Unreal Engine.

You cannot directly import them into Unity or another real-time engine.

For non-real-time output, you may animate and export a video from Unreal, but final renders must pass through UE per the license.

- Can I create a MetaHuman from a photo or scan of a real person?

You cannot import a 2D photo directly into the Creator tool.

Instead, use the Mesh to MetaHuman feature in UE5 by supplying a 3D scan or custom head model.

If you only have photos, first generate a 3D head (e.g. via photogrammetry or modeling software) and then convert it to a MetaHuman.

- What if I want to change my MetaHuman after I’ve already exported it to Unreal Engine?

Open MetaHuman Creator again—your character remains in your online library for edits.

Make your changes, then re-download and export via Quixel Bridge to overwrite the existing assets in Unreal.

Keep the same MetaHuman identity (don’t create a new one), but note that major bone-structure changes may affect existing animations.

- Why does my MetaHuman’s hair disappear or make my character bald when the camera is far away?

This is due to LOD (Level of Detail). Some high-strand hairstyles only include mesh for LOD 0–1 and lack lower-LOD hair cards.

When the camera distance triggers LOD 2+, the hair vanishes.
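The reasoning behind the vanishing hair can be sketched as a small model. This is only an illustration: the screen-size thresholds, the `Groom` class, and the function names are hypothetical and are not part of Unreal Engine's API. It assumes the engine picks one LOD from how much of the screen the character covers, and that a groom renders only at the LODs it ships geometry for.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Groom:
    """A hairstyle and the LOD indices for which it ships geometry (hypothetical model)."""
    name: str
    lods_with_geometry: frozenset[int]


# Hypothetical thresholds: LOD i is used while the character covers at least
# SCREEN_SIZE_THRESHOLDS[i] of the screen. Real projects use their own values.
SCREEN_SIZE_THRESHOLDS = [0.6, 0.3, 0.15, 0.0]


def select_lod(screen_size: float, thresholds: list[float] = SCREEN_SIZE_THRESHOLDS) -> int:
    """Return the first (most detailed) LOD whose threshold the screen size meets."""
    for lod, min_size in enumerate(thresholds):
        if screen_size >= min_size:
            return lod
    return len(thresholds) - 1


def hair_is_visible(groom: Groom, lod: int) -> bool:
    """Hair renders only if the groom has geometry at the active LOD."""
    return lod in groom.lods_with_geometry


def clamp_lod(lod: int, max_lod: int) -> int:
    """Never go coarser than max_lod (models forcing higher detail at range)."""
    return min(lod, max_lod)


strand_only = Groom("strand_only", frozenset({0, 1}))
full_lods = Groom("full_lods", frozenset({0, 1, 2, 3}))

far_lod = select_lod(0.2)  # a distant character under the thresholds above
print(far_lod, hair_is_visible(strand_only, far_lod), hair_is_visible(full_lods, far_lod))
print(hair_is_visible(strand_only, clamp_lod(far_lod, 1)))
```

In this model the strand-only groom has nothing to draw once the active LOD moves past 1, while a groom with geometry at every LOD stays visible. Clamping the LOD keeps the hair at the cost of rendering more detail at a distance.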

To fix it, either choose a hairstyle with full LOD support (warnings appear in Creator) or adjust LOD settings to force higher detail at range.

- Can I add custom outfits or hairstyles to my MetaHuman beyond what the Creator offers?

Not directly in the Creator—but in Unreal Engine you can import and assign your own assets.

For hair, import strand-based grooms or hair cards (e.g., PixelHair) and attach them to the MetaHuman head.

For clothing, model or acquire a skeletal-mesh outfit, import it, weight-paint as needed, and attach it to the MetaHuman skeleton.

- How can I make my MetaHuman talk or show facial expressions?

MetaHumans include a complete facial rig; you have several animation options:

- Use Unreal Engine’s Control Rig in Sequencer to keyframe expressions and lip-sync.

- Use MetaHuman Animator or Live Link Face to drive the face via performance capture.

- Use pre-made animation assets or the Pose Library for common expressions/visemes.

No extra rigging is needed—just animate with the provided tools.
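To make the keyframed lip-sync option more concrete, here is a minimal sketch of turning timed phonemes into viseme keyframes. Everything in it is an assumption for illustration: the phoneme labels, the viseme names, and the `viseme_keyframes` helper are hypothetical and do not correspond to MetaHuman pose names or to any Unreal Engine API. The resulting frame numbers are the kind of values you would then key by hand in Sequencer.

```python
# Hypothetical phoneme-to-viseme table; real pose names depend on your pose library.
PHONEME_TO_VISEME = {
    "M": "MBP", "B": "MBP", "P": "MBP",
    "F": "FV", "V": "FV",
    "AA": "AH", "IY": "EE", "UW": "OO",
}


def viseme_keyframes(timed_phonemes, fps=30, rest="REST"):
    """Convert (start_seconds, phoneme) pairs into (frame, viseme) keyframes.

    Unknown phonemes fall back to the rest pose, and consecutive
    duplicates are dropped so each key marks an actual mouth-shape change.
    """
    keys = []
    for start_seconds, phoneme in timed_phonemes:
        frame = round(start_seconds * fps)
        viseme = PHONEME_TO_VISEME.get(phoneme, rest)
        if keys and keys[-1][1] == viseme:
            continue  # same mouth shape as the previous key; no new key needed
        keys.append((frame, viseme))
    return keys


phonemes = [(0.0, "M"), (0.1, "AA"), (0.2, "P"), (0.25, "B"), (0.5, "ZZ")]
keys = viseme_keyframes(phonemes, fps=30)
print(keys)
```

Performance capture with MetaHuman Animator or Live Link Face replaces this kind of manual mapping entirely; the sketch only applies to the hand-keyed route.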

- Are MetaHuman characters optimized for game performance? Will they run at good frame rates?

They’re high-fidelity and require optimization like any detailed asset. Built-in LODs allow you to drop detail for distant characters, and you’ll typically use medium LOD for in-game heroes. Performance depends on your target hardware: high-end PCs/consoles handle a few detailed MetaHumans, while crowds/mobile require simpler builds (e.g., hair cards, fewer high-res textures).

- Where can I learn more or find step-by-step guidance on using MetaHuman Creator?

Start with Epic Games’ official MetaHuman documentation and tutorials on their website. Their YouTube channel offers video walkthroughs of the Creator and UE5 workflows. Community resources include tutorial sites like 80.lv, the Unreal Engine forums, and courses in Unreal Online Learning.

Conclusion

MetaHuman Creator has transformed high-fidelity digital human production by making it fast, accessible, and tightly integrated with Unreal Engine. This guide covered every step: cloud-based access, facial and body customization, exporting to Unreal Engine 5, and bringing characters to life with animation. It addressed practical concerns such as system requirements, body proportion editing, clothing options, and troubleshooting common issues like hair LOD quirks and export hiccups.

The workflow’s production viability was demonstrated—MetaHumans are free for Unreal projects, suitable for games and virtual productions, and extensible via Mesh to MetaHuman and external asset integrations. Compared to other tools, MetaHuman stands out for realism and ease-of-use though it is Unreal-centric, and it continues to evolve with UE5 updates like MetaHuman Animator, improved workflows, and new learning resources. By enabling rapid cloud-to-engine iteration, MetaHuman Creator empowers creators across game development, film, and VR to prototype and deploy hyper-realistic digital humans without specialist character teams.

Happy MetaHuman creating, and we look forward to seeing the high-fidelity characters and stories you bring to life!

Sources and Citations

- Epic Games – MetaHuman Creator Overview & Documentation (official docs on using MetaHuman Creator, system requirements, and features). Link: Epic Developer Community – MetaHuman Creator Overview

- Epic Games FAQ – Frequently Asked Questions: MetaHuman (licensing terms and usage constraints, e.g., MetaHumans for Unreal Engine only). Link: Epic Games – MetaHuman License FAQ

- Epic Games Blog – A Sneak Peek at MetaHuman Creator: High-Fidelity Digital Humans Made Easy (introducing MetaHuman, capabilities like quick creation under an hour). Link: Unreal Engine – MetaHuman Creator Sneak Peek

- Unreal Engine Forums – Community Q&A and Reports (discussions on limits and issues, e.g., session time limit ~1 hour, hair LOD disappearing issues). Link: Unreal Engine Forums – MetaHuman Community

- Epic Developer Community – MetaHuman Release Notes & Updates (information on new features in UE5 like Mesh to MetaHuman and MetaHuman Animator). Link: Epic Developer Community – What’s New in MetaHuman Documentation

- 80 Level Article – Step-by-Step Guide for UE5 MetaHuman Animator (community tutorial highlighting how to create a custom MetaHuman, demonstrating Mesh to MetaHuman and animation workflows). Link: 80.lv – UE5 MetaHuman Animator Guide

- iRender Blog – MetaHuman vs iClone: Character Creation Comparison (insights on MetaHuman’s strengths in realism, integration, and its limitations on customization and engine usage). Link: iRender Blog – MetaHuman vs iClone

- Blender Market – PixelHair for MetaHumans (product page explaining PixelHair features for Unreal & MetaHuman hair, e.g., hair cap, export to UE, customization). Link: Blender Market – PixelHair

- Epic Games – Animating MetaHumans Documentation (guides for using Control Rig, Sequencer, Live Link for MetaHuman animation in UE5). Link: Epic Developer Community – Animating MetaHumans

- ActionVFX – MetaHuman Creator Getting Started Guide 2023 (beginner-friendly overview and tips for using MetaHuman Creator with UE5, complementing official docs). Link: ActionVFX – MetaHuman Creator Getting Started 2023
