Creating a MetaHuman in UE5 involves designing a character in MetaHuman Creator and then importing it into Unreal Engine for animation or gameplay. The workflow breaks down into four steps:

- Access MetaHuman Creator: Sign in with an Epic Games account at metahuman.unrealengine.com and launch the cloud-based app in Chrome, Edge, Firefox, or Safari. Because the app is streamed, it requires a stable internet connection. First-time users may need to request access, which is often granted instantly.

- Customize Your Character: Select a preset and adjust facial features, body, skin, and clothing. Use sliders or blend presets for unique designs; the controls keep results realistic. Changes are saved to your account automatically as you work.

- Download to UE5: Use Quixel Bridge in UE5 to download your MetaHuman. Log in, select your character, and choose a quality level (e.g., High LOD). The assets include meshes, textures, and rigs, and the download ranges from hundreds of MB to a few GB.

- Animate or Use in Game: Drag the MetaHuman Blueprint into a level. Animate it via Sequencer for cinematics or possess it for gameplay. Because the character is fully rigged, it supports animation or mocap immediately.

MetaHuman Creator simplifies character creation. UE5 integration ensures seamless animation workflows.

What is MetaHuman Creator and how do I access it?

MetaHuman Creator is a free, cloud-streamed character creation tool from Epic Games that lets you craft photorealistic digital humans in a web browser. Rather than being installed locally, MetaHuman Creator runs on Epic’s cloud servers and streams its interface to your browser using Pixel Streaming. The heavy computation happens remotely, so the requirements on your end are minimal: Windows or macOS, a decent internet connection, and a supported browser such as Chrome, Edge, Firefox, or Safari (no high-end GPU is needed for the creator itself).

To access MetaHuman Creator, you will need an Epic Games account (the same one used for Unreal Engine or Fortnite). Once logged in, you can access the app by visiting the MetaHuman Creator website and clicking “Launch”. The first time, you might have to request access or join a waiting queue if the service is busy (wait times are usually short). After that, you’ll see a gallery of preset characters to choose from as a starting point.

Do I need an Epic Games account to create a MetaHuman?

Yes. You must log in with an Epic Games account to use MetaHuman Creator and to download your character. MetaHumans you create are tied to your Epic account in the cloud, so you can access your custom characters from any computer by logging in. If you don’t have an Epic account, you can create one for free on Epic’s website. To download assets, sign in to Quixel Bridge with the same account.

In short, without an Epic account you cannot use MetaHuman Creator or Bridge. The account is what keeps track of your MetaHuman library and ensures only you can access your custom models. The good news is the account and all MetaHuman tools are completely free (more on usage licensing in the FAQ).

How do I customize facial features in MetaHuman Creator?

Customizing facial features in MetaHuman Creator is very intuitive. Once you select a starting face (preset), you can modify it in two primary ways:

- Blend with Other Faces: MetaHuman Creator lets you blend shapes between different preset faces. For example, you can pick a base face, then choose a second face and morph certain features towards it. By clicking on areas of the face (like the nose, jaw, eyes, etc.), you can drag a gizmo that interpolates between various example faces. This system ensures any combination you create remains realistic (within plausible human ranges).

- Fine-Tune & Sculpt: After blending overall shapes, you can fine-tune details. MetaHuman Creator provides facial control sets for specific features. For example, you can adjust the skin texture and aging (make a face look younger or older by controlling wrinkle details), tweak the skin tone precisely with a color picker, and add elements like freckles, blemishes, or makeup. You can change the eye color and iris pattern from a realistic palette, choose different teeth models (the tool offers a few dental variations), and apply makeup like eyeliner or lipstick by selecting styles and colors.
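Epic does not publish the blending math, but the idea that blends stay "within plausible human ranges" can be illustrated with a toy model. The sketch below assumes a face is just a list of landmark positions and treats a blend as a normalised weighted average of presets; the preset names and coordinates are made up. Because the weights are non-negative and sum to 1, every blended landmark lands between the preset landmarks rather than outside them.

```python
# Toy model of preset blending. A "face" here is a short list of (x, y, z)
# landmark positions; real MetaHuman faces are far denser, and this is not
# Epic's actual blending method.
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Face = List[Point]


def blend_faces(presets: Dict[str, Face], weights: Dict[str, float]) -> Face:
    """Blend preset faces as a convex combination of their landmarks."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    # Normalise so the weights sum to 1: each blended landmark then lies
    # between the corresponding preset landmarks, never outside them.
    norm = {name: w / total for name, w in weights.items()}
    n_points = len(presets[next(iter(norm))])
    blended: Face = []
    for i in range(n_points):
        blended.append(tuple(
            sum(norm[name] * presets[name][i][axis] for name in norm)
            for axis in range(3)
        ))
    return blended


# Hypothetical two-landmark "faces": the left and right corners of the jaw.
presets = {
    "narrow_jaw": [(-4.0, 0.0, 0.0), (4.0, 0.0, 0.0)],
    "wide_jaw": [(-6.0, 0.0, 0.0), (6.0, 0.0, 0.0)],
}
print(blend_faces(presets, {"narrow_jaw": 1, "wide_jaw": 1}))
# [(-5.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
```

Moving the weight toward one preset (for example 3:1) pulls the jaw toward that preset's width, which mirrors dragging the blend gizmo toward a face with the feature you want.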

In practice, designing a face might go like this: choose a preset close to what you envision, then enter the blend mode to adjust the shape of the head, brow, eyes, nose, mouth, etc. For instance, you could widen the jaw or make the eyes larger by blending toward a preset that has those qualities. Then switch to detail mode to adjust skin and asset details – pick a darker or lighter skin tone, add freckles or scars for character.

Can I change body types, gender, and skin tone in MetaHuman Creator?

MetaHuman Creator gives you control over the entire character, not just the face. In the Body tab of the creator, you can adjust characteristics such as masculine or feminine body type, physique, and skin. Here’s how:

- Body Type and Gender: You can pick between masculine and feminine archetypes, three heights (short, average, tall), and three builds (Underweight, Medium, Overweight). Together these give 18 body presets: every height/build combination for each archetype. Selecting a different preset changes the character’s overall shape, and the height options correspond to real-world statures (e.g., about 5’3” for an average female or 5’8” for an average male).

- Skin Tone and Texture: In the Face or Skin settings, you have full control over skin complexion. You can pick a skin color using a color wheel and sliders, covering a wide range of realistic human complexions. MetaHuman skins come with high-resolution textures that include details like pores and wrinkles. The skin texture slider effectively controls age: low wrinkle detail makes the character look young and smooth, while high values add wrinkles and sunken features for an older appearance.
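The 18 body presets follow from simple combinatorics: two archetypes, three heights, and three builds give 2 × 3 × 3 = 18 combinations. This small sketch enumerates them; the label format is hypothetical and is not how MetaHuman Creator names its presets.

```python
from itertools import product
from typing import List

ARCHETYPES = ("masculine", "feminine")
HEIGHTS = ("short", "average", "tall")
BUILDS = ("underweight", "medium", "overweight")


def body_presets() -> List[str]:
    """Every archetype/height/build combination as a hypothetical label."""
    return [f"{a}-{h}-{b}" for a, h, b in product(ARCHETYPES, HEIGHTS, BUILDS)]


all_bodies = body_presets()
print(len(all_bodies))  # 18
print(all_bodies[0])    # masculine-short-underweight
```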

Overall, MetaHuman Creator is quite flexible in defining a character’s age, ethnicity, and body shape. While it doesn’t explicitly label “male/female” toggles, the body type selection inherently covers gender by offering masculine vs feminine forms, and all the secondary sex characteristics (like face shape subtleties, Adam’s apple, etc.) come from the face presets you choose.

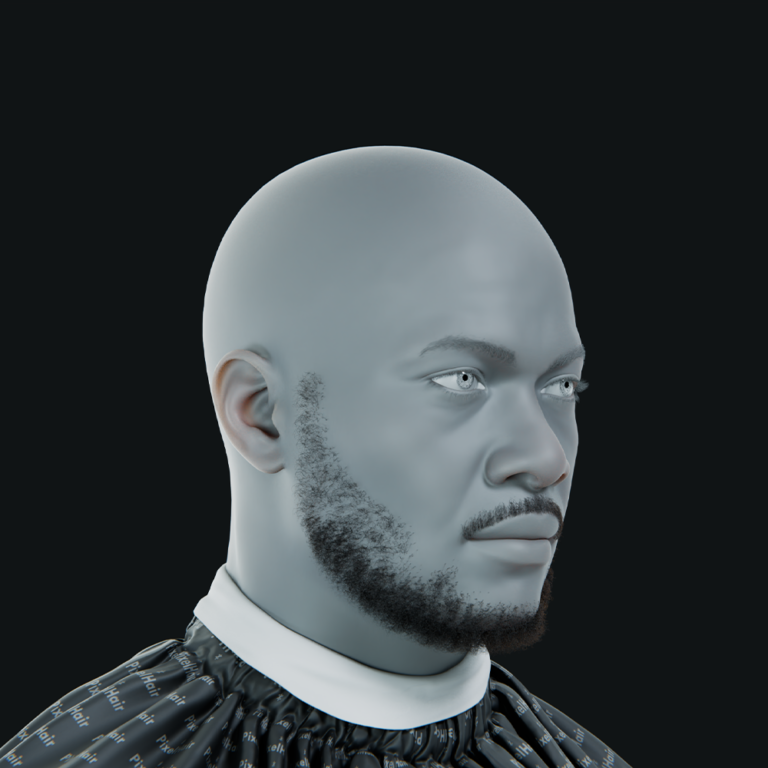

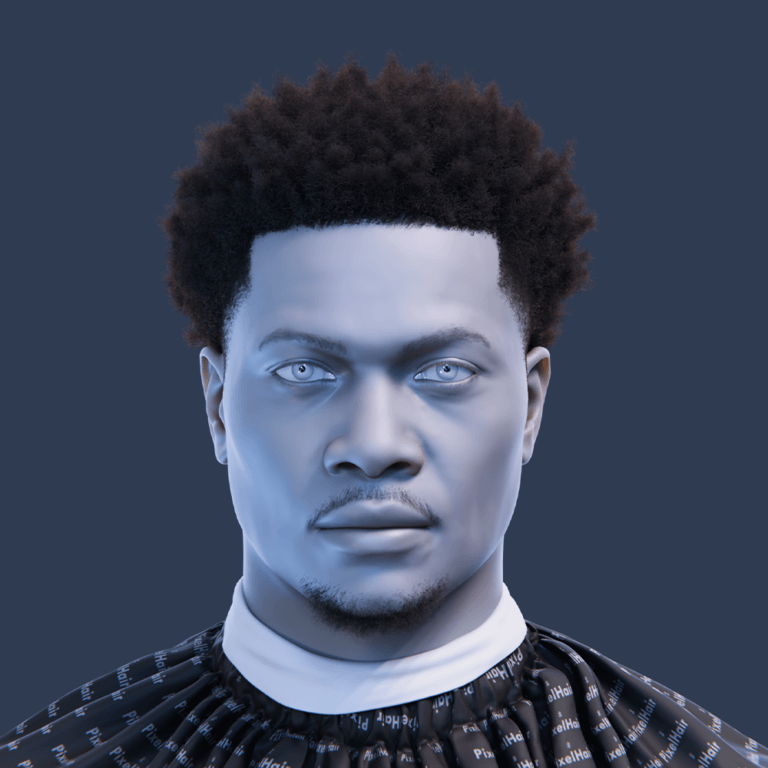

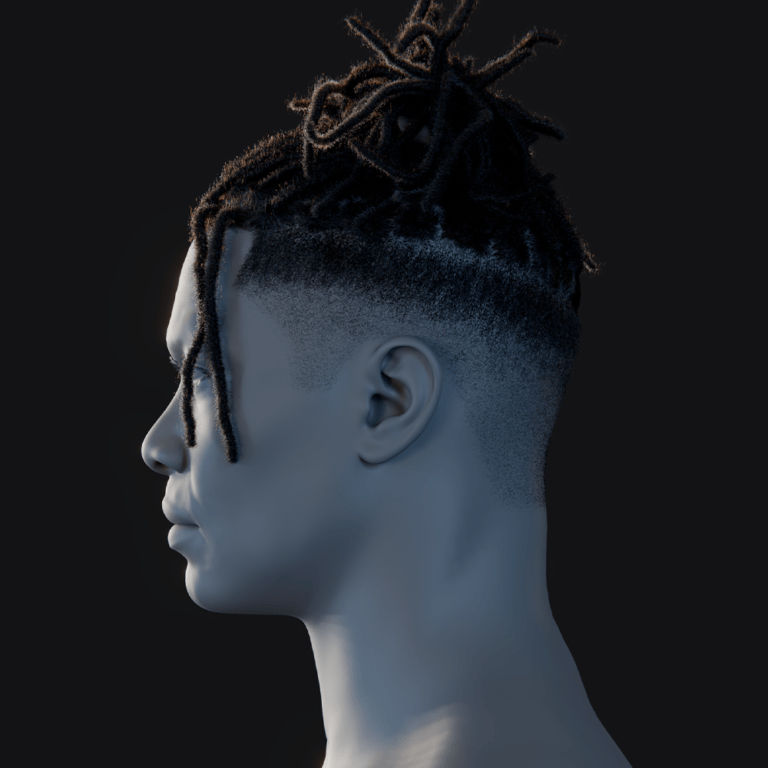

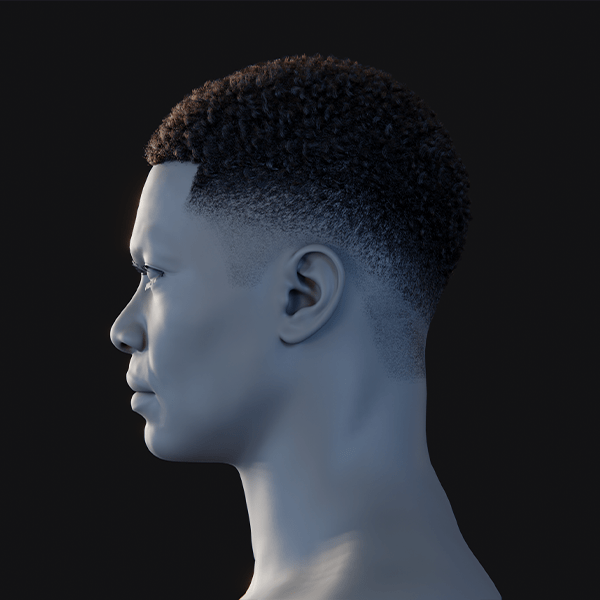

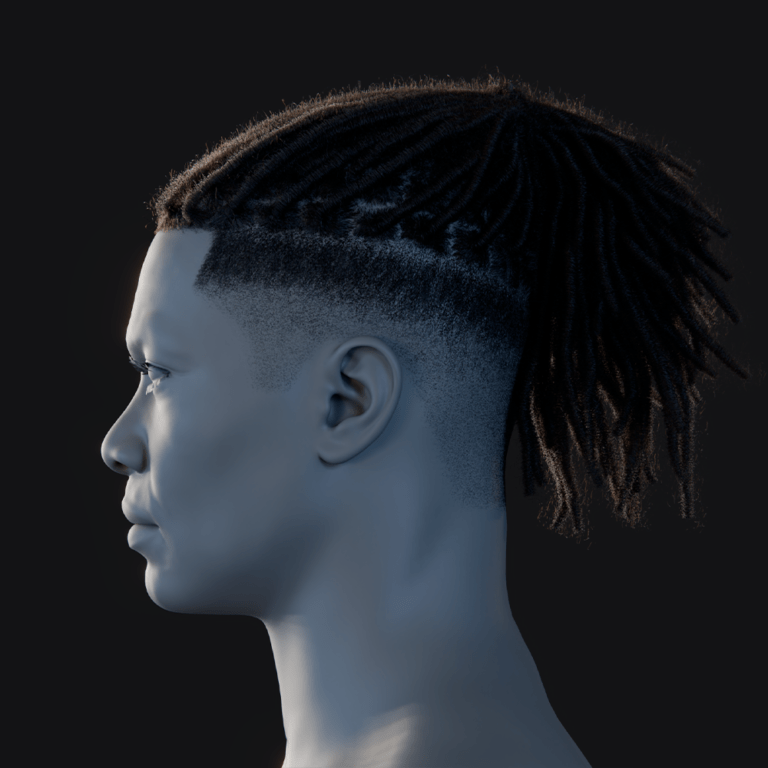

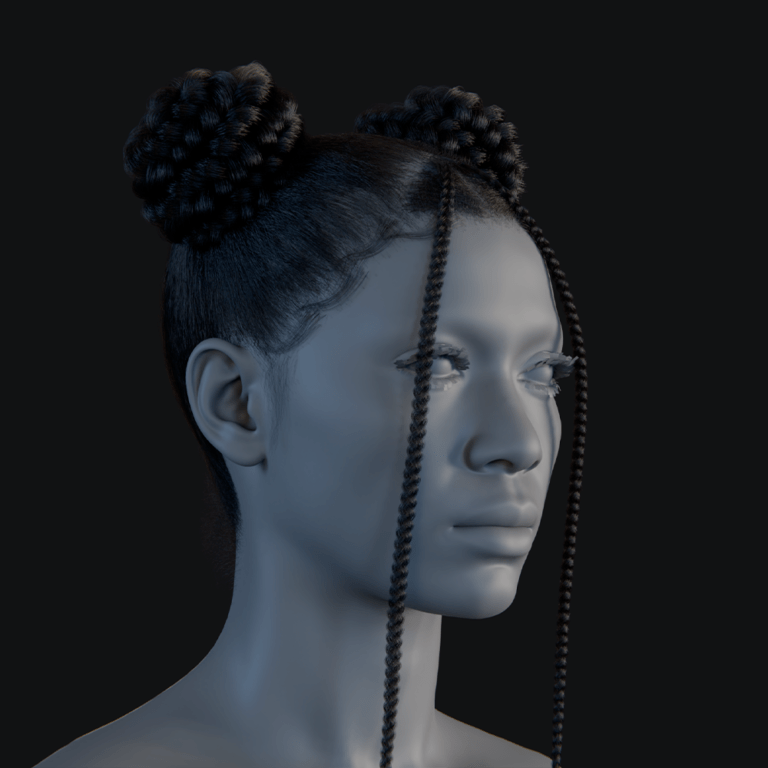

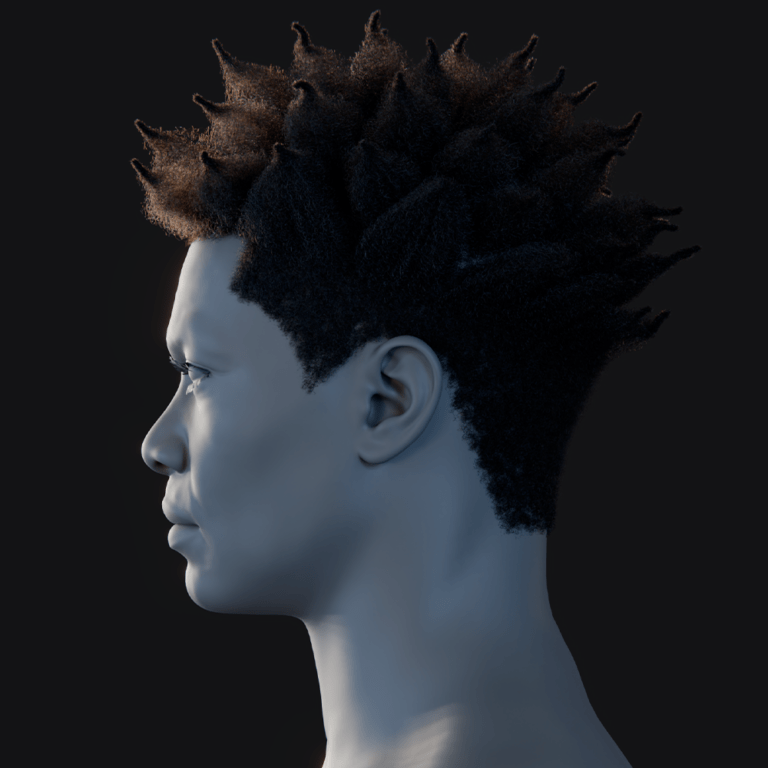

How can PixelHair be used to enhance your custom MetaHuman with realistic or stylized hair in Unreal Engine 5?

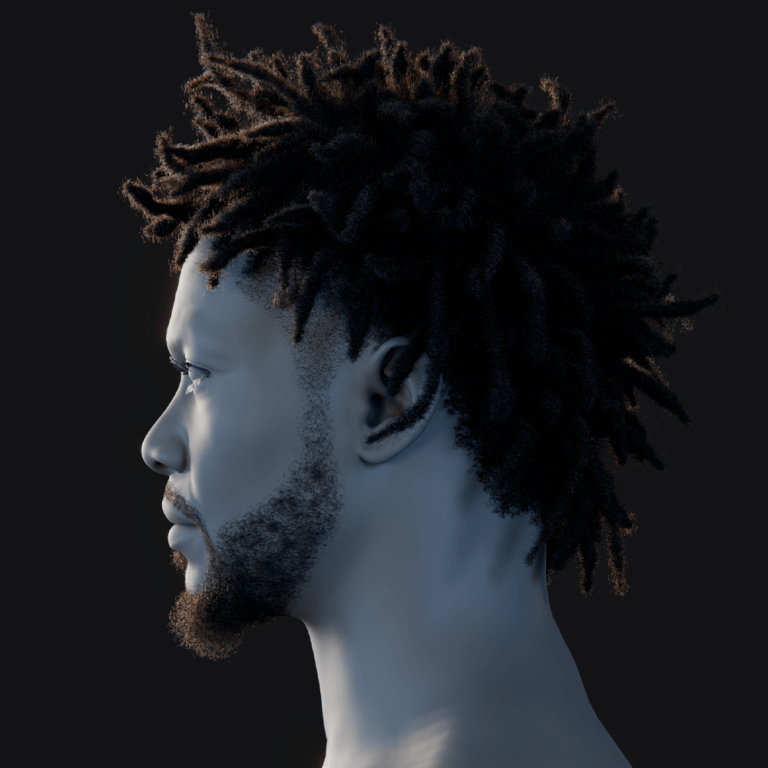

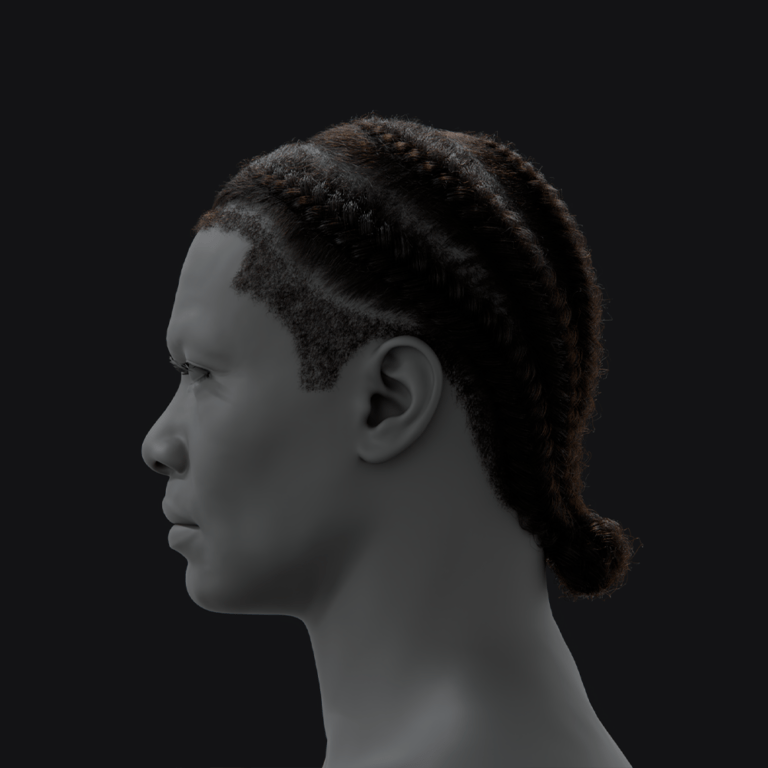

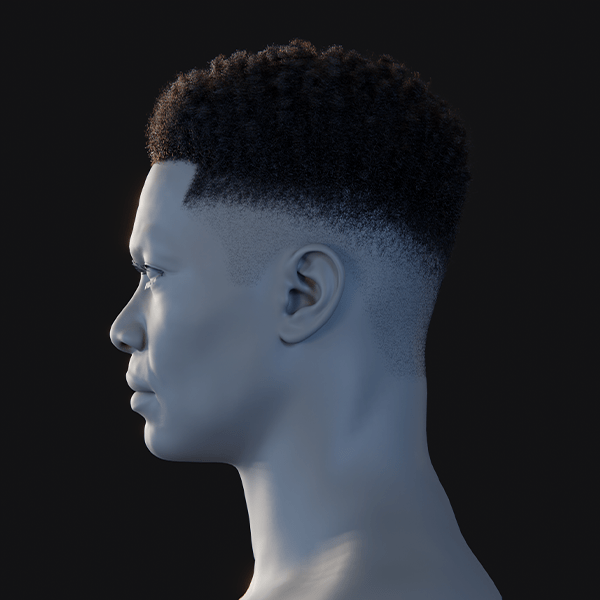

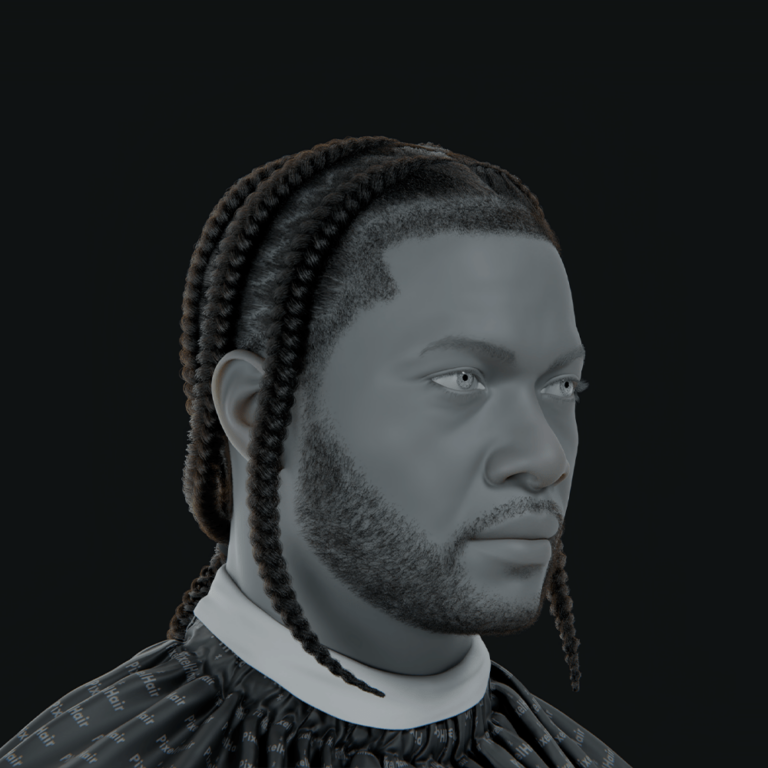

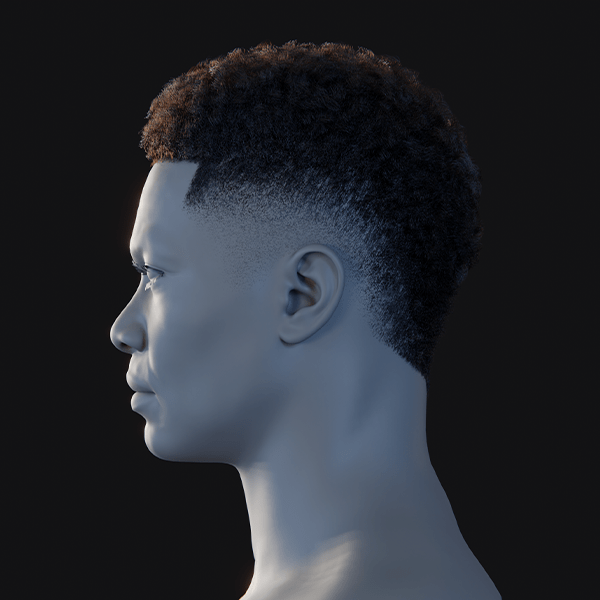

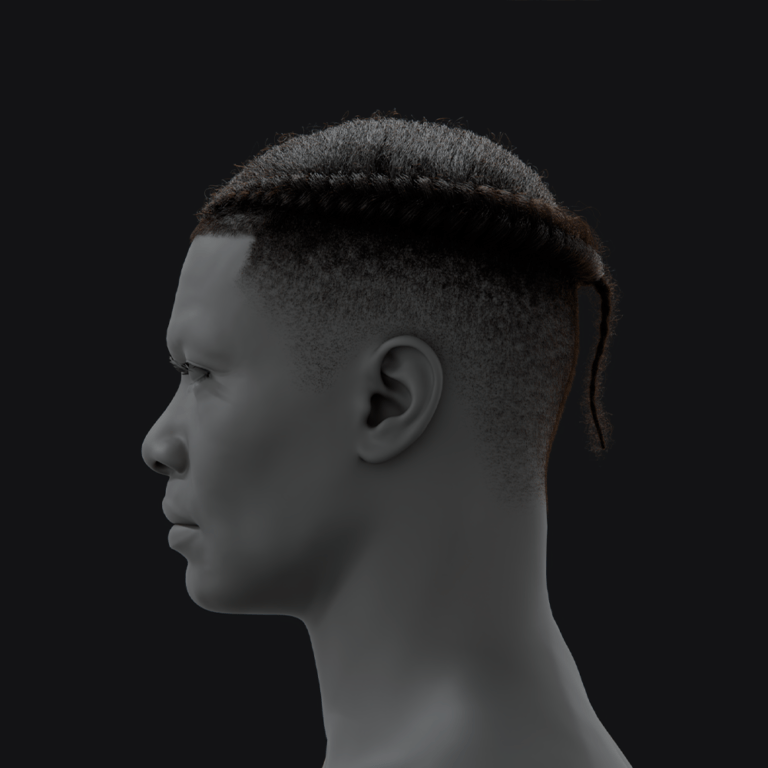

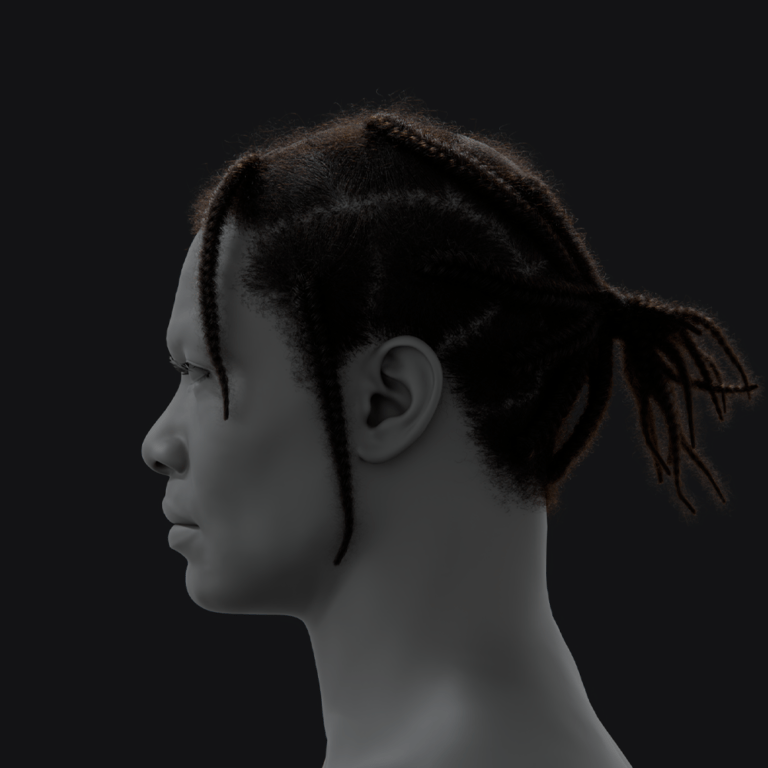

PixelHair provides high-quality hair grooms for MetaHumans, offering styles beyond default options:

- Getting PixelHair Grooms: Obtain PixelHair assets from Blender Market or ArtStation; each includes a Blender hair particle system and a hair cap. Styles like braids, afros, or fades support diverse or fantasy looks, and the assets are modeled for realistic strand-based rendering.

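- Exporting to Unreal: In Blender, use a shrinkwrap modifier to fit the hair cap to the MetaHuman’s head, then export the groom as an Alembic (.abc) file. Import it into UE5 as a Groom asset and assign it to the MetaHuman’s Groom component, replacing the default hair. Fitting the cap first keeps the hair aligned with the scalp so it renders correctly.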

- Realistic and Stylized Looks: Achieve realistic volume with accurate lighting or stylized effects like neon colors. Customize strand thickness, materials, or shapes for unique aesthetics, enhancing creative flexibility.

- Performance Consideration: High-fidelity grooms may impact performance in dense scenes. Use LODs or switch to hair cards for optimization in real-time applications. Ideal for cinematics or high-detail characters.

PixelHair enhances MetaHuman uniqueness. It delivers authentic or creative hairstyles for diverse projects.

How do I add clothing and accessories to my MetaHuman?

MetaHuman Creator includes a Clothing section where you can outfit your character with a selection of tops, bottoms, and shoes. These are preset clothing options provided by Epic (for example, shirts, jackets, pants, skirts, sneakers, boots, etc.). Within the creator, you can mix and match those to suit your character’s style and even customize the colors or patterns on the fabric.

To add clothing in MetaHuman Creator: go to the Body > Clothes section. You’ll find subcategories for Tops, Bottoms, and Shoes. Select a category and choose from the thumbnails (e.g. different shirt styles or pants styles). The chosen garment will appear on your character. You can then pick a material or color variant. The interface might offer a color palette or patterns (for example, you could choose a solid color for a t-shirt or add a printed pattern from their library).

Adding Accessories: The current version of MetaHuman Creator does not include a wide range of built-in accessories such as jewelry or glasses; it focuses on clothing. However, you can add accessories once the character is in Unreal Engine. After importing your MetaHuman into UE5, you can attach any static mesh (3D model) to the character’s skeleton. For example, to give your MetaHuman glasses, import a glasses model and attach it to the head bone of the MetaHuman skeleton, either in the Blueprint or via a socket.

How do I export my MetaHuman from the cloud to Unreal Engine 5?

Exporting MetaHumans to UE5 uses Quixel Bridge for seamless asset transfer:

- Save in Creator: Save your MetaHuman in MetaHuman Creator with a simple alphanumeric name (e.g., “JohnDoe01”) to avoid export issues. The character is stored in your online MetaHumans library, tied to your Epic Games account for access from any device.

- Open Quixel Bridge: Ensure the Quixel Bridge plugin is enabled in UE5 (Edit > Plugins). Access it via Content > Quixel Bridge in the editor. Log in with your Epic account to view your MetaHuman library in the MetaHumans section.

- Download MetaHuman: Select your character in Bridge and download assets (meshes, textures, rigs) at a chosen quality level, such as High LOD for cinematics. Download size ranges from hundreds of MB to a few GB, depending on complexity.

- Export to Project: Click Add/Export in Bridge to transfer assets to your open UE5 project’s Content Browser. Enable prompted plugins (e.g., MetaHuman, Control Rig, Groom) and restart UE5 if required to finalize the import.
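Epic’s exact naming rules are not spelled out here, so the following sketch assumes the safe rule suggested above: ASCII letters and digits only. The helper names `is_simple_name` and `suggest_name` are hypothetical; a check like this is meant to be run before you save the character in MetaHuman Creator.

```python
import re

# Assumed rule: only ASCII letters and digits, at least one character.
_SIMPLE_NAME = re.compile(r"[A-Za-z0-9]+")


def is_simple_name(name: str) -> bool:
    """True if the name contains only ASCII letters and digits."""
    return _SIMPLE_NAME.fullmatch(name) is not None


def suggest_name(name: str) -> str:
    """Drop every character that is not an ASCII letter or digit."""
    return re.sub(r"[^A-Za-z0-9]", "", name)


for candidate in ["JohnDoe01", "John Doe #1", "Zoë_v2"]:
    print(candidate, is_simple_name(candidate), suggest_name(candidate))
```

Spaces, punctuation, and accented letters are all stripped by `suggest_name`, so "John Doe #1" becomes "JohnDoe1".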

Bridge simplifies the cloud-to-UE5 workflow, and using simple names helps avoid export errors for smooth integration.

What are the steps to import a MetaHuman into an Unreal Engine project?

Importing MetaHumans into UE5 continues the export process via Quixel Bridge:

- Open UE5 Project: Use a UE5.0+ project configured for Desktop/Console to support MetaHuman’s high-end assets. Ensure the project is open in the UE5 editor before initiating the import process.

- Enable Plugins: Activate required plugins like Quixel Bridge, Groom, Control Rig, Live Link, and Rig Logic (Edit > Plugins). These may auto-enable during import when prompted by UE5, ensuring compatibility.

- Use Quixel Bridge: In Content > Quixel Bridge, download your MetaHuman and click Add/Export. This imports assets into the Content Browser under Content > MetaHumans > [YourMetaHumanName], including Blueprint and meshes.

- Verify Import: Check the Content Browser for BP_[YourMetaHumanName]. Drag the Blueprint into the level viewport to spawn the character, which includes idle animations like blinking or breathing for immediate use.
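The folder layout described above can be turned into a quick check. This sketch assumes the default Content > MetaHumans location (Unreal’s `/Game` path maps to the project’s Content folder) and a list of asset paths you have copied from the Content Browser; the function names are hypothetical and the code does not call Unreal’s own Python API.

```python
from typing import Dict, Iterable


def expected_paths(name: str) -> Dict[str, str]:
    """Folder and Blueprint package paths the import is expected to create."""
    folder = f"/Game/MetaHumans/{name}"
    return {"folder": folder, "blueprint": f"{folder}/BP_{name}"}


def blueprint_imported(name: str, asset_paths: Iterable[str]) -> bool:
    """True if BP_<name> appears among the given asset paths."""
    bp = expected_paths(name)["blueprint"]
    # Object paths may carry a ".BP_<name>" suffix, so compare the package part only.
    return any(path.split(".")[0] == bp for path in asset_paths)


copied = ["/Game/MetaHumans/JohnDoe01/BP_JohnDoe01.BP_JohnDoe01"]
print(expected_paths("JohnDoe01")["blueprint"])  # /Game/MetaHumans/JohnDoe01/BP_JohnDoe01
print(blueprint_imported("JohnDoe01", copied))   # True
```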

Bridge handles imports efficiently. Once imported, MetaHumans are fully functional for animation or gameplay without further internet dependency.

What plugins are required to use MetaHuman in UE5?

When you import a MetaHuman into Unreal Engine 5, the engine will enable a set of specific plugins and project settings to ensure the character works correctly. The required plugins (as of UE 5.x) are:

- Megascans/Quixel Bridge: Allows Unreal to import assets from Quixel Bridge seamlessly. This is used to bring in the MetaHuman content from the cloud.

- Groom (Hair Strands): Enables the engine to render and simulate strand-based hair and fur. MetaHumans use Groom assets for hair, eyebrows, and eyelashes, so this must be enabled to see the hair.

- Control Rig: Provides the node-based rigging system for animation. MetaHumans come with control rigs for body and face, which require this plugin to be on.

- Rig Logic: This plugin is specific to MetaHumans (from 3Lateral’s technology). It drives the facial rig’s internal logic (the deformation behavior that links the high-fidelity face to the underlying controls).

In summary, Unreal will prompt for all needed plugins when you first export a MetaHuman to the project. If you want to manually ensure everything is ready, you can enable the above plugins yourself. After enabling, you must restart the editor for them to take effect.
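To check the plugins outside the editor, you can read the project’s `.uproject` file, a JSON file whose `Plugins` array lists entries with `Name` and `Enabled` fields. The internal plugin names in this sketch (`Bridge`, `HairStrands`, `ControlRig`, `RigLogic`) are assumptions to verify in Edit > Plugins for your engine version. Plugins that are enabled by default may not appear in the file at all, so a "missing" result means "not explicitly enabled", not necessarily "disabled".

```python
import json
from typing import List

# Assumed internal plugin names mapped to the display names used above.
REQUIRED = {
    "Bridge": "Quixel Bridge",
    "HairStrands": "Groom",
    "ControlRig": "Control Rig",
    "RigLogic": "Rig Logic",
}


def missing_plugins(uproject_text: str) -> List[str]:
    """Display names of required plugins not explicitly enabled in a .uproject."""
    data = json.loads(uproject_text)
    enabled = {
        p.get("Name") for p in data.get("Plugins", []) if p.get("Enabled", False)
    }
    return [label for name, label in REQUIRED.items() if name not in enabled]


sample = """{
  "FileVersion": 3,
  "Plugins": [
    {"Name": "ControlRig", "Enabled": true},
    {"Name": "HairStrands", "Enabled": true}
  ]
}"""
print(missing_plugins(sample))  # ['Quixel Bridge', 'Rig Logic']
```

In practice you would pass the contents of `YourProject.uproject` instead of `sample`.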

How do I set up a MetaHuman character for animation in Unreal Engine 5?

Setting up a MetaHuman for animation in UE5 is straightforward with its pre-configured rig:

- Drag into Level: Locate BP_[YourCharacterName] in the Content Browser and drag it into the viewport. The Blueprint includes the skeletal mesh and an Animation Blueprint that plays idle facial animations like blinking.

- Sequencer Setup: Create a Level Sequence (Cinematics > Add). Add the MetaHuman via + Track > Actor to Sequencer. Use Body and Face Control Rig tracks for keyframing limbs, torso, or expressions.

- Live Link/Mocap: Enable the Live Link plugin and stream data from sources like Live Link Face (facial) or Rokoko (body) to the Control Rig. This supports real-time performance capture for dynamic animation.

- Animation Blueprint: Retarget UE mannequin animations to the MetaHuman skeleton using UE5’s retargeting tool. Modify the AnimBP for gameplay animations like running or jumping, leveraging the Epic Skeleton compatibility.

The pre-built Control Rig simplifies animation tasks. Sequencer and Live Link enable both cinematic keyframing and real-time mocap for versatile workflows.

Can I use MetaHuman Animator for facial capture and performance?

MetaHuman Animator, introduced with Unreal Engine 5.2, is a powerful tool for capturing facial performances and applying them to MetaHuman characters with high fidelity. It simplifies facial motion capture by using video from devices like an iPhone or a stereo camera pair, with no markers or specialized rigs required.

What is MetaHuman Animator?

MetaHuman Animator is integrated into the MetaHuman Plugin for Unreal Engine 5, alongside Mesh to MetaHuman. It converts video or audio input into detailed facial animations, capturing expressions, lip sync, and eye movements. Using machine learning, it processes data from devices like an iPhone’s TrueDepth camera for real-time or offline results. This makes high-quality facial animation accessible without complex setups.

How to use MetaHuman Animator:

The process involves recording an actor’s performance and processing it in Unreal:

- iPhone capture: The Live Link Face or MetaHuman Animator app records video, depth data from the iPhone’s TrueDepth sensor, and audio. Performances can be streamed or recorded and are then processed in Unreal, where the data is mapped to the MetaHuman’s facial rig to produce an animation that mirrors the actor’s performance. The setup is user-friendly and requires only a recent iPhone; Epic showcased it at GDC 2023 with sample apps.

- Stereo camera capture: Dual-camera setups, common in VFX for precise tracking, record facial footage from two angles. The footage is processed later in Unreal: you create a MetaHuman Identity asset to align the performance, and the system turns the dual-angle input into animation curves for MetaHuman’s rig. This method suits professional studios that need detailed captures.

- Processing in Unreal: Create a MetaHuman Identity asset to calibrate tracking of the actor’s facial features. MetaHuman Animator then analyzes the video with machine learning and generates animation curves for the facial controls. Processing is fast, and the result is an animation sequence that matches the actor’s lip sync and expressions.

- Real-time capability: Record a performance and see the MetaHuman mimic it almost instantly, which allows quick iteration in the Unreal editor. No large mocap stages or coding are needed; the workflow is designed for artists and lets small teams create lifelike animations efficiently.

Using MetaHuman Animator for voice acting/dialogue:

MetaHuman Animator’s Audio-to-Facial Animation, introduced in UE 5.5, generates facial animations from audio clips:

- Audio-driven animation: Import a voice recording as a SoundWave asset. MetaHuman Animator analyzes the audio and generates viseme-based lip, jaw, and expression animation that matches the speech, automating lip sync for dialogue-heavy cutscenes and voiceovers. Manual tweaks can then add emotional nuance and realism.

The toolset requires Unreal Engine 5.2+ (5.5 for audio features) and the MetaHuman plugin enabled. Hardware recommendations include an i7 6700/Ryzen 5 2500X CPU, 32GB RAM, and RTX 2070/RX 5500 XT GPU, with an iPhone 12+ for capture. MetaHuman Animator democratizes performance capture, enabling small teams to create convincing digital actors for cinematics or games.

How do I animate my MetaHuman using Control Rig in UE5?

Control Rig in Unreal Engine 5 allows precise animation of MetaHumans without motion capture, using a rigging interface similar to Maya. Here’s how to animate a MetaHuman step-by-step using Control Rig:

- Add to Sequencer: In the Level Sequencer, add the MetaHuman Blueprint and a Control Rig track. Epic provides preset rigs, MetaHuman_ControlRig for the body and Face_ControlBoard_CtrlRig for the face, which are usually assigned automatically when you add a MetaHuman. If they are not, assign the Control Rig class manually.

- Familiarize with Controls: Select the MetaHuman in the viewport to see its rig controls (e.g., wrist, elbow, hip). The body uses 3D widgets with both IK and FK controls, while facial controls are accessed via the Control Rig panel or a 2D Picker widget that groups complex blendshapes into sliders.

- Posing the Character: Pose the MetaHuman at different frames to create an animation, such as a wave:

  - Frame 0 pose: Select the arm control, position it down, and set a keyframe (manually or with auto-key enabled). This establishes the starting pose.

  - Frame 20 pose: Move the arm up to a raised position and set another keyframe. Sequencer interpolates between the two keys, so the wave appears on playback; adjust the timing as needed.

- Control types: The rig includes IK for hands and feet, FK for the spine and head, and facial controls like JawOpen or Smile. Keyframe these for body poses, expressions, or lip sync to build detailed performances.

- Using the Facial Control Board: The facial rig exposes sliders for controls like JawOpen or BrowDown. Keyframe them for speech or emotions; manual lip sync means matching mouth shapes to the phonemes in the dialogue.

- Set Keyframes and Polish: Key multiple controls together for coherent poses, adjust timing and add in-between poses in Sequencer, and use the Curve Editor to smooth motion and fine-tune easing.

- Use Auxiliary Tools: Leverage additional features for efficiency:

  - Pose Library: Epic’s premade facial expressions (e.g., Happy, Frown) can be applied and then tweaked, which reduces manual keyframing in iterative workflows.

  - IK/FK Snap: Switch the arms between IK and FK to get pinned or arcing motions, with smooth transitions between the two modes.

  - Bake Animation: Bake mocap or existing animations onto the Control Rig to edit them, then bake back to animation assets. This supports hybrid pipelines that mix mocap and keyframing.
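To see what Sequencer does between the frame 0 and frame 20 keys of the wave, here is a simplified model of a single keyed channel. Sequencer’s real curves are typically cubic, with tangents you can edit in the Curve Editor; this sketch substitutes plain linear interpolation and a smoothstep ease-in/ease-out as stand-ins, and the arm-raise values in degrees are hypothetical.

```python
from bisect import bisect_right
from typing import List, Tuple

Key = Tuple[float, float]  # (frame, value)


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve: slow at both ends, fastest in the middle."""
    return t * t * (3.0 - 2.0 * t)


def evaluate(keys: List[Key], frame: float, ease: bool = False) -> float:
    """Value of a keyed channel at `frame`; keys must be sorted by frame."""
    # Before the first key or after the last, hold the boundary value.
    if frame <= keys[0][0]:
        return keys[0][1]
    if frame >= keys[-1][0]:
        return keys[-1][1]
    # Find the pair of keys that surrounds `frame`.
    i = bisect_right([f for f, _ in keys], frame)
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    if ease:
        t = smoothstep(t)
    return v0 + (v1 - v0) * t


# Hypothetical arm-raise channel: arm down at frame 0, raised 90 degrees at frame 20.
arm_raise = [(0.0, 0.0), (20.0, 90.0)]
print(evaluate(arm_raise, 10))            # 45.0
print(evaluate(arm_raise, 5))             # 22.5
print(evaluate(arm_raise, 5, ease=True))  # 14.0625
```

The eased value at frame 5 is lower than the linear one because the motion starts slowly, which is the kind of shaping you do by hand in the Curve Editor.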

Control Rig enables precise, Maya-like animation within Unreal, ideal for custom performances in cinematics. For example, to animate a MetaHuman turning, smiling, and waving, key the torso, facial controls, and arm IK in Sequencer, adjusting timing for a natural result.

Can I use MetaHuman in gameplay, cinematics, or virtual production?

MetaHumans are versatile for gameplay, cinematics, and virtual production, with tools like LODs ensuring real-time performance. Their high fidelity suits various Unreal Engine applications, though optimization is key for large-scale projects.

Gameplay (Real-time in Games):

- LODs for performance: MetaHumans have 8 LODs, from LOD0 for close-ups to LOD7 for distant characters. The most detailed LODs use strand-based hair and 8K textures, delivering cinematic quality up close, while lower LODs switch to hair cards and simpler assets to maintain frame rates. LODSync keeps transitions smooth, and groom physics can be reduced for distant characters.
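Unreal chooses a LOD based on how large the character appears on screen. The sketch below models that choice with eight made-up screen-size thresholds (LOD0 to LOD7) and an assumed cut-off after which hair switches from strands to cards; the real thresholds are set per mesh in the engine, and LODSync keeps body, face, and hair on matching LODs.

```python
from typing import List

# Hypothetical screen-size thresholds for LOD0..LOD7 (roughly, the fraction of
# the screen the character's bounds cover). Real values are set per mesh.
LOD_SCREEN_SIZES: List[float] = [0.5, 0.35, 0.25, 0.15, 0.1, 0.06, 0.03, 0.0]
# Hypothetical cut-off: strand grooms up to this LOD, hair cards beyond it.
LAST_STRAND_LOD = 1


def pick_lod(screen_size: float) -> int:
    """Return the most detailed LOD whose threshold the screen size reaches."""
    for lod, threshold in enumerate(LOD_SCREEN_SIZES):
        if screen_size >= threshold:
            return lod
    return len(LOD_SCREEN_SIZES) - 1


def hair_mode(lod: int) -> str:
    """Which hair representation this (hypothetical) setup uses at a LOD."""
    return "strands" if lod <= LAST_STRAND_LOD else "cards"


for size in (0.8, 0.3, 0.01):
    lod = pick_lod(size)
    print(size, lod, hair_mode(lod))
```

A character filling most of the screen gets LOD0 with strands, while a distant one drops to LOD7 with cards, which is the trade-off the bullet above describes.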

Cinematics:

- High-quality rendering: With LOD0, ray-traced lighting, and realistic shaders, MetaHumans achieve film-quality results in Sequencer or in offline renders, and supersampling further improves pre-rendered output. Short films use MetaHumans to reach AAA-level visuals.

Virtual Production:

- Real-time rendering: MetaHumans serve as digital doubles in live broadcasts or LED wall shoots, with Live Link driving real-time performances from mocap. High-end GPUs keep frame rates smooth, and lower LODs optimize background characters in multi-character scenes.

MetaHumans are real-time ready, as shown in The Matrix Awakens demo with crowds on consoles. For large-scale games, optimize LODs and materials; for cinematics, push quality settings; for virtual production, ensure robust hardware and Live Link setup.

How do I apply voice acting or dialogue to my MetaHuman?

Adding dialogue to a MetaHuman involves syncing facial animations to audio for realistic speech. Multiple methods achieve this in Unreal Engine:

- MetaHuman Animator (Audio-to-Facial): Import a voice clip as a SoundWave asset, and MetaHuman Animator generates lip sync and jaw animation from it. It is efficient for basic visemes but may need manual tweaks for emotion, which makes it well suited to quick dialogue integration in cutscenes.

- Live Link Face: Use the Live Link Face iPhone app to capture an actor’s dialogue performance, streaming facial movement and audio to Unreal in real time, and record the take in Sequencer with Take Recorder. This captures nuanced expressions for highly realistic dialogue animation.

- Manual Keyframe Animation: Import the audio into Sequencer and manually animate the facial controls to match its visemes, adjusting mouth shapes for phonemes (e.g., “EE”, “OO”). The pose library speeds this up, and the method offers full control for detailed, customized lip sync.

- Third-Party Plugins: Tools like Replica Studios or FaceFX generate lip sync from audio or text and are alternatives if MetaHuman Animator isn’t suitable, though Epic’s built-in solution is usually more tightly integrated. Whichever method you use, attach audio playback to the character so the sound is spatialized correctly.
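For the manual keyframe route, the first step is deciding which mouth shape to key at which frame. This sketch assumes you already have phoneme timings (for example from a transcript aligner) and maps them to a handful of hypothetical viseme pose names you would store in the pose library; the mapping table is illustrative, not Epic’s.

```python
from typing import List, Tuple

# Hypothetical phoneme -> mouth-shape groups; rename the values to match the
# poses you actually keep in your pose library.
VISEME_FOR = {
    "iy": "EE", "ih": "EE",
    "uw": "OO", "ow": "OO",
    "aa": "AA", "ae": "AA",
    "m": "MBP", "b": "MBP", "p": "MBP",
    "f": "FV", "v": "FV",
}


def viseme_keys(phonemes: List[Tuple[str, float]], fps: int = 30) -> List[Tuple[int, str]]:
    """Turn (phoneme, start_seconds) pairs into (frame, viseme) keys."""
    keys: List[Tuple[int, str]] = []
    for phoneme, start in phonemes:
        viseme = VISEME_FOR.get(phoneme, "rest")  # unknown sounds fall back to a rest pose
        frame = round(start * fps)
        if keys and keys[-1][1] == viseme:
            continue  # same mouth shape is already keyed; skip the redundant key
        keys.append((frame, viseme))
    return keys


# "moo, bee" as m-uw-b-iy with made-up timings, at 30 fps.
print(viseme_keys([("m", 0.0), ("uw", 0.1), ("b", 0.3), ("iy", 0.4)]))
# [(0, 'MBP'), (3, 'OO'), (9, 'MBP'), (12, 'EE')]
```

Each resulting pair tells you where to apply a pose in Sequencer; you would still shape the in-betweens and add emotion by hand.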

For realism, add body gestures via mocap or manual animation. MetaHuman Animator’s audio-to-animation is efficient, while live capture with Live Link Face provides emotive performances for cutscenes or live puppeteering.

What is the difference between Mesh to MetaHuman and MetaHuman Creator?

MetaHuman Creator and Mesh to MetaHuman are distinct tools for creating MetaHuman characters; they serve different purposes but can be used together.

- MetaHuman Creator: A cloud-based browser app (requiring an Epic account) where you start from preset characters and adjust them with blends and sliders to create realistic, rigged MetaHumans. It is fast, ideal for crafting characters from Epic’s library, and works like an advanced character creator.

- Mesh to MetaHuman: An Unreal Engine plugin feature that converts a custom 3D head mesh (e.g., from a scan) into a MetaHuman. You import the mesh, align landmarks so features map accurately, and process it via the cloud. The result is a rigged MetaHuman resembling the input, which makes it ideal for specific likenesses.

Key differences and use cases:

- Input: Creator uses presets and sliders and is limited to combinations of Epic’s features, which keeps it user-friendly for general character creation. Mesh to MetaHuman requires a user-provided mesh, such as a scanned or sculpted head.

- Complexity vs. Specificity: Creator is simple and fast but less precise for unique faces. Mesh to MetaHuman prioritizes precision and can achieve accurate likenesses from scans, which makes it the better choice for replicating real people.

- Process: Creator involves interactive tweaking in a browser and requires no engine setup. Mesh to MetaHuman runs inside Unreal: you import a mesh, create a MetaHuman Identity, and submit it for cloud processing.

- Refinement: The tools complement each other. Creator alone may not achieve a specific likeness, so use Mesh to MetaHuman to set the initial likeness of the face, then refine skin tone, hair, and body in Creator.

Both produce rigged MetaHumans for Unreal Engine. Creator is generative for quick results; Mesh to MetaHuman is projective for custom inputs. For example, scan your face for Mesh to MetaHuman, then tweak in Creator for a digital twin.

How can I create a MetaHuman that looks like me?

Creating a MetaHuman resembling yourself involves either scanning your face or manually adjusting presets. Here are the approaches:

- Use Mesh to MetaHuman (Scan Yourself): Create a 3D head mesh with a smartphone app such as PolyCam or with photogrammetry. Import it into Unreal, align the landmarks, and process it with Mesh to MetaHuman to get a rigged MetaHuman matching your likeness, then refine skin tone and hair in Creator.

- Manual Recreation in MetaHuman Creator: Start with the preset closest to your features, then blend presets and adjust sliders for skin tone, hair, and details, using reference photos for accuracy. This method is less precise but needs no scanning, so it is accessible to non-technical users.

- External Modeling: Sculpt a head from photos in Blender or ZBrush and import the mesh into Mesh to MetaHuman. This works like scanning but is hand-crafted, giving users with modeling skills high control over details.

- From Photos: Use a third-party tool such as KeenTools FaceBuilder in Blender to build a head mesh from a few photos, then process it with Mesh to MetaHuman. The workflow is more technical but works with limited images; Epic doesn’t offer a single-photo solution.

After creating the MetaHuman, customize hair and clothing in Creator to match your style. Mesh to MetaHuman is the most accurate method, while Creator’s manual adjustments are a practical fallback.
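
Which route to take depends mostly on what you already have. As a rough illustration, the choice can be written as a small Python function. The field names and return strings are hypothetical, and the ordering simply mirrors this article's ranking: a real scan first, manual recreation in Creator as the fallback.

```python
from dataclasses import dataclass


@dataclass
class LikenessInputs:
    has_head_scan: bool  # a head mesh from a phone scanning app or photogrammetry
    photo_count: int     # reference photos of your face
    can_sculpt: bool     # comfortable sculpting in Blender or ZBrush
    uses_blender: bool   # willing to run a photo-based tool such as FaceBuilder


def choose_likeness_method(inputs: LikenessInputs) -> str:
    """Pick an approach, preferring the more accurate routes described above."""
    if inputs.has_head_scan:
        # A real scan gives Mesh to MetaHuman the most accurate input.
        return "Mesh to MetaHuman from a scan"
    if inputs.photo_count > 0 and inputs.can_sculpt:
        # Hand-sculpted from photos, then converted the same way as a scan.
        return "External modeling, then Mesh to MetaHuman"
    if inputs.photo_count > 0 and inputs.uses_blender:
        # A photo-based head mesh (e.g. FaceBuilder), then Mesh to MetaHuman.
        return "Photo-based mesh, then Mesh to MetaHuman"
    # Fallback: blend presets and adjust sliders by eye in Creator.
    return "Manual recreation in MetaHuman Creator"
```

For example, someone with three photos who is happy to use Blender but does not sculpt would get the photo-based mesh route.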

What are the system requirements for using Metahuman with Unreal Engine 5?

MetaHuman Creator runs in the cloud, requiring only a modern browser and decent internet on Windows or macOS. A larger monitor helps for detailed editing, but no high-end hardware is needed.

For Unreal Engine 5 with MetaHumans, a robust PC is essential due to high-poly meshes and textures:

- CPU: Epic suggests an Intel Core i7-6700 or AMD Ryzen 5 2500X (4 cores/8 threads) as the minimum, which handles basic MetaHuman tasks. For better performance, a Core i9 or Ryzen 9 is ideal: extra cores speed up heavy rendering work in complex scenes and keep the editor responsive.

- RAM: 32 GB is the practical minimum for comfortable work; 16 GB may cause slowdowns, because MetaHuman textures consume significant memory. 64 GB is recommended for scenes with multiple MetaHumans or for MetaHuman Animator, since memory demands scale with project complexity.

- GPU: The minimum is an NVIDIA RTX 2070 or AMD Radeon RX 5500 XT (6+ GB VRAM); an RTX 3080 or AMD Radeon RX 6800 XT (10+ GB VRAM) is recommended. The GPU drives real-time rendering and Lumen, and more VRAM leaves headroom for ray tracing and cinematic-quality output.

- Storage: An SSD, preferably NVMe, is critical. MetaHuman assets are large, and mechanical drives cause slow load times; an SSD drastically reduces asset loading and keeps the project responsive.

- Operating System: Windows 10/11 is required for the MetaHuman plugin. UE5 itself runs on macOS, but the plugin does not, so Windows is the primary platform if you want every MetaHuman feature.

For MetaHuman Animator specifically:

- Hardware: The recommended setup is a 16-core CPU, 64 GB RAM, and an RTX 3080, which ensures smooth capture and processing; the minimum is an 8-thread CPU, 32 GB RAM, and an RTX 2070, which handles basic captures. Animator's machine-learning processing is resource-intensive, so high-end hardware matters most for live capture.

Lower-spec systems can work with optimizations (e.g., lower LODs, no ray tracing), but a high-end PC (Ryzen 9/i9, 64GB RAM, RTX 3080/4080, NVMe SSD) is ideal for development and cinematic rendering. Scalability allows experimentation on mid-range hardware with simpler scenes.
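
To compare a machine against the figures above, it can help to write them down as data. The sketch below does that in plain Python. GPUs are reduced to their VRAM, and a "16-core CPU" is counted as a floor of 16 threads; both are simplifications made for this example. The tier names are ours, not Epic's, and the script does not read your hardware: you enter the numbers yourself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    cpu_threads: int
    ram_gb: int
    vram_gb: int


# Thresholds transcribed from the lists above. GPUs are reduced to VRAM only,
# and a "16-core CPU" is counted as a floor of 16 threads (an assumption).
TIERS = {
    "UE5 minimum": Spec(cpu_threads=8, ram_gb=32, vram_gb=6),
    "Animator minimum": Spec(cpu_threads=8, ram_gb=32, vram_gb=8),        # RTX 2070: 8 GB
    "Animator recommended": Spec(cpu_threads=16, ram_gb=64, vram_gb=10),  # RTX 3080: 10 GB
}


def shortfalls(machine: Spec, target: Spec) -> list[str]:
    """List every component where the machine falls below the target tier."""
    gaps = []
    if machine.cpu_threads < target.cpu_threads:
        gaps.append(f"CPU threads: {machine.cpu_threads} < {target.cpu_threads}")
    if machine.ram_gb < target.ram_gb:
        gaps.append(f"RAM: {machine.ram_gb} GB < {target.ram_gb} GB")
    if machine.vram_gb < target.vram_gb:
        gaps.append(f"VRAM: {machine.vram_gb} GB < {target.vram_gb} GB")
    return gaps


if __name__ == "__main__":
    # Example machine: an 8-core/16-thread CPU, 32 GB RAM, and an 8 GB GPU.
    mine = Spec(cpu_threads=16, ram_gb=32, vram_gb=8)
    for tier, spec in TIERS.items():
        gaps = shortfalls(mine, spec)
        print(f"{tier}: {'OK' if not gaps else '; '.join(gaps)}")
```

Such a machine clears both minimum tiers but falls short of the Animator recommendation on RAM and VRAM, which matches the advice to lean on lower LODs and skip ray tracing on mid-range hardware.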

Are there pre-made Metahuman templates or presets I can use?

Yes, MetaHuman Creator itself starts you off with a variety of premade MetaHuman presets. When you first launch the Creator, you’re presented with a gallery of preset characters (over 50 of them, representing different ethnicities, ages, and genders). Each preset is a fully rigged MetaHuman that you can use as a base. You can simply pick one and use it as-is or with minimal changes if it fits what you need.

- Using Presets: In MetaHuman Creator, click “Create”, select a preset, and click “Create Selected”. This duplicates the preset into your library, leaving the original intact, and you can start customizing the copy if desired. If you save it without any changes, you effectively have that preset as your own MetaHuman, ready to export, so the presets double as ready-made characters that speed up project setup.

- MetaHuman Sample Project: Epic’s Marketplace offers a MetaHumans Sample project with pre-configured example characters in a lit scene. You can study its lighting and animation setups and migrate the characters to your own project; the assets are licensed for Unreal use.

- Community Shared MetaHumans: Creators sometimes share MetaHuman settings or assets on forums, but this is uncommon because MetaHumans are tied to account-specific GUIDs. Community resources can supplement the official presets, which remain the most reliable source for most users.

- Third-party Characters: MetaHumans are realistic humanoids. The presets cover adult and teen human diversity only; there are no child or alien presets, so non-human or otherwise specialized characters need external workflows.

Presets are ideal for quick starts or learning, and they are licensed for Unreal projects. Blending them or tweaking them slightly produces varied characters, which is perfect for crowds or NPCs.
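
One way to picture a blend is as a weighted average of facial parameters taken from several presets. The Python sketch below shows only that idea. It is not how MetaHuman Creator blends internally, since Epic does not expose that, and the preset and parameter names (`preset_a`, `nose_width`, and so on) are hypothetical.

```python
def blend_presets(presets: dict[str, dict[str, float]],
                  weights: dict[str, float]) -> dict[str, float]:
    """Weighted average of per-preset parameter values (weights are normalized)."""
    unknown = set(weights) - set(presets)
    if unknown:
        raise ValueError(f"unknown presets: {sorted(unknown)}")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Every preset is assumed to expose the same parameter names.
    params = next(iter(presets.values())).keys()
    return {
        p: sum(w * presets[name][p] for name, w in weights.items()) / total
        for p in params
    }


presets = {
    "preset_a": {"nose_width": 0.2, "jaw_width": 0.6},
    "preset_b": {"nose_width": 0.6, "jaw_width": 0.2},
}
# Three parts preset_a to one part preset_b.
blended = blend_presets(presets, {"preset_a": 3, "preset_b": 1})
```

Normalizing by the total weight means the weights only need to express proportions. For example, 3:1 lands three quarters of the way toward `preset_a` (a nose width of about 0.3 here). Small changes to the weights give the kind of near-variants that work well for crowds.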

Where can I find free tutorials and resources to learn Metahuman creation?

Free resources abound for learning MetaHuman creation, from official Epic content to community contributions:

- Official Epic Games Resources:

- MetaHuman Documentation: Epic’s Developer Community hosts detailed, regularly updated guides on Creator, exporting, and animation, including best practices and solutions to common issues. It’s the go-to source for technical details.

- Online Tutorials: The Unreal Learning Portal hosts structured MetaHuman courses and videos for various skill levels, and the MetaHuman product page links to tutorials and forums. Epic’s resources are accessible to all users and beginner-friendly.

- YouTube Channel: Unreal Engine’s YouTube channel has free tutorials such as “MetaHuman Creator in 15 minutes,” along with webinars that go in depth on features and GDC talks that showcase advanced workflows.

- Sample Projects: The MetaHumans Sample project on the Marketplace is a hands-on learning tool. It shows how MetaHumans are integrated into pre-built scenes, and its assets are licensed for Unreal use and can be migrated to your own projects.

- Community Tutorials:

- YouTube Creators: Channels like JSFILMZ and William Faucher cover MetaHuman creation and animation, including specialized topics such as Animator, hair, and Blender integration. Their free videos offer practical, real-world tips that complement the official resources.

- Blender Guides: Tutorials exist on exporting hair or clothing made in Blender to MetaHumans; PixelHair’s content, which details hair integration, is a notable example. These guides are technical but valuable for custom asset creation.

- Forums and Reddit: The Unreal forums and r/unrealengine discuss MetaHuman techniques, with users sharing solutions to real problems and linking to new resources.

- 3D Content Websites: 80.lv and CGSociety publish freely accessible MetaHuman-related articles that detail professional project workflows and tips.

Start with Epic’s documentation, videos, and forums, then explore community tutorials for practical tips. Search for specific topics (e.g., “MetaHuman Animator tutorial”) and filter for recent content (2022-2025).

What are common mistakes to avoid when creating your own Metahuman in UE5?

Here are common pitfalls to avoid when creating MetaHumans in Unreal Engine 5:

- Not Logging into Quixel Bridge: Forgetting to log into Bridge, or having the Bridge plugin disabled, prevents MetaHuman downloads. Enable Bridge in UE5, log in with your Epic account, and check that your username appears in Bridge.

- Using Special Characters in the MetaHuman Name: Non-alphanumeric names (e.g., “José #1”) may cause exports to fail silently. Use simple names like “Jose01”; if needed, rename the character by duplicating it in Creator.

- Ignoring the LOD Warning for Hair Grooms: Some hairstyles lack lower LODs, so the character appears bald at a distance. Creator displays a warning for these grooms; plan for your camera distances and either choose a groom with full LOD support or adjust the LODSync settings.

- Forgetting to Enable Missing Plugins/Settings: Skipping the import prompts for required plugins (e.g., Groom, Control Rig) breaks functionality. Enable the plugins in Edit > Plugins and restart UE5 to apply them, and enable 16-bit bone indices in Project Settings so the rig renders correctly.

- Using an Outdated Engine Version: Older Unreal versions (e.g., 4.27) don’t support newer features like Animator. Use UE 5.2 or later for full functionality, update older projects, and avoid mixing old and new MetaHumans.

- Attempting Extreme Customization: Manually editing the base meshes or skeletons breaks the rig, because the DNA assets no longer match. Make changes through Creator or Mesh to MetaHuman, and avoid external DCC edits unless you are an expert.

- Performance Overload: Several MetaHumans at LOD0 with ray tracing can crash mid-range PCs. Use LOD Sync and lower LODs for crowds, and disable groom simulation for background characters to maintain frame rates.

- Not Utilizing Pose Assets: Keyframing facial expressions from scratch is inefficient. Epic’s pose library provides ready-made expressions such as smiles or frowns, which saves time and simplifies animation.

- Lighting and Materials Mistakes: Poor lighting misrepresents the MetaHuman shaders, so evaluate characters under an HDRI or environment lighting. Duplicate materials before editing them so the originals stay intact.

- Mixing Legacy and New MetaHumans: Combining old and new MetaHumans in one project causes conflicts. Keep them in separate projects or update the legacy characters via Bridge; copying files manually is complex.
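
The naming pitfall is easy to catch before you export. The Python sketch below strips accents and anything that is not an ASCII letter or digit, following the article's "alphanumeric only" advice. The helper names and the `MetaHuman01` fallback are hypothetical; Epic does not publish an exact validation rule, so treat this as a conservative filter rather than the official one.

```python
import re
import unicodedata


def is_safe_name(name: str) -> bool:
    """True if the name uses only ASCII letters and digits."""
    return re.fullmatch(r"[A-Za-z0-9]+", name) is not None


def safe_metahuman_name(name: str, fallback: str = "MetaHuman01") -> str:
    """Reduce a display name to ASCII letters and digits."""
    # NFKD splits accented letters into base letter + combining mark (é -> e + ´),
    # and encoding to ASCII with "ignore" drops the marks and any other non-ASCII.
    ascii_only = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    # Remove spaces, punctuation, and symbols such as '#'.
    cleaned = re.sub(r"[^A-Za-z0-9]", "", ascii_only)
    return cleaned or fallback
```

For instance, `safe_metahuman_name("José #1")` returns `"Jose1"`. You can then pad the digits by hand (e.g. `Jose01`) before renaming the duplicate in Creator.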

Avoiding these pitfalls will make your MetaHuman creation process much smoother. In summary: follow Epic’s guidelines (names, plugins, LOD warnings), leverage the provided tools, and be mindful of performance. If something odd happens (e.g., missing hair or a failed export), it’s probably one of the issues above, so you already know the likely cause and solution.
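
For the project-settings pitfall, you can also check the setting from outside the editor. To our understanding, the "Support 16-bit Bone Index" option is stored in the project's `Config/DefaultEngine.ini` as `r.GPUSkin.Support16BitBoneIndex` under the `[/Script/Engine.RendererSettings]` section. Treat that key and section name as an assumption and confirm them against your own file. The parser below is a deliberately minimal sketch, not a full implementation of Unreal's ini format.

```python
from pathlib import Path

RENDERER_SECTION = "/Script/Engine.RendererSettings"  # assumed section name
BONE_INDEX_KEY = "r.GPUSkin.Support16BitBoneIndex"    # assumed key name


def read_ini_section(text: str, section: str) -> dict[str, str]:
    """Collect key=value pairs from one [section] of an Unreal-style ini file."""
    values: dict[str, str] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith((";", "#")):
            continue  # skip blanks and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            continue
        if current == section and "=" in line:
            key, _, value = line.partition("=")
            # Unreal uses +, -, ., ! prefixes for array operations; ignore them here.
            values[key.strip().lstrip("+-.!")] = value.strip()
    return values


def has_16bit_bone_indices(ini_text: str) -> bool:
    value = read_ini_section(ini_text, RENDERER_SECTION).get(BONE_INDEX_KEY, "")
    return value.lower() in ("true", "1")


def check_project(project_dir: Path) -> bool:
    """False if DefaultEngine.ini is missing or the flag is not enabled."""
    ini_path = project_dir / "Config" / "DefaultEngine.ini"
    if not ini_path.is_file():
        return False
    # utf-8-sig drops a leading byte-order mark if the file has one.
    return has_16bit_bone_indices(ini_path.read_text(encoding="utf-8-sig", errors="replace"))
```

A `False` result is only a hint to open Project Settings and look. Enabling the option there, or accepting the MetaHuman import prompt, is still the safe way to change it.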

FAQ questions and answers

- Is MetaHuman Creator free to use?

Yes. It’s completely free with an Epic Games account. You can use MetaHumans in Unreal Engine projects, including commercial ones, as long as rendering is done in Unreal. There’s no cost for the tool or the characters.

- Can I use MetaHuman characters outside of Unreal Engine (in other engines or DCC tools)?

Not for final output: they must be rendered in Unreal Engine. You can export to Maya for animation or asset modification, but final renders must be done in UE; rendering in Unity or Blender is not allowed.

- What platforms are supported for using MetaHumans?

You can use MetaHumans on Windows or macOS, and the Creator runs in a browser on both. Plugins like Animator are Windows-only. Packaged projects run on the major platforms, but Linux isn’t supported for creation or plugins.

- What version of Unreal Engine do I need to work with MetaHumans?

UE 4.26.2 or later is required, but UE5 is recommended, and MetaHuman Animator needs UE 5.2 or newer. Use the latest version for the best compatibility and features.

- Do MetaHumans support motion capture for body and face?

Yes. They use Epic’s skeleton and support mocap suits and ARKit blendshapes. Facial capture works with iPhones (via Live Link Face) and other systems, and MetaHuman Animator adds high-fidelity facial capture options.

- Can I create a MetaHuman from a photo or scan of a real person?

Yes. With Mesh to MetaHuman, you can convert a 3D scan or custom model. If you only have a photo, you’ll need to generate a 3D head from it first: there’s no direct photo-to-MetaHuman tool, but it’s possible with extra steps.

- Why does my MetaHuman’s hair disappear when I zoom out?

This happens because of LOD settings: some hairstyles lack lower LODs and vanish at a distance. To fix it, either use a hairstyle with full LOD support or force LOD 0 in UE5, though forcing LOD 0 may impact performance.

- I exported my MetaHuman but it’s not appearing in Unreal/Bridge. What went wrong?

Check the character’s name and use only standard ASCII letters and numbers; non-standard characters often cause silent export failures. Also verify that you’re logged into Bridge, the plugin is enabled, and the export settings are correct.

- How do I make my MetaHuman talk or lip-sync to dialogue?

Use MetaHuman Animator’s audio-to-face feature to auto-generate lip sync from audio, or record a facial performance with Live Link Face. Manual animation via Control Rig is also possible for detailed control, and combining methods often gives the best results.

- Can I use MetaHumans for crowds or multiple characters at once?

Yes, but optimize carefully. MetaHumans are detailed, so use lower LODs for background characters along with tools like LOD Sync. For large crowds, consider instancing or plugins, and test on your target hardware.
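
The crowd advice amounts to a distance-based LOD budget: the few nearest characters get full detail, and everyone else drops to cheaper LODs without groom simulation. Here is that policy as a plain-Python sketch. It is not Unreal's LOD Sync component or any engine API. The distance bands, the LOD0 budget, and the rule of simulating grooms only at LOD0 are hypothetical values to tune for your own scene and hardware.

```python
from dataclasses import dataclass


@dataclass
class Character:
    name: str
    distance_m: float  # distance from the camera, in metres


# Hypothetical distance bands: (maximum distance in metres, LOD index).
LOD_BANDS = [(5.0, 0), (15.0, 1), (40.0, 3), (float("inf"), 5)]


def plan_crowd(characters: list[Character], max_lod0: int = 2) -> dict[str, tuple[int, bool]]:
    """Map each character name to (LOD index, simulate_groom)."""
    plan: dict[str, tuple[int, bool]] = {}
    lod0_used = 0
    # Nearest characters first, so the LOD0 budget goes to whoever is closest.
    for ch in sorted(characters, key=lambda c: c.distance_m):
        lod = next(level for limit, level in LOD_BANDS if ch.distance_m <= limit)
        if lod == 0:
            if lod0_used < max_lod0:
                lod0_used += 1
            else:
                lod = 1  # over budget: demote to the next LOD
        # Only full-detail characters keep groom simulation; background ones don't.
        plan[ch.name] = (lod, lod == 0)
    return plan
```

With five characters at 2, 3, 4, 10, and 100 metres and a budget of two, the first two stay at LOD0 with simulated hair, the third is demoted to LOD1, and the farthest drops to LOD5. In Unreal you would apply such a plan through the engine's own LOD settings, such as LOD Sync or a forced LOD.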

Conclusion

Epic’s MetaHuman tools in Unreal Engine 5 make creating production-ready digital humans both accessible and high-fidelity, handling realism, rigging, customization, and animation end to end.

The workflow spans MetaHuman Creator, Quixel Bridge integration, required plugin setup, PixelHair custom grooming, and high-fidelity facial capture via MetaHuman Animator.

Beginners can craft convincing characters with intuitive sliders and presets, while advanced users can import custom assets, integrate motion capture, or convert scans for exact likenesses.

A growing ecosystem (including audio-driven animation) and plentiful community and official resources speed up workflows and expand creative potential.

Following best practices (LOD management, naming conventions, plugin configuration) ensures smooth performance and avoids technical issues.

Whether you’re a solo filmmaker, game prototyper, or studio, MetaHumans save countless hours and deliver virtual actors ready for cinematics, gameplay, or live AR broadcasts.

Now it’s your turn: fire up MetaHuman Creator, craft your character, and bring them to life in Unreal Engine 5. We can’t wait to see the MetaHumans (and stories) you create. Happy developing!

Sources and Citations

- Epic Games – MetaHuman Creator Overview and Access Requirements – Epic Developer Community – MetaHuman Creator Overview

- Epic Games Documentation – MetaHuman Creator Features (Blending, Presets, Controls) – Epic Developer Community – MetaHuman Creator Features

- Epic Games Documentation – Body and Skin Customization – Epic Developer Community – MetaHuman Creator Overview

- ArtStation Marketplace – PixelHair Unreal/Blender Hair Description – ArtStation – PixelHair

- Epic Games – Using Quixel Bridge to Download/Export MetaHumans – Epic Developer Community – FAQ and Troubleshooting for MetaHumans

- Epic Games Documentation – Required UE5 Plugins for MetaHumans – Epic Developer Community – MetaHuman for Unreal Engine

- Epic Games Documentation – Animating MetaHumans with Control Rig (Sequencer Workflow) – Epic Developer Community – MetaHumans Sample for Unreal Engine 5

- Epic Games – MetaHuman Animator and Requirements (FAQ) – Epic Developer Community – MetaHuman Animator

- Epic Games Documentation – Mesh to MetaHuman Workflow Explanation – Epic Developer Community – Mesh to MetaHuman

- Epic Games Documentation – MetaHuman System Requirements and Platform Support – Epic Developer Community – MetaHuman Creator Overview

- Epic Games – MetaHuman License FAQ (Unreal Engine use only) – Epic Games – MetaHuman License FAQ

- Epic Games Documentation – LOD and Hair Groom Warning (Hair disappearing issue) – Epic Developer Community – FAQ and Troubleshooting for MetaHumans

- Epic Games Documentation – Bridge Export Failing (Name character limits) – Epic Developer Community – FAQ and Troubleshooting for MetaHumans

- Epic Games Documentation – FAQ and Troubleshooting for MetaHumans (common issues) – Epic Developer Community – FAQ and Troubleshooting for MetaHumans

- Epic Games – Official Learning Resources for MetaHumans – Epic Developer Community – MetaHuman Documentation
