Wonder Dynamics has introduced an AI-driven platform called Wonder Studio that is changing how visual effects (VFX) are produced. Wonder Studio is a browser-based tool that uses artificial intelligence to automatically animate, light, and composite computer-generated (CG) characters into live-action scenes. According to the company, it automates an estimated 80–90% of the “objective” VFX work (such as motion capture, tracking, and masking), leaving artists free to focus on creative refinement. The result is that high-end VFX become accessible to creators of all skill levels, who can bring CG characters to life on screen far more easily than before.

What is Wonder Dynamics and how does it transform visual effects production?

Wonder Dynamics, co-founded by Nikola Todorovic and Tye Sheridan and acquired by Autodesk in 2024, is the creator of Wonder Studio, an AI-powered VFX platform that simplifies integrating CG characters into live-action footage. Traditional VFX pipelines require motion-capture suits and manual compositing; Wonder Studio automates these tasks, letting creators add digital characters with far less effort and reducing time, cost, and technical barriers.

The platform changes VFX production in four main ways:

- Speed and Efficiency: Wonder Studio automates rotoscoping, motion tracking, and initial animation, turning hours of work into minutes. Artists can spend their time on creative tasks rather than repetitive labor, and complex shots no longer demand large teams.

- Cost Reduction: By removing the need for expensive motion-capture setups and cutting labor costs through automation, it makes high-quality VFX affordable for indie filmmakers and lets smaller studios produce professional results without large budgets.

- Accessibility: Its browser-based, cloud-hosted design requires neither high-end hardware nor deep VFX expertise, so storytellers with minimal technical skills can use advanced VFX tools.

- Iterative Creativity: Automation frees directors to experiment with character designs and performances. Because iterations are quick, artists can test multiple variations and keep the focus on artistic vision.

Wonder Studio makes VFX production faster, more affordable, and more accessible, letting creators integrate CG characters with a fraction of the effort a traditional pipeline requires. It delivers professional results while keeping storytellers focused on creativity.

How does Wonder Studio automate animation, lighting, and compositing in VFX?

Wonder Studio uses AI-driven computer vision and machine learning to automate key VFX processes, streamlining the integration of CG characters into live-action footage. By analyzing video, it handles complex tasks like motion capture, lighting, and compositing, reducing manual effort while maintaining realism.

The platform automates these processes as follows:

- Automatic Motion Capture & Animation: Wonder Studio tracks an actor’s body, face, and hand movements from single-camera footage without markers, then retargets this data to animate a CG character that follows the actor’s performance. Manual keyframing is largely unnecessary.

- Automatic Lighting: The AI estimates the scene’s light sources and intensity and lights and shades the CG character to match, so the character blends into the footage without manual lighting adjustments.

- Automatic Compositing & Rotoscoping: The system generates roto masks and clean plates, removes the actor, and inserts the CG character aligned to the camera’s perspective and scale, so the result looks as if it had been filmed in camera.

- Shot Detection & Tracking: Wonder Studio detects cuts in edited sequences and follows actors across shots using actor re-identification. It processes entire sequences automatically, uses motion prediction to bridge occlusions, and removes the need to split shots manually, which helps preserve continuity.
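Wonder Dynamics has not published how its shot detection works. For readers who want to pre-check their own edits, a classic baseline for finding hard cuts compares the brightness histograms of consecutive frames and flags a cut when they differ sharply. The sketch below assumes each frame is a 2D list of 8-bit grayscale values; the function names and the `threshold` value are illustrative choices, not part of any Wonder Studio API.

```python
from typing import List, Optional, Sequence

Frame = Sequence[Sequence[int]]  # rows of 8-bit grayscale pixel values (0-255)


def gray_histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Return a normalized histogram of pixel values (entries sum to 1)."""
    counts = [0] * bins
    total = 0
    for row in frame:
        for value in row:
            counts[value * bins // 256] += 1  # map 0-255 onto bins 0..bins-1
            total += 1
    return [c / total for c in counts]


def detect_hard_cuts(frames: Sequence[Frame], threshold: float = 0.5,
                     bins: int = 16) -> List[int]:
    """Return indices i where a hard cut falls between frame i-1 and frame i."""
    cuts: List[int] = []
    previous: Optional[List[float]] = None
    for i, frame in enumerate(frames):
        hist = gray_histogram(frame, bins)
        if previous is not None:
            # Half the L1 distance between two normalized histograms lies in [0, 1].
            distance = 0.5 * sum(abs(a - b) for a, b in zip(hist, previous))
            if distance > threshold:
                cuts.append(i)
        previous = hist
    return cuts


dark = [[10] * 4 for _ in range(4)]
bright = [[240] * 4 for _ in range(4)]
print(detect_hard_cuts([dark, dark, bright, bright]))  # [2]
```

Production cut detectors usually add color information and adaptive thresholds. Gradual transitions such as dissolves spread the change across many frames and need different handling, which is one reason clean hard cuts are recommended for upload.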

By combining deep learning models for pose estimation, face tracking, and camera movement, Wonder Studio delivers fully animated, lit, and composited CG characters with minimal user input. This automation saves time and effort, making high-quality VFX accessible to creators.

What are the key features of Wonder Dynamics’ AI-powered Wonder Studio?

Wonder Studio offers a broad feature set for VFX artists and creators. Its key features include:

- AI-Powered Markerless Motion Capture: Captures full-body motion, facial expressions, and hand movements from single-camera video without markers, turning live footage into animation data. Advanced bone retargeting supports any rigged character, and motion data can be exported in FBX or USD format.

- Automatic Character Animation & Replacement: Tracks actors and replaces them with CG characters that match their body and facial movements, keeping manual animation to a minimum even for complex character insertions.

- Lighting and Compositing Automation: Matches CG character lighting to the live-action footage and composites characters with proper layering, alpha masks, and shadows. Clean plates are generated so the insertion looks natural.

- Multi-Shot Sequence Handling (Video-to-3D Scene): Reconstructs edited sequences into unified 3D scenes, detecting cuts and keeping character and camera placement spatially consistent across shots.

- Custom 3D Character Support: Accepts rigged characters in FBX or .blend format with up to 1.5 million polygons and 100k hair strands, and uses facial blendshapes for expressions, so unique character designs can be animated.

- Built-in Character Library: Provides ready-to-use characters such as robots and aliens for one-click replacements, which suits quick previs, concept tests, and users without custom models.

- Exportable VFX Elements and Data: Offers multiple export options for integration with existing pipelines:
  - Final rendered video with the CG character composited (up to 4K on the Pro plan).
  - CG character pass with a transparent background.
  - Clean plate as an image sequence.
  - Alpha masks for actor replacement.
  - Motion capture data in FBX format.
  - Camera tracking data as FBX or within scene exports.
  - Full 3D scene exports to Blender, Maya, Unreal Engine, and USD format.

- Cloud-Based Processing: Runs all computation in the cloud through a web interface, so no high-end local hardware is needed, and processes shots in parallel, which helps on large projects.

- User-Friendly Interface and Workflow: An intuitive browser-based interface with guided prompts keeps the learning curve low, so creators with limited VFX experience can run complex tasks.

These features make Wonder Studio an end-to-end solution for character-driven VFX, offering automation and compatibility with traditional tools. Its export options ensure seamless integration into existing pipelines, enhancing flexibility for creators.

What are the system requirements and pricing plans for Wonder Studio?

One of the advantages of Wonder Studio is its minimal system requirements, since it runs via web browser and utilizes cloud computing:

- System Requirements: A Windows or macOS computer with an up-to-date web browser (Google Chrome or Apple Safari); mobile browsers are not supported. Because processing happens in the cloud, no high-end GPU, extra RAM, or other specialized hardware is needed. A stable internet connection is essential for uploading and downloading large video files. For scene exports, Blender and Maya 2022+ are supported, and USD output covers other tools such as 3ds Max, Houdini, and Unity.

- Recommended Setup: A reasonably fast CPU speeds up video encoding and decoding in the browser, and you will need enough disk space for your video files. High-quality input footage (HD or 4K) produces better output.

Wonder Studio is a subscription-based service with the following pricing plans:

- Lite Plan: $29.99/month ($24.99/month billed annually). Includes 3,000 credits per month (about 150 seconds of full processing or 750 seconds of mocap), 1080p exports, 2 actors, 6 character uploads, 5 GB storage, and 500 MB video uploads. Suited to small projects or learning.

- Pro Plan: $149.99/month ($124.99/month billed annually). Includes 12,000 credits per month (about 600 seconds of full processing or 3,000 seconds of mocap), 4K exports, 4 actors, 15 character uploads, 80 GB storage, 2 GB video uploads, and a commercial license. Suited to professional use.

- Enterprise Plan: Custom pricing for studios, with Pro features plus tailored limits, enhanced security, and priority support. Contact Wonder Dynamics for a quote.

- Credits System: Live Action/Animation processing costs 20 credits per second and AI Motion Capture 4 credits per second. Credits reset monthly and do not roll over; more credits require a plan upgrade.

- Free Trial / Access: There is no traditional free tier, though demo credits or sample projects may be available, and Autodesk, which now owns Wonder Dynamics, occasionally offers trial periods or educational access. Full custom projects require a paid plan.
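The approximate durations in the plan descriptions follow directly from the credit rates: seconds = monthly credits ÷ credits per second (for example, 3,000 ÷ 20 = 150 s). The helper below is a minimal budgeting sketch built only on the figures quoted above. Prices and rates change, and it assumes credits are charged linearly per second of footage with no minimum charge, which you should confirm against current Wonder Studio pricing.

```python
from typing import Sequence

# Figures as quoted in this article; verify against current pricing.
CREDITS_PER_SECOND = {"live_action": 20, "mocap": 4}
MONTHLY_CREDITS = {"lite": 3_000, "pro": 12_000}


def credits_needed(seconds: float, mode: str) -> float:
    """Credits for `seconds` of footage, assuming linear per-second billing."""
    return seconds * CREDITS_PER_SECOND[mode]


def max_seconds(plan: str, mode: str) -> float:
    """Seconds of footage one month's credits cover in the given mode."""
    return MONTHLY_CREDITS[plan] / CREDITS_PER_SECOND[mode]


def fits_in_month(shot_lengths: Sequence[float], mode: str, plan: str) -> bool:
    """True if the listed shots fit within a single month's allowance."""
    total = sum(credits_needed(s, mode) for s in shot_lengths)
    return total <= MONTHLY_CREDITS[plan]


for plan in MONTHLY_CREDITS:
    print(plan, max_seconds(plan, "live_action"), max_seconds(plan, "mocap"))
# lite 150.0 750.0
# pro 600.0 3000.0
print(fits_in_month([45, 45, 45], "live_action", "lite"))  # True (2,700 credits)
```

Because unused credits do not roll over, a check like `fits_in_month` is most useful when planning which shots to process in a given billing month.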

The light system requirements and tiered pricing make Wonder Studio accessible, letting creators use it even for short-term projects without significant hardware or licensing costs, while cloud processing scales with the workload.

How to get started with Wonder Studio for seamless VFX integration?

Getting started with Wonder Studio is straightforward. Here’s a step-by-step guide to begin using it and integrating it into your VFX workflow seamlessly:

- Sign Up and Choose a Plan: Create an account on the Wonder Dynamics website and select the Lite or Pro plan (Lite is fine for testing and can be upgraded later), then open the web app in your browser.

- Prepare Your Footage and Assets: Use single-camera footage of up to 2 minutes in MP4 or MOV format, ideally HD or 4K, and identify the actor you want to replace. If you are not using a built-in character, prepare your custom 3D model in FBX or .blend format.

- Create a New Project: From the dashboard, upload your footage to the web app, monitor the upload progress for large files, and open the project setup interface.

- Scan or Edit the Video (if needed): The system detects cuts and lists the shots. Verify that the actor to be replaced has been detected correctly, and adjust the selection if several actors are present (up to 2 actors on Lite, 4 on Pro).

- Assign an Actor and Character: Select the actor to replace in a reference frame, choose a CG character from the library or upload a custom model, and confirm the selection in the preview.

- Configure Settings (Optional): Choose Live Action or AI Motion Capture output and toggle options such as Clean Plate or Camera Track. The defaults work for standard replacements; the other settings are there for advanced users.

- Process the Scene: Click the “Process” button to start cloud processing, during which the AI handles animation, lighting, and compositing. You can keep working on other tasks while it runs and are notified when it completes.

- Review the Result: Play back the output in the web app to check how well the CG character is integrated. Use the adjustment tools for minor tweaks, or reprocess segments if needed.

- Export the Assets: Download the final video, Blender or Maya scenes, FBX motion data, or USD files. Clean plates and masks let you do custom compositing in a standard VFX pipeline.

- Integrate into Post-Production: Refine the exported assets in your editing or 3D software, and resync the original audio if needed.

- Iterate if Necessary: Duplicate a project to try different characters or settings; fast processing makes several iterations practical.
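Before uploading, it can save time and credits to check footage against the limits mentioned in this article: MP4 or MOV, up to 2 minutes, at most 15 shots per video, and an upload cap of 500 MB (Lite) or 2 GB (Pro). The sketch below is a hypothetical pre-flight checker. The `Clip` fields are assumed to come from your own tooling (for example, a media-info probe and a cut count), and treating MB/GB as binary units is an assumption.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import List

ALLOWED_EXTENSIONS = {".mp4", ".mov"}
MAX_DURATION_S = 120   # "up to 2 minutes"
MAX_SHOTS = 15         # cuts per uploaded video
MAX_UPLOAD_BYTES = {"lite": 500 * 1024**2, "pro": 2 * 1024**3}  # assumes binary MB/GB


@dataclass
class Clip:
    path: str
    duration_s: float
    size_bytes: int
    shot_count: int


def preflight(clip: Clip, plan: str) -> List[str]:
    """Return a list of problems; an empty list means the clip looks uploadable."""
    problems: List[str] = []
    if Path(clip.path).suffix.lower() not in ALLOWED_EXTENSIONS:
        problems.append("container should be MP4 or MOV")
    if clip.duration_s > MAX_DURATION_S:
        problems.append(f"clip is {clip.duration_s:.0f}s; split it into parts of 120s or less")
    if clip.shot_count > MAX_SHOTS:
        problems.append(f"{clip.shot_count} shots exceeds the limit of {MAX_SHOTS}")
    if clip.size_bytes > MAX_UPLOAD_BYTES[plan]:
        problems.append(f"file exceeds the {plan} plan upload limit")
    return problems


print(preflight(Clip("scene_01.MOV", 95.0, 420 * 1024**2, 6), "lite"))  # []
```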

Wonder Studio’s cloud-based, user-friendly interface makes VFX integration plug-and-play, allowing creators to focus on storytelling while the AI handles technical tasks. Parallel processing in the cloud streamlines post-production, enabling efficient workflows.

How does Wonder Studio’s AI motion capture work without traditional equipment?

Wonder Studio’s AI motion capture uses markerless, single-camera technology to extract 3D motion data from regular video, eliminating the need for suits or sensors. Advanced AI interprets human pose, facial expressions, and hand movements, making motion capture accessible to all creators.

The process works as follows:

- Single-Camera, Markerless Capture: Analyzes ordinary single-camera RGB footage to detect body joints and infer 3D motion, using pose estimation to produce coherent motion trajectories without markers or suits. Capture can happen in almost any environment.

- Body, Face, and Hands Tracking: Uses landmark detection to capture facial expressions and hand gestures, including subtle cues, and maps them to the CG character’s blendshapes or bones.

- Depth and 3D Inference: Predicts 3D pose from 2D images using anatomical and contextual cues, and combines this with camera tracking to infer scene depth, compensating for the lack of a second viewpoint.

- No Suits or Sensors Required: Works with regular cameras and ordinary clothing, so filmmakers can capture on location without specialized gear.

- Advanced Retargeting: Maps captured motion onto custom character rigs, using calibration to align skeletons and adjusting for differences in scale and proportion while preserving the performance.

- Accuracy and Limitations: Handles walking, running, and emoting well. Fast motion or occlusions can cause minor errors, which motion prediction partly mitigates. For straightforward motion, results can approach those of basic optical mocap, and the data can be corrected after capture.

- Output of Mocap Data: Exports FBX files with body and hand animation for game and animation pipelines or for building motion libraries. Mocap-only jobs run faster and use fewer credits than full renders (4 versus 20 credits per second).
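Wonder Studio’s retargeting internals are not public, but the simplest form of the idea is easy to show: copy each joint’s local rotation to the correspondingly named bone on the target rig, and scale the root (hip) translation by the ratio of the two characters’ hip heights so steps cover a proportionate distance. The bone names and mapping below are hypothetical, and the sketch assumes both rigs share the same rest pose, which real retargeting (including the calibration step mentioned above) does not assume.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical mapping from a captured skeleton's bone names to a custom rig's names.
BONE_MAP: Dict[str, str] = {
    "Hips": "pelvis",
    "Spine": "spine_01",
    "LeftUpLeg": "thigh_l",
    "RightUpLeg": "thigh_r",
}


def retarget_frame(
    rotations: Dict[str, Vec3],   # local Euler rotations per source bone (degrees)
    root_position: Vec3,          # source hip position in metres
    source_hip_height: float,
    target_hip_height: float,
    bone_map: Dict[str, str] = BONE_MAP,
) -> Tuple[Dict[str, Vec3], Vec3]:
    """Retarget one frame: rename joint rotations and scale the root translation."""
    scale = target_hip_height / source_hip_height
    # Bones without a counterpart on the target rig are dropped.
    target_rotations = {
        bone_map[name]: rot for name, rot in rotations.items() if name in bone_map
    }
    target_root = (root_position[0] * scale,
                   root_position[1] * scale,
                   root_position[2] * scale)
    return target_rotations, target_root


rots, root = retarget_frame(
    {"Hips": (0.0, 15.0, 0.0), "LeftUpLeg": (30.0, 0.0, 0.0), "Tail": (5.0, 0.0, 0.0)},
    root_position=(0.5, 1.0, 0.25),
    source_hip_height=1.0,
    target_hip_height=2.0,
)
print(root)   # (1.0, 2.0, 0.5)
print(rots)   # {'pelvis': (0.0, 15.0, 0.0), 'thigh_l': (30.0, 0.0, 0.0)}
```

Scaling only the root keeps the character’s feet roughly planted: a taller character takes proportionally longer strides instead of sliding.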

Wonder Studio’s AI mocap democratizes motion capture by using standard video, offering flexibility and high-quality results. It transforms spontaneous footage into professional-grade animation, expanding creative possibilities for filmmakers.

What are the benefits of using Wonder Studio for indie filmmakers and content creators?

Wonder Studio empowers indie filmmakers and content creators by providing affordable, high-quality VFX capabilities. It reduces costs, speeds up workflows, and lowers technical barriers, enabling small teams to produce professional results.

Key benefits include:

- Drastically Reduced VFX Costs: Automating animation and rotoscoping removes the need for large teams or mocap studios, and an affordable subscription replaces expensive VFX outsourcing, which levels the playing field for indie productions.

- Faster Turnaround and Iteration: VFX shots can be finished in days rather than weeks, so creators can meet tight deadlines and still test multiple character designs before locking the final look.

- Lower Technical Barrier (Accessibility): The user-friendly interface requires no VFX expertise, so non-technical creators can add CG characters and keep their attention on the story.

- No Special Equipment Needed: Works with standard cameras, even smartphones, so productions can film anywhere without professional rigs or mocap gear.

- Small Crew, Big Results: One person can handle animation, lighting, and compositing, letting small teams achieve effects that rival bigger productions.

- Focus on Creativity and Storytelling: With technical drudgery automated, creators can spend their time directing and attempt bolder, more imaginative scenes.

- Enhanced Production Value for Content Creators: Adds cinematic elements such as CG co-hosts or action scenes, making online content more engaging and more professional-looking, which can help attract larger audiences.

- Previsualization and Concept Testing: Produces polished previs for pitching projects, so investors can see CG concepts and ideas can be refined early.

- Learning and Skill Development: Gives creators hands-on exposure to VFX processes, and its outputs can be studied to learn animation techniques, serving as a stepping stone to more advanced skills without formal training.

Wonder Studio enables indie creators to produce visually stunning content that competes with studio productions. Its automation and accessibility amplify creativity, making ambitious storytelling feasible on limited budgets.

How does Wonder Studio integrate with tools like Maya, Blender, and Unreal Engine?

Wonder Studio integrates seamlessly with industry-standard tools through export formats and plugins, ensuring AI-generated animations fit into existing VFX pipelines. This interoperability allows creators to refine outputs in their preferred software.

Integration methods include:

- Direct Scene Export: Exports complete scenes, with animated characters and cameras, to Maya, Blender, or Unreal Engine, so you can keep working on the AI-generated data in a familiar environment.

- Universal Scene Description (USD) Export: Provides USD files that can be opened in Maya, Blender, 3ds Max, Houdini, Unity, and other USD-aware tools, which suits complex, multi-tool pipelines.

- Blender & Maya Add-ons: Add-ons for Blender and Maya help format rigs correctly and streamline sending data to Wonder Studio and bringing results back into the host application.

- Engine Integration (Unreal/Unity): Exports Unreal Engine 5 scenes, or FBX/USD for Unity, for real-time rendering, game pipelines, and interactive content.

- Camera and Layout Integration: Exported camera tracking data lets you align additional CG elements with the plate without doing your own matchmove.

- Collaborative Pipeline Use: Because outputs use standard formats, animation, lighting, and compositing departments can hand work off to one another within existing workflows.

- Version Control and Revisions: New exports can replace assets in existing Maya or Blender scenes, so animation updates fit into an iterative workflow.

- Autodesk Flow Ecosystem: Since Autodesk acquired Wonder Dynamics, Wonder Studio is expected to integrate more deeply with Autodesk tools such as ShotGrid, which may enable more cloud-based workflows.

Wonder Studio’s export options and plugins make it a versatile component in professional pipelines. Its open design ensures creators can refine AI outputs in Maya, Blender, or Unreal, maintaining creative control.

What is the process for uploading and animating custom 3D characters in Wonder Studio?

Wonder Studio allows users to upload custom 3D characters for animation, bringing unique designs to life with AI-driven motion capture. The process is streamlined to ensure compatibility and ease of use.

The steps are:

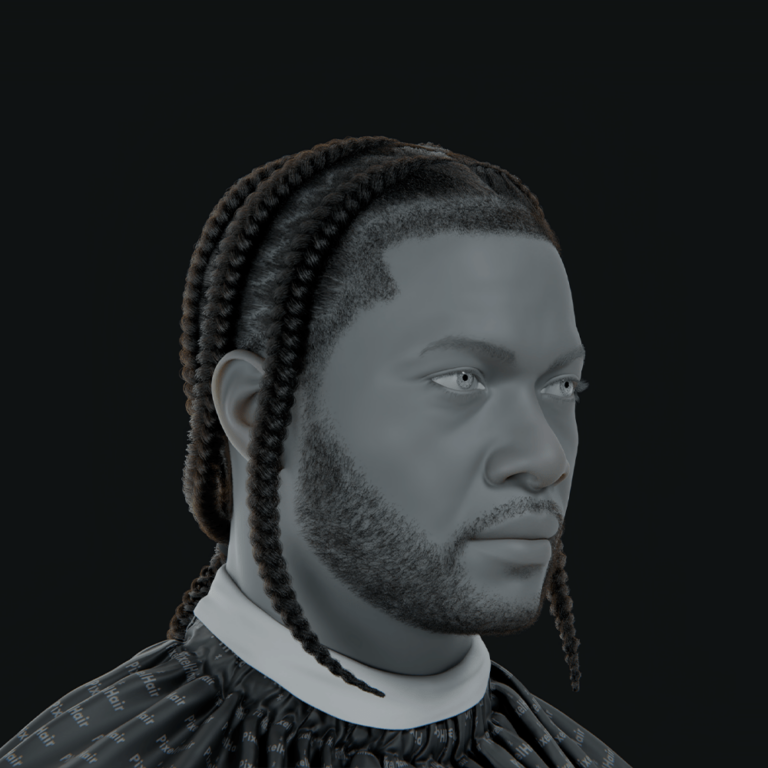

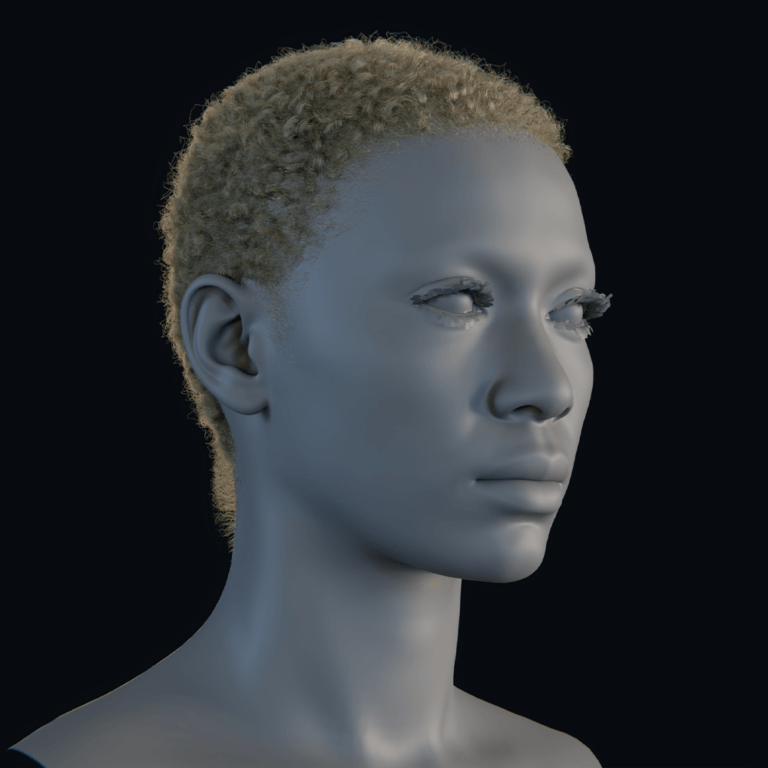

- Prepare Your 3D Character: Use FBX or .blend format with a humanoid rig and properly named bones. Keep the model under 1.5 million polygons and 100k hair strands, include facial blendshapes for expressions, and optimize it for real-time use.

- Upload the Character to Wonder Studio: Upload the model under “My Characters” in the web app, where the system checks bone structure and compatibility. The Maya and Blender add-ons can help with formatting, and uploaded characters are stored for reuse.

- Character Validation: The platform verifies the root bone, skeleton continuity, and blendshapes to make sure the rig can be retargeted; any errors must be fixed in the model before it can be used.

- Assign the Custom Character to an Actor in a Project: During actor assignment, select the custom character from your library and confirm it in the preview. Characters made of multiple sub-meshes are supported.

- Process the Project: Run AI processing to apply the actor’s motion to the custom character. Retargeting maps the movements onto the rig, including facial and hand animation.

- Review and Adjust (if needed): Check the output for proportion or animation problems, and use Advanced Retargeting to tweak bone mappings or adjust settings for unusual rigs.

- Export with Custom Character: Export scenes or FBX motion data with the custom rig for further refinement in Maya, Blender, or other software.

- Repeat Use: Uploaded characters can be reused across projects and updated as the rig evolves, which simplifies multi-shot work.
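Because validation errors force a round trip back to the 3D package, it can help to check a rig locally first. Wonder Studio’s exact checks are not documented here beyond the limits above, so the following is a hypothetical pre-check you might run on rig data exported from Blender or Maya. It enforces the polygon and hair-strand limits quoted in this article, requires exactly one root bone, flags bones whose parent is missing, detects cycles in the hierarchy, and confirms that a list of required bones exists. The `required_bones` names are placeholders; use the names from Wonder Dynamics’ character guidelines.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Dict, Iterable, List, Optional

MAX_POLYGONS = 1_500_000
MAX_HAIR_STRANDS = 100_000
ALLOWED_FORMATS = {".fbx", ".blend"}


@dataclass
class CharacterInfo:
    filename: str
    polygon_count: int
    hair_strands: int
    parents: Dict[str, Optional[str]]  # bone name -> parent bone name (None = root)


def validate_character(info: CharacterInfo, required_bones: Iterable[str]) -> List[str]:
    """Return a list of validation errors (empty if the rig passes)."""
    errors: List[str] = []
    if Path(info.filename).suffix.lower() not in ALLOWED_FORMATS:
        errors.append("file must be FBX or .blend")
    if info.polygon_count > MAX_POLYGONS:
        errors.append(f"{info.polygon_count} polygons exceeds {MAX_POLYGONS}")
    if info.hair_strands > MAX_HAIR_STRANDS:
        errors.append(f"{info.hair_strands} hair strands exceeds {MAX_HAIR_STRANDS}")

    roots = [bone for bone, parent in info.parents.items() if parent is None]
    if len(roots) != 1:
        errors.append(f"expected exactly one root bone, found {len(roots)}")

    for bone, parent in info.parents.items():
        if parent is not None and parent not in info.parents:
            errors.append(f"bone {bone!r} references missing parent {parent!r}")

    # Walk each bone up toward the root; revisiting a bone means a cycle.
    for bone in info.parents:
        seen = set()
        current: Optional[str] = bone
        while current is not None and current in info.parents:
            if current in seen:
                errors.append(f"cycle in bone hierarchy involving {bone!r}")
                break
            seen.add(current)
            current = info.parents[current]

    missing = [b for b in required_bones if b not in info.parents]
    if missing:
        errors.append(f"missing required bones: {', '.join(missing)}")
    return errors


hero = CharacterInfo("hero.fbx", 250_000, 0,
                     {"hips": None, "spine": "hips", "head": "spine"})
print(validate_character(hero, ["hips", "spine", "head"]))  # []
```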

Custom character support makes Wonder Studio highly flexible, allowing creators to animate original designs effortlessly. The process balances ease of use with robust validation for professional results.

How does Wonder Studio’s Video-to-3D Scene technology enhance storytelling?

Wonder Studio’s Video-to-3D Scene technology transforms edited video sequences into unified 3D scenes, enhancing storytelling by ensuring continuity and offering creative flexibility. It reconstructs spatial relationships across multiple shots, making complex VFX seamless.

Key enhancements include:

- Multi-Cut Scene Reconstruction: Analyzes an edited sequence to build a single 3D scene from multiple shots, deducing camera and character positions so that multi-angle scenes stay spatially coherent.

- Consistent Character and Camera Continuity: Tracks character movement across cuts so the CG character is placed consistently, preserving eyelines and positions and avoiding continuity errors.

- Enhanced Storyboarding and Previs: Builds 3D previs from 2D footage, so you can explore angles that were never filmed and make better-informed planning decisions.

- Complex Sequence Handling Made Easier: Keeps CG elements coherently placed through action sequences with quick cuts, so the audience stays oriented.

- New Creative Angles in Post: Lets you reframe shots or create new camera moves in 3D, and supports effects such as slow motion.

- Better Integration of CG Elements in Story: Consistent motion and placement help CG characters interact believably with their environment, which increases immersion.

- Empowering Complex Narratives: Acts as a virtual production stage that makes ambitious scenes with multiple characters or angles logistically simpler.

- Example – Enhancing a Dialogue Scene: Reconstructing a conversation keeps the CG character consistently placed across shots and makes it possible to create shots that were never filmed, such as a two-shot, giving the editor more options.
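Part of what makes a unified 3D scene useful is that the character has one position in world space, and each shot’s solved camera simply views it from a different place. The sketch below illustrates this with a standard pinhole camera model; it is not Wonder Studio’s reconstruction method. To keep it short, it assumes cameras rotate only about the vertical (Y) axis, look down their local +Z axis, and share made-up intrinsics (`focal_px` and the image center `cx`, `cy`).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def world_to_camera(point: Vec3, cam_pos: Vec3, yaw: float) -> Vec3:
    """Express a world point in the frame of a camera at cam_pos rotated by
    `yaw` radians about +Y (yaw 0 looks down world +Z)."""
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    # Apply the inverse (transposed) yaw rotation to the world-space offset.
    return (cos_y * dx - sin_y * dz, dy, sin_y * dx + cos_y * dz)


def project(point_cam: Vec3, focal_px: float = 1000.0,
            cx: float = 960.0, cy: float = 540.0) -> Tuple[float, float]:
    """Pinhole projection to pixel coordinates (image v grows downward)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (cx + focal_px * x / z, cy - focal_px * y / z)


# One world-space position for the CG character's head, seen from two shots.
head = (0.0, 1.6, 0.0)
shot_a = project(world_to_camera(head, cam_pos=(0.0, 1.6, -5.0), yaw=0.0))
shot_b = project(world_to_camera(head, cam_pos=(5.0, 1.6, 0.0), yaw=-math.pi / 2))
print(shot_a)  # (960.0, 540.0)
print(shot_b)  # approximately (960.0, 540.0), up to floating-point rounding
```

Both cameras are aimed at the head, so it lands at the image center in each shot even though the cameras sit at right angles to each other. Because every shot projects the same world position, eyelines and placement stay consistent across the cut.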

This technology provides filmmakers with a 3D understanding of their scenes, ensuring VFX consistency and enabling creative shot choices. It empowers storytellers to tackle complex narratives with ease, enhancing audience engagement.

What are the export options available in Wonder Studio for post-production workflows?

Wonder Studio offers a rich array of export options to ensure that whatever it creates can be seamlessly integrated into your post-production workflow. Once your project is processed, you can choose to download a variety of outputs, each useful for different post tasks:

- Final Composited Video: The rendered shot with the CG character composited in (up to 4K on Pro), delivered as PNG frames or MP4 and ready to drop into an edit.

- CG Character Render Pass (Alpha): The character frames with an alpha channel, for custom compositing in After Effects or Nuke, where you can add effects such as motion blur.

- Alpha Masks (Rotoscope Masks): Black-and-white mattes of the actor as PNG sequences, useful for custom compositing, paint work, and fixing edge errors.

- Clean Plate: Background frames with the actor removed, as PNGs, which saves manual paint work and lets you re-composite the shot or add elements.

- Camera Tracking Data: Camera animation as FBX or inside scene exports, for aligning additional CG elements without a separate matchmove.

- Motion Capture Data (Body/Hands): FBX files with body and hand animation that can be retargeted to other rigs in Maya or Unity, refined, or collected into motion libraries.

- Full 3D Scene Export (Blender, Maya, Unreal, USD): Complete scenes including character, camera, and lighting, for advanced edits in a range of pipelines.

- Environment/Point Cloud Data: A basic scene reconstruction or point cloud, available through USD or Maya exports, that helps define ground planes and align new objects.

- Audio: Exports do not include audio, so reattach the original audio in your editor and check that it stays in sync.
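If you re-composite the CG character pass over the clean plate in Nuke or After Effects, the core operation is the standard Porter–Duff “over”: result = foreground × α + background × (1 − α). The sketch below applies it per pixel on small nested lists of RGB floats in the 0–1 range. It assumes the character pass uses straight (unpremultiplied) alpha; if the pass is premultiplied, the color already includes α and the formula becomes foreground + background × (1 − α). Check which convention your export uses before compositing.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Straight-alpha 'over': fg * alpha + bg * (1 - alpha)."""
    return tuple(f * alpha + b * (1.0 - alpha) for f, b in zip(fg, bg))


def over_premultiplied(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Premultiplied 'over': fg already includes alpha, so add bg * (1 - alpha)."""
    return tuple(f + b * (1.0 - alpha) for f, b in zip(fg, bg))


def composite_frame(
    character: Sequence[Sequence[RGB]],
    alpha: Sequence[Sequence[float]],
    clean_plate: Sequence[Sequence[RGB]],
) -> List[List[RGB]]:
    """Composite a straight-alpha character pass over a clean plate, pixel by pixel."""
    return [
        [over(f, a, b) for f, a, b in zip(f_row, a_row, b_row)]
        for f_row, a_row, b_row in zip(character, alpha, clean_plate)
    ]


character = [[(1.0, 0.0, 0.0), (1.0, 1.0, 1.0)]]   # red, white
alpha = [[1.0, 0.5]]                               # opaque, half-transparent
plate = [[(0.0, 0.0, 1.0), (0.0, 0.0, 0.0)]]       # blue, black
print(composite_frame(character, alpha, plate))
# [[(1.0, 0.0, 0.0), (0.5, 0.5, 0.5)]]
```

The same operation, with a hand-drawn matte as alpha and the original plate as the foreground, is how a compositor can put an occluding object back in front of the CG character.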

Wonder Studio’s exports cater to both quick workflows and detailed post-production, offering flexibility for professional VFX shots. They integrate seamlessly with standard tools, preserving creative control.

How does Wonder Studio’s cloud-based rendering improve efficiency in VFX projects?

Wonder Studio’s cloud-based rendering enhances VFX efficiency by offloading computation to remote servers, freeing local resources and speeding up workflows. It eliminates traditional bottlenecks, making high-end VFX accessible to all creators.

Key efficiency benefits include:

- Local Resource Offloading: Because processing happens remotely, local machines stay free for other tasks and no powerful workstation is needed, so other work can proceed in parallel.

- Faster Iteration Cycles: Cloud hardware returns results quickly, which shortens waiting times and encourages experimentation that can improve the final result.

- Multiple Job Processing: Several shots can be queued and processed concurrently, so whole sequences move through faster and artists spend less time idle.

- No Infrastructure Maintenance: The cloud handles GPU and memory needs, so there is no hardware to set up or maintain.

- Accessibility and Remote Work: The platform can be used from anywhere, and results are stored online for easy sharing, which supports remote collaboration.

- Consistent Performance: High-end cloud servers process complex scenes at a predictable speed, which makes schedules easier to plan.

- Scalability: Capacity scales with project needs through plan upgrades rather than local hardware purchases.

- Focus on Creative Work: With less time spent waiting on renders and managing technical tasks, artists can stay in a creative flow.

- Reduced Technical Bottlenecks: Server-side processing avoids many local crashes and out-of-memory problems, cutting troubleshooting time and keeping projects on track.

Cloud-based rendering transforms VFX production into a scalable, efficient process, allowing creators to deliver high-quality results faster. It empowers small teams to achieve studio-level output without significant infrastructure investments.

What are the limitations and best practices when using Wonder Studio?

While Wonder Studio is a powerful tool, it has limitations, and following best practices will help you get the best results. Knowing both will help you plan your shoot and post-production workflow:

Limitations of Wonder Studio:

- Character Interaction: Wonder Studio cannot yet accurately handle physical interactions between a substituted CG character and live-action actors or props. In scenes where the actor hugs another person, fights, or handles objects, props may end up misplaced or static instead of following the CG character’s movement, because the AI treats each performer in isolation and cannot infer contact points or object dynamics. For best results, avoid shots where the actor is entangled with others or interacts heavily with set pieces.

- Occlusions: Partial occlusion, when objects or people pass in front of the tracked actor, is a challenge. The system rotoscopes the actor but not foreground objects, so the CG character is composited over everything in the original footage, and elements such as lamps or furniture that should sit in front of the subject end up hidden behind it. Facial capture also degrades when hair, hands, or accessories obscure key features. Heavy occlusions typically require manual compositing fixes to restore foreground elements.

- Extreme Framing (Shot Types): Wonder Studio can manage wide, medium, and close-up shots, but very tight close-ups where most of the body is out of frame may lack enough tracking information. If only eyes or a small body part appear, the AI may misinterpret orientation or lose track entirely. Conversely, extremely wide shots with tiny figures may also struggle, though such shots often don’t require this tool. It is best to capture clear, well-framed footage where the actor remains prominently visible.

- Video Length and Cuts: The platform limits uploads to 15 shots (cuts) per video and recommends keeping each sequence under two minutes for efficient processing. Hard cuts must be used, as transitions like dissolves, fades, and whip pans confuse the AI and produce artifacts. Longer scenes should be split into multiple projects to stay within those constraints. Always trim and organize footage to straightforward cuts before submission.

- Dynamic Camera Limitations: Rapid camera movement, heavy motion blur, or shaky handheld footage can reduce tracking accuracy, because the AI may lose consistency in limb and body positions across blurred or jittery frames, requiring extra smoothing or additional passes. A stabilized camera and a shutter speed fast enough to limit motion blur work better, so plan camera moves that keep the actor’s motion clearly readable.

- Character Rig Constraints: Custom character rigs must follow a humanoid structure with conventional proportions; unusual limb counts, quadrupeds, or extreme proportions often fail. The system’s mocap algorithms assume human-like bone hierarchies and proportional relationships. Characters with nonstandard bone naming or extra root bones may produce upload errors or misalignment, so rigs should strictly follow the provided guidelines to ensure proper retargeting.

- Output Resolution: Lite plan outputs are capped at 1080p, while 4K delivery requires a Pro plan. Projects on lower tiers must upscale externally if higher resolution is needed. Lossless PNG sequences are available but limited by subscription level. Always verify plan capabilities to avoid resolution mismatches in the pipeline.

- Content of Footage: Single-actor replacements are supported; multi-actor scenes require separate passes or complex masking. The AI is trained exclusively on human motion, so replacing animals or non-humanoids typically fails. Scenes with multiple subjects necessitate isolating each actor in separate clips. Plan your shoot to focus on one actor at a time for seamless CG integration.

- Internet and Cloud Dependency: Wonder Studio requires robust internet to upload and process footage in the cloud. Uploading large 4K files can be time-consuming and subject to network variability. Service availability depends on server load and maintenance schedules. Contingency plans for offline edits and scheduled jobs help mitigate downtime risks.
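
The upload constraints described above (at most 15 shots per video, sequences kept under two minutes, hard cuts only) are easy to check before submitting. The following is a minimal Python sketch, not part of Wonder Studio or any Autodesk tool: the `Shot` record, the transition labels, and the greedy splitting rule are assumptions made for illustration, and the limits should be re-checked against the current documentation.

```python
from dataclasses import dataclass

# Limits as quoted in the text above; re-check them against current docs.
MAX_CUTS = 15
MAX_SECONDS = 120.0

# Illustrative transition labels for this sketch, not an official list.
DISALLOWED_TRANSITIONS = {"dissolve", "fade", "whip_pan"}


@dataclass
class Shot:
    name: str
    duration_s: float
    transition_in: str = "cut"  # how this shot begins; "cut" means a hard cut


def validate_sequence(shots: list[Shot]) -> list[str]:
    """Return human-readable problems; an empty list means the sequence passes."""
    problems = []
    if len(shots) > MAX_CUTS:
        problems.append(f"{len(shots)} shots exceeds the {MAX_CUTS}-shot limit")
    total = sum(s.duration_s for s in shots)
    if total > MAX_SECONDS:
        problems.append(f"total length {total:.1f}s exceeds {MAX_SECONDS:.0f}s")
    for shot in shots:
        if shot.transition_in in DISALLOWED_TRANSITIONS:
            problems.append(f"{shot.name}: replace '{shot.transition_in}' with a hard cut")
    return problems


def split_into_projects(shots: list[Shot]) -> list[list[Shot]]:
    """Greedily group shots, in editorial order, into projects within both limits.

    A single shot longer than MAX_SECONDS still ends up alone in its own
    project and must be trimmed by hand.
    """
    projects: list[list[Shot]] = []
    current: list[Shot] = []
    length = 0.0
    for shot in shots:
        if current and (len(current) == MAX_CUTS or length + shot.duration_s > MAX_SECONDS):
            projects.append(current)
            current, length = [], 0.0
        current.append(shot)
        length += shot.duration_s
    if current:
        projects.append(current)
    return projects
```

For example, twenty 10-second shots with one dissolve produce three problems (too many shots, too long overall, one non-hard cut) and split into two projects of 12 and 8 shots. Grouping greedily keeps shots in editorial order, which matches the advice to break longer scenes into multiple projects.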

Best Practices for Using Wonder Studio:

- Plan Shots with Minimal Occlusion: When filming, try to keep your actor fully visible in frame and avoid objects passing in front of them. Prefer simple backgrounds or timed movements to reduce occlusions and simplify tracking. If occlusion is unavoidable, capture alternate clean plates or additional takes without the actor. Manual compositing touch-ups are easier with clear reference footage.

- Use Clean Cuts and Edit Afterwards: Always provide footage with hard cuts only, avoiding fades, dissolves, or other transitions that confuse the AI. Perform Wonder Studio processing on the raw, straight-cut footage and add creative transitions in post-production once the CG is applied. This ensures the system handles each shot cleanly without artifacts. After compositing, reintroduce stylistic editing effects for a polished final sequence.

- Avoid Extreme Close-ups for Replacement: Ensure the camera framing includes enough of the actor’s body to give the AI ample tracking data. If you need close-ups on specific features, consider using live-action or practical solutions for those shots. Alternatively, film a slightly wider frame and crop in post to retain tracking information. This approach balances creative needs with technical requirements.

- Smooth Movement & Visibility: Aim for clear, well-lit performances with minimal motion blur to maintain tracking fidelity. Standard cinematic blur is acceptable, but excessive speed should be avoided. If shooting action or fight scenes, use a higher shutter speed and good lighting. You can reintroduce motion blur in compositing to match the scene’s aesthetic.

- Frame Padding: Leave extra footage at the start and end of each take to allow the AI to predict motion when actors enter or exit the frame. A buffer of one or two seconds beyond the exit helps the system finalize tracking cleanly. Avoid shots where key body parts cross the frame boundary abruptly. This simple technique prevents clipped or erratic animations.

- Lighting and Footage Quality: Provide high-resolution, well-lit footage free of extreme grain or compression artifacts. Consistent lighting conditions improve limb detection and reduce flickering. Avoid strobe effects or harsh shadows that obscure body parts. Clear, stable imagery yields the most reliable CG integration.

- Use Reference Props for Interaction (if needed): Utilize simple stand-in props when actors must hold or touch objects, ensuring correct arm and hand positioning. Plan to replace or animate these props in post to match the CG model’s grip. This technique maintains illusion without confusing the AI’s mocap process. Well-placed props reduce post-compositing effort.

- Check Character Upload Guidelines: Rigorously follow Autodesk’s character preparation rules, including proper bone naming and hierarchy. Ensure a single root bone and remove stray geometry to avoid upload errors. Adhering to the specs eliminates trial-and-error delays in your pipeline. Validate your rig before submission to save time.

- Preview with Templates: Experiment with Wonder Studio’s built-in example projects to assess performance on your footage. Templates reveal how settings and character models interact in controlled scenarios. This early testing uncovers any tracking or compatibility issues. Use these insights to refine your main project setup.

- Post-Process Touch-ups: Always review automated results and plan for manual refinements in compositing software. Minor corrections like adjusting hand intersection or matte anomalies ensure professional polish. Export separate passes to isolate and fix problem areas easily. Allocating time for these tweaks improves overall quality.

- Leverage Exports for Flexibility: Use the provided passes (masks, clean plates, and camera tracks) within your compositing pipeline for precise control. If foreground objects need to be layered over CG, adjust the alpha channel in post. Lighting passes can be fine-tuned independently of the main render. Exploiting these exports maximizes creative freedom.

- Keep Footage Continuous: Process long takes as a single video input (within the 15-cut limit) to benefit from uninterrupted motion tracking. Splitting action prematurely can degrade continuity and create tracking errors. After processing, you can cut the output into editorial segments. This workflow leverages the AI’s temporal coherence.

By following these best practices (clean cuts, clear framing, occlusion management, and diligent rig preparation), you minimize the need for manual fixes and maximize AI efficiency. Proper planning and leveraging Wonder Studio’s export options ensure smooth integration into any VFX pipeline.
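
The rig checks mentioned above (a single root bone, a clean humanoid hierarchy, correct joint naming) can be partly validated before upload. This is a minimal Python sketch under assumptions: the hierarchy is represented as a plain `{joint: parent}` dictionary that you would export from your own DCC tool, and any required joint names must come from the official character preparation guidelines; the names used in the example are hypothetical placeholders. It covers hierarchy structure only, not stray geometry or polycount.

```python
from typing import Optional


def check_rig(
    parents: dict[str, Optional[str]],
    required: frozenset[str] = frozenset(),
) -> list[str]:
    """Structural checks on a joint hierarchy given as {joint: parent or None}.

    Returns a list of problems; an empty list means these checks passed.
    """
    problems = []

    # Exactly one joint should have no parent (the single root bone).
    roots = sorted(j for j, p in parents.items() if p is None)
    if len(roots) != 1:
        problems.append(f"expected exactly one root joint, found {len(roots)}: {roots}")

    # Every referenced parent must itself be a joint in the hierarchy.
    for joint, parent in parents.items():
        if parent is not None and parent not in parents:
            problems.append(f"{joint}: parent '{parent}' is not in the hierarchy")

    # Walk from each joint toward the root; revisiting a joint means a cycle.
    for joint in parents:
        seen: set[str] = set()
        current = joint
        while current is not None and current in parents:
            if current in seen:
                problems.append(f"cycle detected involving '{joint}'")
                break
            seen.add(current)
            current = parents[current]

    # Required names come from the official guidelines; none are hard-coded here.
    missing = sorted(set(required) - set(parents))
    if missing:
        problems.append(f"missing required joints: {missing}")

    return problems
```

For instance, a hierarchy such as `{"Root": None, "Hips": "Root", "Spine": "Hips", "Head": "Spine"}` (hypothetical names) passes, while adding an unparented `"Prop": None` joint is reported as a second root, the kind of extra root bone the guidelines warn about.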

How has Wonder Dynamics impacted the VFX industry since its acquisition by Autodesk?

Wonder Dynamics (the creator of Wonder Studio) was acquired by Autodesk in May 2024, and this move has had significant ripple effects in the VFX industry:

- Validation of AI in VFX: Autodesk’s purchase of Wonder Dynamics validated AI-powered VFX as an industry cornerstone rather than a niche experiment. Major studios and independent artists alike began exploring automated workflows to stay competitive. Investment in similar tools has surged as a result. The endorsement by Autodesk convinced skeptics to incorporate AI into their pipelines.

- Integration into Professional Pipelines: Under Autodesk, Wonder Studio (now Flow Studio) was deeply woven into flagship products like Maya and 3ds Max. This seamless compatibility encourages studios to adopt it without disrupting existing workflows. Plugins and integrated exporters simplified data exchange. Traditional VFX artists feel confident using AI-driven tools alongside legacy software.

- Rebranding and Platform Synergy: Autodesk rebranded Wonder Studio as Autodesk Flow Studio to align with its cloud ecosystem. It now serves as a core component within the broader Flow suite for media production. This integration points to a future where editing, tracking, and AI tasks coexist on the Autodesk cloud. It positions Wonder Studio as one piece in an end-to-end creative toolkit.

- Upskilling and Roles: The acquisition shifted artist roles toward supervising and refining AI outputs rather than manual animation. Training programs and professional discourse emphasize AI tool proficiency. Junior artists handle bulk mocap processing while seniors apply creative judgment. The industry now values familiarity with AI workflows as a key skill.

- Indie and Mid-level Studio Boost: Backing by Autodesk reassured smaller studios that Wonder Studio would have long-term support. Indie filmmakers and mid-tier shops feel safe integrating the tool into their pipelines. Its enterprise-level reliability makes AI VFX accessible to projects with modest budgets. Adoption rates among non-blockbuster productions increased substantially.

- New Features and Accelerated Development: Autodesk resources accelerated the rollout of major updates like the Video-to-3D Scene mode. Continuous feature releases demonstrate a rapid improvement cycle. Teams can expect monthly enhancements driven by scaled engineering efforts. User feedback channels expanded, aligning development with real production needs.

- Industry Competition: The high-profile acquisition spurred competing software vendors to fast-track their own AI initiatives. Adobe and several VFX startups announced similar tools to keep pace. This competitive dynamic broadens available options and drives innovation. VFX professionals benefit from a richer ecosystem of AI-assisted capabilities.

- Training and Education: Autodesk incorporated Flow Studio into Autodesk University talks, webinars, and certification programs. Educational institutions now include AI-based VFX modules featuring Wonder Studio. Online courses and tutorials reference its workflows as industry benchmarks. New artists enter the field already versed in AI-driven techniques.

- Autodesk’s Vision of Cloud Collaboration: As part of Autodesk’s cloud strategy, Flow Studio demonstrates that complex VFX tasks can run entirely online. This vision supports remote collaboration and centralized asset management. Major studios piloting cloud production see accelerated workflows. It signals a gradual industry shift from desktop licenses to cloud services.

Since the acquisition, Wonder Studio has moved from an experimental demo to a professionally backed, enterprise-ready solution that reshapes VFX workflows and project planning. VFX teams now design shoots and pipelines with AI integration in mind, reflecting growing confidence in cloud-based automation.

What are the latest updates and features introduced in Wonder Studio?

Here are some of the latest updates and new features that have been introduced (as of late 2024 and early 2025):

- Wonder Animation – Video-to-3D Scene Project Type: The Wonder Animation Video-to-3D Scene project type reconstructs multi-cut video sequences as a single 3D environment. It preserves spatial continuity by matching camera trajectories and scene geometry across cuts. This feature unlocks workflows for short films and complex sequences beyond single-shot VFX. It represents a major expansion from isolated shot processing.

- Standalone AI Motion Capture Mode: The dedicated AI Motion Capture mode exports only raw body and hand motion data without rendering the composite. It uses significantly fewer credits, making it cost-effective for pure animation pipelines. Artists can import the mocap data into any chosen engine for custom rendering. This transforms Wonder Studio into a versatile cloud mocap service.

- Expanded Export Integrations (Maya & Unreal support, USD): Direct exports to Maya and Unreal Engine scenes simplify integration into established pipelines. The addition of USD export ensures broad compatibility across software like Houdini or Unity. These export options eliminate Blender-centric limitations and streamline studio-wide workflows. Users can now choose their preferred rendering environment without conversion hassles.

- Clean Plate & Camera Track as Separate “Wonder Tools”: Wonder Tools now include standalone utilities for generating clean plates and extracting camera tracks. Users can remove actors or track camera motion independently of character animation workflows. Lower credit costs apply when using these tools in isolation. This flexibility broadens the platform’s utility for non-character tasks.

- Project Management Features: New UI features let users star, filter, and sort projects to manage large shot volumes. Improved project organization enhances productivity for teams handling multiple sequences. Favorites and tags make it easy to quickly locate high-priority tasks. These updates streamline workflow oversight within the cloud dashboard.

- Character Library Additions: The built-in character library received new default models and downloadable texture packs. For example, a “Nick” character was added along with updated material resources. These additions provide more options for rapid testing and demo scenes. Users benefit from pre-made assets without creating custom rigs.

- Improved Accuracy and Retargeting: Under-the-hood updates refined motion prediction for brief actor disappearances and complex movements. Focal length estimation and facial blendshape applications also saw notable enhancements. Advanced retargeting settings now let power users adjust bone maps and constraints manually. These improvements yield smoother, more reliable animations.

- Credits System Introduction: The platform now clearly defines credit consumption per project type and subscription tier. Documentation spells out how many credits are required for AI MoCap versus full composites. Promotional pricing and tiered credit allocations help users budget their usage. Transparent costing prevents surprises in production workflows.

- Autodesk Account Integration: User authentication and account management shifted to Autodesk IDs for unified sign-on. This integration paves the way for seamless access to other Autodesk cloud services. Future enhancements will likely include license management and single-pane dashboards. The move strengthens corporate trust and simplifies user administration.

- Rebranding Updates: The interface and documentation now refer to the tool as Autodesk Flow Studio instead of Wonder Studio. Brand alignment across Autodesk’s cloud suite offers a consistent user experience. Migration of help resources to Autodesk’s domains centralizes support materials. Users should note the name change when seeking tutorials or documentation.

- Community and Learning Resources: Enhanced community support includes an official Discord server with dedicated channels and expert moderators. New tutorial videos and template projects help users master features like Wonder Animation. Autodesk University sessions and online webinars incorporate Flow Studio workflows. This expanded educational ecosystem accelerates user proficiency.

- Enterprise Features: An Enterprise plan introduces custom credit allocations, team account management, and priority support. Tailored pricing and service-level agreements suit large studios with high-volume needs. Admin tools let managers oversee usage and project ownership organization-wide. These options demonstrate maturation into a scalable production service.

- Performance and Stability Improvements: Ongoing bug fixes and UI tweaks have enhanced upload validation and error messaging. Improved handling of long filenames and video status displays reduces user frustration. Backend optimizations lead to smoother processing and fewer timeouts. These incremental refinements result in a more robust, reliable tool.

Recent release notes emphasize the Video-to-3D Scene and Standalone AI Mocap modes, complemented by direct exports to Maya/Unreal, USD support, and specialized Wonder Tools like Clean Plate and Camera Track. These enhancements reflect Autodesk’s rapid development cycle, broadening Wonder Studio’s flexibility across diverse production pipelines.
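
Because credits are consumed per project type, a quick estimate helps decide between the standalone AI Motion Capture mode and a full composite. This minimal Python sketch uses the monthly allowances quoted in this article's FAQ (3000 credits on Lite, 12000 on Pro). The per-second rates are hypothetical placeholders, and charging per second of footage is itself an assumption, so substitute the figures from the official credits documentation before relying on the numbers.

```python
# Monthly allowances as quoted in this article's FAQ; verify current values.
PLAN_CREDITS = {"lite": 3000, "pro": 12000}

# HYPOTHETICAL placeholder rates (credits per second of footage). Replace them
# with the figures from the official credits documentation.
CREDITS_PER_SECOND = {"ai_mocap": 5, "live_action": 20}


def credits_needed(jobs: list[tuple[str, float]]) -> float:
    """Sum credits for (project_type, seconds_of_footage) pairs."""
    return sum(CREDITS_PER_SECOND[kind] * seconds for kind, seconds in jobs)


def remaining_after(jobs: list[tuple[str, float]], plan: str) -> float:
    """Credits left in the plan's monthly allowance; negative means over budget."""
    return PLAN_CREDITS[plan] - credits_needed(jobs)


if __name__ == "__main__":
    month = [("live_action", 60), ("ai_mocap", 120)]
    print(credits_needed(month), remaining_after(month, "lite"))
```

With these placeholder rates, one minute of full live-action processing plus two minutes of mocap-only processing needs 1800 credits, leaving 1200 of the Lite allowance. Keeping the rates in one table makes it easy to update them when the official figures change.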

How does Wonder Studio support collaborative workflows among VFX teams?

Collaboration is key in VFX production, and Wonder Studio has features and design choices that facilitate teamwork and integration within a team’s pipeline:

- Multi-User Access (Enterprise): The Enterprise plan supports multiple users under one organization account with shared credits. Supervisors can review and coordinate all artist projects centrally. Multiple artists can contribute shots into a unified credit pool to maintain oversight. Custom scalability and priority support further streamline studio-level collaboration.

- Cloud-Based, Accessible Anywhere: Wonder Studio operates entirely in the cloud so team members worldwide can work on the same project without exchanging large files. Processed results are viewable instantly through the web interface. Directors and supervisors can log in to review shots without waiting for file transfers. This centralized approach ensures everyone sees the latest version, reducing miscommunication.

- Shotgun/Flow Integration: Autodesk is positioning Flow Studio for integration with ShotGrid to automate production tracking. Tasks, versions, and shot statuses can update automatically in the tracking system. Artists could flag Wonder Studio completions to notify downstream departments. Even before formal integration, teams link cloud outputs into their ShotGrid pipelines manually.

- Open Standards for Smooth Handoffs: Wonder Studio exports in industry formats like USD and FBX. Animation data and scene assets import seamlessly into tools such as Maya, Houdini, or Unreal without loss. Departments use their preferred software with minimal friction. This openness ensures each team member can pick up AI-generated assets easily.

- Integration with Version Control: Outputs (images, FBXs, USDs) integrate into asset management systems like Perforce or Git LFS. Automated scripts can pull completed jobs directly into shared folders. Because processing is deterministic, teams can rerun shots for consistent results. As soon as a Wonder Studio job finishes, downstream artists immediately gain access.

- Consistent Results for all Team Members: The same AI algorithms process every shot, producing uniform motion style and lighting across a sequence. This baseline consistency avoids the stylistic variances of different animators. Teams receive reliable outputs that require only minor tweaks for continuity. It effectively standardizes the animation “assistant” role across the production.

- Collaboration between Artists and AI: Wonder Studio takes on routine grunt work, letting junior artists bulk-process shots while seniors refine creative details. The AI acts as a technical partner, freeing human artists to focus on higher-level decisions. This division of labor accelerates throughput and leverages individual strengths. It creates a new dynamic where artists and AI collaborate on each shot.

- Review and Feedback Loops: Fast web-based previews and downloadable QuickTime files enable supervisors to review AI outputs immediately. Rapid turnaround accelerates iteration cycles and boosts overall quality. Directors and animators give precise notes based on real frames. The tightened loop encourages more collaborative refinement.

- Community Collaboration Support: Wonder Dynamics maintains Discord servers and forums for sharing tips, scripts, and workflows. Teams learn integration tricks, from Unreal Engine hacks to pipeline automations, directly from peers. This communal knowledge base complements in-house pipelines. Shared best practices help studios adopt Wonder Studio more effectively.

- Example Workflow: A small studio logs actor footage, preps characters in Wonder Studio, and assigns junior artists to run overnight jobs. Leads review USD/FBX outputs in Maya and apply tweaks as needed. Refined assets flow into lighting and compositing for environment integration. Producers track progress on a cloud dashboard, viewing playblasts and overall shot status at a glance.

Overall, Wonder Studio fosters team collaboration through shared cloud access, open-standard exports, and consistent AI-driven results. By automating routine tasks and integrating with existing pipelines, it shifts human effort to creative decision-making and efficient interdepartmental handoffs.
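
The version-control point above mentions scripts that pull completed jobs into shared folders. Below is a minimal Python sketch of that idea using only the standard library. It does not talk to Wonder Studio or Autodesk services: it assumes your team already downloads each finished job into its own folder and writes a `DONE` marker file when the download completes, both of which are hypothetical conventions you would define in your own pipeline.

```python
import shutil
import tempfile
from pathlib import Path

# Hypothetical convention: your own download step writes this file last,
# so its presence means the job folder is complete.
MARKER = "DONE"


def sync_finished_jobs(downloads: Path, shared: Path) -> list[str]:
    """Copy finished job folders from `downloads` into `shared`, once each.

    Returns the names of the folders copied on this run.
    """
    shared.mkdir(parents=True, exist_ok=True)
    copied = []
    for job in sorted(p for p in downloads.iterdir() if p.is_dir()):
        target = shared / job.name
        # Skip jobs still downloading and jobs already synced on a previous run.
        if (job / MARKER).exists() and not target.exists():
            shutil.copytree(job, target)
            copied.append(job.name)
    return copied


if __name__ == "__main__":
    # Self-contained demo on a temporary directory tree.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        finished = root / "downloads" / "sh010"
        finished.mkdir(parents=True)
        (finished / "anim.fbx").write_text("placeholder")
        (finished / MARKER).touch()
        (root / "downloads" / "sh020").mkdir()  # still downloading: no marker
        print(sync_finished_jobs(root / "downloads", root / "shared"))
```

Run on a schedule (for example from cron), it copies each finished job once and skips folders without the marker until their download completes. Copying rather than moving keeps the download area intact as a local backup.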

What are the use cases of Wonder Studio in film, television, and gaming industries?

Wonder Studio’s ability to automate character animation and integration opens up a variety of use cases across different media industries:

- Film (Movies): Indie filmmakers can generate previz or final VFX shots by filming stand-ins and replacing them with CG characters, saving time on basic movements. Stunt actors’ performances map directly onto creature models for hero shots or background crowds. Low-budget productions gain ambitious effects (CG aliens, robots, or actor doubles) without large animation teams. Even major films can offload 80–90% of routine animation tasks to Wonder Studio.

- Television (TV Series & Streaming): Episodic shows with tight schedules can insert recurring CG characters (humanoid aliens or virtual hosts) using the same AI pipeline each week. Quick turnarounds allow sci-fi and fantasy series to maintain visual consistency across episodes. Directors get rapid previz for complex sequences, aiding fast decision-making. Children’s and educational programs benefit from budget-friendly integration of cartoon characters with live hosts.

- Gaming Cinematics and Trailers: Game studios film actors for motion reference and use Wonder Studio to animate in-engine models for cutscenes, reducing reliance on mocap stages. Marketing trailers featuring CG characters alongside live actors become more affordable. Exported animations plug into Unreal Engine (often driving MetaHumans) for rapid storytelling prototypes. Smaller developers deliver high-quality cinematics without extensive mocap gear.

- Augmented Reality/Virtual Production: Though not real-time, Wonder Studio can generate quick CG content for LED-wall backgrounds or AR demos between takes. Virtual production teams can preview CG doubles on set by reprocessing takes overnight. Future consumer-focused AR apps might let users record and insert full-body CG figures. The technology lays groundwork for real-time collaborative uses down the line.

- Advertising and Commercials: Agencies animate mascots or products by filming actors performing motions and replacing them with CG versions. Campaigns requiring lifelike product interactions or digital spokescharacters become faster and cheaper. Wonder Studio supports lossless mask and clean-plate exports to integrate seamlessly with compositing. Tight ad budgets benefit from AI-driven turnaround.

- Visualization and Theme Parks: Pre-show videos and ride media often mix live actors with CG figures. Wonder Studio simplifies creating these mixed media experiences without full animation pipelines. Museums and experiential venues can produce high-quality projections or interactive demos cost-effectively. Live footage of hosts can be augmented with digital characters on par with film-grade VFX.

- Education and Fan Projects: Film schools and hobbyists leverage Wonder Studio to explore VFX without steep learning curves. Students produce professional-quality creature effects or lightsaber duels with minimal resources. Fan filmmakers integrate CG droids or duplicates into their shoots, raising the bar for independent content. The tool democratizes VFX experimentation for learning and passion projects.

Some specific hypothetical examples to illustrate:

- Film: An indie horror production shoots actors for ghost effects, then uses Wonder Studio to replace them with spectral models and generate clean plates for creepy apparitions.

- TV: A fantasy series films an actor delivering lines as a creature on set, then auto-animates the final CG model for weekly episodes, tweaking only facial details.

- Gaming: A developer shoots a live actor running alongside a stunt double, then uses Wonder Studio to swap in a CG hero model for a mixed-reality trailer.

Across these industries, the common thread is dramatic time and cost savings, enabling content that might otherwise be out of reach. Wonder Studio brings Hollywood-caliber VFX within budget and schedule constraints of film, TV, and game productions.

How does Wonder Studio ensure high-quality output in various production environments?

Ensuring high-quality output means that Wonder Studio’s results meet the bar for professional use across different scenarios (film, broadcast, games). It achieves this through several means:

- High Fidelity Motion and Animation: Wonder Studio captures detailed body and facial motion, including finger movements, and retargets it to character rigs with proper foot planting. Advanced AI models minimize jitter and preserve natural physics. The retargeting respects rig constraints so movements align with character proportions. This detail makes CG performances feel authentic and professional.

- Physically Based Lighting Integration: The system analyzes scene lighting (direction, intensity, and color) and applies matching illumination to CG characters automatically. Ambient occlusion and grain matching further blend the elements. The result avoids the “pasted-on” look and maintains photorealism. Artists can still refine lighting in high-end renderers if desired.

- Resolution and Format Support: Outputs support up to 4K resolution on Pro plans and provide lossless PNG sequences for final compositing. Clean plates, alpha masks, and camera data export without compression artifacts. Character textures and normal maps maintain their original fidelity during rendering. This ensures no quality loss entering post-production pipelines.

- Professional Export Elements: Wonder Studio delivers motion data, camera tracks, and layered exports in industry-standard formats (USD, FBX, EXR). Compositors receive granular assets (masks, clean plates, and passes) to address any imperfections. This “pipeline-ready” approach lets teams fine-tune composites rather than rebuild elements from scratch. It provides a robust foundation for cinematic polishing.

- Artist in the Loop – Subjective Work: While AI handles technical tasks, final artistic judgments (color grading, motion smoothing) remain with the artist. Exports to Maya, Nuke, or Blender facilitate human-driven refinements. Wonder Studio handles roughly 90% of the work and leaves the final 10% to skilled artists, which is where productions reach truly high-end quality.

- Continuous Learning and Updates: The AI models improve over time as Wonder Dynamics (now Autodesk) releases updates. New training data and optimizations continually enhance motion accuracy and lighting matching. Studios benefit from ongoing improvements without changing their workflow. This ensures Wonder Studio keeps pace with evolving production standards.

Together, these capabilities deliver professional-grade outputs across film, television, and gaming pipelines. By combining detailed motion capture, coherent lighting, high-resolution exports, and robust asset layers, Wonder Studio provides a solid base for final artistic polish.
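
Lossless PNG sequences are only useful downstream if every frame arrived, and a dropped frame in a long download is easy to miss. This is a minimal Python sketch, assuming frames are named with a trailing frame number such as `beauty_0001.png`; that naming scheme is a hypothetical example, not necessarily what Wonder Studio exports, so adjust the pattern to your actual files.

```python
import re
from pathlib import Path

# Assumed naming: any prefix followed by a trailing frame number, e.g. beauty_0001.png.
FRAME_RE = re.compile(r"^(?P<prefix>.*?)(?P<frame>\d+)\.png$", re.IGNORECASE)


def missing_frames(names) -> dict[str, list[int]]:
    """Group PNG file names into sequences by prefix and list any missing frames."""
    sequences: dict[str, set[int]] = {}
    for name in names:
        match = FRAME_RE.match(name)
        if match:
            sequences.setdefault(match["prefix"], set()).add(int(match["frame"]))
    report = {}
    for prefix, frames in sequences.items():
        # Every frame between the first and last present frame should exist.
        gaps = sorted(set(range(min(frames), max(frames) + 1)) - frames)
        if gaps:
            report[prefix] = gaps
    return report


def check_folder(folder: Path) -> dict[str, list[int]]:
    """Run missing_frames over the files directly inside `folder`."""
    return missing_frames(p.name for p in folder.iterdir() if p.is_file())
```

Sequences are grouped by prefix, so beauty, alpha, and other passes in the same folder are checked separately. Note that this only finds gaps between the first and last frame present; a missing first or last frame needs a comparison against the expected shot length.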

What educational resources and tutorials are available for mastering Wonder Studio?

To help users get up to speed and master both basic and advanced features, Wonder Studio offers these learning resources:

- Official Documentation: The Wonder Studio Help Center (now labeled as Autodesk Flow Studio documentation) is the primary reference. It contains step-by-step guides on everything from getting started to advanced features. Key sections include:

- Getting Started: Basics of the interface, how to create projects, and quick start guide.

- Character Upload: Detailed instructions on preparing and uploading custom 3D characters, including requirements and how to fix validation.

- Project Workflows: Guides on using different project types (Live Action Easy vs. Advanced, AI Motion Capture only, Animation/Video to 3D Scene, etc.).

- Wonder Tools: Explanation of Clean Plate and Camera Track tools and how to use them in isolation.

- Export Instructions: How to export scenes to Blender, Maya, Unreal, and USD, and how to export individual elements like masks and camera track.

- Platform Limitations: A page outlining current limitations and best practices (some of which we covered, like shot lengths and occlusion issues). Reading this helps avoid common pitfalls.

- Release Notes: A running changelog of new features and fixes. This is useful to keep track of updates and understand newly added capabilities.

- Video Tutorials: Wonder Dynamics has provided a suite of video tutorials that visually guide users through common tasks. As of early 2025, these include:

- Platform Tutorials: Overviews of the Wonder Studio workflow, showing how to take sample footage and process it through the tool.

- Blender Scene Workflow Tutorial: A demonstration of exporting to Blender and continuing work there.

- Character Creation Tutorials: This series has multiple parts:

- Character Requirements Tutorial: What a rig needs for best results.

- AI MoCap Overview: How the motion capture system works with custom characters.

- Maya Add-On Tutorial: Using the Maya add-on to walk through character prep and joint mapping step by step.

- Community Forums & Blog: The Wonder Studio Discord and Autodesk Community forums host FAQs, usage tips, and troubleshooting threads. Official blog posts and case studies showcase real project breakdowns and advanced workflows. These peer-driven channels complement formal docs with practical, real-world advice.

- Conferences, Webinars & Online Courses: Autodesk University sessions and occasional webinars cover Flow Studio features and future roadmaps. Third-party platforms like Udemy or CGCircuit may offer dedicated courses on AI-driven VFX. Recorded sessions provide flexible learning for diverse schedules.

- Template Projects & Sample Files: Built-in templates let users reverse-engineer example scenes. Loading sample projects demonstrates best practices for shot setup, character replacement, and final exports. Experimenting with these templates accelerates hands-on mastery.

By combining official guides, video walk-throughs, community wisdom, and real project examples, users can quickly progress from beginner to advanced workflows. Regularly checking release notes and engaging with peers ensures continuous learning and mastery.

Where can users find community support and share experiences with Wonder Studio?

A strong and active community has formed around Wonder Studio, where users can seek support, share work, and learn from one another:

- Official Discord Server: The primary hub for real-time Q&A, workflow tips, and showcase channels. Wonder Dynamics staff and experienced peers answer questions, share scripts, and provide early announcements. Community-driven channels foster collaboration and quick troubleshooting.

- Social Media (X/Twitter, Instagram): @WonderDynamics posts bite-sized updates, demo clips, and retweets impressive user showcases. Instagram highlights visual before/after reels, while Facebook groups may host longer discussions. Tagging official accounts can earn broader visibility for standout projects.

- Reddit & Online Forums: Subreddits like r/vfx, r/Filmmakers, and r/AfterEffects feature threads on Wonder Studio tests, integration tips, and industry perspectives. Autodesk’s Community site offers formal support forums monitored by experts and power users. Stack Exchange occasionally hosts in-depth technical Q&A.

- YouTube & Content Creators: Independent creators produce tutorials, reviews, and live demos often with detailed comments and user feedback. Searching for “Wonder Studio tutorial” yields step-by-step guides and creative experiments. Comment sections serve as informal help desks.

- ArtStation, CGSociety & Showcases: Artists share breakdowns, reel updates, and case studies on portfolio platforms. These high-quality showcases provide inspiration and technical insights. Vimeo or personal blogs often accompany shared projects with workflow notes.

- User Groups & Events: Autodesk user-group meetups, SIGGRAPH sessions, and local VFX gatherings sometimes include Flow Studio discussions. These in-person or virtual events allow networking with fellow professionals and direct feedback from developers.

- Blogs, Articles & Case Studies: Medium posts and VFX-industry blogs document individual experiences, lessons learned, and best practices. Published breakdowns in outlets like CGChannel or VFX Voice offer production-grade insights.

- Collaborative Challenges & Community Projects: Discord-hosted challenges (“Replace yourself with a superhero,” etc.) encourage friendly competition and shared learning. Peer reviews during these events help participants refine their pipelines.

Whether you need fast technical support, creative inspiration, or deep production insights, the Wonder Studio community is active and welcoming, spanning real-time chat, social showcases, and formal forums. New users are encouraged to jump in, ask questions, and show off their experiments; engaging across these channels keeps you informed, supported, and connected as the tool evolves.

Frequently asked questions

- What does Wonder Studio do in a VFX pipeline?

Wonder Studio automates the animation, lighting, and compositing of CG characters into live-action footage. It tracks an actor's performance and replaces the actor with a 3D character, handling both motion capture and scene integration.

- Do I need special cameras or a motion capture suit?

No. It uses single-camera footage (phone, DSLR, etc.) for markerless motion capture, so no suits or special hardware are required.

- Can it animate any 3D character?

It works best for humanoid characters with rigged skeletons; non-humanoid creatures don't map well because the AI is built around human motion. The polycount limit is about 1.5M polygons.

- What output formats does it provide?

Outputs include 4K rendered video, a CG character pass, a clean plate, alpha masks, camera tracking (FBX), 3D scene files (Blender, Maya, Unreal, USD), and AI mocap data (FBX, USD).

- How long of a video can it process, and can it handle multiple shots?

It processes videos up to 2 minutes long with up to 15 hard cuts. Dissolves and other transitions are not supported, and longer scenes must be split into separate projects. The "Video to 3D Scene" feature maintains spatial continuity.

- What are the system requirements?

It is browser-based (Chrome/Safari) and runs in the cloud, so you only need a computer and a good internet connection. Mobile browsers are not supported.

- How much does it cost? Is there a free version?

Lite plan: $29.99/month (1080p, 3000 credits). Pro plan: $149.99/month (4K, 12000 credits). Enterprise plan: custom pricing. There is no fully free version, though trial periods are sometimes offered.

- What are some limitations?

It struggles with physical interactions, heavy occlusions, and multi-view input. It tracks up to 2 actors (Lite) or 4 actors (Pro), and it is best suited to realistic animation rather than exaggerated motion.

- How does the credit system work?

Each plan includes a monthly credit allowance (3000 on Lite, 12000 on Pro) that projects consume: 20 credits per second for live-action projects and 4 credits per second for AI mocap or other tools. Credits reset monthly and do not roll over.

- Can it be integrated into professional VFX workflows?

Yes. It exports FBX, USD, Maya/Blender/Unreal scene files, and EXR/PNG image sequences. It is typically used for first-pass animation and compositing, with the results refined in Nuke, Maya, and similar tools. Autodesk's ownership strengthens Maya/USD integration.
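To make the credit and limit figures in the answers above concrete, here is a minimal Python sketch of a pre-flight check you might run before uploading a shot. It assumes the numbers quoted in this FAQ (20 or 4 credits per second, 3000/12000 monthly credits, 2 minutes, 15 hard cuts, 2/4 actors, about 1.5M polygons), which Autodesk may change. It is not part of any Wonder Studio or Flow Studio API: `PlanLimits`, `PLANS`, `estimate_credits`, and `preflight` are hypothetical names, and rounding partial seconds up is an assumption the figures above do not specify.

```python
import math
from dataclasses import dataclass

# Hypothetical helper built from the FAQ figures; not an official API.
CREDITS_PER_SECOND = {"live_action": 20, "ai_mocap": 4}
MAX_DURATION_S = 120       # "up to 2 minutes"
MAX_HARD_CUTS = 15
MAX_POLYGONS = 1_500_000   # "about 1.5M polygons"


@dataclass(frozen=True)
class PlanLimits:
    monthly_credits: int
    max_actors: int


PLANS = {
    "lite": PlanLimits(monthly_credits=3000, max_actors=2),
    "pro": PlanLimits(monthly_credits=12000, max_actors=4),
}


def estimate_credits(duration_s: float, project_type: str) -> int:
    """Estimate credits for one project; partial seconds are rounded up (assumption)."""
    return math.ceil(duration_s) * CREDITS_PER_SECOND[project_type]


def preflight(plan: str, duration_s: float, hard_cuts: int, actors: int,
              polygons: int, project_type: str = "live_action") -> list[str]:
    """Return a list of problems; an empty list means the shot fits the quoted limits."""
    limits = PLANS[plan]
    problems = []
    if duration_s > MAX_DURATION_S:
        problems.append(f"duration {duration_s}s exceeds {MAX_DURATION_S}s; split into separate projects")
    if hard_cuts > MAX_HARD_CUTS:
        problems.append(f"{hard_cuts} hard cuts exceeds the limit of {MAX_HARD_CUTS}")
    if actors > limits.max_actors:
        problems.append(f"actor count {actors} exceeds the {plan} plan limit of {limits.max_actors}")
    if polygons > MAX_POLYGONS:
        problems.append(f"{polygons} polygons exceeds about {MAX_POLYGONS}")
    # Compares this single project against the full monthly allowance;
    # it does not track credits already spent on other projects.
    cost = estimate_credits(duration_s, project_type)
    if cost > limits.monthly_credits:
        problems.append(f"needs {cost} credits; the {plan} plan has {limits.monthly_credits}/month")
    return problems


if __name__ == "__main__":
    print(estimate_credits(45, "live_action"))  # 900 (45 s x 20 credits)
    # Seconds of live action a Lite allowance covers: 3000 // 20
    print(PLANS["lite"].monthly_credits // CREDITS_PER_SECOND["live_action"])  # 150
    print(preflight("lite", duration_s=90, hard_cuts=3, actors=3, polygons=800_000))
    # ['actor count 3 exceeds the lite plan limit of 2']
```

Under these figures, one maximum-length (2-minute) live-action project costs 120 × 20 = 2400 credits, so a Lite allowance of 3000 covers it with 600 credits (30 more seconds of live action) to spare. Because credits do not roll over, checking each project against the monthly allowance is only a first approximation; budgeting several projects in one month would need a running total.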

Conclusion

Wonder Studio, an AI-driven tool by Wonder Dynamics, automates VFX by animating, lighting, and compositing CG characters into live-action scenes, streamlining complex workflows for creators. It offers markerless motion capture, intelligent lighting, custom character support, and exports for major 3D applications and game engines, so it fits into existing pipelines. Cloud-based processing speeds up turnaround, and updates such as Video to 3D Scene reconstruction extend its capabilities. Despite limitations with heavy occlusions and physical interactions, it lowers the barrier to high-end VFX, letting indie projects approach the look of big-budget productions. Now part of Autodesk and rebranded as Flow Studio, it carries added industry credibility as AI-assisted workflows reshape VFX. It lets creators focus on storytelling while making high-quality visuals more accessible and achievable.

Sources and citations

- Wonder Dynamics Official Website – Autodesk Flow Studio (formerly Wonder Studio) Overview [Autodesk – Wonder Dynamics: AI-powered VFX Software]

- Autodesk Solutions – Wonder Dynamics: AI-powered VFX software [Autodesk – Wonder Dynamics: AI-powered VFX Software]

- CG Channel – Wonder Studio is now available (July 2023) [CG Channel – Wonder Studio is Available]

- TechCrunch – Autodesk acquires AI-powered VFX startup Wonder Dynamics (May 2024) [TechCrunch – Autodesk Acquires Wonder Dynamics]

- Wonder Dynamics Help Center – Getting Started & Platform Limitations [Autodesk Flow Studio Help – Getting Started]

- CG Channel – Wonder Studio export features and limitations [CG Channel – Wonder Studio is Available]

- Autodesk Flow Studio Documentation – FAQ and Community Links [Autodesk Flow Studio Help – Documentation]

- CG Channel – Wonder Studio pricing and terms of use [CG Channel – Wonder Studio is Available]

- Autodesk (Wonder Dynamics) – Release Notes (latest features such as Video to 3D Scene and AI Mocap) [Autodesk Flow Studio Help – Release Notes]

Recommended

- How to Download MetaHuman: The Ultimate Step-by-Step Guide to Accessing Digital Humans

- Virtual Influencers: AI-Generated vs 3D-Modeled – Which Is Better?

- How to Make Hair in Blender

- Blender Redistribute Curve Points Geometry Nodes Preset: A Comprehensive Guide

- Top 10 Cyberpunk 2077 Mods to Transform Your Night City Experience

- Conversational AI in Unreal Engine: Integrate (MetaHuman, ReadyPl AayerMe, Convai etc) for Real-Time Interactive Characters

- Ornatrix Guide: Best Hair and Fur Plugin for 3D Artists in Maya, 3ds Max, and Blender

- Saving and Switching Blender Camera Angles with The View Keeper

- A Beginner’s Guide to The View Keeper Add-on for Blender

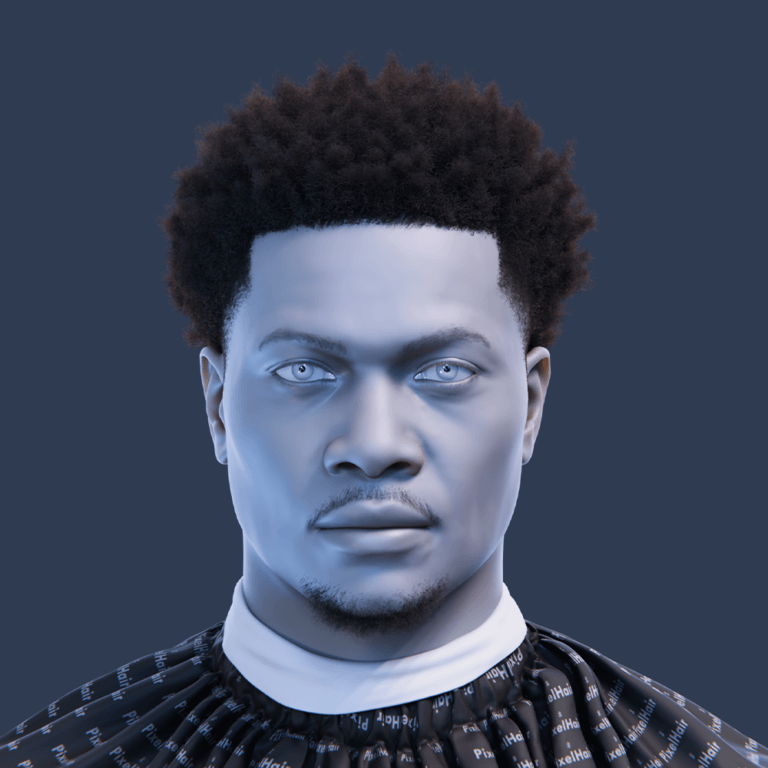

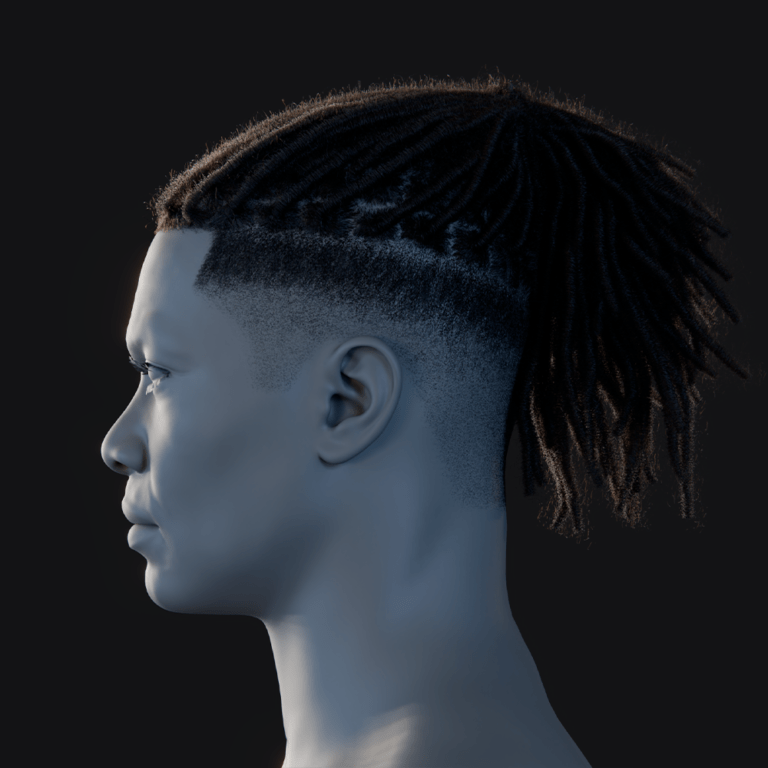

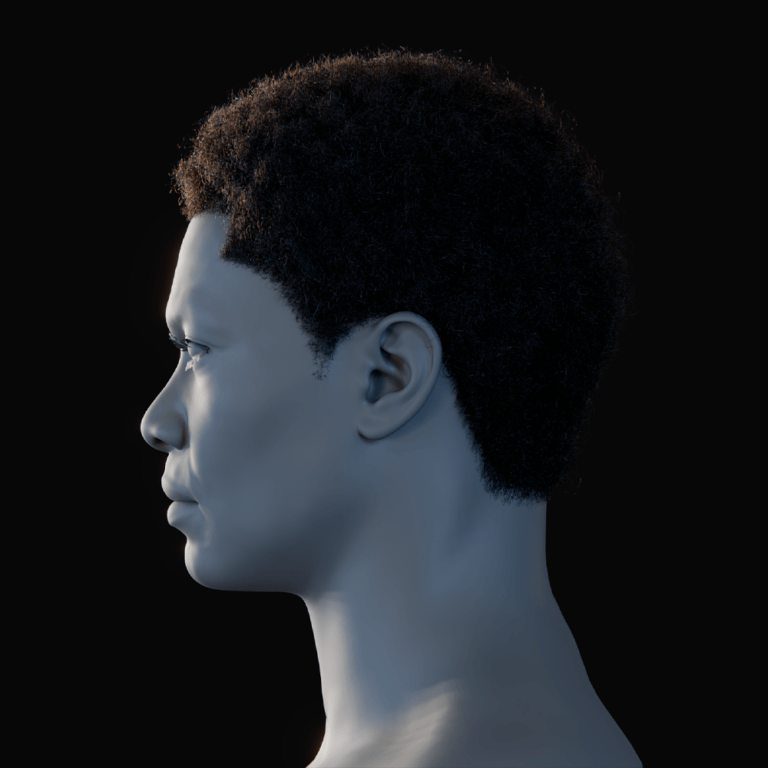

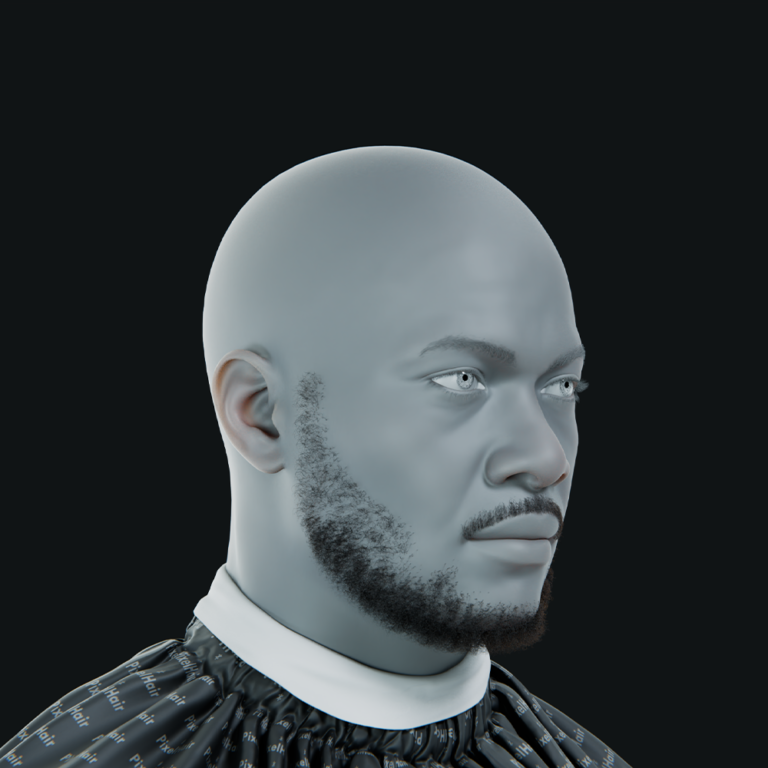

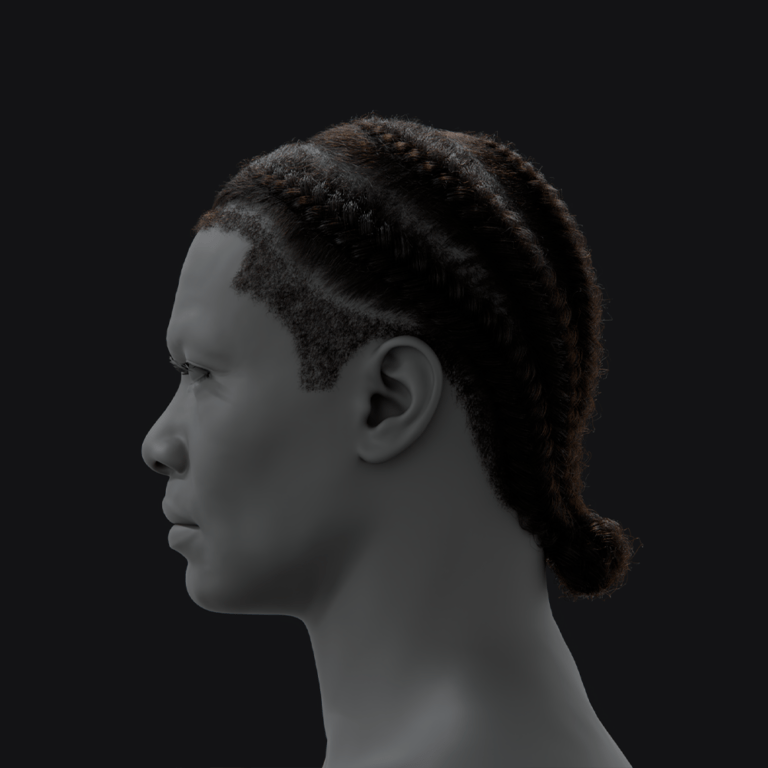

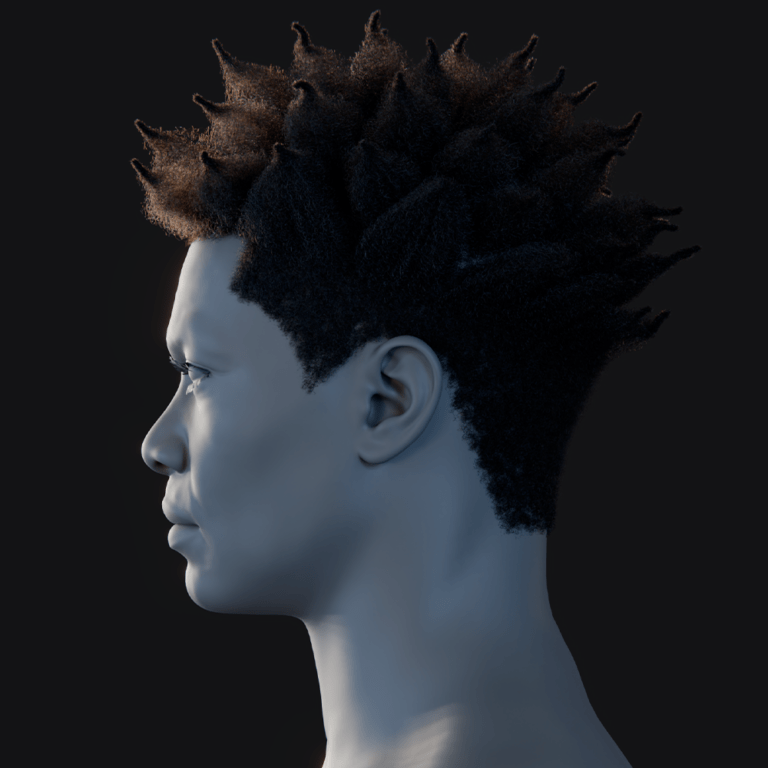

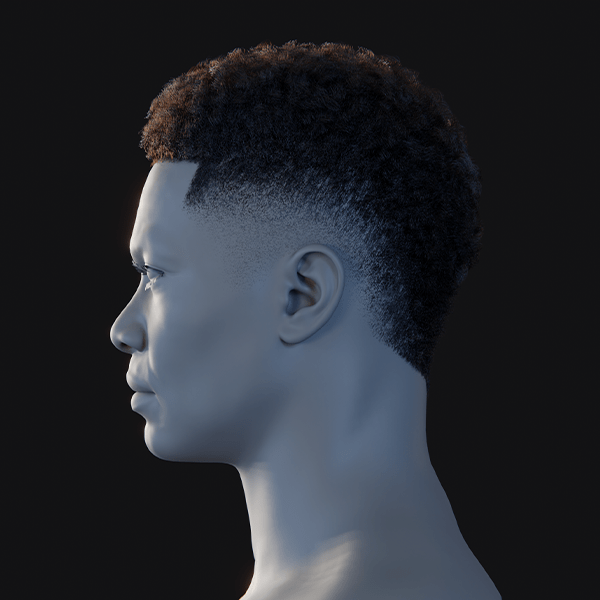

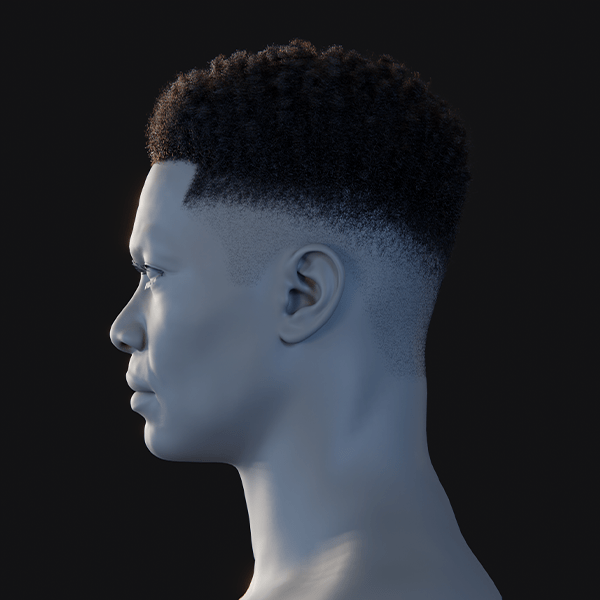

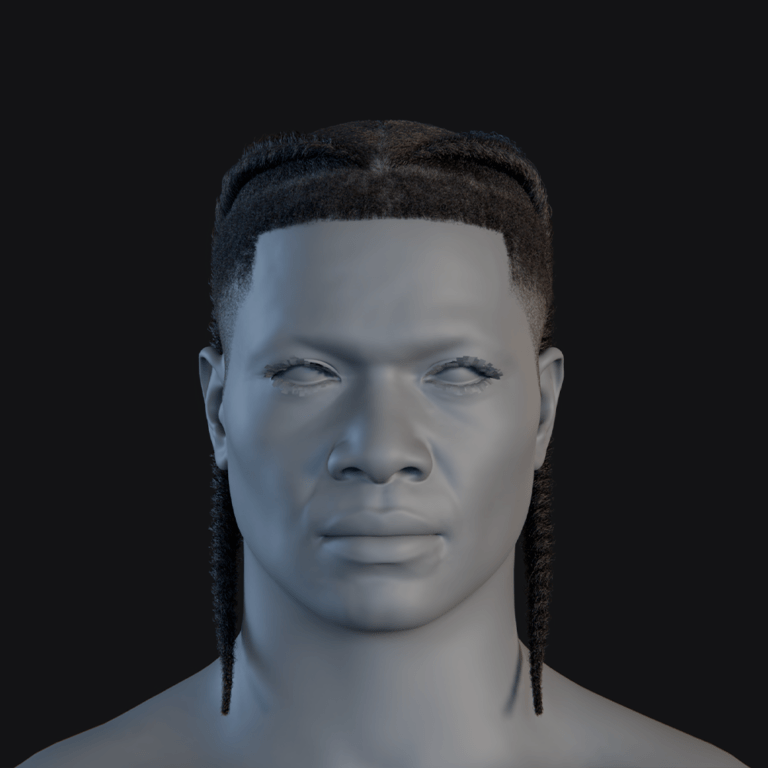

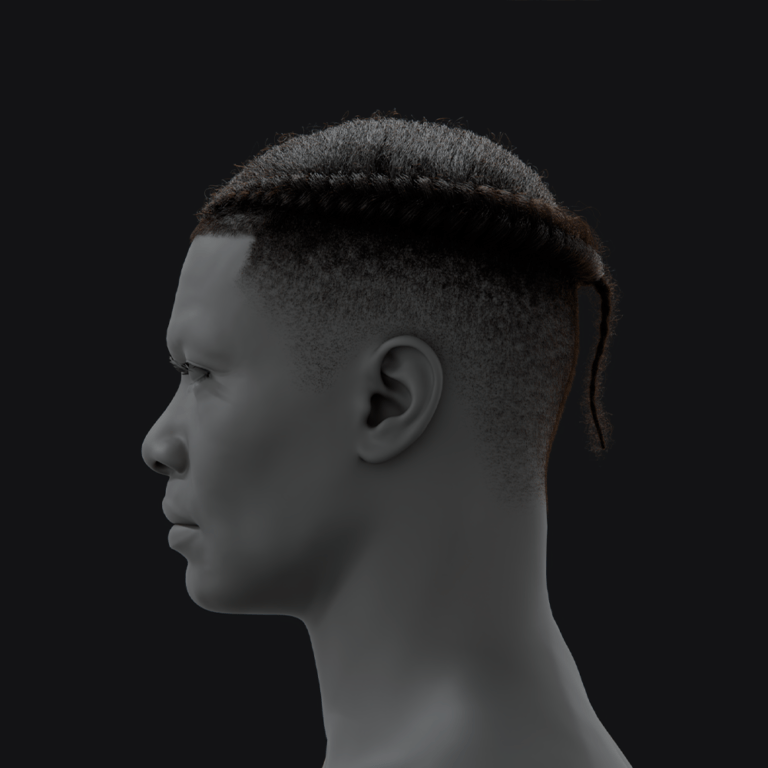

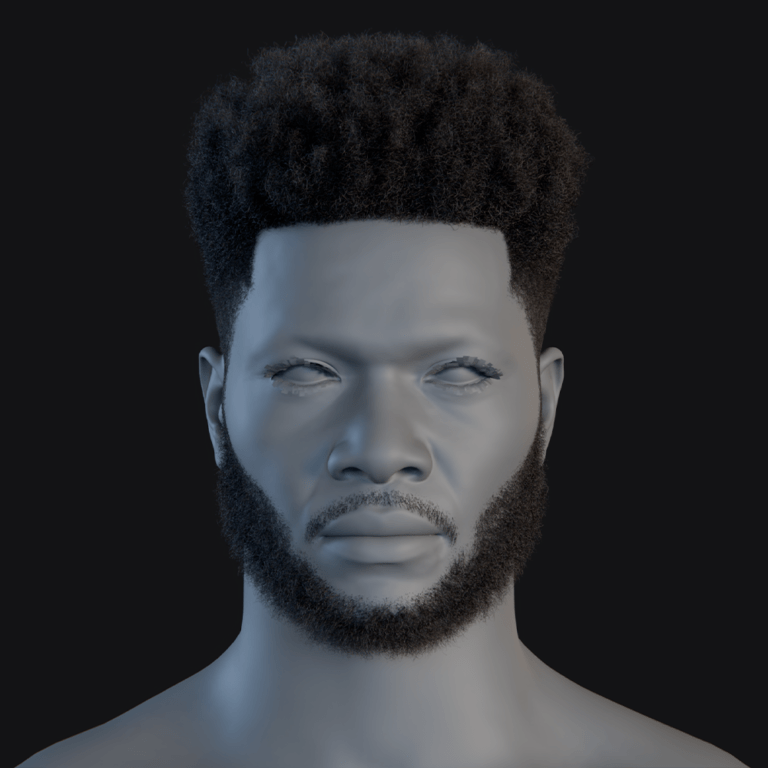

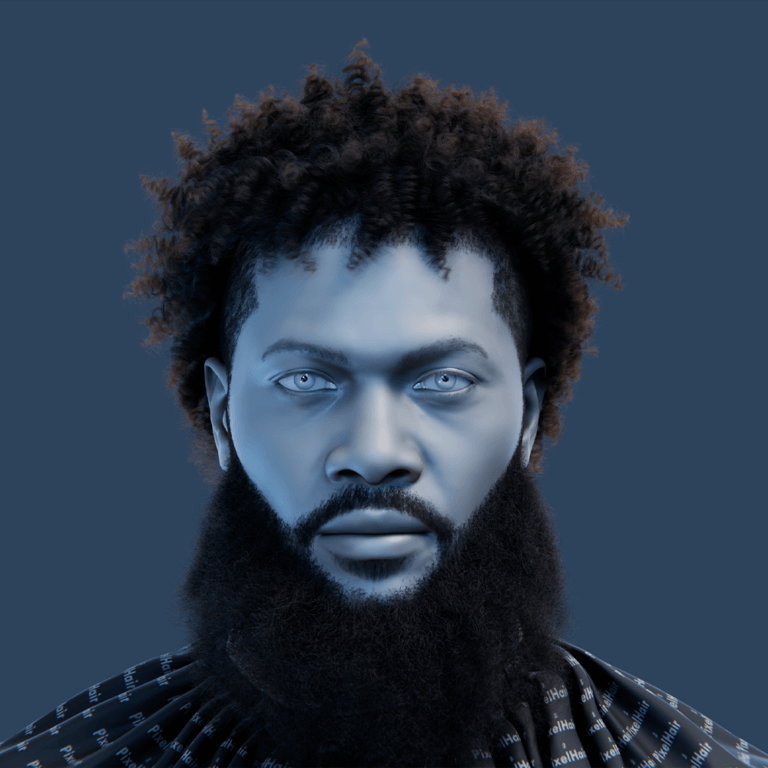

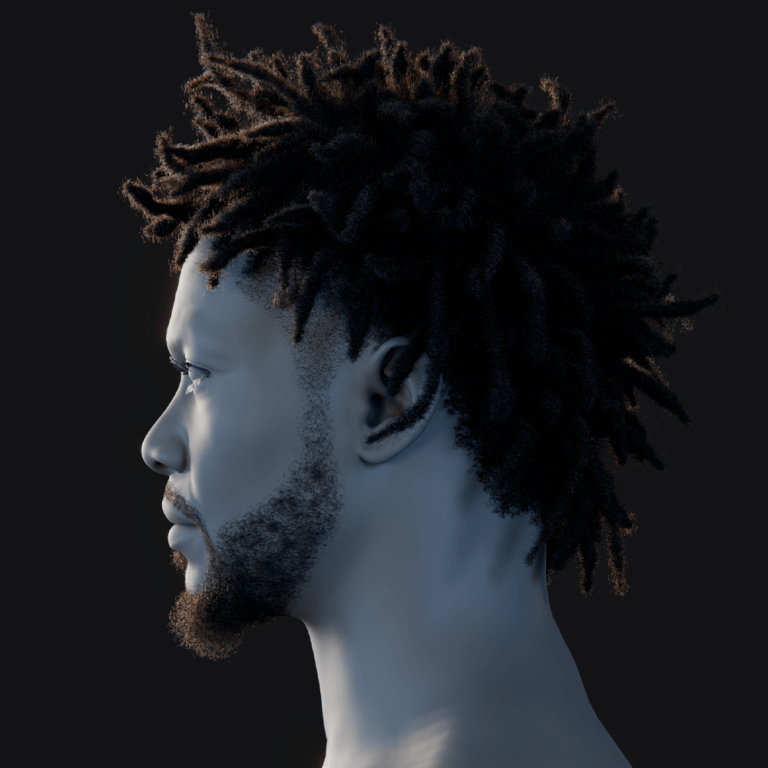

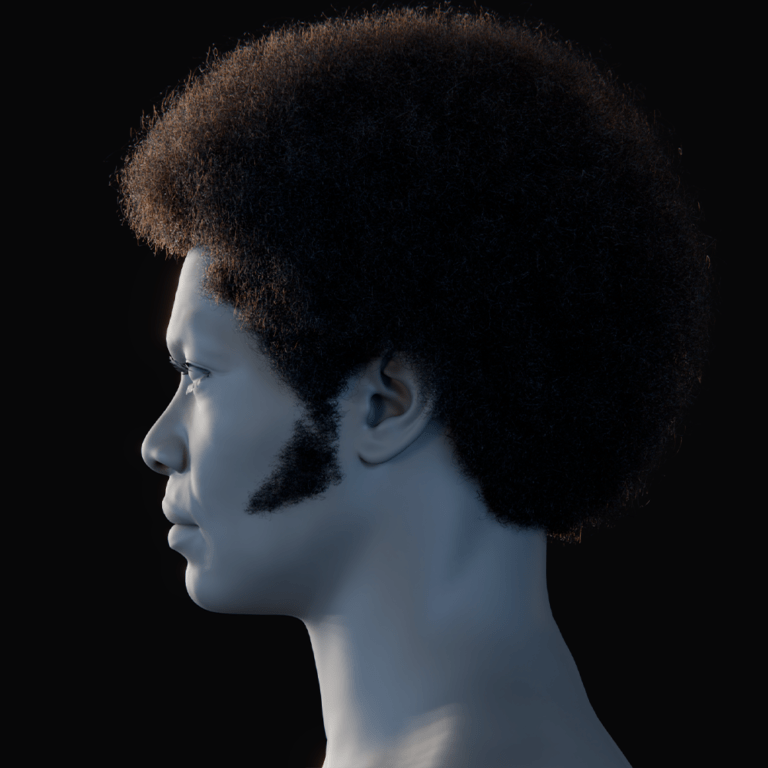

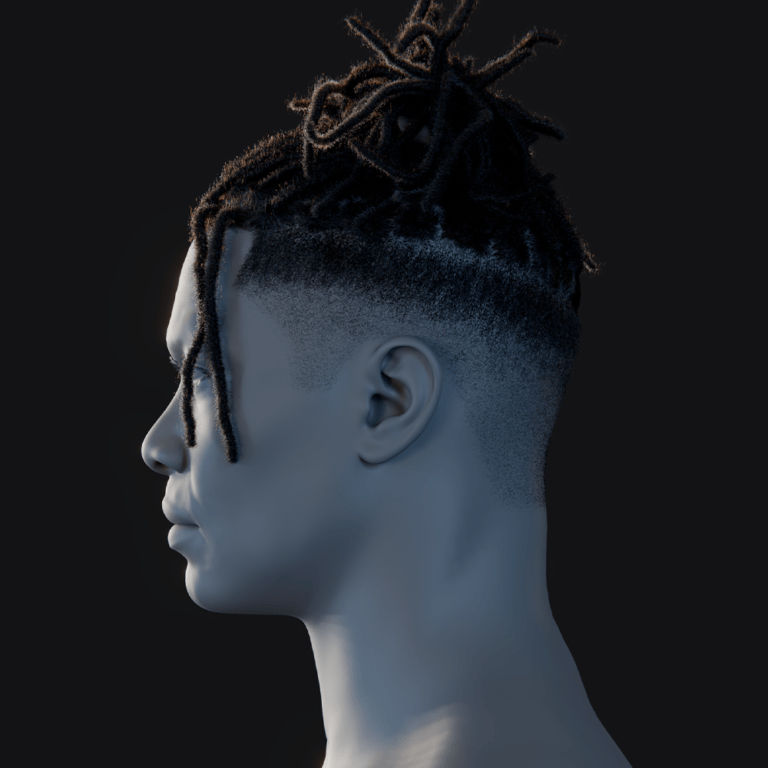

- The Ultimate Guide to the Most Popular Black Hairstyle Options