NVIDIA Omniverse Audio2Face and MetaHumans enable realistic, audio-driven facial animation for digital characters in Unreal Engine 5. This guide covers Audio2Face functionality, MetaHuman integration, and best practices for high-quality, real-time lip-sync and expressions in UE5. It details technical setup, file formats, troubleshooting, and advanced tips such as using PixelHair for realism, and outlines the Audio2Face-to-MetaHuman workflow for efficient dialogue-driven animation and virtual production.

What is NVIDIA Omniverse Audio2Face and how does it work?

NVIDIA Omniverse Audio2Face is an AI tool that generates facial animation, including lip-sync and expressions, from audio. A deep neural network trained on speech and 3D facial motion data maps audio features (phonemes, intonation) to realistic movements in real time and adjusts expressions for emotions such as happiness or anger. It runs on Omniverse, accepts WAV and MP3 input, and offers either real-time streaming or offline export; its output is either blendshape weights or per-vertex motion. It ships with a default 3D head model and supports retargeting to custom characters. Because it is fast, user-friendly, language-agnostic, and customizable, it makes high-quality lip-sync accessible to non-experts.
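To make the idea of per-frame output concrete, here is a minimal sketch of the timing bookkeeping involved: which slice of the audio each animation frame corresponds to. It does not model Audio2Face's neural network, and the 48 kHz sample rate and 30 fps frame rate are example values, not Audio2Face defaults.

```python
import math


def frame_windows(num_samples, sample_rate, fps):
    """Return (frame_index, start_sample, end_sample) for each animation frame.

    Each frame covers the audio from its own timestamp up to the next frame's.
    Bookkeeping only: this does not reproduce how Audio2Face analyses audio.
    """
    if sample_rate <= 0 or fps <= 0:
        raise ValueError("sample_rate and fps must be positive")
    duration_s = num_samples / sample_rate
    num_frames = math.ceil(duration_s * fps)
    windows = []
    for i in range(num_frames):
        start = round(i * sample_rate / fps)
        end = min(round((i + 1) * sample_rate / fps), num_samples)
        windows.append((i, start, end))
    return windows
```

For a 2-second clip at 48 kHz and 30 fps, `frame_windows(96_000, 48_000, 30)` yields 60 windows of 1,600 samples each; conceptually, each window ends up as one row of blendshape weights.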

What are the benefits of using NVIDIA Audio2Face over traditional lip-sync methods?

Audio2Face enhances lip-sync and facial animation over traditional methods with:

- Speed and Automation: Quickly generates animations from audio, unlike slow manual or motion capture methods.

- Ease of Use: Produces realistic results without specialist skills, unlike traditional tools that require viseme mapping or advanced animation expertise.

- Real-Time Capabilities: Supports live animation for interactive use, unlike offline or latency-heavy traditional approaches.

- Consistency and Iteration: Offers uniform results and easy dialogue updates, avoiding slow re-animation.

- Cost-Effectiveness: Free for individuals, runs on consumer GPUs, cheaper than hiring animators or actors.

- Integration: Outputs standard formats, works with tools like Unreal Engine, unlike less compatible older methods.

It offers efficient, accessible real-time lip-sync that surpasses traditional methods in speed and versatility. The AI may miss some of the nuances an expert animator would add, but its output can be refined afterwards for better results.

Table: Audio2Face vs. Traditional Lip-Sync Methods

| Feature | NVIDIA Audio2Face | Traditional Methods |
|---|---|---|
| Setup & Ease of Use | High – Minimal setup, auto lip-sync | Low/Medium – Manual or specialized capture |
| Speed | Real-time or seconds per clip | Slow – Hours of work or mocap processing |
| Consistency | Consistent AI-driven results | Varies – Depends on artist or performance |
| Customization | High – Adjustable emotion, character remap | Medium – Manual tweaks are time-consuming |
| Hardware Needed | PC with NVIDIA RTX GPU (no cameras) | Often mocap rigs or studios |
| Real-Time Use | Yes – Supports live streaming | Limited – Usually offline, complex for live |
| Multilingual Support | Yes – Language-agnostic (best on Latin-script languages) | Limited – Language-specific viseme libraries |
| Cost | Free for individuals; low hardware cost | High – Animator wages or mocap equipment |

Can I use Audio2Face with Metahuman characters in Unreal Engine 5?

Yes. NVIDIA Audio2Face generates ARKit-compatible blendshape data (based on ARKit’s 52 standard shapes), and a mapping asset retargets it onto the MetaHuman face’s larger set of curves, giving MetaHumans in Unreal Engine 5 full lip-sync and expressions. The Audio2Face Unreal Engine plugin, part of NVIDIA’s Avatar Cloud Engine (ACE), streams real-time animation into UE 5.2 and 5.3 with minimal setup, with 5.4 support expected. The Omniverse Audio2Face app (version 2023.1/2023.2 as of late 2024) also includes a Live Link plugin for UE5 that supports both offline and real-time workflows, and MetaHumans work across UE 5.x. NVIDIA recommends Audio2Face 2023.1.1 or later, an RTX 3070 or better GPU, and Windows 10/11. Together they bring AI-driven lip-sync to MetaHumans in games, cinematics, or virtual assistants, as shown in Epic’s NVIDIA ACE demo.

How realistic is the facial performance using Audio2Face on Metahuman?

Audio2Face creates realistic facial animation for MetaHumans, with accurate lip-sync and natural expressions that approach motion-captured performances. Its neural network coordinates jaw and lip movement with secondary facial action in the cheeks and eyebrows. Audio2Emotion, launched in 2022, automatically matches expressions to the emotional tone of the voice (e.g., happiness or concern). The results hold up well in real-time demos and Unreal Engine cutscenes, but unique mannerisms or monotone audio may need an animator’s tweaks. Combined with separate eye animation, it can rival labor-intensive methods and save considerable time on game and film dialogue as AI and MetaHuman technology advance.

How do I set up the Audio2Face to Metahuman pipeline?

Setting up the pipeline to integrate Audio2Face with Unreal Engine’s MetaHuman requires installing tools and configuring a workflow. Here’s a concise summary:

- Install NVIDIA Omniverse Audio2Face: Download via Omniverse Launcher (free for individuals) on Windows 10/11 with an NVIDIA RTX GPU. Launch to verify functionality.

- Prepare Unreal Engine and MetaHuman: Use Unreal Engine 5 (5.2 or 5.3 recommended). Add a MetaHuman from MetaHuman Creator via Quixel Bridge, with Face blueprint and Control Rig auto-configured. Enable Live Link and USD Importer plugins.

- Get the Audio2Face Unreal Plugin (Optional): For real-time streaming, copy the A2F Live Link plugin from Audio2Face’s directory to Unreal’s Plugins folder and enable it. Not required for offline workflows.

- MetaHuman Setup in UE5: Open the MetaHuman’s Face Anim Blueprint, which will receive either Live Link data or an imported animation. A default mapping asset translates A2F’s ARKit blendshapes into the MetaHuman’s facial curves, which drive the face rig and its materials.

- Test the Connection: For live streaming, add an Audio2Face source in UE5’s Live Link panel and confirm it appears. For an offline workflow, continue to the export steps. Once this works, A2F and the MetaHuman are ready.

The process involves installing Audio2Face, enabling UE5 plugins, and adding a MetaHuman, leveraging NVIDIA tools and MetaHuman rigs for compatibility without complex customization. Animation transfer follows setup.
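The plugin steps can also be scripted. The sketch below, plain Python meant to run while the Unreal Editor is closed, copies a plugin folder into a project’s `Plugins` directory and marks plugins as enabled in the `.uproject` file (a JSON document with a `Plugins` list of `Name`/`Enabled` entries). The names `LiveLink` and `USDImporter` are assumed to be the internal plugin names in UE 5.x; check them under Edit > Plugins. The Audio2Face plugin’s folder and name vary by release, so pass them in yourself rather than relying on any name here.

```python
import json
import shutil
from pathlib import Path

# Assumed internal names of the Live Link and USD Importer plugins in UE 5.x.
REQUIRED_PLUGINS = ("LiveLink", "USDImporter")


def install_plugin(plugin_src_dir, project_dir):
    """Copy a plugin folder (e.g. the one shipped with Audio2Face) into <project>/Plugins."""
    src = Path(plugin_src_dir)
    dest = Path(project_dir) / "Plugins" / src.name
    shutil.copytree(src, dest, dirs_exist_ok=True)  # requires Python 3.8+
    return dest


def enable_plugins(uproject_path, plugin_names=REQUIRED_PLUGINS):
    """Set "Enabled": true for each named plugin in a .uproject; return the names changed."""
    path = Path(uproject_path)
    project = json.loads(path.read_text(encoding="utf-8"))
    plugins = project.setdefault("Plugins", [])
    by_name = {entry.get("Name"): entry for entry in plugins}
    changed = []
    for name in plugin_names:
        entry = by_name.get(name)
        if entry is None:
            plugins.append({"Name": name, "Enabled": True})  # add a missing entry
            changed.append(name)
        elif not entry.get("Enabled", False):
            entry["Enabled"] = True  # flip an existing disabled entry
            changed.append(name)
    if changed:
        path.write_text(json.dumps(project, indent="\t"), encoding="utf-8")
    return changed
```

`enable_plugins` returns the plugins it had to switch on and an empty list when everything is already enabled, so running it twice is harmless.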

What are the steps to export Audio2Face animation data to Unreal Engine?

To export facial animation from Audio2Face for Unreal (offline pipeline), follow these steps:

- Create or Load Face Setup: In Audio2Face, load the default character (“Mark”) or a custom setup compatible with MetaHuman mappings. Use Character Transfer for custom head meshes and blendshapes if needed.

- Load Audio Track: Import a WAV or MP3 file via the Audio Player widget and connect it to the AI solve to animate the face based on audio.

- Adjust Settings (Optional): Fine-tune expression intensity, emotion weight, or movement amplitude for accurate lip-sync on the default head.

- Export Animation Data: Export as a USD file with blendshape animation via the A2F Data Conversion section, selecting BlendShape Animation for MetaHuman-compatible weights, producing files like _usdSkel.usd. Alternatively, export a JSON file of blendshape weights over time for custom applications or scripting, though Unreal requires a custom importer for JSON, making USD more straightforward.

- Verify Export: Confirm the exported USD files include animation (e.g., ARKit blendshape channels like “JawOpen”, “MouthSmile_L”) by checking in an Omniverse viewer or ensuring no export errors.

The exported Audio2Face animation data is now ready for use in Unreal to apply to a MetaHuman. (For live link plugin users, these export steps are unnecessary as data streams directly.)
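If you take the JSON route, a small parser can turn the export into per-curve keyframes for a custom importer or script. The field names used here (`exportFps`, `facsNames`, `weightMat`) are an assumption about the export layout, so open one of your own files and adjust them if they differ. Times are in seconds.

```python
import json


def load_a2f_blendshape_json(text):
    """Parse a blendshape-weight JSON export into {curve_name: [(time_s, weight), ...]}.

    ASSUMED layout (verify against your own export): "exportFps" is the frame rate,
    "facsNames" lists one name per blendshape, and "weightMat" holds one row per
    frame with one weight per name.
    """
    data = json.loads(text)
    fps = float(data["exportFps"])
    names = data["facsNames"]
    curves = {name: [] for name in names}
    for frame_index, row in enumerate(data["weightMat"]):
        if len(row) != len(names):
            raise ValueError(
                f"frame {frame_index}: expected {len(names)} weights, got {len(row)}"
            )
        t = frame_index / fps
        for name, weight in zip(names, row):
            curves[name].append((t, float(weight)))
    return fps, curves
```

The resulting dictionary is easy to feed into whatever scripting layer you use to create curves in Unreal.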

How do I import Omniverse facial animation into a Metahuman Control Rig?

Method 1: USD Import (Offline):

To import Audio2Face animation data (a USD file with blendshape keyframes) into Unreal Engine and apply it to a MetaHuman’s face using the existing Control Rig/animation blueprint:

- Enable USD Import in UE5: Use the Omniverse Connector or USD Import plugin (ensure enabled). The Omniverse Connector aids USD import and blendshape mapping; the Audio2Face UE plugin may include needed assets.

- Import the USD Animation: In UE5’s Content Browser, import the .usd file from Audio2Face. Enable blendshape or skeletal animation options to create an Animation Sequence asset with morph target curves for the MetaHuman face.

- Apply to MetaHuman: Assign the animation asset to the MetaHuman’s face skeleton or Face Anim Blueprint. In Level Sequencer, assign it to the facial track and sync it with the audio. If the mapping is correct, the face should animate.

- Verify and Adjust: Play back to confirm movement. If nothing moves, check the morph target name mapping (NVIDIA provides ARKit-to-MetaHuman mapping assets, which the plugin may apply automatically), and adjust the Face AnimBP to use the asset if needed. The goal is lips and face moving correctly in time with the dialogue.
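When a face stays still or moves oddly, the cause is often curve names that do not match. The sketch below renames source curves through a lookup table and reports two kinds of misses: source curves with no mapping entry, and mapped names the target face does not expose. The mapping and target names are hypothetical placeholders; in practice they would come from NVIDIA’s mapping asset and the MetaHuman face’s curve list.

```python
def remap_curves(curves, name_map, target_curves):
    """Rename source curves through name_map and report names that will not land.

    curves:        {source_curve_name: keyframes}
    name_map:      {source_curve_name: target_curve_name}
    target_curves: set of curve names the target face actually exposes
    Returns (remapped, unmapped_sources, missing_targets).
    """
    remapped = {}
    unmapped = []
    missing = []
    for src, keys in curves.items():
        dst = name_map.get(src)
        if dst is None:
            unmapped.append(src)  # no entry in the mapping table
        elif dst not in target_curves:
            missing.append(dst)  # mapped, but the face has no such curve
        else:
            remapped[dst] = keys
    return remapped, sorted(unmapped), sorted(missing)
```

For example, with the hypothetical `name_map = {"JawOpen": "mh_jaw_open", "MouthSmileLeft": "mh_smile_l"}` and a target set containing only `"mh_jaw_open"`, a `TongueOut` curve is reported as unmapped and `mh_smile_l` as missing on the target.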

Method 2: Live Link (Real-time streaming):

- Live Link Setup: In Audio2Face, enable streaming via “Live Link” or “Stream to Unreal.” In Unreal, open Live Link, add the Audio2Face source, and start data transfer.

- MetaHuman Animation Blueprint: Ensure the MetaHuman Face AnimBP supports Live Link data, using the LiveLinkPose node to drive the face with the plugin’s modified pose asset. The face then animates in real time as Audio2Face plays the audio.

- Recording the Animation: Use Sequencer or Take Recorder to capture the Live Link stream as an animation asset while playing audio in Audio2Face, saving the performance for later use without Audio2Face.

Live Link skips importing, directly animating the MetaHuman’s Control Rig or AnimBP, while offline import creates a static asset. Both methods use Audio2Face output to animate the MetaHuman face skeleton/morphs, leveraging UE5’s ARKit-compatible system and NVIDIA’s mapping for accurate lip-sync.

Can I drive Metahuman facial expressions using audio with Audio2Face?

Audio2Face generates lip-sync and facial expressions, including micro-expressions, for MetaHumans from audio input, syncing jaw, mouth, and tongue to speech while using tone and pitch to reflect emotions like happiness or sadness. Its Audio2Emotion feature adjusts expressions—smiling for joy or frowning for anger—by producing blendshape values for controls like brows and eyelids if properly mapped. Users can manually adjust expressions via sliders for “expression blend,” setting a base emotion that complements speech without disrupting lip-sync. It captures audio-implied movements but misses contextual gestures like winking or eye-rolling. For added complexity, it integrates with tools like the MetaHuman Control Rig in UE5, combining lip-sync and basic emotions with detailed animations for more realistic MetaHuman performances.
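The idea of a base emotion that complements speech without disrupting lip-sync can be illustrated with simple additive layering plus a region mask. This is a sketch of that general technique under stated assumptions, not Audio2Face’s internal method: it assumes ARKit-style curve names, treats every curve whose name starts with `Jaw`, `Mouth`, or `Tongue` as owned by the lip-sync solve, and adds a scaled emotion pose to everything else.

```python
# Assumed ARKit-style prefixes for curves left entirely to the lip-sync solve.
MOUTH_PREFIXES = ("Jaw", "Mouth", "Tongue")


def blend_base_emotion(lipsync_frame, emotion_pose, strength=0.5):
    """Layer a static emotion pose onto one frame of lip-sync weights.

    Mouth/jaw/tongue curves keep their lip-sync values so speech stays intact;
    every other curve gets strength * emotion weight added, clamped to [0, 1].
    """
    out = dict(lipsync_frame)
    for name, weight in emotion_pose.items():
        if name.startswith(MOUTH_PREFIXES):
            continue  # never override speech shapes
        out[name] = min(1.0, max(0.0, out.get(name, 0.0) + strength * weight))
    return out
```

Masking out every mouth curve keeps lip shapes intact but also drops mouth-corner emotion such as smiles; a finer mask could let those curves through at reduced strength.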

Can Audio2Face handle multilingual voice inputs for Metahuman characters?

Audio2Face generates facial motions from audio waveforms, not words, supporting languages like English, French, German, and Japanese, with best results on Latin-based ones due to training data. It handles mid-sentence language switches and provides believable lip sync for non-Latin languages like Chinese and Arabic, though with slightly less accuracy.

NVIDIA continues to expand language support. On a MetaHuman, the output is the same set of facial animation curves regardless of language, so multilingual input works well even though some phonemes have no exact ARKit blendshape equivalent. Common visemes such as “O” or “M/B/P” are approximated well, while unusual sounds (e.g., in Mandarin, or rolled R’s) may need minor manual tweaks for precision. Results follow the speech rhythm and mouth openness and are best on languages similar to the training data (likely English). The same MetaHuman can be animated in several languages simply by feeding it different audio, with no separate rigs.

Is real-time streaming possible between Audio2Face and Metahuman in UE5?

The Audio2Face Live Link plugin, an NVIDIA Omniverse connector, streams real-time facial animation from Audio2Face to a MetaHuman in Unreal Engine 5. Audio2Face processes audio (from a microphone, stream, or file) and sends per-frame blendshape weights via the plugin to Unreal Engine 5, where the MetaHuman’s animation blueprint syncs facial movements to the audio for live talking in demos, puppeteering, or interactive games and VR.

With minimal setup, it keeps animation and audio in sync in Unreal, and Audio2Face’s GPU optimization keeps latency low (tens of milliseconds). You need the plugin version that matches your UE5 release. Both apps typically run on one powerful PC, though Audio2Face can also stream from another machine or server at the cost of some added latency. This supports virtual live events, real-time cinematics, or voice-driven MetaHuman NPCs in games, and offline export remains available when real-time isn’t needed.
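To put “tens of milliseconds” in frame terms: at 30 fps one frame lasts about 33 ms, so that latency is roughly one to two frames. If you play the audio inside Unreal and measure how late the streamed animation arrives, delaying the audio by the same amount keeps lips and sound aligned. A minimal arithmetic sketch, where the measured latency is an input you supply:

```python
def sync_offsets(anim_latency_ms, sample_rate=48_000, fps=30):
    """Audio delay needed to match animation that arrives anim_latency_ms late.

    Returns (delay_in_samples, latency_in_frames). Measure the latency on your own
    setup; it depends on hardware, network, and plugin settings.
    """
    delay_samples = round(anim_latency_ms * sample_rate / 1000)
    latency_frames = anim_latency_ms * fps / 1000
    return delay_samples, latency_frames
```

For example, `sync_offsets(50)` returns `(2400, 1.5)`: delay the audio by 2,400 samples at 48 kHz, which is one and a half frames at 30 fps.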

Can I combine Audio2Face with motion capture for full-body Metahuman animation?

You can integrate Audio2Face for facial animation with traditional mocap for body animation to create a full-body MetaHuman performance in Unreal Engine. Audio2Face handles lip-sync and facial expressions from audio, while mocap controls body movements, applied simultaneously.

How to combine:

- Facial (Audio2Face): Set up the MetaHuman for Audio2Face facial animation via live link or imported assets.

- Body (Mocap): Use tools like OptiTrack, Vicon, Xsens, or Rokoko suits for body capture, or Unreal’s Live Link Face for head rotation, retargeting data to the Epic Skeleton live or as recorded assets.

- Combine in Unreal: Use separate Anim Blueprints—one for Audio2Face facial animation, another for mocap body animation—merged by Unreal for a cohesive render.

To sync separately recorded animations, align body (mocap) and facial (Audio2Face) tracks in Sequencer to start together, ensuring lip and gesture sync. For live capture, use two live link sources—Audio2Face for face blendshapes, mocap for body joints—applied real-time with compatible Anim Blueprints. For example, mocap and VR trackers capture body and head, while Audio2Face processes audio for facial animation, syncing gestures and speech without a face camera. This hybrid method works when performers differ, blending neck and face animations smoothly in UE5 for accurate, lip-synced full-body MetaHuman performances in virtual production.
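When body and face takes were recorded against a shared timecode, the Sequencer offset between them is a subtraction once both start times are converted to frames. A minimal sketch for non-drop-frame SMPTE timecode at an integer frame rate; drop-frame 29.97 timecode needs extra handling and is not covered.

```python
def timecode_to_frames(tc, fps):
    """Convert non-drop-frame SMPTE timecode "HH:MM:SS:FF" to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def track_offset(body_start_tc, face_start_tc, fps=30):
    """Frames by which the face track starts after the body track (negative = before)."""
    return timecode_to_frames(face_start_tc, fps) - timecode_to_frames(body_start_tc, fps)
```

For example, `track_offset("01:00:00:00", "01:00:02:15", fps=30)` returns 75, so the face track should start 75 frames after the body track.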

How do I troubleshoot import issues from Omniverse to Unreal Engine?

When transferring Audio2Face animation from Omniverse to Unreal, common issues and solutions are:

- Issue: MetaHuman face isn’t moving after import (blank animation).

Solution: Export blendshape weights, not just a geometry cache; target the MetaHuman face skeleton in UE via the Omniverse connector or USD Stage; ensure the Unreal connector/plugin matches your engine version.

- Issue: Wrong or jumbled facial movements.

Solution: Use the ARKit-to-MetaHuman mapping asset (e.g., mh_arkit_mapping_pose_A2F) from NVIDIA’s Audio2Face UE plugin to align the 52 ARKit blendshapes to the 72 MetaHuman blendshapes; map curves manually in UE if needed.

- Issue: Live Link connected but no movement.

Solution: Confirm the Audio2Face source is active in UE’s Live Link panel; ensure the MetaHuman Anim Blueprint uses Live Link (add a LiveLink pose node if needed); check for firewall/network issues if A2F is on another machine.

- Issue: Exported JSON or other format not directly usable.

Solution: Re-export as USD or use Python scripts to drive UE morph targets; prefer USD or Live Link.

- Issue: Performance problems or frame drops in live mode.

Solution: Run Audio2Face headless or on a separate PC; record the animation so it can be baked; avoid heavy Omniverse scenes during streaming.

- Issue: Face and body out of sync.

Solution: Align start times in Sequencer; adjust time dilation; use UE as the timeline master with timecode for sync.

Check Omniverse Audio2Face logs for export errors and Unreal’s Output Log for import warnings like “curve not found.” Search NVIDIA and Epic forums for user fixes, especially for MetaHuman version issues. Update plugins/connectors for compatibility.
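For frame drops in live mode, it helps to confirm them with numbers before changing your setup. Assuming you can log the arrival time of each streamed frame on the receiving side (how you capture those timestamps depends on your tooling and is not shown here), the sketch below flags gaps noticeably longer than one frame interval.

```python
def find_gaps(timestamps, fps, tolerance=1.5):
    """Return (index, gap_seconds) wherever consecutive frames are too far apart.

    timestamps: arrival times in seconds of streamed frames, in order.
    A gap larger than tolerance * (1 / fps) suggests dropped or late frames.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    expected = 1.0 / fps
    gaps = []
    for i in range(1, len(timestamps)):
        dt = timestamps[i] - timestamps[i - 1]
        if dt > tolerance * expected:
            gaps.append((i, dt))
    return gaps
```

A handful of isolated gaps usually points to momentary load spikes, while regular gaps suggest the sending side cannot keep up with the target frame rate.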

How do I bake and refine facial animation from Audio2Face for Metahuman?

After transferring facial animation from Audio2Face to a MetaHuman via live streaming or file import, you can bake and refine it as follows:

Baking the Animation:

- Live Link: In Unreal Engine, use Take Recorder or Sequencer to capture real-time animation. Add the MetaHuman to Sequencer, connect Audio2Face via Live Link, and record to bake facial animation curves into an Animation Sequence frame-by-frame.

- USD Import: USD animation assets are already baked with keyframes, needing no further baking.

Refining the Animation:

- Control Rig and Sequencer: Assign the MetaHuman Face Control Rig to the Face track in the Control Rig editor or Sequencer. Adjust controls (e.g., brows, eyes, mouth) by keyframing, bake to the rig, and tweak specific frames (e.g., jaw or blinks).

- Additive Layers: Add subtle tweaks (e.g., head nods, expressions) in Sequencer via an additive layer, blending with the Audio2Face base animation.

- Curves Editing: Edit morph target curves in the animation editor’s Curves tab for precise adjustments (e.g., smoothing jitters) while keeping audio sync.

- External Tools: Export to Maya via FBX or use Blender with a MetaHuman rig for refinement, though Control Rig in Unreal often suffices.

- MetaHuman Animator/Faceware: Blend with video-driven MetaHuman Animator or iPhone ARKit/Faceware data for nuanced expressions, potentially overriding parts of the original animation.

- Performance Baking: Bake the animation into a sequence for smooth game playback, avoiding live Audio2Face processing (unless using ACE runtime).

Refinement Tips: Audio2Face output is strong but may need tweaks like adding blinks or enhancing expressions (e.g., smiles). Use UE5’s Control Rig and Sequencer for fine-tuning, then bake the refined animation into a final asset.
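For the jitter smoothing mentioned under Curves Editing, a centered moving average is one simple option: apply it to an exported curve before re-importing it, or use it as a reference when editing keys by hand. This is a generic filter, not a built-in Unreal feature, and the window radius is a tuning choice.

```python
def smooth_curve(values, radius=1):
    """Centered moving average over +/- radius frames; the window shrinks at the ends.

    Removes frame-to-frame jitter but also softens sharp lip closures (M/B/P),
    so keep the radius small or leave lip-closure curves unsmoothed.
    """
    if radius < 0:
        raise ValueError("radius must be >= 0")
    n = len(values)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out
```

The output has the same number of frames as the input, so audio sync is preserved.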

Can I use Audio2Face to create dialogue-driven cutscenes with Metahuman?

Audio2Face simplifies facial animation for MetaHumans in Unreal Engine for dialogue-driven cutscenes. Here’s the workflow:

- Prepare Dialogue Audio: Record voice actors or use text-to-speech to create finalized audio tracks for each character.

- Generate Facial Animation: Use Audio2Face to process audio tracks into facial animations for MetaHumans, handling overlapping dialogue separately.

- Apply to MetaHumans in UE: Import or live link animations to MetaHumans in Unreal Engine, syncing faces to dialogue.

- Set up the Cutscene in Sequencer: Place MetaHumans in a level, sequence facial animations and audio in Unreal’s Sequencer for accurate lip-sync.

- Add Body Animation & Cinematics: Add keyframe, mocap, or preset body animations, plus camera cuts and lighting, via Sequencer.

This method ensures tight lip-sync without manual facial animation, ideal for dialogue-heavy projects like RPGs or films. For localization, reprocess new audio tracks easily. It also enhances NPC dialogue in-game, integrating AI animation with MetaHuman fidelity for efficient, high-quality virtual production.
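For dialogue-heavy scenes, laying out clips by hand gets tedious. Here is a minimal sketch that places sequential lines back to back on a frame timeline, using each audio file’s duration and a fixed pause between lines. Character names, durations, the 30 fps rate, and the gap are example inputs; overlapping lines, which the workflow above processes separately, are not modeled.

```python
import math


def layout_dialogue(lines, fps=30, gap_seconds=0.25):
    """Place dialogue clips back to back on a frame timeline.

    lines: list of (character, audio_duration_seconds) in playback order.
    Returns (character, start_frame, end_frame) tuples; end_frame is exclusive.
    """
    timeline = []
    cursor = 0
    gap_frames = round(gap_seconds * fps)
    for character, duration in lines:
        length = math.ceil(duration * fps)  # round up so no audio is cut off
        timeline.append((character, cursor, cursor + length))
        cursor += length + gap_frames
    return timeline
```

For example, `layout_dialogue([("Ava", 2.0), ("Ben", 1.5)], fps=30, gap_seconds=0.5)` returns `[("Ava", 0, 60), ("Ben", 75, 120)]`; the same start frames apply to each line’s audio track and facial animation track.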

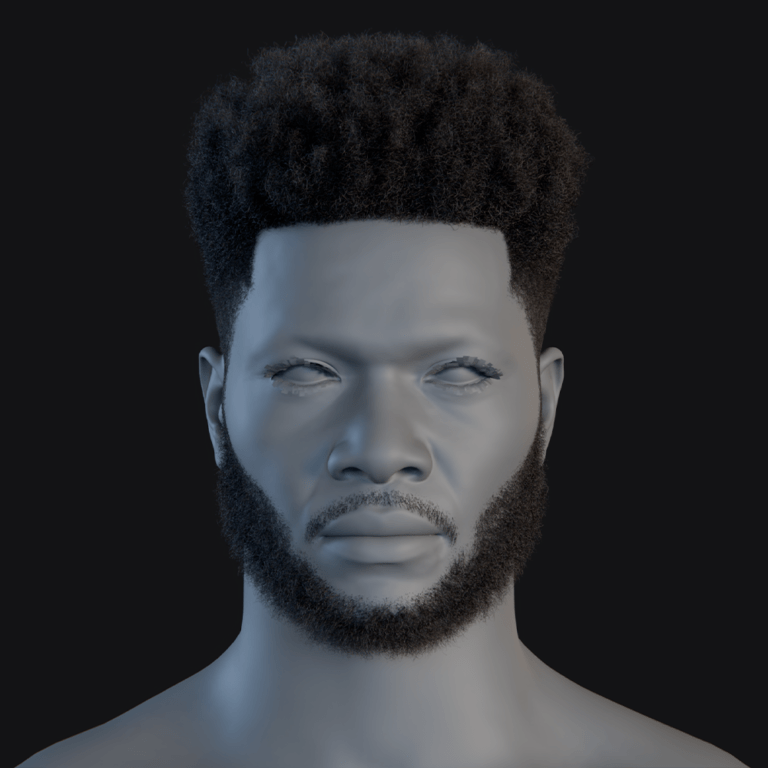

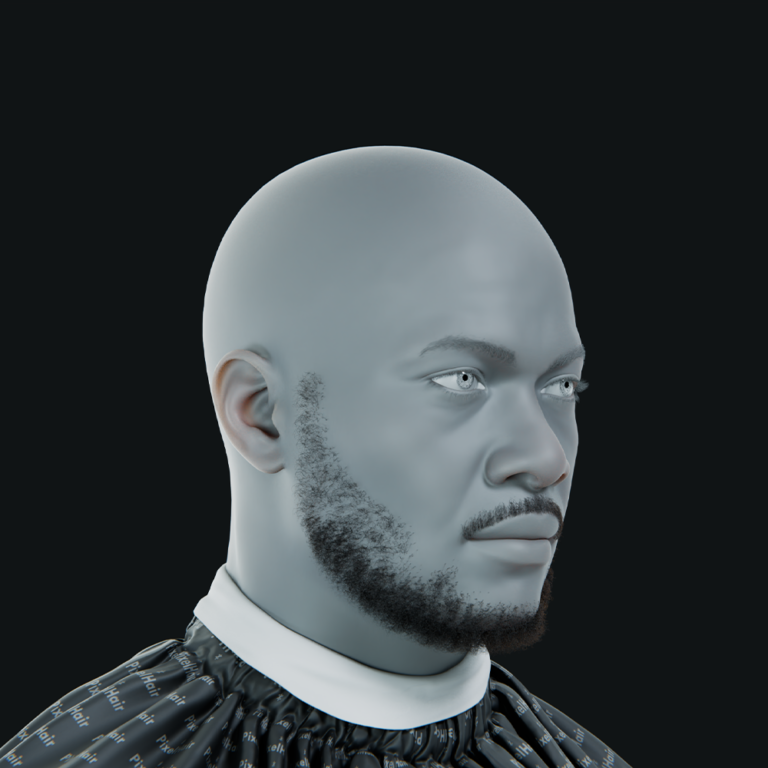

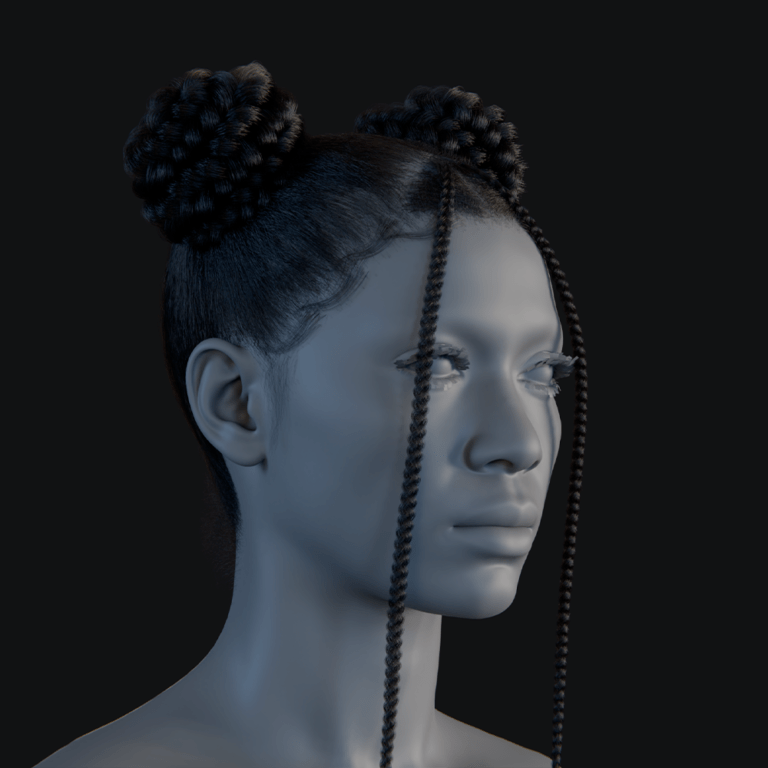

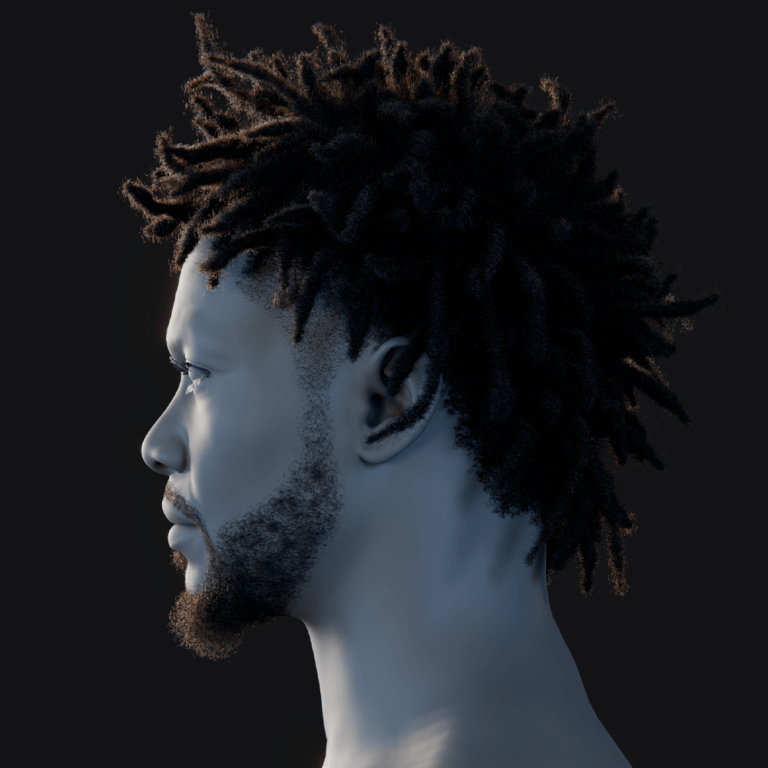

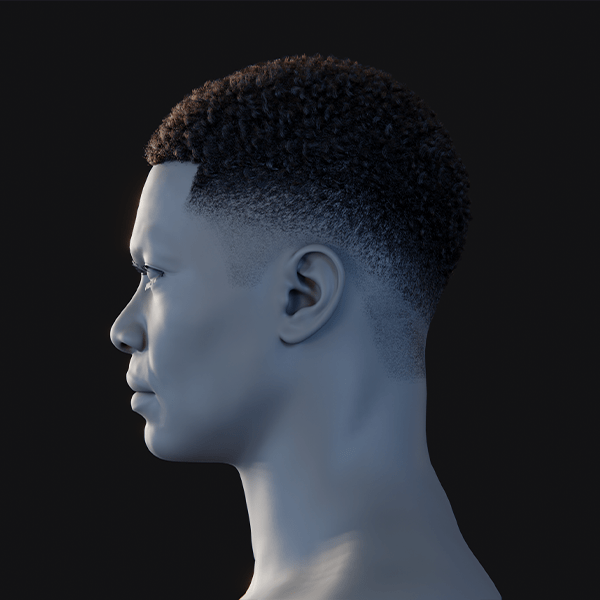

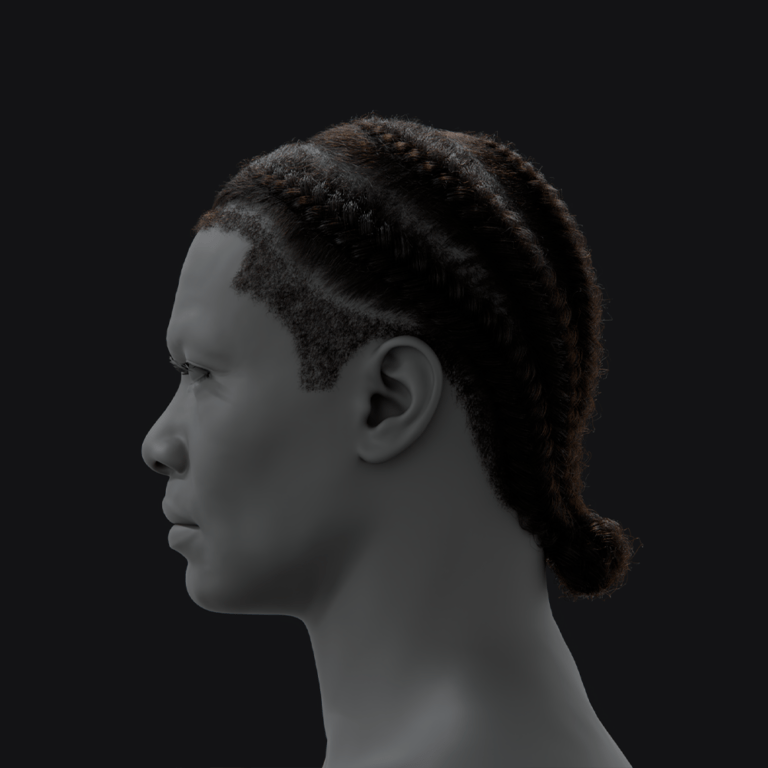

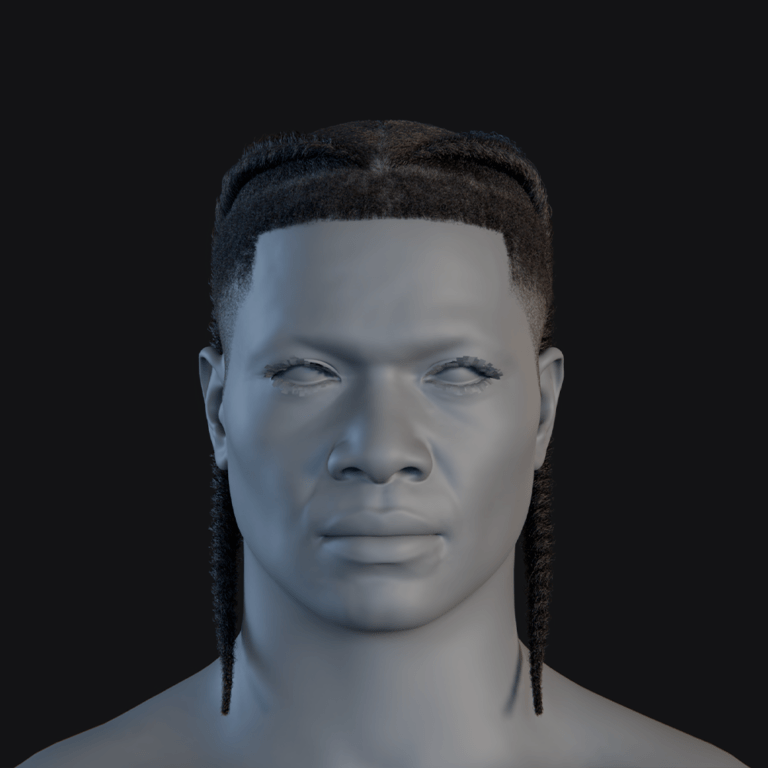

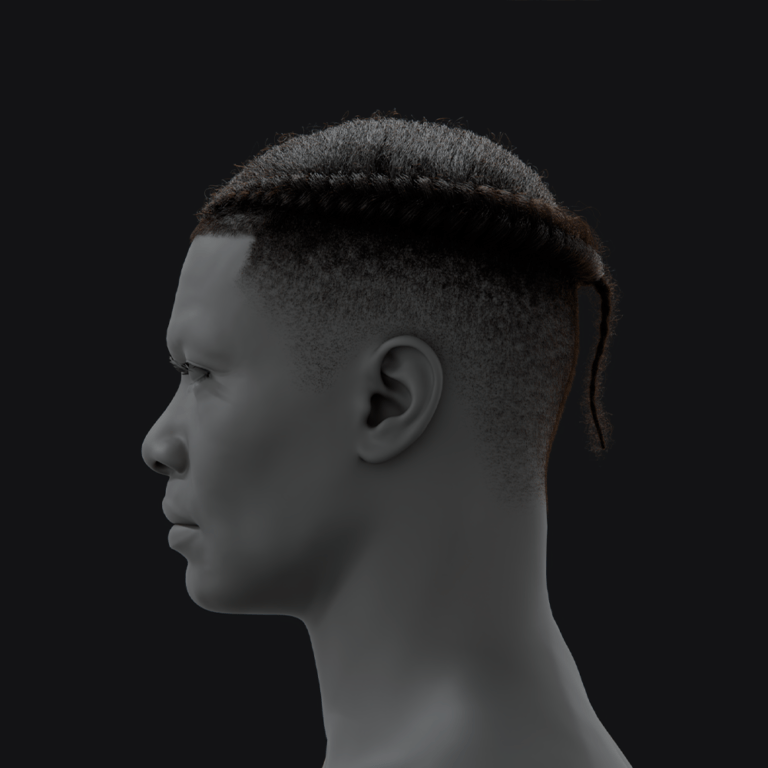

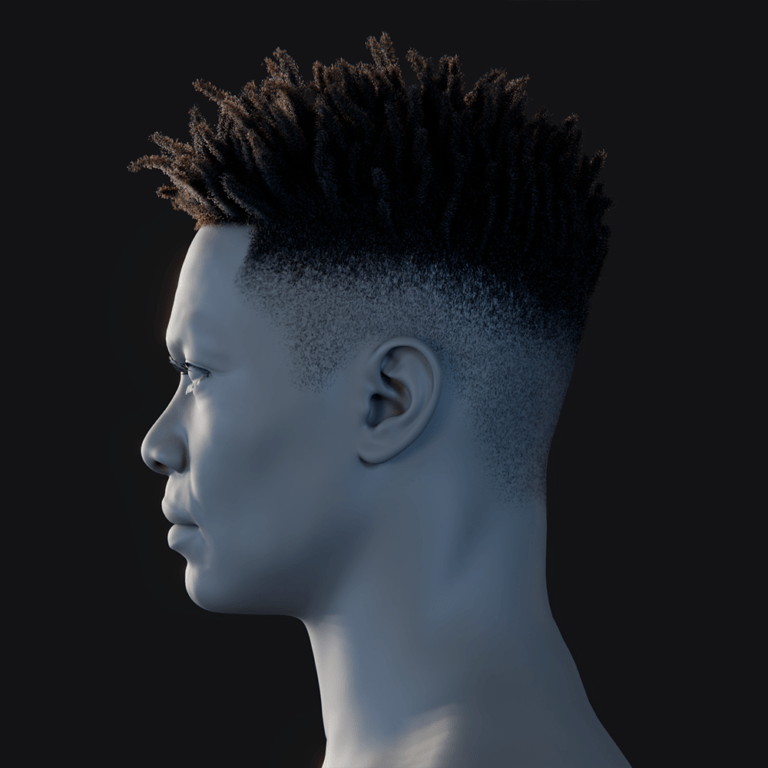

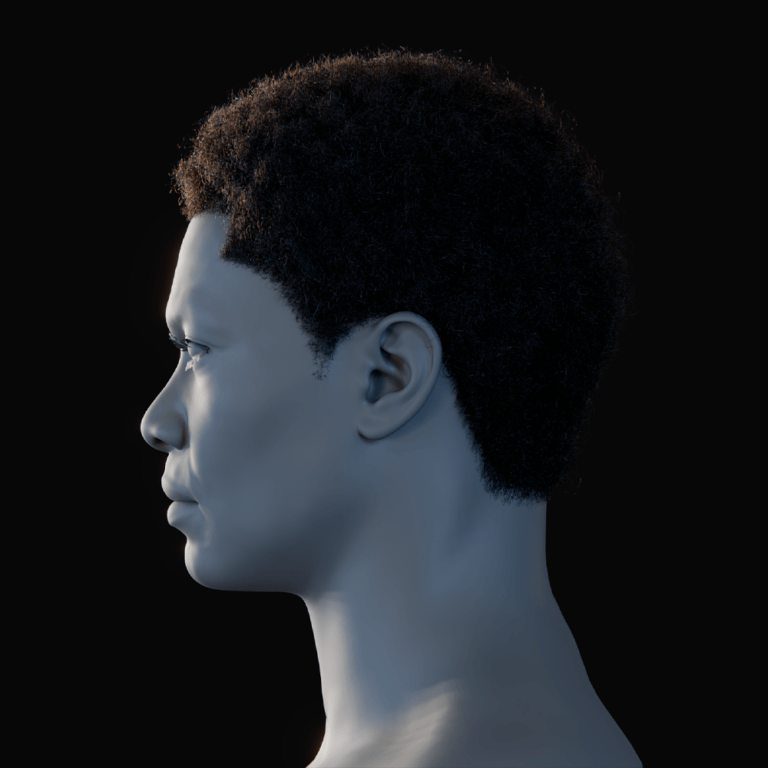

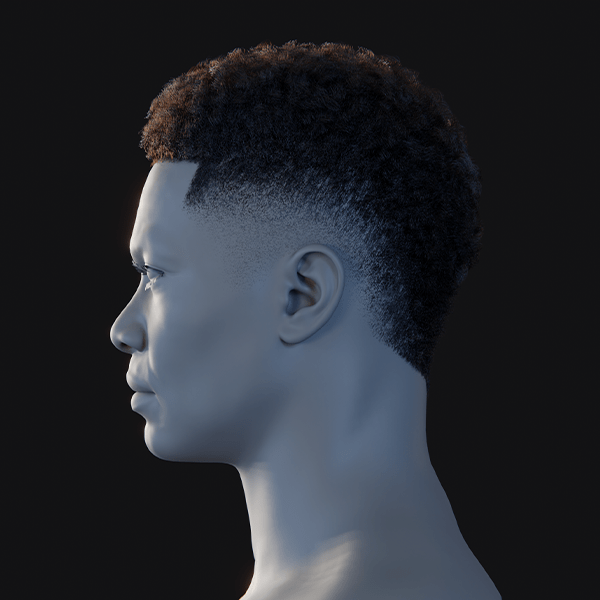

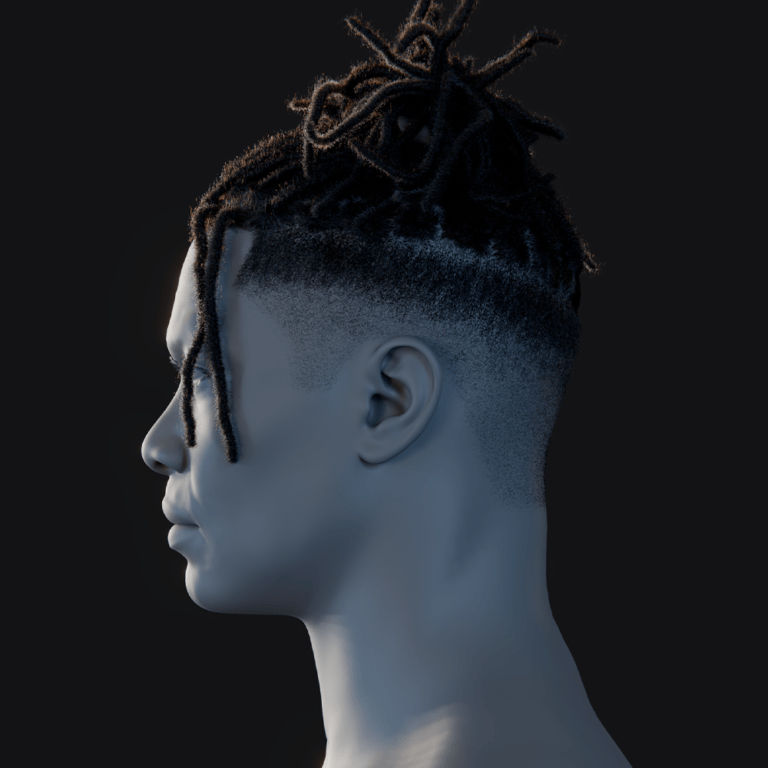

How can PixelHair be used to enhance the realism of Metahuman characters animated through NVIDIA Audio2Face?

PixelHair is a collection of high-quality 3D hair assets for MetaHumans and other characters, enhancing realism in dialogue or close-up shots by improving hair appearance alongside Audio2Face’s facial animation.

Key benefits:

- Better Hair Fidelity: Realistic strand geometry adds believability with light-catching strands, volume, and silhouette.

- Physical Simulation: Hair moves naturally in UE5 with head tilts or gestures using Unreal’s groom system.

- Personalization: Hairstyles match personality or setting for distinctiveness and engagement.

- Close-up Shots: Detailed grooms ensure convincing hair, matching MetaHuman facial and skin fidelity.

- Ease of Use: Pre-fitted assets attach easily to MetaHumans with minimal setup.

Usage steps:

- Obtain a PixelHair hairstyle from Yelzkizi’s site or BlenderMarket.

- Import into Unreal as .uasset files or as groom (Alembic) files.

- Attach to MetaHuman via blueprint or head socket.

- Configure physics in Unreal’s groom system if desired.

- Adjust materials for shine or color.

PixelHair gives MetaHumans animated by Audio2Face a more authentic hair look and movement, enhancing realism and immersion. It is a complementary asset rather than part of Audio2Face, much like dressing an actor to make a performance believable.

Are there tutorials or GitHub resources for setting up the Audio2Face-to-Metahuman workflow?

Numerous tutorials and resources from NVIDIA, Epic, and the community assist with setting up and optimizing the Audio2Face-to-MetaHuman pipeline:

- Official NVIDIA Omniverse Documentation: Detailed Audio2Face section with step-by-step guidance on installing and configuring the Unreal Engine Live Link plugin, ideal for setup beginners (see “Audio2Face to UE Live Link Plugin” in the user manual).

- NVIDIA Developer Forums: Q&A threads like “Audio2Face to MetaHuman workflow struggles” with solutions from NVIDIA engineers (e.g., Ehsan) and users, including scripts for specific issues.

- Epic Developer Community Tutorials: Tutorial “How to Setup NVIDIA ACE – Audio2Face with Metahuman” by Michael Muir, targeting UE5.4 with screenshots, available on Epic’s dev community site or Unreal Engine forums.

- YouTube Video Tutorials:

  - “NVIDIA Omniverse Audio2Face to Unreal Engine 5.2 MetaHuman Tutorial” – demonstrates the process with UE5.2.

  - “How to Export A2F USD to MetaHuman | Unreal Engine 5.3” – covers exporting facial animation from A2F to UE5.3 using the ACE plugin (by NVIDIA expert Simon Yuen).

  - “Audio2Face Live Link MetaHuman using ACE Plugin (UE5.3)” – shows the live link approach step-by-step.

- GitHub Resources:

  - NVIDIA ACE Sample (Kairos): Sample project showing Audio2Face with MetaHuman in Unreal, including plugins and mappings.

  - NeuroSync Player: Open-source project (AnimaVR/NeuroSync_Player) for real-time streaming of Audio2Face outputs to Unreal via Live Link, offering data flow insights.

  - Import Script Gists: Python scripts (e.g., by QualityMinds) for importing USD facial animations into Unreal, useful for automation with UE Python API.

  - SocAIty/py_audio2face: Repo for running Audio2Face in headless mode with a Python API, generating animations for UE and MetaHumans, suited for advanced pipeline integration.

- Blender/Other Tools: PixelHair’s site (Yelzkizi) documents use with MetaHumans (visual focus), while Reallusion’s iClone community offers workflows to round-trip Audio2Face to UE via iClone.

- Industry Talks and Demos: GTC or Unreal Fest demos (e.g., “Audio2Face to MetaHuman Omniverse 2020 On-Demand”) showcase the workflow from creators.

Match tutorial versions to your software (e.g., 2021 tutorials may differ from 2024 due to updates). Official NVIDIA and Epic documentation provides reliable, current instructions. These resources support beginners in setup and advanced users in optimization.

What industries are using the Metahuman and Audio2Face pipeline for virtual production?

The integration of MetaHumans (realistic digital humans) and Audio2Face (AI-driven facial animation) enhances various industries with real-time virtual production and interaction:

- Game Development: MetaHumans create lifelike NPCs, and Audio2Face automates lip-sync for dialogue-heavy games like RPGs, speeding up cinematics and metaverse platforms.

- Film and Animation (Previs and VFX): MetaHumans aid scene previs, while Audio2Face quickly prototypes facial animations for animated content and VFX (e.g., CG doubles), outpacing manual keyframing for TV or ads.

- Virtual Assistants and AI Avatars: Customer service and education use MetaHumans for visuals and Audio2Face for real-time text-to-speech animation, enabling multilingual avatars in healthcare and finance via NVIDIA’s ACE.

- Broadcast and Live Events: MetaHumans serve as virtual presenters or VTubers, with Audio2Face animating faces from live voices or TTS for broadcasts, concerts, or VR events.

- Training and Simulation: VR training (e.g., military, corporate) uses MetaHumans animated by Audio2Face for realistic role-play in sales or emergency scenarios.

- Advertising and Marketing: Brands craft virtual ambassadors with MetaHumans, animated by Audio2Face for fast voice line updates and multilingual dubbing without motion capture.

- Content Creation by Individuals: Indie creators like YouTubers use these tools for high-quality shorts, making virtual production accessible to prosumers such as architects or solo 3D artists.

This pipeline boosts industries with speaking digital humans—games, film, customer service, and live entertainment—offering real-time interaction and reducing effort as the technologies advance.

FAQ

- Do I need an NVIDIA RTX GPU for Audio2Face with MetaHumans?

Yes. Audio2Face requires an NVIDIA RTX GPU (e.g., GeForce RTX 3070 or RTX A4000) because its AI and ray tracing features need RTX Tensor cores. It won’t run on AMD GPUs or older NVIDIA GTX cards; without a local RTX card, a cloud RTX GPU is the alternative.

- Is NVIDIA Omniverse Audio2Face free?

Yes. Audio2Face and Omniverse are free for individual use with an NVIDIA account via the Omniverse Launcher. Commercial use may require enterprise licensing, but there is no upfront cost to get started. MetaHumans, tied to Unreal Engine, are also free.

- How do I start with Audio2Face? Do I need the full Omniverse platform?

Install Audio2Face via the Omniverse Launcher; only the app and a small core are needed, not all of Omniverse. It works standalone for audio-based face animation but integrates with other Omniverse tools if you use them.

- What audio formats and inputs does Audio2Face accept?

Audio2Face accepts WAV and MP3 files (clean 16-bit audio at 44.1 or 48 kHz works best) as well as live microphone input for real-time animation. It needs only raw audio, not phoneme labels or text.

- Does Audio2Face generate emotions or just lip-sync?

Its primary job is lip-sync, but Audio2Emotion (since 2022.1) infers emotion from the audio and adds expressions such as smiles or frowns. Manual slider tweaks can refine specific expressions beyond basic lip-sync.

- Can I use Audio2Face with custom 3D characters, not just MetaHumans?

Yes. It supports custom characters via ARKit blendshape mapping or the Character Transfer tool for compatible morphs. MetaHumans are simpler because a mapping already exists, but custom rigs are possible with extra effort.

- How does Audio2Face compare to MetaHuman Animator?

Audio2Face uses audio and AI for fast lip-sync without cameras, while MetaHuman Animator (released 2023) uses video of an actor for detailed facial realism. Audio2Face suits automation; MetaHuman Animator suits actor-driven subtlety.

- What is USD, and is it required?

USD (Universal Scene Description) is the open 3D scene and animation format that Omniverse is built on, and it is the default format for Audio2Face exports to Unreal. Live Link or FBX conversion are alternatives, but USD is recommended for file-based transfer.

- Why don’t my MetaHuman’s eyes move with Audio2Face?

Audio2Face focuses on the mouth and some brow motion, not eye gaze or frequent blinking, so blinks in its output are minimal. Eye movement needs to be animated manually (for example, on the eye controls of the MetaHuman Face Control Rig) or supplied from another source.

- Which Unreal Engine and MetaHuman versions work with Audio2Face?

Unreal Engine 5.2 and 5.3 (with 5.4 expected) and MetaHumans created for UE5 work with Audio2Face 2023.1 or later, provided you use the NVIDIA plugin built for your UE version.

- Can Audio2Face output be edited in Unreal?

Yes. Once in Unreal, Audio2Face animation can be refined with tools such as Control Rig or the curve editor for full customization.
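Since Audio2Face output contains few blinks, one common fix is to add a procedural blink curve. The sketch below generates blink keyframes at randomized intervals; the 4-second mean interval and 6-frame blink length are illustrative defaults rather than measured values, and which curves or Control Rig controls you key with the result (for example the eye-blink curves) depends on your setup.

```python
import random


def blink_keys(duration_s, fps=30, mean_interval_s=4.0, blink_frames=6, seed=0):
    """Generate (frame, weight) keys for a procedural blink curve.

    Intervals are drawn uniformly within +/-50% of mean_interval_s; each blink
    ramps 0 -> 1 -> 0 over blink_frames frames. Values are illustrative only.
    """
    if mean_interval_s <= 0 or blink_frames < 2:
        raise ValueError("mean_interval_s must be > 0 and blink_frames >= 2")
    rng = random.Random(seed)  # seeded so the same clip always gets the same blinks
    total_frames = int(duration_s * fps)
    half = blink_frames // 2
    keys = []
    t = rng.uniform(0.5, 1.5) * mean_interval_s
    while True:
        start = int(t * fps)
        if start + blink_frames > total_frames:
            break  # the next blink would run past the end of the clip
        keys += [(start, 0.0), (start + half, 1.0), (start + blink_frames, 0.0)]
        t += rng.uniform(0.5, 1.5) * mean_interval_s
    return keys
```

Randomizing the interval avoids the mechanical look of evenly spaced blinks, and the fixed seed keeps the result stable when you regenerate it.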

Conclusion

Key Takeaways: NVIDIA Omniverse Audio2Face integrated with Epic’s MetaHumans in Unreal Engine 5 enables real-time, realistic facial animations from audio, accelerating lip-sync and performance creation. Audio2Face’s AI maps audio to facial movements, supports multiple languages, infers emotion, and connects seamlessly with MetaHumans via Unreal Engine plugins and USD exports, using Live Link for real-time or imported files for offline work.

For Unreal Engine 5 and MetaHuman users: This provides fast, consistent dialogue animation driven by audio, greatly reducing the need for manual keyframing. Setup requires installing Audio2Face, enabling the UE plugin, and using NVIDIA’s ARKit blendshape mapping, with options to tweak via Control Rig or add body mocap.

Recommendations: Use UE5.3+ and Audio2Face 2023.1+, opt for Live Link for efficiency, test with short samples, refine output for realism, and enhance with assets like PixelHair and eye animations.

Industry Impact: MetaHuman + Audio2Face is gaining traction in virtual production, merging artistry and automation for faster content creation and AI-driven NPCs.

Bottom Line: This pipeline transforms facial animation for digital humans, offering efficiency, accessibility, and realistic results for cinematics, virtual assistants, or multilingual games in Unreal Engine 5.

Sources and Citations

- NVIDIA Technical Blog – “Simplify and Scale AI-Powered MetaHuman Deployment with NVIDIA ACE and Unreal Engine 5” (Oct 2024) – discusses Audio2Face plugins and integration with MetaHumans (developer.nvidia.com).

- NVIDIA Omniverse Audio2Face Official Documentation: Audio2Face to UE Live Link Plugin – step-by-step guide for streaming Audio2Face animation to Unreal Engine (MetaHuman setup, plugin install, etc.) (docs.omniverse.nvidia.com).

- NVIDIA Developer Forums: Multiple threads on Audio2Face and MetaHuman:

  - “The best approach for Audio2Face blendshape data for MetaHuman in realtime.” – user Q&A about mapping 52 ARKit shapes to 72 MetaHuman shapes (forums.unrealengine.com).

  - “Audio2Face USD export not working with MetaHuman 5.3” – troubleshooting tips (using latest connector, Live Link workaround) (forums.developer.nvidia.com).

  - “Audio2Face Language?” – confirmation that Audio2Face works with different languages and is sound-based (no language tagging) (forums.developer.nvidia.com).

- Epic Games Unreal Engine Forums: Tutorial – Omniverse Audio2Face to MetaHuman – community tutorial outlining how to set up Audio2Face 2023.1.1 with UE5.2 MetaHumans (forums.unrealengine.com).

- 9meters Media Article: “NVIDIA Audio2Face: Revolutionizing Facial Animation in Real-Time” (Sep 2024) – an overview of Audio2Face’s features, how it works, benefits, and comparison to traditional methods (9meters.com).

- CGChannel News: “Omniverse Audio2Face gets new Audio2Emotion system” (Aug 2022) – details on Audio2Face features, RTX requirement, and that Omniverse tools are free for individuals (cgchannel.com).

- Hackernoon Article: “Omniverse Audio2Face: An End-to-End Platform For Customized 3D Pipelines” – explains what Audio2Face is and how it works (input .mp3/.wav -> animated face) (hackernoon.com).

- GitHub – NVIDIA ACE Samples: NVIDIA/ACE repository – contains the Kairos Unreal Engine sample demonstrating the Audio2Face microservice with MetaHuman (github.com).

- GitHub – AnimaVR NeuroSync Player: Open-source tool for real-time streaming of Audio2Face output into UE5 via Live Link (forums.unrealengine.com).
