MetaHumans are high-fidelity digital human characters created with Epic Games’ MetaHuman framework. In Unreal Engine 5 (UE5), animating these realistic characters can seem daunting, but a robust set of tools makes it accessible. This comprehensive guide will walk you through metahuman animation workflows step by step – from simple keyframing and retargeting existing animations to advanced facial motion capture with the new MetaHuman Animator. We’ll cover everything from required tools and quick-start tips to blending face and body animations, lip-syncing dialogue (even with AI voices), adding realistic hair, and best practices for both cinematics and games. Let’s dive in!

What tools are needed for Metahuman animation in UE5?

To animate a MetaHuman in UE5, you’ll need a few core tools and assets ready:

- Unreal Engine 5: Install UE5 with the Quixel Bridge plugin enabled to import MetaHumans. Supports Windows/macOS, fully compatible with MetaHuman assets. Essential for all workflows.

- MetaHuman Character: Create via MetaHuman Creator and import through Bridge. Comes fully rigged for face/body animation. Ready for immediate use.

- MetaHuman Plugin: Optional plugin enhances features like MetaHuman Animator for facial capture. Enabled by default with MetaHuman import. Supports advanced animation tasks.

- Input Devices: Use an iPhone (TrueDepth camera) for facial capture or mocap suits (e.g., Xsens) for body motion. Pre-recorded animation data also works. Optional but boosts realism.

- Animation Software: UE5’s Sequencer, Control Rig, and IK Rig handle most needs. External tools like Blender/Maya can create animations for import (FBX). Built-in tools suffice for beginners.

UE5’s integrated tools streamline MetaHuman animation. Optional devices enhance capture-based workflows.

What is the easiest way to get started with Metahuman animation?

The easiest way to animate a MetaHuman is using existing assets and UE5’s intuitive tools. Beginners can achieve quick results without complex setups, leveraging free animations and real-time capture for instant feedback. Experimentation with sample projects accelerates learning for new animators.

Start with premade animations or the Live Link Face app for facial capture. Free assets like Epic’s MoCap Online pack provide retargetable motions (e.g., walks, idles). The Live Link Face app streams iPhone facial expressions to the MetaHuman in real time, requiring minimal setup.

These methods bring characters to life quickly without animation expertise.

Use Sequencer and Control Rig for simple keyframing or pose application. Add a MetaHuman to a Level Sequence, assign animations, or keyframe poses (e.g., a wave) using the Control Rig. The Facial Pose Library offers preset expressions to enhance animations. This approach is accessible and fast for novices.

Learn from Epic’s sample projects or tutorials for practical insights. The MetaHuman sample project includes pre-animated characters to study. Following Epic’s documentation or community guides (e.g., for blinking animations) builds skills efficiently. Combining retargeted animations and facial capture creates engaging results in minutes.

How do I animate a Metahuman character in Unreal Engine 5?

To animate a MetaHuman in UE5, you’ll follow these steps:

- Import MetaHuman: Import via Quixel Bridge, providing a rigged skeleton and Control Rigs for face/body animation. Ensures immediate usability.

- Choose Animation Method:

- Manual Keyframing: Use Control Rig in Sequencer to pose and keyframe movements, offering precise control.

- Motion Capture (Body): Apply mocap data via IK Retargeter or Live Link for realistic body motion.

- Facial Capture: Use Live Link Face or MetaHuman Animator for real-time or processed facial animation.

- Audio-Driven Animation: Generate lip-sync and expressions from audio using MetaHuman Animator (UE5.5+).

- Retargeting Animations: Reuse existing animations (e.g., UE5 Manny) by retargeting to MetaHuman’s skeleton.

- Use Sequencer: Combine face/body animations in a Level Sequence, adjusting timing and blending transitions. Supports cinematic camera cuts.

- Refine Animation: Adjust details (e.g., hand positions, eye blinks) using Control Rig for polished results. Enhances realism.

- Playback/Render: Preview in viewport or render via Movie Render Queue for high-quality cinematics with ray tracing.

UE5 offers flexible animation methods for MetaHumans. Beginners can start with retargeting, while advanced users leverage capture or keyframing.

Can I animate Metahuman using Control Rig in Unreal Engine?

MetaHumans include built-in Control Rigs for precise animation in UE5. The Control Rig provides selectable controllers for body and facial bones, enabling posing directly in Sequencer or the Control Rig editor. It’s ideal for manual animation or refining mocap, offering flexibility without external tools.

Body animation uses controllers for limbs and torso, keyframed in Sequencer for motions like waving. Facial rigs with extensive controls (e.g., brows, jaw) allow nuanced expressions, supported by the Facial Pose Library. Animations can be baked into reusable Animation Sequences, streamlining workflows.

Control Rig is accessible via a MetaHuman’s Blueprint in Sequencer, selecting the provided rig asset (e.g., FaceControlBoard). Keyframing is intuitive, with options to adjust curves for smooth motion. It excels for additive animations, like layering head turns on walking cycles, enhancing creative control.

How do I keyframe animations manually for Metahuman in Sequencer?

To manually keyframe a MetaHuman, you’ll use Sequencer with the Control Rig or skeleton properties:

- Add MetaHuman: Drag the MetaHuman Blueprint into a Level Sequence, creating tracks for body/face meshes.

- Add Control Rig Track: Select the body or facial Control Rig to access controllers for keyframing poses.

- Pose at Start: At frame 0, pose the character (e.g., pelvis, hands) and keyframe with the S key.

- Set Next Pose: Move to a later frame, adjust controls (e.g., raise arm), and keyframe again for motion.

- Preview Interpolation: Scrub the timeline to check motion; adjust curves in Sequencer for smoothness.

- Continue Keyframing: Add poses for body/face (e.g., smile, blink) to build the animation sequence.

- Refine with Curve Editor: Fine-tune timing and easing using Sequencer’s curve editor for polished results.

- Playback/Iterate: Preview and tweak keyframes to perfect the animation’s flow and timing.

The Facial Pose Library speeds up facial keyframing with presets. Manual keyframing offers precise control, ideal for custom motions or tweaks.

How do I use Metahuman Animator for facial animation?

MetaHuman Animator captures high-fidelity facial performances for MetaHumans using video and depth data. It simplifies creating realistic facial animations, producing editable sequences in minutes, ideal for dialogue or cutscenes. The process leverages UE5’s tools and supported hardware for accuracy.

Record a performance using an iPhone 12+ or head-mounted camera, capturing dialogue via Live Link Face or Take Recorder. In UE5’s Capture Manager, import the video and depth data, then create a MetaHuman Identity by calibrating the actor’s face (e.g., aligning landmarks). Process the clip to generate a rig-faithful Animation Sequence, which can be tweaked with Control Rig for refinements like eye adjustments.

Combine facial animations with body motions in Sequencer, blending at the neck for seamless results. Good capture quality (lighting, framing) ensures optimal outcomes, as depth data enhances precision. MetaHuman Animator reduces weeks of manual work, making professional facial animation accessible to solo creators.

Can I use Live Link Face for real-time Metahuman facial animation?

Live Link Face enables real-time facial animation for MetaHumans using an iPhone’s TrueDepth camera:

- Set Up Live Link Face App: Install the free app on an iPhone X+ and connect to UE5 via the same network.

- Enable Live Link in UE5: Activate Live Link, Apple ARKit, and ARKit Face Support plugins in the project.

- Link MetaHuman: Assign the iPhone’s ARKit Face Subject to the MetaHuman Blueprint’s Live Link settings.

- Preview Animation: In Live mode, the MetaHuman mimics facial expressions and head movements instantly.

- Record Performance: Use Take Recorder to capture the animation for playback or editing in Sequencer.

- Adjust Settings: Tune tracking (e.g., smoothing) in the app for optimal results under good lighting.

Live Link Face is ideal for live demos or quick iteration, offering ARKit-driven facial capture. It supports simultaneous body motion capture, enabling full character puppeteering.

How do I sync voice and lip movements in Metahuman animation?

MetaHuman Animator’s Audio-to-Facial feature simplifies lip-sync for MetaHumans. It generates facial animations from audio, producing realistic lip movements and expressions, enhanced by manual or capture-based methods for nuanced results. Plugins or performance capture offer alternative approaches for specific needs.

In UE5.5+, import a SoundWave into MetaHuman Animator to create an Animation Sequence matching the audio’s phonemes. Clean audio ensures accurate lip-sync, though subtle expressions may need manual keyframing. This method is fast and efficient, ideal for voice-over scenes.

Manual keyframing in Sequencer uses ARKit blendshapes (e.g., JawOpen) to align mouth shapes with audio visemes, supported by pose libraries. Third-party plugins like Oculus OVR Lip Sync automate viseme curves but may require tweaks. Both approaches benefit from timing adjustments for plosives.

Capturing an actor’s performance with Live Link or MetaHuman Animator ensures natural lip-sync, as facial motions align with spoken dialogue. Fine-tune timing in Sequencer to correct lags, adding head nods or blinks for realism. These methods make MetaHumans feel alive with minimal effort.

Can I use AI-generated voice with Metahuman facial animation?

AI voices pair seamlessly with MetaHuman facial animation for indie creators.

Here’s how to do it:

- Get AI Voice Audio: Generate dialogue using tools like Replica Studios or Amazon Polly, saving as WAV.

- Generate Facial Animation: Use MetaHuman Animator’s Audio-to-Facial (UE5.5+) to create lip-sync from AI audio.

- Alternative – Replica Plugin: Replica’s UE plugin generates voice and lip-sync animation simultaneously for MetaHumans.

- Refine Emotion/Timing: Add facial expressions (e.g., smiles) to enhance flat AI intonation, adjusting timing for naturalness.

- Sync and Polish: Align animations with audio pauses, adding head nods for realistic speech emphasis.

AI voices enable rapid prototyping without actors. Clear enunciation improves lip-sync quality.

Additional steps for a convincing performance: - Choose Clear Voices: Select AI voices with adjustable speed/emotion for better animation readability.

- Manual Adjustments: Tweak expressions in Sequencer to match dialogue context, enhancing emotional impact.

This virtual pipeline automates lip-sync, saving weeks of manual work. MetaHuman’s tools make AI-driven animation accessible.

How do I import motion capture data to animate Metahuman characters?

Motion capture (mocap) data animates MetaHumans efficiently in UE5.

To import and apply mocap data for a MetaHuman in UE5, consider the following approaches:

- Live Streaming: Use Live Link with mocap suits (e.g., Rokoko) to stream motion to the MetaHuman’s Blueprint in real time.

- Import FBX: Import mocap as FBX, creating a Skeletal Animation asset for the source skeleton (e.g., UE4 mannequin).

- Retarget to MetaHuman: Use IK Retargeter to map animations to MetaHuman’s skeleton, adjusting for bone differences.

- Apply Animation: Assign retargeted animation to the MetaHuman in Sequencer or Animation Blueprint for playback.

- Combine with Face: Add facial animation (e.g., via Live Link Face) to body mocap, blending in Sequencer.

- Use Take Recorder: Record live mocap in UE5, producing reusable animation assets for body/face.

Ensure A-pose alignment and check scale/root motion. Test joints for accuracy, tweaking mappings if needed.

Tips for import: - Pose Alignment: Match mocap’s T-pose/A-pose to MetaHuman’s A-pose in IK Retargeter settings.

- Root Motion: Enable/disable root motion based on whether the character should move in the world.

- Joint Tweaks: Adjust finger/shoulder mappings in IK Retargeter to fix retargeting issues.

UE5’s retargeting tools simplify mocap integration, preserving performance nuances with minimal effort.

Can I retarget existing animations to Metahuman using IK Retargeter

UE5’s IK Retargeter enables retargeting animations to MetaHumans from other skeletons (e.g., UE4 Mannequin).

Using the IK Retargeter for MetaHumans:

- Set Up IK Rigs: Create IK Rigs for source (e.g., UE4_Mannequin_Skeleton) and MetaHuman skeletons.

- Create Retargeter Asset: Link source and target IK Rigs, using Epic’s presets for common skeletons.

- Define Bone Mappings: Auto-match major chains (e.g., hips, knees), ignoring MetaHuman’s extra bones.

- Preview/Adjust: Load source animation, tweak limb scaling or root alignment to fix issues like foot sliding.

- Export Animation: Bake retargeted animation as a MetaHuman-compatible Animation Sequence.

- Apply to MetaHuman: Use the new animation in Sequencer or Animation Blueprint for playback.

IK Retargeter’s visual IK system reduces foot sliding compared to UE4’s method. It supports retargeting between MetaHumans or Animation Blueprints for gameplay.

Retargeting unlocks existing animations, saving significant animation effort. MetaHumans gain access to diverse motions effortlessly.

How do I use marketplace animations with Metahuman characters?

Marketplace animations for Epic’s skeletons (e.g., UE4 Mannequin) can animate MetaHumans via retargeting.

Here’s how:

- Identify Source Skeleton: Check the pack’s skeleton (e.g., UE4_Mannequin or UE5 Manny).

- Set Up Rigs: Use or create IK Rigs for the source and MetaHuman skeletons.

- Retarget Animations: Use IK Retargeter to map animations to MetaHuman, often with pack-provided setups.

- Batch Export: Export all animations at once to create MetaHuman-compatible assets.

What’s the best workflow for combining facial and body animations in UE5?

Combining facial and body animations ensures cohesive MetaHuman performances.

Here are best practices for merging facial and body animations:

- Use MetaHuman Blueprint: Animate via the Blueprint in Sequencer to manage body/face tracks cohesively.

- Layered Blending: MetaHuman’s Anim Blueprint blends face over body at neck joints (e.g., neck_01).

- Adjust Neck Blending: Modify blend bone (e.g., to neck_01) to prioritize facial head motion if needed.

- Align Timelines: Synchronize body/face tracks in Sequencer to match performance timing (e.g., dialogue start).

- Post-Process Animations: Apply facial animations as post-process, ensuring body animations don’t override.

- Check Overlaps: Ensure body animations exclude facial bones to avoid conflicts (e.g., jaw motion).

The Blueprint’s internal blending simplifies merging. Adjust blend settings to resolve issues like floating heads.

UE5’s design makes combining animations straightforward, enabling reusable pipelines for seamless performances.

How do I animate emotions and expressions with Metahuman?

MetaHuman facial expressions convey emotions using rigs or capture.

Here are approaches to animate emotions:

- Facial Pose Library: Apply premade poses (e.g., Smile) in Sequencer, keyframing intensity for transitions.

- Manual Rig Control: Keyframe facial controllers (e.g., brows, mouth) for custom expressions like anger.

- Blendshape Animation: Animate ARKit blendshapes (e.g., EyeSquint) for precise morph target control.

- Facial Mocap: Capture real performances via Live Link Face or MetaHuman Animator for natural emotions.

- Eye Animation: Keyframe eye rotation/blinks to enhance emotions (e.g., downward gaze for sadness).

Subtle expressions work for realism; exaggerate for wide shots. Sync body language (e.g., slumped shoulders) for impact.

Mirroring real expressions or referencing videos aids manual animation. MetaHumans achieve emotive performances with careful control.

Can I use AI-generated voice with Metahuman facial animation?

Yes – as discussed, AI voices can drive MetaHuman facial animation either through audio-driven animation or plugins like Replica. The key points:

- Generate your AI voice clip and import it.

- Use UE5.5’s Audio-to-Facial feature to create lip-sync animation from that clip.

- Optionally use tools like Replica Studios’ MetaHuman integration for one-step voice-and-lipsync.

- Then refine the expression/emotion manually if needed to match the context of the dialogue.

- AI voices in combination with automated facial animation enable a fully virtual acting pipeline, which is incredibly useful for indie projects.

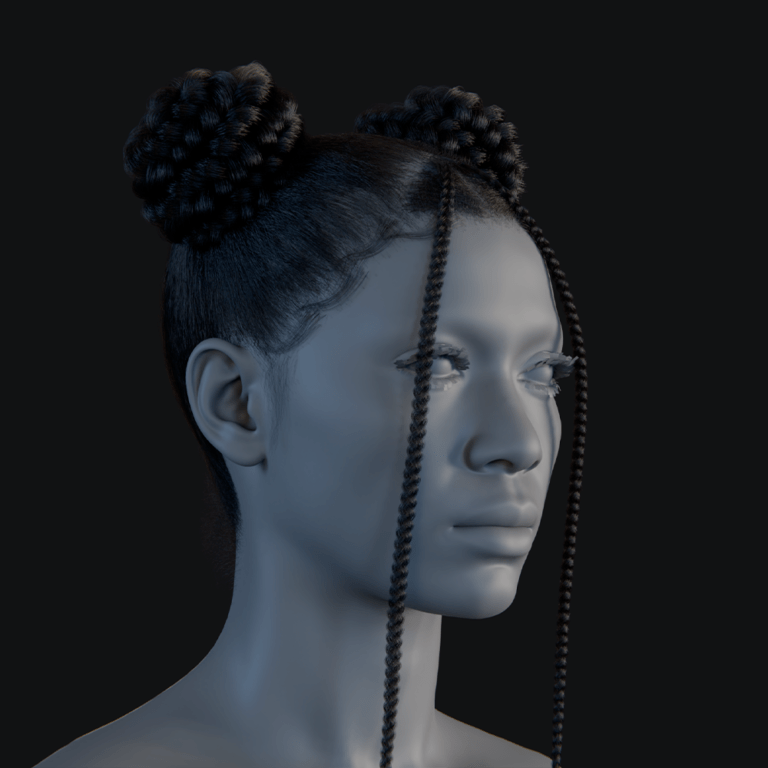

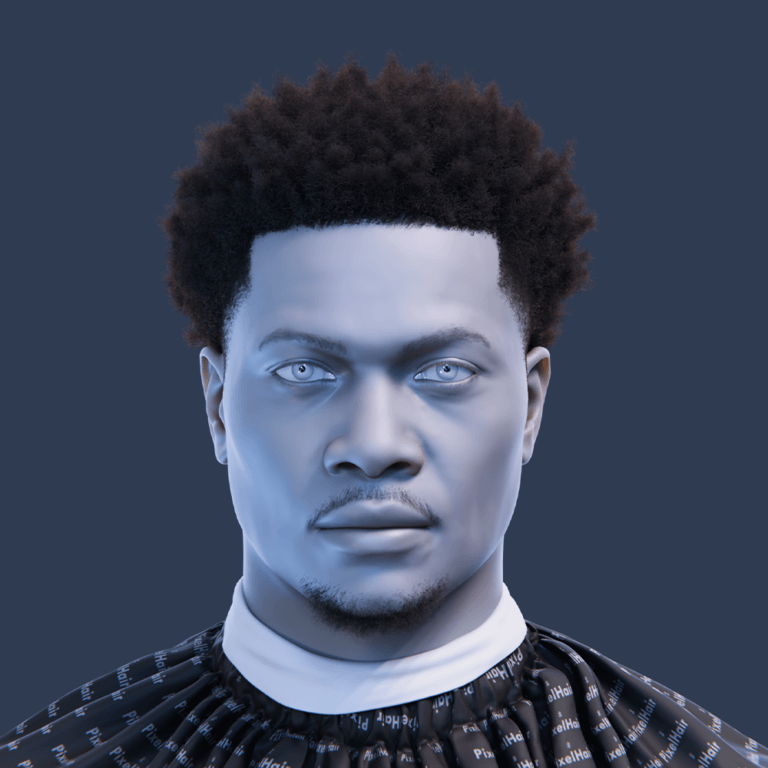

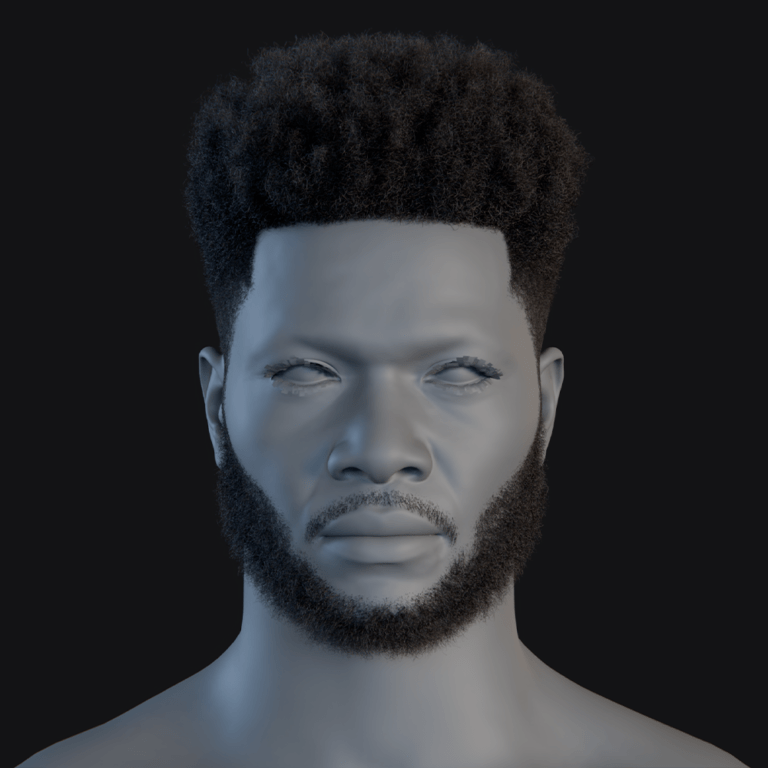

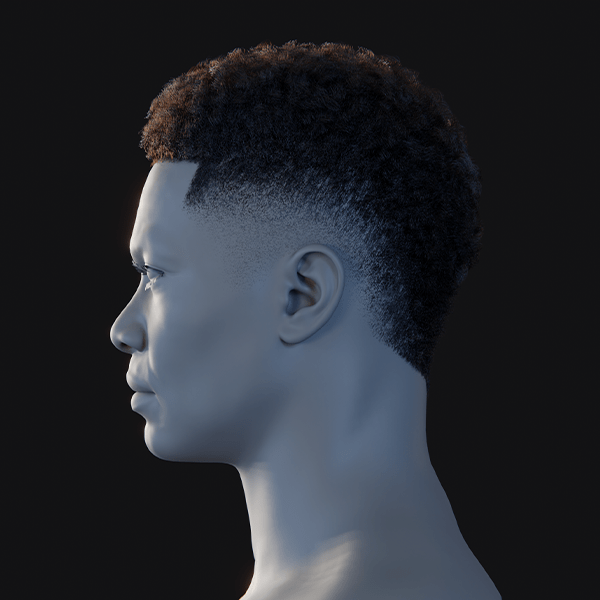

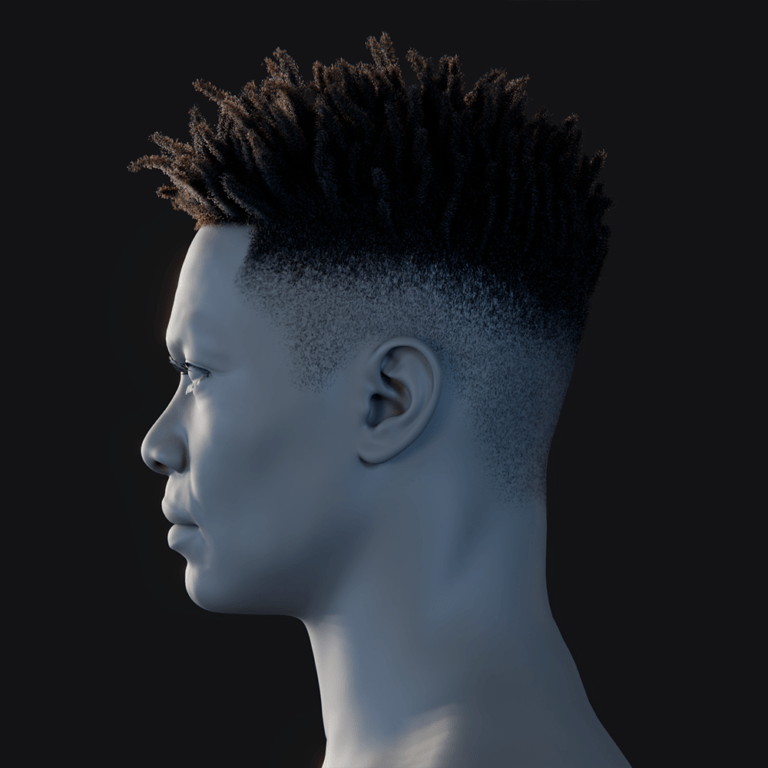

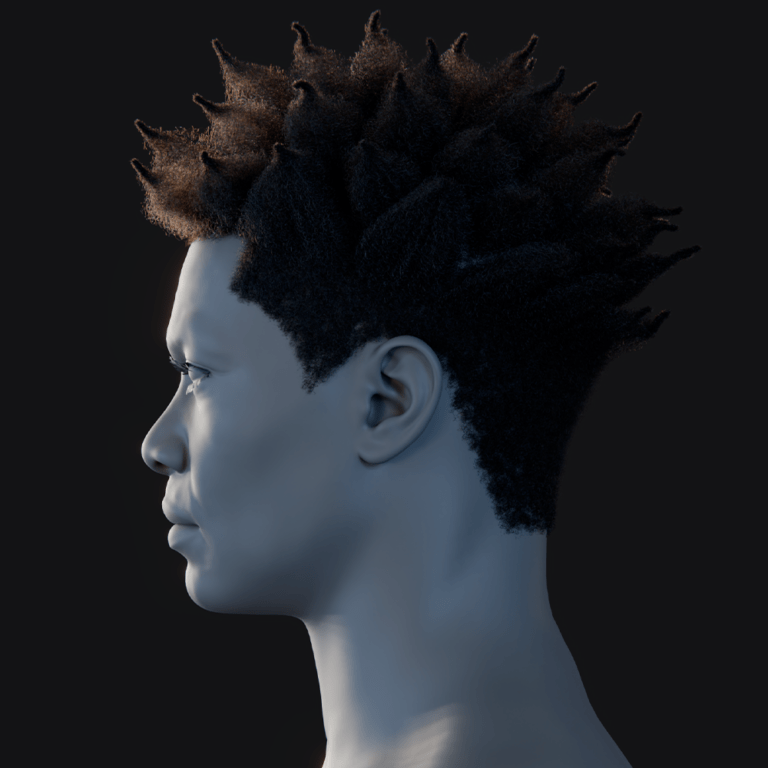

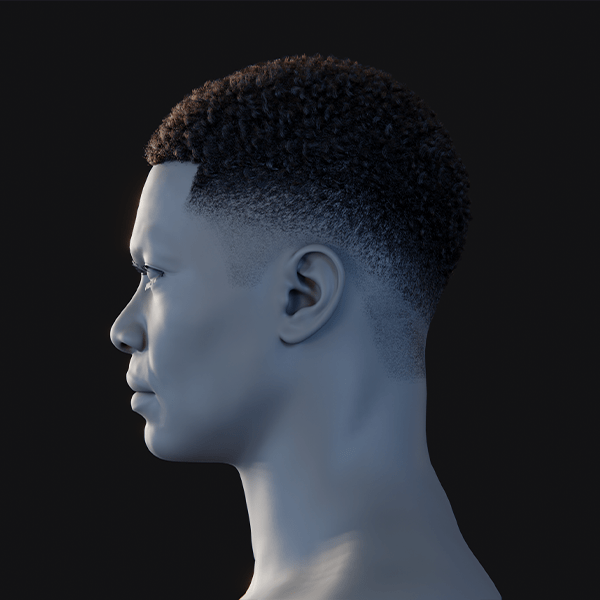

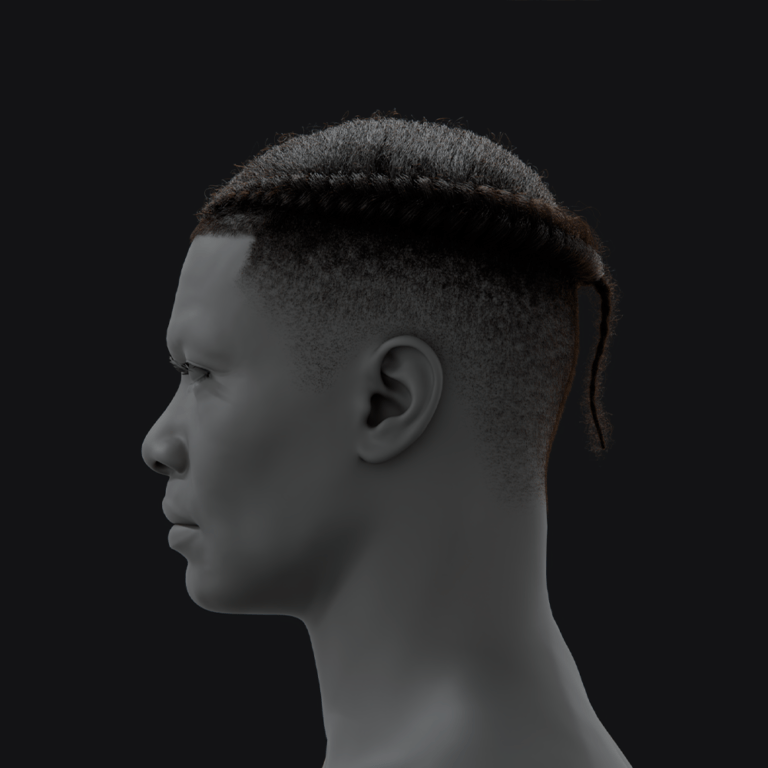

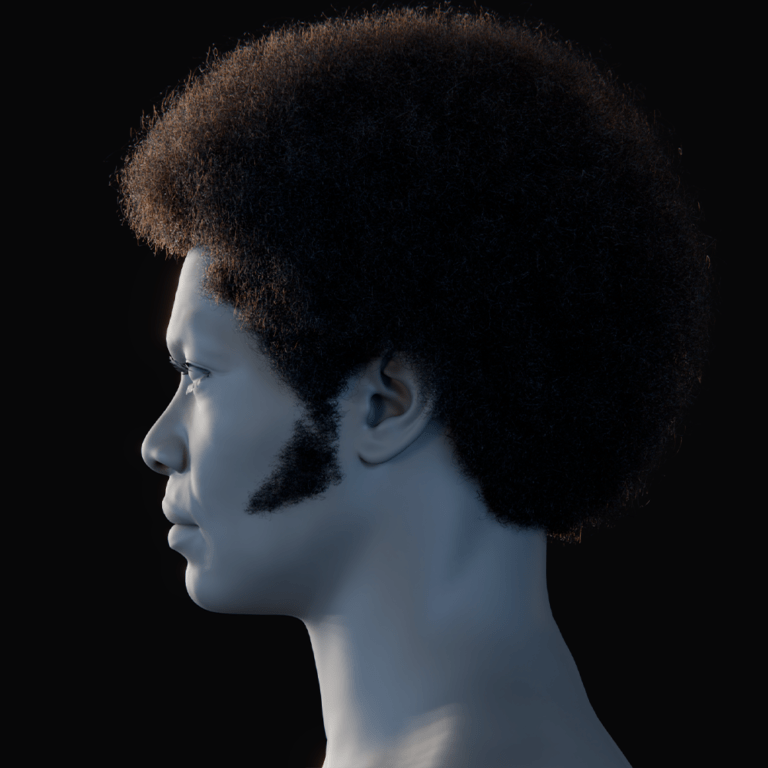

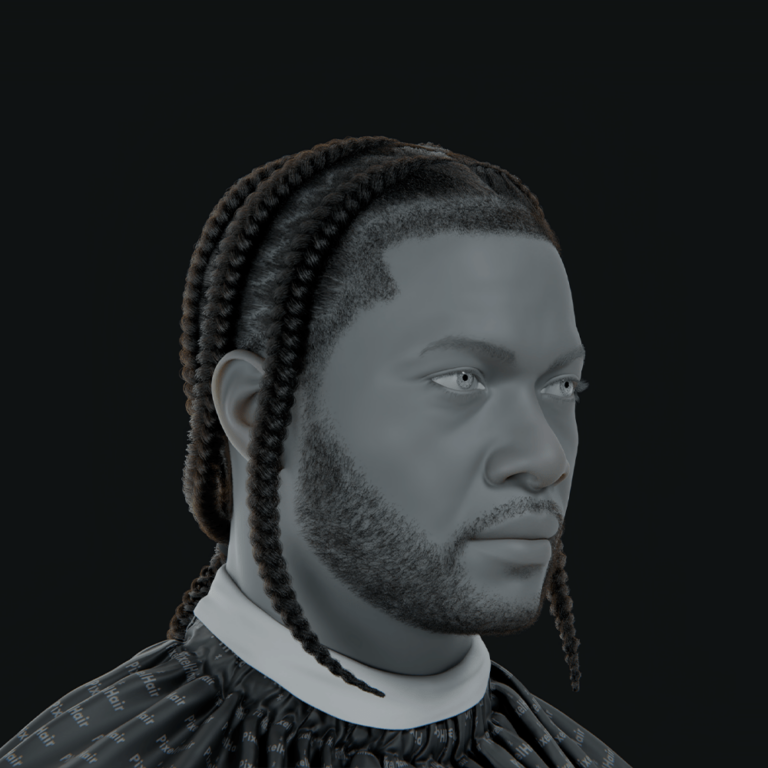

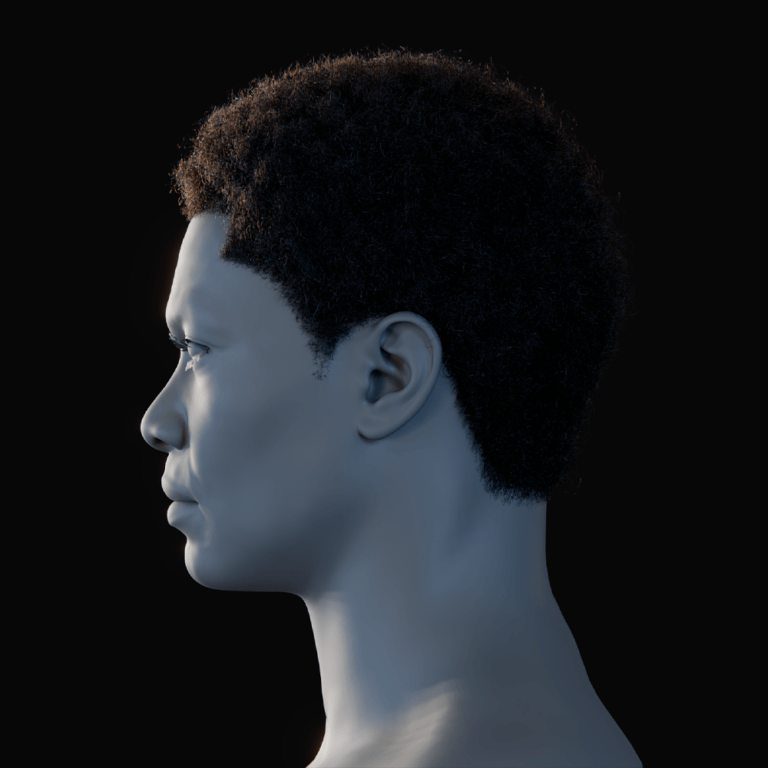

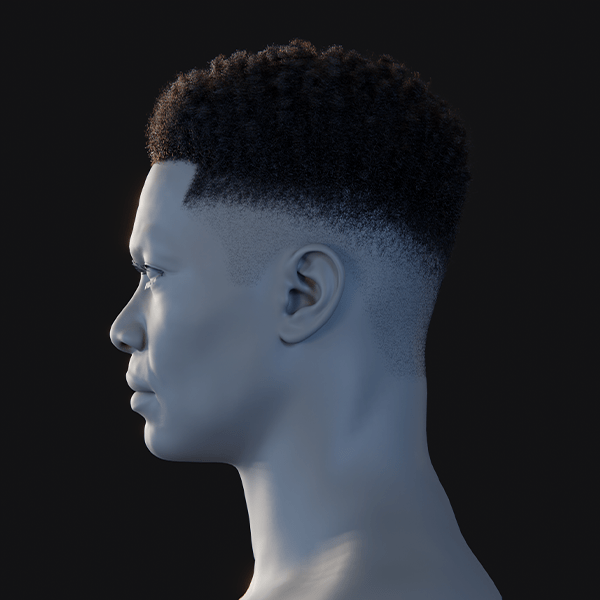

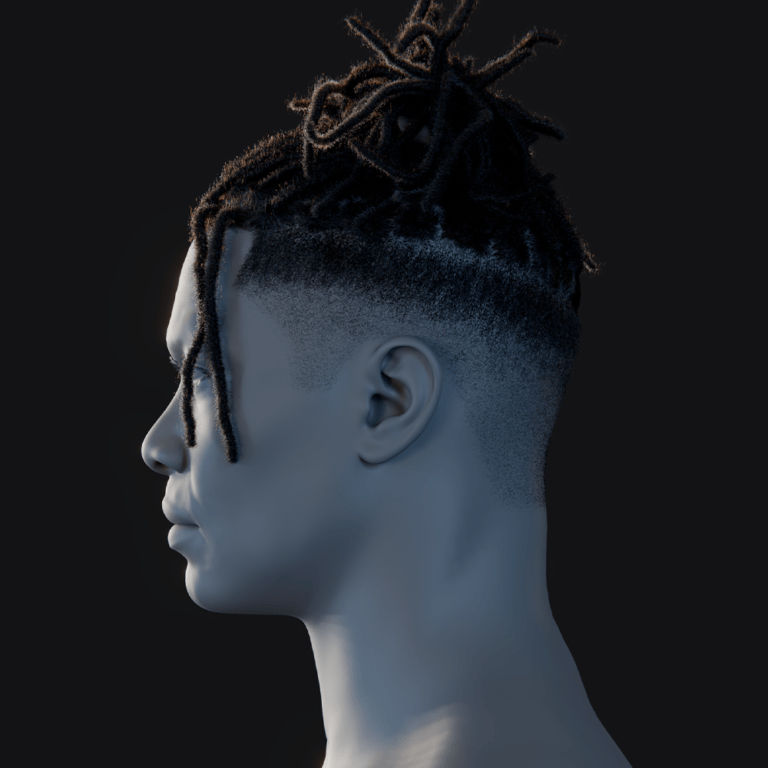

How can PixelHair be used to enhance animated Metahuman characters with realistic hairstyles?

PixelHair enhances MetaHuman hairstyles with diverse, realistic assets. It offers premade styles (e.g., braids, afros) for Blender/UE5, improving character visuals. The process integrates hair seamlessly, boosting animation immersion.

In Blender, PixelHair’s hair cap fits MetaHuman heads using a shrink-wrap modifier, exporting as Alembic/FBX. In UE5, import as Groom or static mesh, attaching to the MetaHuman’s Blueprint head slot. Adjust LODs and add physics (e.g., Groom physics) for natural movement during animation.

PixelHair’s detailed styles avoid flat looks, supporting cultural diversity. Mesh-based hairs ensure visibility across LODs, unlike some default grooms. Realistic hair motion complements MetaHuman animations, enhancing overall fidelity.

How do I export animated Metahuman characters for cinematic rendering?

Exporting MetaHuman animations involves rendering videos or transferring animation data, with Movie Render Queue (MRQ) as the primary tool for cinematics.

Here are the common methods:

- Movie Render Queue (MRQ): Render high-quality cinematics using MRQ in UE5, setting up a Level Sequence with MetaHuman and cameras, configuring resolution, anti-aliasing, and ray tracing, then outputting image sequences or videos for polished results. Ideal for short films showcasing MetaHuman detail.

- Sequencer Export (Legacy): Older “Render to Movie” option in Sequencer, less powerful than MRQ, recommended only for quick tests due to limited customization and support. Not ideal for final output.

- Real-Time Capture: Use OBS or UE5’s nDisplay/Viewport for fast, low-quality captures, suitable for previews but not final renders, as MRQ offers superior visual fidelity.

- FBX Animation Export: Bake MetaHuman skeletal mesh tracks to Animation Sequences in Sequencer, then export as FBX for external tools like Maya, handling separate face/body skeletons carefully. Less common unless required for VFX pipelines.

- In-Engine Use: For games, include animations/Level Sequences in the project for real-time playback, avoiding export by triggering cutscenes directly in Unreal, optimizing for performance.

MRQ excels for cinematic quality, capturing MetaHuman materials like skin and hair. Ensure correct playback ranges and camera assignments for seamless exports.

What are the best practices for animating Metahuman for short films or games?

MetaHuman animations require tailored approaches for cinematic or game contexts, balancing realism and performance.

Here are some best practices that apply across the board:

- Plan with Reference: Use storyboards for films or define game movement styles, referencing videos to capture realistic nuances, ensuring MetaHuman animations align with narrative or gameplay goals.

- Hybrid Animation Tools: Combine mocap for broad motions (e.g., walking) with manual keyframing for details, leveraging mocap’s speed and keyframes’ precision to enhance efficiency and personality.

- Game Optimization: Use LOD Sync to reduce complexity for distant MetaHumans, simplify materials, and employ Animation Blueprints for efficient in-game actions, testing on target hardware early.

- Cinematic Quality: Enable ray tracing, high shadow resolution, and MRQ supersampling for films, using close-ups to highlight MetaHuman facial micro-expressions, maximizing visual impact.

- Realistic Movement: Add eye saccades, facial idles, and physics-based secondary motion (e.g., cloth, hair) to avoid uncanny valley, ensuring natural weight and environmental interactions.

- Iterate and Test: Iterate performances with MetaHuman Animator for films or refine game animations for responsiveness, testing for technical issues like root motion or blueprint errors.

Epic’s sample projects (e.g., Matrix Awakens) provide setup insights. Audio-animation synergy, like matching footsteps, enhances realism for both films and games.

How do I create looping or walk-cycle animations for Metahuman characters?

Looping animations like walk cycles for MetaHumans require seamless start/end poses, leveraging existing assets or manual keyframing.

Here are the key steps:

- Design Seamless Loops: Ensure start/end poses match (e.g., right foot forward at frame 0 and 30), creating smooth walk or idle cycles, adjusting tangents for continuity.

- Use References: Reference “contact, down, pass, up” poses or existing cycles (e.g., MCO Mocap Basics’ looping walks) to simplify creation, retargeting to MetaHumans for instant use.

- Root Motion or In-Place: Choose in-place for game locomotion or root motion for cinematics, ensuring step distance matches movement speed to prevent sliding.

- Test Loop Seams: Preview in Persona to check for pops or foot slides, offsetting keyframes if needed to perfect the loop transition, ensuring visual continuity.

- Animation Blueprints: For games, use Blend Spaces for walk/run transitions, blending loops based on speed/direction, ensuring smooth gameplay locomotion.

- Facial Idle Loops: Create subtle breathing or look-around cycles (e.g., 120-frame inhale/exhale), looping seamlessly to maintain lifelike facial presence.

Retargeted mocap libraries save time, while manual tweaks ensure MetaHuman fidelity. Test root motion and foot locking to avoid sliding in loops.

Where can I find free resources and assets for Metahuman animation?

Free resources for MetaHuman animation are abundant in Unreal’s ecosystem, offering assets and tools to streamline workflows.

Here are key sources:

- Epic Marketplace: Free packs like MoCap Online’s Animation Pack provide retargetable motions (e.g., runs, jumps), while the Animation Starter Pack offers UE4 mannequin animations adaptable for MetaHumans.

- Epic Samples: MetaHuman sample project includes pre-animated characters; Lyra Starter Game provides retargetable Manny/Quinn animations, offering setup and animation insights for learning.

- MetaHuman Creator: Offers 50+ free preset characters with rigs, enabling animation reuse across models without modeling, ideal for testing or populating scenes.

- Mixamo Animations: Free FBX motions (e.g., dances, walks) from Mixamo, retargetable to MetaHumans in UE5, providing a vast library for quick animation integration.

- Blender Community: Export MetaHuman skeletons to Blender for free animation, with community rigs or tools on Blender Market/GitHub enhancing workflows, supporting external animation creation.

- Learning Resources: Epic’s documentation, Unreal forums, and YouTube tutorials offer free scripts, control setups, or project files, while mocap libraries (e.g., CMU) provide retargetable data.

MetaHuman Animator and Live Link Face are free for facial animation. Combining these resources allows cost-free animation, focusing effort on unique project elements.

What are common mistakes to avoid in Metahuman animation workflows?

Avoiding pitfalls in MetaHuman animation ensures smoother workflows and professional results.

Here are some common mistakes and how to avoid them:

- Incorrect Blueprint Use: Animating skeletal meshes instead of MetaHuman Blueprint causes face/body desync; always use BP_[Name] in Sequencer for proper Anim Blueprint integration.

- Live Link Oversights: Forgetting to enable Live Link/ARKit plugins or set ARKit Face Subject in MetaHuman details prevents facial animation; verify settings before capture.

- Retargeting Errors: Mismatched source/target poses (e.g., T-pose vs. A-pose) cause distorted limbs; align poses in IK Retargeter and preview to adjust bone mappings.

- Root Motion Mismatch: Inconsistent root motion/in-place settings lead to sliding; decide early, convert animations as needed, and ensure game logic aligns with animation style.

- Facial Animation Issues: Face not animating in-game due to missing auto-play or post-process evaluation; enable “Evaluate Post-Process Animations” in Sequencer for face tracks.

- Performance Overload: Multiple high-LOD MetaHumans with grooms tank performance; use medium LOD during editing, optimize materials, and limit on-screen characters for smooth playback.

Not baking animations risks loss if Control Rig tracks are skipped; LOD hair issues cause baldness at distance; and ignoring physics misses natural motion. Testing early prevents technical fixes later.

FAQ questions and answers

- Do I need an iPhone or special camera to animate MetaHuman faces?

An iPhone with TrueDepth for Live Link Face offers easy, high-quality facial capture, but it’s not required. Manual keyframing, audio-driven animation, or MetaHuman Animator with non-ARKit video are alternatives. Non-iPhone video lacks depth data, potentially reducing quality. These options ensure flexibility for animating MetaHuman faces without specialized hardware. - Can I animate a MetaHuman without any motion capture at all?

MetaHumans can be fully animated using hand-keyframing in Control Rig and Sequencer. Body movements, facial expressions, and lip-sync are all achievable manually, though more time-consuming than mocap. This approach suits short or simple animations, treating MetaHumans like standard CG characters. Mocap is optional for realistic motion. - What version of Unreal Engine supports MetaHuman Animator and audio-to-face features?

MetaHuman Animator is supported in Unreal Engine 5.2, with enhancements in 5.3. Audio-driven facial animation requires UE 5.5 or later. Ensure the MetaHuman plugin is updated via Epic Games Launcher. Check release notes for the latest feature compatibility in early 2025. - How do I animate MetaHuman eyes and blinking?

Animate MetaHuman eyes via Control Rig or eye bone rotation, using a LookAt node for targeting objects. Blinking uses eyelid blendshapes or rig controls, with periodic blinks added for realism. Pupil dilation in materials is optional for extreme detail. These techniques create lifelike eye movements. - Are MetaHumans suitable for gameplay, or only cinematics due to performance?

MetaHumans are viable for gameplay with optimization like LODs and simplified materials. They’ve been used in demos like Matrix Awakens for crowds and characters. Performance management is key, especially on mobile, but consoles/PCs handle multiple MetaHumans. They’re not limited to cinematics if optimized. - Does animating MetaHumans require programming or blueprints?

Animating MetaHumans in Sequencer or Animation Editors requires no coding, ideal for artists. Keyframing, retargeting, and mocap are code-free processes. Blueprints or code are needed only to trigger animations in-game, like event-based playback. This keeps animation creation designer-friendly. - Can I use my own custom character’s animations on a MetaHuman?

Custom character animations from tools like Maya can be retargeted to MetaHumans via FBX import. Use IK Rig and IK Retargeter to map animations to the MetaHuman skeleton, even with differing skeletons, through pose matching. This allows reuse of existing animations effectively. - Are MetaHuman animations and assets free to use in my projects?

MetaHuman characters and animations are free for Unreal Engine projects, including commercial ones, under Epic’s license. Marketplace or Mixamo animations require checking their licenses, but are typically usable. MetaHuman assets are cost-free with an Epic account, supporting broad project use. - How do I make a MetaHuman talk in different languages – does the lip sync adapt to any language?

MetaHuman Animator’s audio-to-face feature animates lips based on phonetic sounds, supporting any language like Spanish or Japanese. Adjustments may be needed for specific phonetics (e.g., rolling “R”). Accurate voice recordings, including AI voices, ensure effective lip-sync across languages. - What’s the difference between using MetaHuman Animator and the Live Link Face app?

MetaHuman Animator processes recorded video offline for high-fidelity facial animation, capturing fine details like wrinkles. Live Link Face streams real-time performances with less detail, ideal for quick takes or puppeteering. Animator suits final takes, while Live Link aids rehearsals.

Conclusion

Animating MetaHumans in Unreal Engine 5 enables creators, from solo developers to studios, to produce lifelike digital humans using tools like Control Rig for keyframing and MetaHuman Animator for facial performance. The process involves setting up essential tools, blending facial and body animations with Unreal’s sequencing, and retargeting animations via IK Retargeter. Best practices include planning with references, combining mocap with manual tweaks, optimizing for platforms, and avoiding pitfalls to ensure high-quality results. Free resources, such as Epic’s samples and marketplace animations, accelerate workflows. Evolving features like audio-driven lip-sync enhance accessibility, allowing indie developers to create cinematic or playable characters. UE5’s MetaHuman framework supports real-time, emotive storytelling and fluid gameplay animation.

With practice, you’ll be able to direct your MetaHumans as naturally as live actors, blending technology and artistry. We hope this comprehensive tutorial has demystified the process and empowered you to embark on your own MetaHuman animation projects. Happy animating!

Sources and citation

- Epic Games Documentation – Animating MetaHumans (Control Rig): “This page demonstrates how to animate a MetaHuman in Sequencer using Control Rig…” – Epic Developer Communitydev.epicgames.comdev.epicgames.com

- Epic Games Documentation – Live Link Face Workflow: “Use Live Link on a mobile device to capture facial animation and apply it to your MetaHumans in Unreal Engine in real time…” – Epic Developer Communitydev.epicgames.comdev.epicgames.com

- Epic Games Blog – MetaHuman Animator Release: “MetaHuman Animator is a new feature set that enables you to capture an actor’s performance using an iPhone or stereo head-mounted camera… and apply it as high-fidelity facial animation on any MetaHuman…” – Unreal Engine Blog (June 15, 2023)unrealengine.comunrealengine.com

- Epic Games Documentation – Audio-driven Facial Animation: “The Audio Driven Animation feature in MetaHuman Animator gives you the ability to process audio into realistic facial animation.” – Epic Developer Communitydev.epicgames.comdev.epicgames.com

- Epic Games Documentation – Retargeting Animations to MetaHuman: “Retarget animations from the Unreal Engine 4 Mannequin to a MetaHuman… You can use this method to retarget any animation asset that uses the UE4 Mannequin’s skeleton to any MetaHuman.” – Epic Developer Communitydev.epicgames.comdev.epicgames.com

- Epic Games Forum – Blending Face and Body Animation: “The Face_AnimBP… uses a Layered blend per bone node… By doing so, you get the animation from the iPhone from the neck_01 up, while the rest of the body is driven by the body animation.” – Unreal Engine Forumsforums.unrealengine.comforums.unrealengine.com

- Blender Market (Superhive) – PixelHair for MetaHumans Description: “PixelHair offers realistic hair with realistic volume and appearance. It is made using the default Blender Particle system and works the same way as any other hair made in Blender’s particle system.” – PixelHair Braids product pageblendermarket.comflippednormals.com

- Unreal Engine Marketplace – MoCap Online Free Pack: “Enhance your Unreal Engine projects with MoCap Online’s free UE5 animation pack. This curated collection offers professional-grade motion capture animations…” – Marketplace Listingunrealengine.com

- Voicebot.ai – Replica Studios MetaHuman Voices: “With the integration, Replica’s voices will lip-sync in perfect time with the digital characters put together on MetaHuman Creator… animators often lose days or weeks to manually animate lips; Replica’s AI voice pipeline renders dialogue and narration within minutes.” – Voicebot articlevoicebot.aivoicebot.ai

- Rokoko Blog – Keyframing vs Mocap for MetaHumans: “You can try manual keyframe animation… but we suggest motion capture as the most efficient option for body animation. What keyframe animators do in days, motion capture can do in minutes.” – Rokoko Guide to Animating MetaHumansrokoko.comrokoko.com

Recommended

- Why PixelHair is the Best Asset Pack for Blender Hair Grooming

- How to Create Cinematic Motion Capture with Move One and Metahuman Animator on a Budget (No Mocap Suit Needed)

- How do I lock a camera to an object in Blender?

- How to Make Hair in Blender

- What 3D Program Did Arcane Use? An In-Depth Look at the Animation Tools Behind Riot Games’ Hit Series

- The Best Hair Grooming Tools for Unreal Engine

- Can I Improve My Metahuman in Blender? A Comprehensive Guide

- How to Make Anything a LEGO in Blender with Geometry Nodes: A Step-by-Step Guide

- What is the clipping range in Blender cameras?

- Intergalactic: The Heretic Prophet Controversy – Exploring the Backlash and Public Discourse