What is Skibidi Brainrot and why is it trending?

Skibidi Brainrot describes the viral fixation on the Skibidi Toilet meme series, jokingly said to cause “brain rot,” a slang term for low-quality, addictive content that dulls the mind. Skibidi Toilet was created by Alexey Gerasimov (DaFuq!?Boom!). It is a 3D animated web series in which singing human heads emerge from toilets, set to a remix of Timbaland’s “Give It to Me” and Biser King’s “Dom Dom Yes Yes,” and battle humanoids with speakers and cameras for heads in a dystopian setting. It launched in February 2023 with an 11-second YouTube video. Since then it has amassed billions of views and become a hallmark of Generation Alpha’s internet culture.

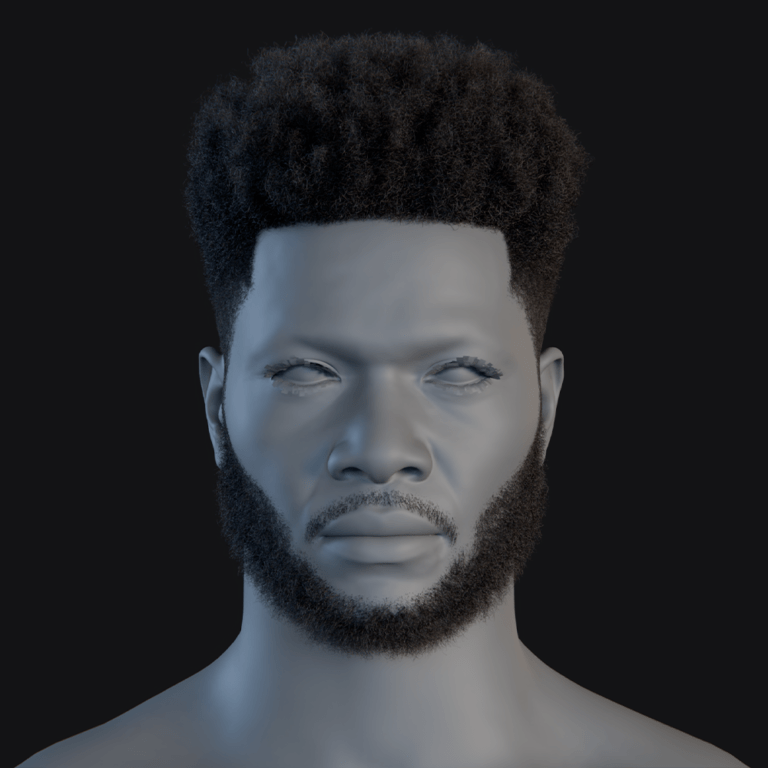

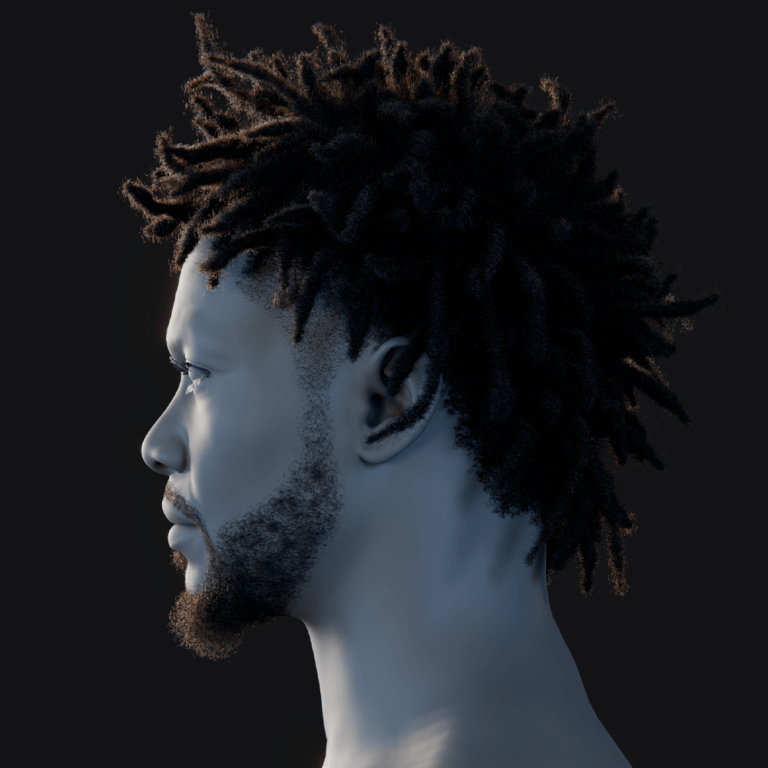

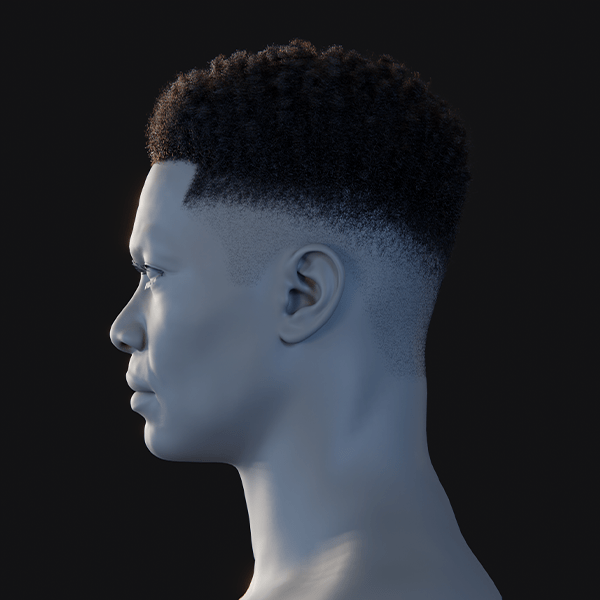

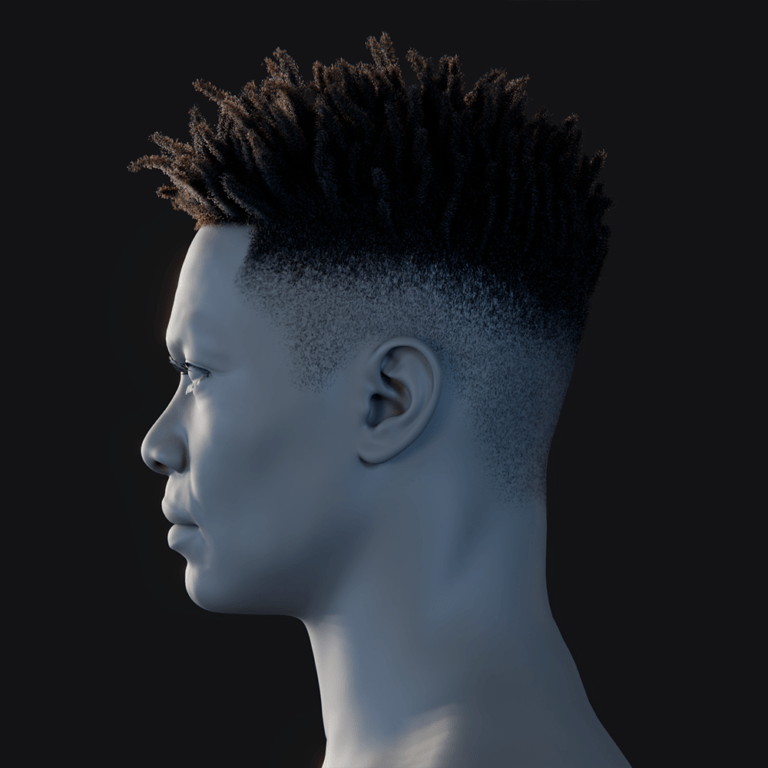

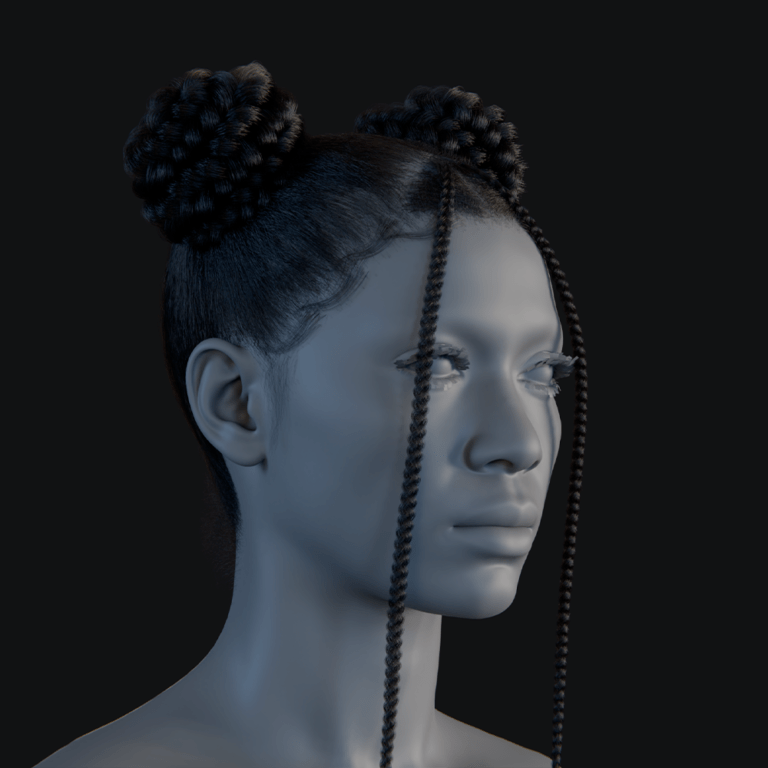

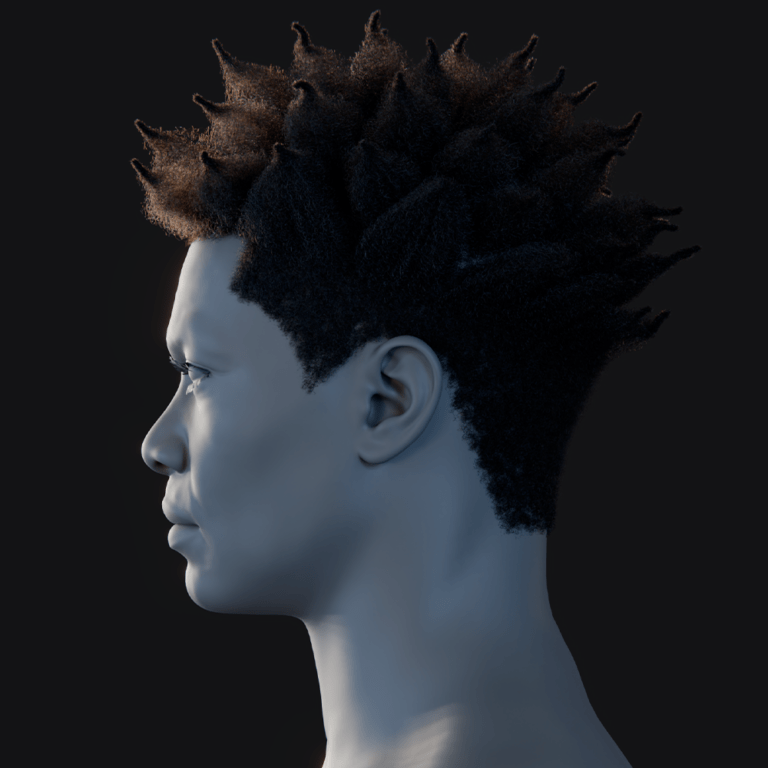

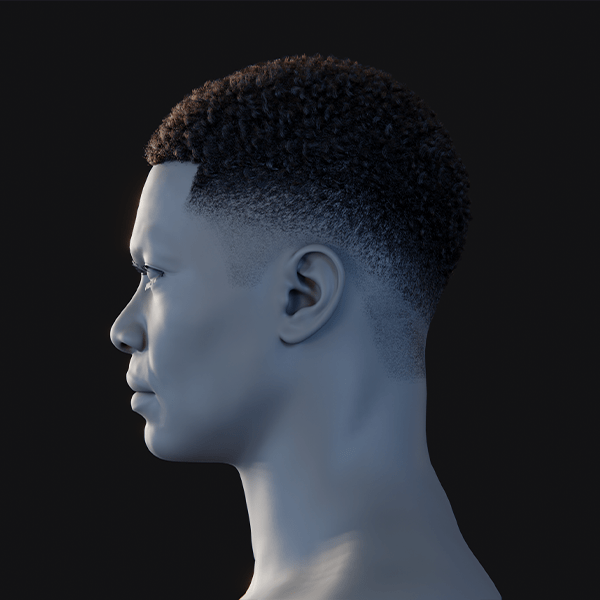

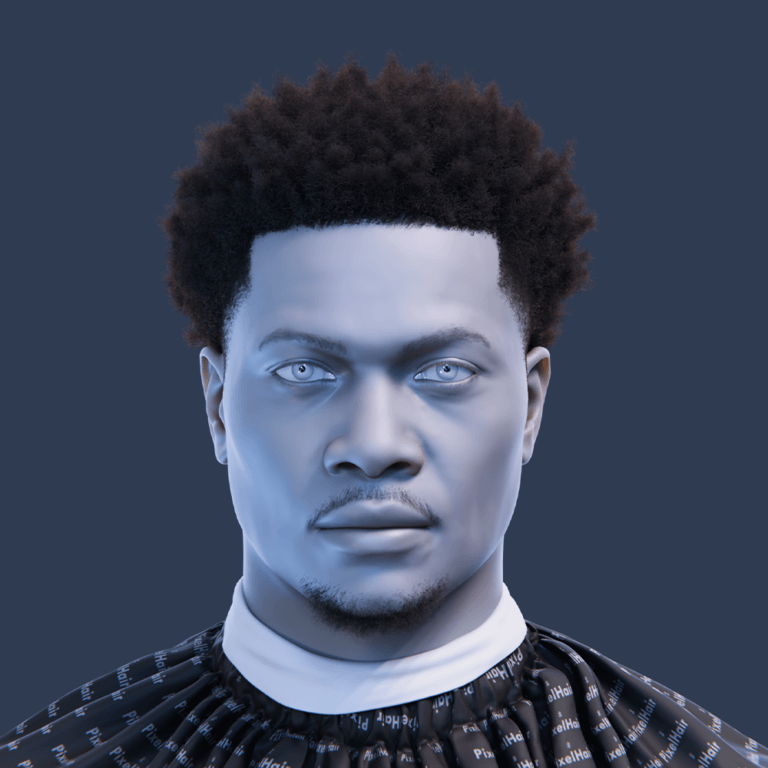

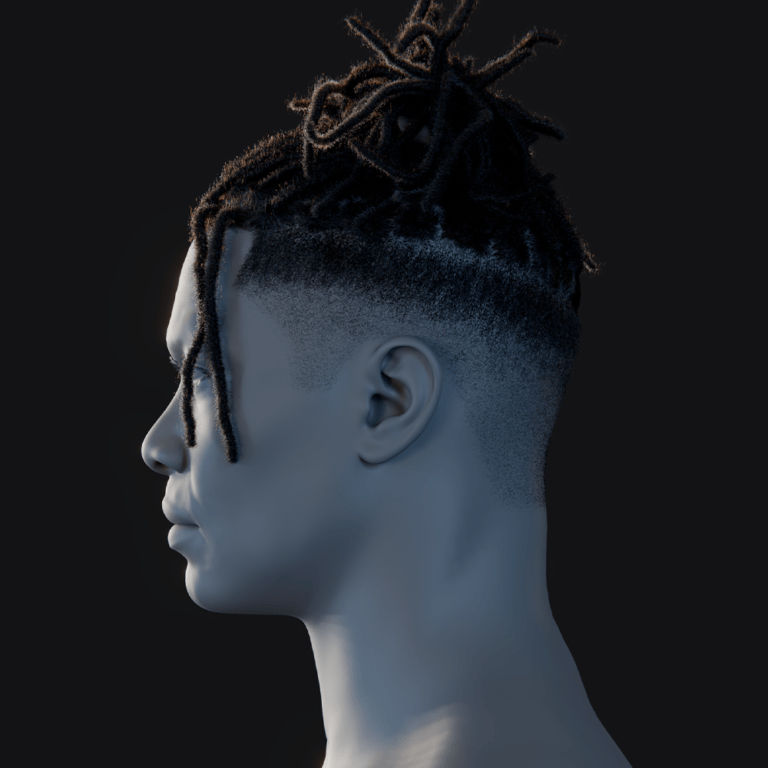

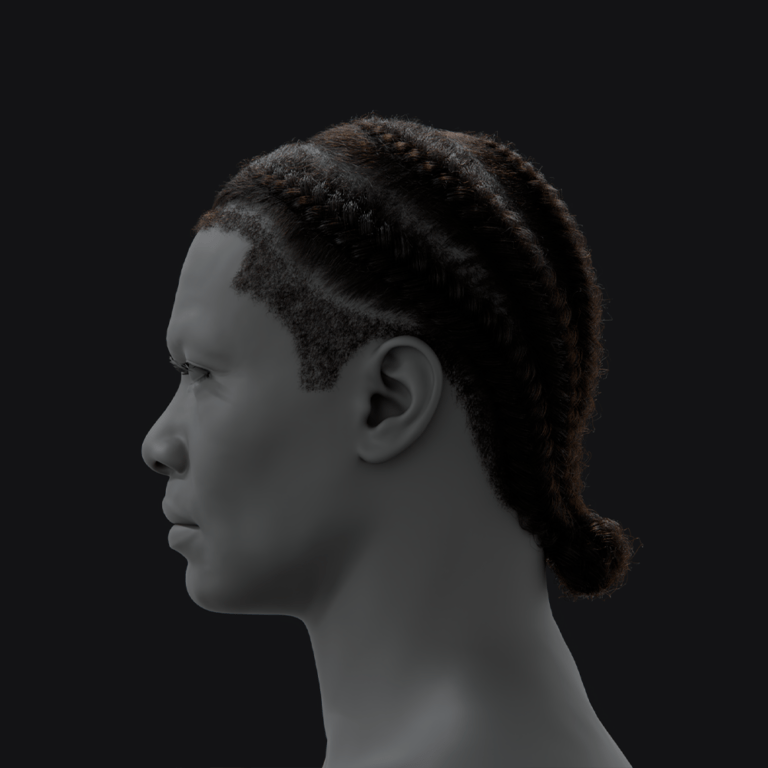

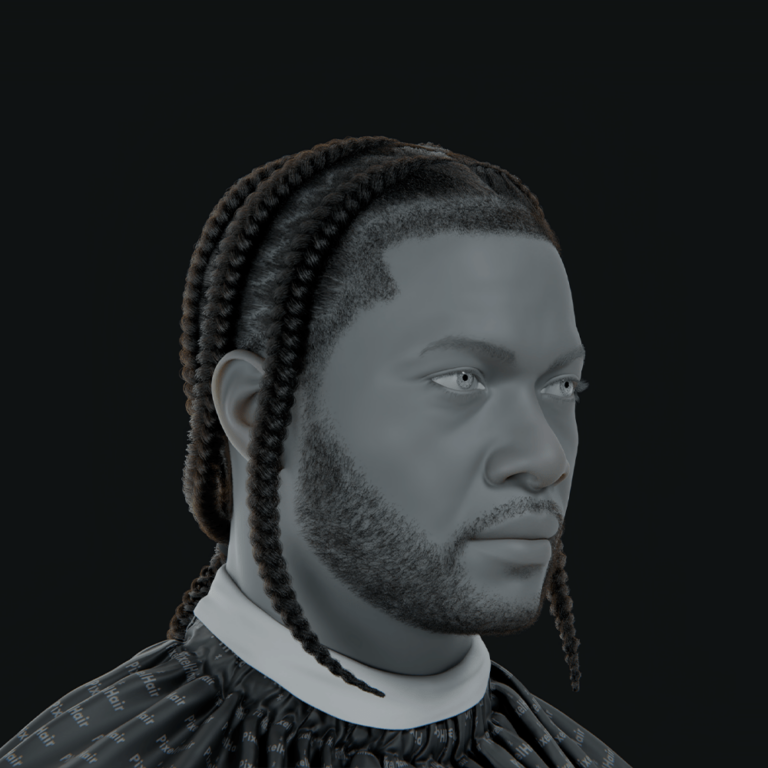

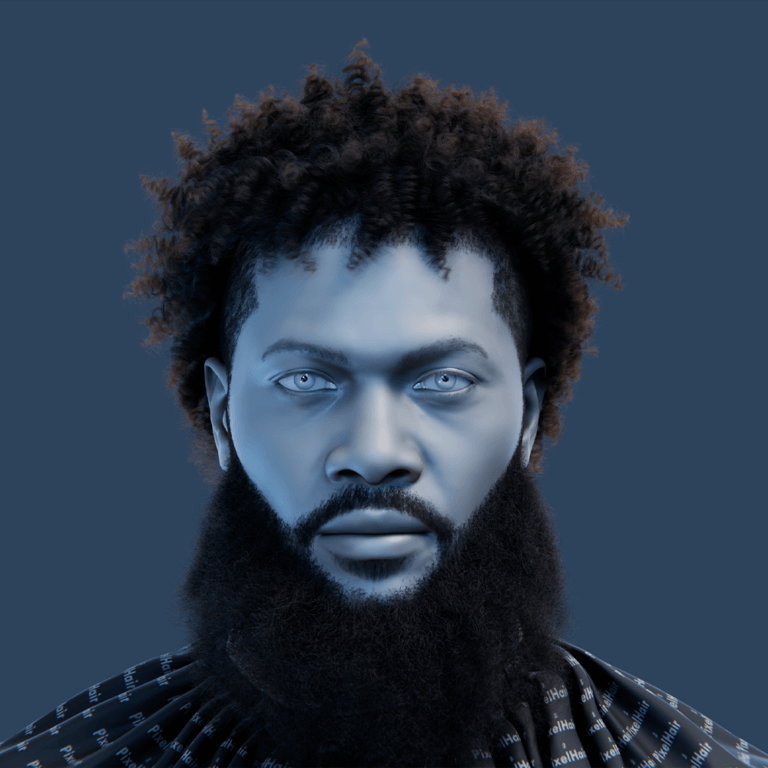

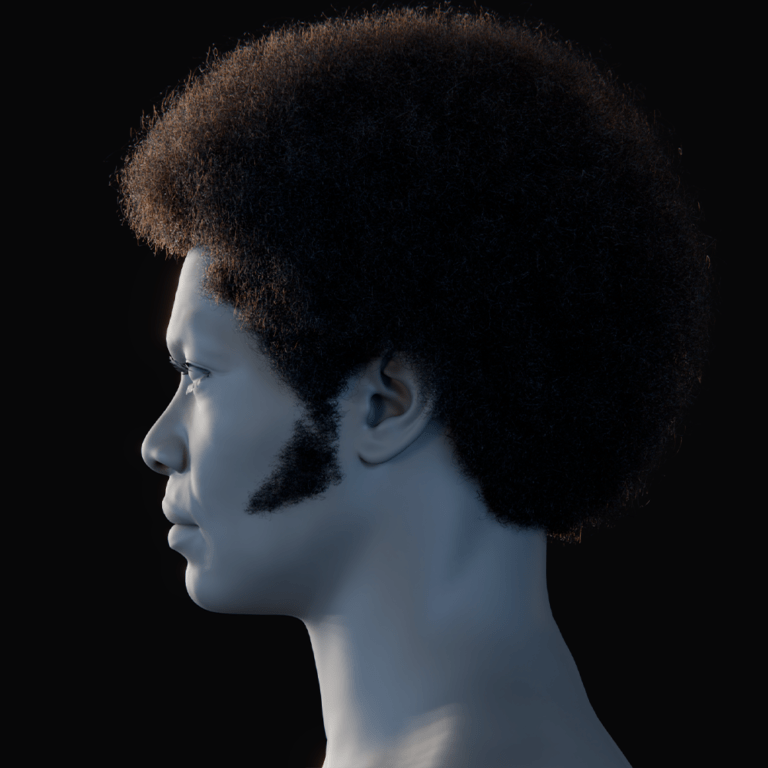

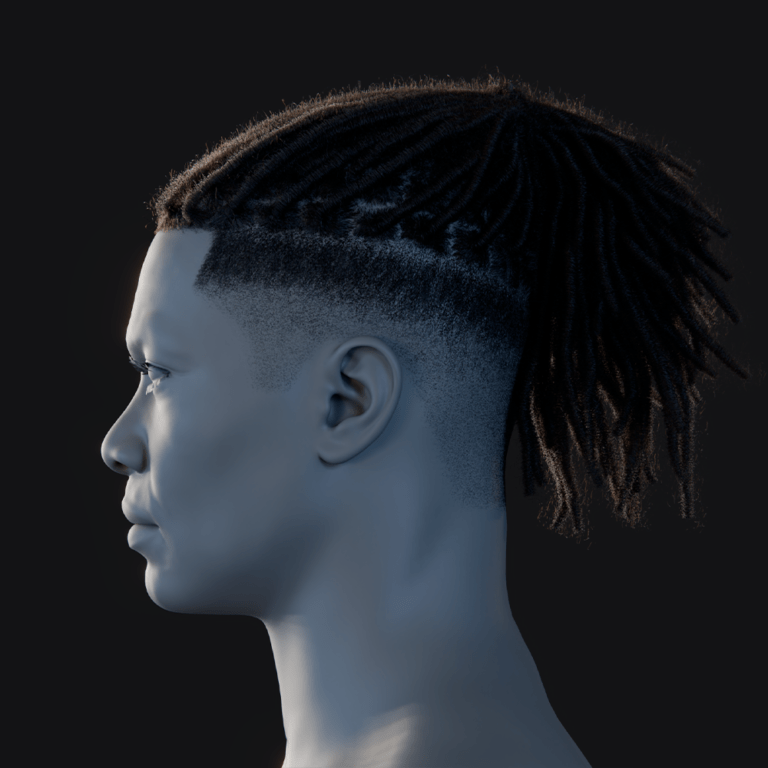

Its catchy audio and absurd visuals made it a cultural phenomenon, with the creator’s channel reaching over 36 million subscribers by late 2023, sometimes outpacing YouTube’s top creators. The term “Skibidi Brainrot” captures the addictive, “profoundly meaningless” appeal of these videos, embraced by Gen Z and Gen Alpha for their bizarre, rapid-fire humor. Mainstream figures like Kim Kardashian, whose daughter gifted her a Skibidi Toilet necklace, highlight its reach. Creators are now making Skibidi-style memes, leveraging tools like Unreal Engine 5 and MetaHumans.

Can you recreate Skibidi Brainrot content using MetaHuman in Unreal Engine 5?

Yes, Skibidi-style content can be recreated using MetaHumans and Unreal Engine 5 (UE5). MetaHumans are Epic Games’ realistic digital characters. They come fully rigged for animation and import directly into UE5, which makes them well suited to absurd meme videos in the machinima style of Skibidi Toilet (originally made in Source Filmmaker). You can attach a MetaHuman head to a toilet mesh, or use MetaHumans in goofy skits, and animate them with UE5’s tools to create cinematic, real-time scenes. Community experiments have already recreated Skibidi scenarios in UE5. To preserve the meme’s charm, keep the animation janky and chaotic like the original. Cartoonish, exaggerated edits help sidestep the uncanny valley, while UE5’s photorealistic graphics sharpen the absurd contrast that drives viral appeal.

What tools do I need to make Skibidi-style animations with MetaHuman?

To create Skibidi-style MetaHuman animations in UE5, you need:

- Unreal Engine 5 (UE5): For animation, scene setup, and rendering, using features like IK Retargeter, Control Rig, and the MetaHuman framework.

- MetaHuman Creator and Assets: Create a character in the free MetaHuman Creator or start from a preset, then import it into UE5 through Quixel Bridge. Each MetaHuman comes with a rigged body and face.

- Capable PC: A modern GPU (e.g., NVIDIA RTX), 32GB RAM, and a strong CPU for a smooth UE5 workflow. Apple Silicon Macs can run UE5, but check MetaHuman Animator compatibility before relying on one.

- MetaHuman Animator: Uses an iPhone (12 or newer) with the Live Link Face app to capture facial performances for high-fidelity animation in UE5.

- Optional Motion Capture: Tools like Rokoko, Xsens, or AI-based mocap for body movements, though manual animation or libraries suffice.

- Animation Libraries: Mixamo or Unreal Marketplace animations (e.g., Paragon, monthly free content) retargetable to MetaHumans via IK Retargeter.

- Audio Assets: Meme sounds or music (e.g., “Skibidi dop dop yes yes”) from TikTok, meme libraries, or royalty-free sources, imported into UE5 for sync, with copyright caution for public uploads.

- Video Editing Software: Adobe Premiere, DaVinci Resolve (free), or TikTok/Instagram editors for post-rendering touches like captions or aspect ratio adjustments.

- Creativity and Humor: Essential for absurd, humorous meme ideas, storyboarding, and referencing other memes to capture the brainrot vibe.

How do I animate a MetaHuman to mimic Skibidi Toilet-style videos?

Animating a MetaHuman for Skibidi Toilet-style videos requires exaggerated, cartoonish movements and expressions to capture the viral meme’s chaotic energy. Here’s how to approach it:

- Recreate the Signature Moves: Identify key Skibidi motions like bobbing or funky marches. Find similar dance animations on Mixamo or the UE Marketplace, then retarget them to the MetaHuman skeleton using IK Retargeter. Tweak poses with Control Rig in Sequencer for Skibidi flair, and exaggerate the motions to heighten the comedic effect.

- Exaggerated Facial Expressions: Skibidi characters feature wild expressions like bulging eyes. Use MetaHuman Animator or manual keyframing to drive the face to extreme shapes, pushing expressions beyond their normal ranges for comedy. Time the expression snaps to the beat for meme-like impact, and favor quick, exaggerated changes.

- Fast, Jumpy Cuts and Camera Angles: Skibidi videos use rapid cuts and dynamic camera work. Use Cine Camera Actors in Unreal for varied angles and zooms, and cut between shots in Sequencer for chaotic pacing. Keyframe camera movements to mimic shaky meme edits, and plan animations around specific camera angles.

- Embrace Looping and Repetition: Skibidi videos often repeat short animation loops. Create a 2-second dance loop in Sequencer and reuse it, copying and pasting keyframes to extend the repetition. Add occasional variations for surprise. The loops reinforce the hypnotic brainrot vibe.

- Add Slapstick Physics (if needed): Some Skibidi gags use ragdoll or slapstick effects. Enable ragdoll physics in Unreal for dramatic flops or falls, or animate big reactions by hand for cartoony impact. Hide the switch into physics behind a cut so the transition looks seamless, and use these effects sparingly for comedic contrast.
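Before copying a loop across a clip, it helps to know how many copies you need and where each one starts. Below is a minimal sketch in plain Python, not an Unreal API. The 2-second loop and 30 fps follow the advice above. The 15-second clip length and the “swap in a variation every fourth copy” rule are illustrative assumptions. You would still place the sections by hand in Sequencer at the printed frames.

```python
from __future__ import annotations


def plan_loops(loop_seconds: float, clip_seconds: float, fps: int,
               vary_every: int = 4) -> list[tuple[int, bool]]:
    """Return (start_frame, use_variation) for each loop copy covering a clip.

    Standalone arithmetic, not an Unreal API. The last copy may run past
    the end of the clip; trim it in Sequencer.
    """
    loop_frames = round(loop_seconds * fps)
    clip_frames = round(clip_seconds * fps)
    starts = range(0, clip_frames, loop_frames)
    # Flag every Nth copy as the spot for a surprise variation (assumed rule).
    return [(start, (i + 1) % vary_every == 0) for i, start in enumerate(starts)]


# Example: a 2-second loop repeated across a 15-second clip at 30 fps.
for start, vary in plan_loops(loop_seconds=2.0, clip_seconds=15.0, fps=30):
    print(f"loop copy at frame {start}" + (" (variation)" if vary else ""))
```

Stepping by whole frames keeps every copy aligned to the timeline grid, so pasted keys land exactly on frames.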

Animating a MetaHuman for Skibidi-style content involves starting with mocap or animations and amplifying them for absurdity. Exaggerated movements and expressions, paired with frenetic editing, create the meme’s signature chaotic humor.

What other tools help with Skibidi-style MetaHuman animations?

As outlined above, the essential tools are Unreal Engine 5, MetaHuman characters, an iPhone with Live Link Face for facial capture, and optionally motion capture for the body. Here’s a brief recap, plus additional tools that can help:

- Control Rig and Sequencer (built into UE5): These are your bread-and-butter tools for animating MetaHumans inside Unreal without external programs. Control Rig lets you pose and keyframe MetaHuman limbs and faces. Sequencer arranges animations, keyframes, and audio on a timeline. Together they enable complex in-engine animation and are essential for Skibidi-style meme creation.

- MetaHuman Pose Library (optional): Unreal provides a MetaHuman facial pose asset library with presets like smiles or frowns. Applying a preset speeds up facial animation, and you can tweak it further for exaggerated meme expressions. It’s ideal for manual face animation.

- Audio editing software: If you need to trim your meme audio or adjust its timing, a simple audio editor like Audacity (free) or a DAW is handy. Edit clips like “Skibidi dop dop” in Audacity, then import the finalized audio into Unreal for syncing. Getting the timing right before import makes precise alignment easier.

- 3D Modeling Software: If you plan to create custom props, you might use Blender or Maya. Model meme props like toilets in Blender, or import free assets from the Epic Marketplace or Fab. Props strengthen the Skibidi parody visuals and add thematic detail to the environment.

- Community plugins or scripts: Some community-made tools simplify parts of the process. Mixamo Converter automates retargeting Mixamo animations to MetaHumans, and Python scripts can streamline batch rendering. These tools save time on repetitive tasks.

You don’t need much beyond Epic’s ecosystem (UE5 + MetaHumans) and a recording device, with know-how being the key to achieving the desired Skibidi-style effect.

How do I use MetaHuman Animator for exaggerated facial expressions?

To use MetaHuman Animator for exaggerated, meme-worthy expressions, follow these steps and tips:

- Capture a Performance with an iPhone: MetaHuman Animator takes a recording from an iPhone (with a Face ID sensor) or a head-mounted camera and extracts the actor’s facial movements. Record exaggerated expressions with the Live Link Face app, over-acting with wide eyes and big mouth movements. Capture your voice at the same time for automatic lip-sync. The bigger the performance, the more cartoonish the resulting data.

- Process the Capture in Unreal: After recording, import the take into Unreal’s MetaHuman Animator interface to create a facial animation asset. MetaHuman Animator uses a 4D solver to apply the performance to the MetaHuman face with high fidelity, reproducing the expressions accurately.

- Tweak and Exaggerate Further: Once the facial animation is applied, fine-tune it in Sequencer. Edit animation curves to amplify eyebrow or smile intensity, and adjust keyframes for sharper comedic timing. Layer facial pose assets on top for extra exaggeration, focusing on the major controls for the most impact.

- Alternative: Manual Keyframing for Expressions: If you don’t have an iPhone or prefer a different approach, animate expressions manually using the MetaHuman face Control Rig. Keyframe facial controls in Sequencer pose-to-pose, starting from pose library presets like “Surprised” and pushing jaw or eye controls for extreme looks. This gives you complete control over the exaggeration.

- Leverage “Cartoon” Techniques: To heighten the exaggeration, borrow techniques from cartoon animation. Add anticipation, such as a squish before a shocked expression. Use Animation Layers for secondary facial movements, and embellish with nose wrinkles or cheek raises to mimic cartoon squash and stretch.
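Amplifying a curve, as described in the list above, comes down to scaling each key’s distance from a rest value and clamping the result so the control stays in range. The sketch below does that on plain (frame, value) pairs. It assumes the control is normalized to 0-1 with 0 as rest; confirm the actual range of the MetaHuman face control you are editing. The brow-raise keys are made-up numbers, and you would type the results into Unreal’s curve editor yourself.

```python
from __future__ import annotations


def exaggerate(keys: list[tuple[int, float]], factor: float, rest: float = 0.0,
               lo: float = 0.0, hi: float = 1.0) -> list[tuple[int, float]]:
    """Push each key away from `rest` by `factor`, then clamp to [lo, hi].

    Assumes a normalized control range (0-1 by default); adjust lo/hi/rest
    for controls that use a different range.
    """
    out = []
    for frame, value in keys:
        pushed = rest + (value - rest) * factor  # scale distance from rest
        out.append((frame, min(hi, max(lo, pushed))))  # keep inside the range
    return out


# Hypothetical brow-raise keys: (frame, control value).
brow_raise = [(0, 0.0), (6, 0.4), (12, 0.7), (18, 0.2)]
print(exaggerate(brow_raise, factor=1.8))
```

Scaling around the rest value (rather than adding a constant) keeps neutral frames neutral. The peaks grow, while the face still returns to rest between gags.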

MetaHuman Animator provides a realistic facial performance that can be amplified for comedic, meme-worthy results, blending realism with absurd expressions ideal for Skibidi content.

Can I sync MetaHuman animation to Skibidi-style music or memes?

Yes, syncing your MetaHuman’s animation to music or meme audio is essential for nailing the Skibidi vibe. Here’s how to do it:

- Import and Use the Audio in Unreal: First, bring your sound clip into Unreal Engine. Import the clip (WAV is the safest format) into the Content Browser and add it to Sequencer. Use the waveform display for timing cues, aligning animations to audio spikes like “dop” or “yes” for precise beat synchronization.

- Animate to the Beat: With the audio waveform as a guide, animate your MetaHuman’s actions to match rhythms or specific moments in the audio. Choreograph movements like head nods on the “dop” beats, aligning peak actions with waveform spikes. Use snapping or markers for exact timing; the result is a satisfying unity of music and animation.

- Lip-Sync the Dialogue/Lyrics: If your audio has lyrics or spoken words that your MetaHuman is “saying,” sync the character’s lip movements to those words. Use MetaHuman Animator for automatic lip-sync from a performance, keyframe visemes for each phoneme manually, or try a plugin like OVRLipSync to automate it. Rough sync is often enough for meme humor.

- Align Body Movements to Musical Cues: Beyond beat syncing, pay attention to musical cues and sound effects in the audio. Change animations on beat drops or tempo shifts, match visual gags to sound effects like booms, and use an Event Track to trigger cues precisely. This heightens the audio-visual impact.

- Test and Iterate: After syncing your animation, play it back from the start while listening to the audio. Nudge keyframes until they lock onto the beat, and preview with a real-time recording to see it from the viewer’s perspective. Keep adjusting timing until the sync feels seamless.
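Lining keys up with the beat is easier when you know which frame each beat lands on. Assuming a constant tempo, a beat falls every 60 / BPM seconds, so beat i sits at frame (first_beat + i × 60 / BPM) × fps, rounded to the nearest whole frame. The sketch below computes those frames so you can drop Sequencer markers on them. The 120 BPM tempo and 0.5-second first beat are placeholder values; measure your own clip’s tempo and first-beat time from the waveform.

```python
from __future__ import annotations


def beat_frames(bpm: float, fps: int, first_beat_seconds: float, count: int) -> list[int]:
    """Frame numbers of `count` beats at a constant tempo, from the first beat on."""
    seconds_per_beat = 60.0 / bpm
    # Round to the nearest frame; at some tempos beats fall between frames.
    return [round((first_beat_seconds + i * seconds_per_beat) * fps) for i in range(count)]


# Placeholder values: measure the real tempo and first-beat time of your clip.
print(beat_frames(bpm=120, fps=30, first_beat_seconds=0.5, count=8))
```

At tempos that don’t divide evenly into the frame rate, some beats land between frames. Rounding to the nearest frame keeps any drift within half a frame.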

Syncing MetaHuman moves to audio using Sequencer’s waveform visualization makes your Skibidi video compelling and meme-worthy, with precise alignment enhancing viewer engagement.

How do I create meme content with MetaHuman in UE5?

Here’s a guide to crafting brainrot-style meme skits:

- Embrace Absurdity in Storytelling: Start with a silly concept or scenario. Write a short script with non-sequitur humor that places realistic MetaHumans in absurd situations. Storyboard quickly escalating gags or punchlines to capture the random, chaotic meme vibe.

- Keep It Short and Punchy: Brevity is a hallmark of meme reels. Aim for fast-paced 10-30 second videos, trim dead air in Sequencer, and use smash cuts and quick zooms. This keeps the energy high for short attention spans.

- Use Trending Meme References: Part of writing brainrot-style skits is remixing familiar meme elements. Parody trends like the “Sigma stare” or Vine sounds, add meme captions via UMG or in post, and work in trending phrases for relatability. Familiarity boosts the humor.

- Leverage MetaHuman Quality for Comic Effect: One advantage of MetaHumans is their realism. Juxtapose cinematic visuals with absurd meme dialogue, record funny voice lines for lip-sync, and act out popular meme formats. The contrast between realism and absurdity is where the comedy comes from.

- Add Creative Visuals and Effects: Memes often have DIY-looking effects. Use Niagara to spawn meme symbols like emojis, apply post-process glitch or fisheye effects, or greenscreen MetaHumans onto meme footage for extra comedic flair.

- Beginner-Friendly and Pro-Level Approaches: If you’re a beginner, keep your idea simple and start with one character and basic animation. As your skills grow, add complexity such as multiple MetaHumans interacting comedically. This balances accessibility and polish.

MetaHumans in UE5 allow creators to blend high-fidelity visuals with absurd humor, crafting short, impactful meme skits that resonate with internet audiences.

What is the workflow for Skibidi meme-style motion capture with MetaHuman?

Here’s a practical workflow to follow:

- Concept & Planning: Begin by defining the short “story” or gag for your meme video. Storyboard key movements like dances or falls. Prepare the audio track first so you can plan mocap sessions around its beats. This plan guides the performance capture.

- Set Up Characters and Environment in UE5: Open Unreal Engine 5 and create a new level. Import your MetaHumans via Quixel Bridge, add props like a bathroom set if needed, and enable Live Link for mocap streaming. This prepares the digital stage for capture.

- Record Body Mocap: If you have a mocap suit or device, perform the character’s movements. Stream the mocap via Live Link or import FBX animations, then retarget them to the MetaHuman with IK Retargeter. Test the body movements against the audio so complex dance motions stay in sync.

- Record Facial Mocap (or Animation): Next, capture the facial performance using MetaHuman Animator with an iPhone, then align the body and face tracks in Sequencer. If you have no facial mocap, animate the face manually. Either way, aim for an expressive, lip-synced performance.

- Refine and Edit Animation: Mocap is rarely perfect, especially for exaggerated meme actions. Trim glitches and blend animations in Sequencer, and adjust timing for precise audio sync. Fix foot sliding with IK controls to give the mocap comedic polish.

- Add Cinematography & Audio: Set up your cameras in Sequencer. Add dynamic cameras for varied shots, animate zooms or pans for meme energy, and align the audio track with the performance so visuals and sound stay coherent.

- Lighting and Visual Effects: Now that animation and audio are locked, light your scene and add any effects. Use spotlights or pulsing lights for drama and fog or sparks for tone, making sure the MetaHuman’s expressions stay well lit. Effects should enhance the visuals without overpowering them.

- Recording/Rendering: When everything looks good in the Sequencer preview, it’s time to export the video. Use Movie Render Queue for high-quality output, rendering a PNG sequence and merging the audio afterward. For quick drafts, a screen recording works.

- Post-Production and Meme Polish: With your video rendered, do a quick edit pass outside Unreal if needed. Add captions or meme text in editing software, and layer sound effects like the Vine boom. Format for TikTok’s 9:16 aspect ratio to finish a viral-ready meme video.
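The “render a PNG sequence and merge audio” step can be done in any video editor. One command-line alternative, swapped in here and not mentioned above, is FFmpeg. The sketch below only builds the argument list and does not run anything. The frame pattern `render/Shot.%04d.png`, the audio path, the start number, and the CRF value are assumptions; match them to your Movie Render Queue file naming and quality needs.

```python
from __future__ import annotations

import shlex


def ffmpeg_mux_command(frame_pattern: str, audio_path: str, out_path: str,
                       fps: int = 30, start_number: int | None = None,
                       crf: int = 18) -> list[str]:
    """Build an FFmpeg command encoding a PNG sequence plus audio to H.264 MP4."""
    cmd = ["ffmpeg", "-framerate", str(fps)]  # frame rate of the image sequence
    if start_number is not None:
        cmd += ["-start_number", str(start_number)]  # first frame number on disk
    cmd += [
        "-i", frame_pattern,    # e.g. render/Shot.%04d.png (assumed naming)
        "-i", audio_path,       # the same audio you synced to in Sequencer
        "-c:v", "libx264",      # H.264 video
        "-crf", str(crf),       # lower = higher quality and bigger file
        "-pix_fmt", "yuv420p",  # widely playable pixel format
        "-c:a", "aac",          # AAC audio for MP4
        "-shortest",            # stop at the end of the shorter input
        out_path,
    ]
    return cmd


cmd = ffmpeg_mux_command("render/Shot.%04d.png", "audio/skibidi.wav",
                         "skibidi_9x16.mp4", fps=30, start_number=0)
print(shlex.join(cmd))
# To run it (requires FFmpeg on your PATH):
# import subprocess; subprocess.run(cmd, check=True)
```

Keeping `-framerate` equal to the render frame rate is what preserves the sync you set up in Sequencer. If the two differ, the video plays faster or slower than the audio.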

This workflow combines motion capture with virtual cinematography, creating high-quality Skibidi meme videos that capture the trend’s frenetic energy.

How do I add audio and lip sync to MetaHuman for viral meme videos?

Here’s how:

- Import Your Audio Clip: Just as with music, bring your dialogue or vocal track into Unreal. Import the meme audio or recorded voice into the Content Browser, add it to an Audio Track in Sequencer, and position it at the correct time. This is the foundation for lip-sync.

- Generate Lip Sync Animation: Unreal Engine doesn’t auto-lip-sync from audio by default, but there are several ways to handle it. Use MetaHuman Animator for performance-based sync, or keyframe visemes for each phoneme manually. A plugin like OVRLipSync can also automate lip movements. Choose based on the equipment you have.

- Adjust and Refine Mouth Movements: However you generate the initial lip-sync animation, you’ll likely need to tweak it by eye. Make sure the lips close on consonants like B, M, and P, and open wide on broad vowels. Exaggerate keyframes for comedic emphasis; a simplified, cartoon-style sync often reads best.

- Incorporate Facial Expression: Remember that speaking isn’t just about the mouth. Add eyebrow raises or blinks to keep the face lively, or let MetaHuman Animator capture them naturally. Over-express for meme sarcasm or humor; it makes the lip-sync more convincing.

- Check Sync in Context: Do a final check by watching the video with audio. Nudge animation timing for alignment, leading the animation slightly ahead of the sound if needed. Watch at different playback speeds to catch mismatches. This confirms the character’s speech is convincing.

- Alternate Approach – Dub Over After: A quirky meme-making approach is to animate the MetaHuman’s mouth movements first, then dub your own voice to match. Animate jaw flaps, then record a matching voice line, improvising for comedy. The puppet-like sync adds its own meme charm.
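The mouth rules above (lips closed on B, M, P; open on wide vowels; animation leading the sound slightly) can be turned into a quick keying plan. This is a hypothetical helper, not MetaHuman or Unreal data. The shape names, the jaw-open values in `JAW_OPEN`, the 2-frame lead, and the hand-typed phoneme timings are all illustrative assumptions. It outputs frame/value pairs that you would key by hand on a jaw-open control.

```python
from __future__ import annotations

# Hypothetical jaw-open amount per simplified mouth shape (0 = closed, 1 = open).
# Illustrative numbers only; tune them by eye on your character.
JAW_OPEN = {"MBP": 0.0, "AA": 0.9, "OO": 0.5, "EE": 0.35, "REST": 0.1}


def jaw_keys(phonemes: list[tuple[float, str]], fps: int, lead_frames: int = 2,
             exaggeration: float = 1.0) -> list[tuple[int, float]]:
    """Turn (seconds, shape) timings into (frame, jaw_open) keys.

    Keys are shifted `lead_frames` earlier so the mouth moves just before the
    sound. Multiplying keeps closed shapes (B, M, P) at exactly 0.
    """
    keys = []
    for seconds, shape in phonemes:
        frame = max(0, round(seconds * fps) - lead_frames)
        value = min(1.0, JAW_OPEN[shape] * exaggeration)  # cap at fully open
        keys.append((frame, value))
    return keys


# Hand-typed timings read off the waveform (made-up example).
line = [(0.0, "REST"), (0.1, "MBP"), (0.2, "AA"), (0.4, "EE"), (0.5, "MBP")]
for frame, value in jaw_keys(line, fps=30, lead_frames=2, exaggeration=1.2):
    print(frame, round(value, 2))
```

Scaling instead of offsetting is deliberate. A multiplied zero stays zero, so exaggeration opens the vowels wider but never breaks the lip closures that sell B, M, and P.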

Adding audio is simple, and lip-syncing via performance capture or manual animation creates engaging meme videos, where rough sync can enhance comedic appeal.

Can I use body mocap with MetaHuman for Skibidi dance animations?

MetaHumans are compatible with a wide range of mocap solutions because they’re rigged to a standard human skeleton. Here’s how you can leverage body mocap for those wild dances:

- Motion Capture Suits and Systems: If you have access to a mocap suit like a Rokoko Smartsuit, Xsens, or Perception Neuron, you can record yourself doing the Skibidi dance or any other moves. Export the recording as FBX or stream it directly to Unreal via Live Link, then retarget it to the MetaHuman skeleton. Suits capture complex motion quickly.

- Markerless Mocap (Webcam/Video): Don’t have an expensive suit? AI mocap tools like Move.ai extract animation from video footage, which you can import and retarget to a MetaHuman. Kinect or similar cameras are another low-cost option. These capture the broad strokes of a dance.

- Live Link with AR/VR Devices: If you have VR equipment, you can use VR controllers and trackers to drive a MetaHuman live. Vive trackers allow real-time dance capture, and iPhone ARKit can provide basic body tracking. These setups are experimental but viable for enthusiasts.

- Using Mocap Data from Libraries: Premade mocap animation libraries are another option. Download club or Fortnite-style dance animations from Mixamo or the Unreal Marketplace, retarget them to the MetaHuman, and modify them for Skibidi flair. This is the quickest route to usable animation.

Using body mocap with MetaHumans is ideal for Skibidi dances. It injects realistic, complex motion with far less effort than keyframing by hand, producing authentic, viral-ready animations.

What are the best render settings for Skibidi-style videos in Unreal Engine 5?

Here are some recommendations for best render settings:

- Resolution and Aspect Ratio: Aim for at least 1080 x 1920 (vertical 9:16) if you’re targeting TikTok or mobile viewing. Set that resolution in Movie Render Queue so the video stays crisp on phones, and adjust your cameras for vertical composition. This matches the Skibidi trend’s mobile focus.

- Frame Rate: 30 fps vs 60 fps. TikTok and Shorts now support 60 fps, and a smooth 60 fps can make fast actions look more fluid. Choose 60 fps for fluid fast motion, or 30 fps to save render time; you can also convert 60 fps footage to 30 fps later. This balances clarity and efficiency.

- Anti-Aliasing and Quality: In Movie Render Queue, use a high anti-aliasing setting so edges are smooth. For example, set the Temporal Sample Count to 8, or use Temporal Super Resolution. This reduces jaggies on MetaHuman details for smooth, professional visuals.

- Lighting and Effects Quality: If you used Lumen, consider increasing the final gather quality for the render to avoid noise. Override scalability to Cinematic for Lumen, enable high-quality shadows for sharpness, and reduce motion blur for clarity.

- Compression: The final video should be in a widely supported format like H.264 MP4. Render a PNG sequence for quality, then encode it to MP4 at a high bitrate (around 10-20 Mbps) to avoid over-compression. This ensures both compatibility and quality.

- Audio: Movie Render Queue doesn’t include audio by default. Enable its audio output or merge the audio in post, rendering a separate WAV if needed. Confirm the audio is in sync in the final MP4; this is critical for meme impact.

- File Size Consideration: TikTok currently allows uploads up to 500 MB. YouTube Shorts now accepts videos up to 3 minutes (the limit was previously 60 seconds). A 15-30 second clip at around 10 Mbps comes to roughly 19-38 MB, comfortably under 50 MB. Use high-quality encoding settings without exceeding platform limits.

- Testing Look on Phone: It’s a good idea to test-play the final video on a phone screen. Check visibility and brightness on the small screen, and normalize the audio for phone playback. Raise the exposure if the image is too dark.

- Vertical Video Tips: When rendering vertically, consider the safe zones. Frame the MetaHuman so TikTok’s on-screen UI doesn’t cover it, place captions in areas the UI leaves clear, and keep the action centered for visibility.
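The bitrate and file-size figures above are linked by simple arithmetic: size in megabytes ≈ duration × (video bitrate + audio bitrate) / 8, since there are 8 bits in a byte. The helpers below apply that formula and ignore container overhead. They assume decimal megabytes and a 128 kbps audio track; both are assumptions, so treat the results as estimates.

```python
from __future__ import annotations


def estimated_size_mb(duration_s: float, video_mbps: float, audio_kbps: float = 128) -> float:
    """Approximate file size in decimal megabytes (container overhead ignored)."""
    total_megabits = duration_s * (video_mbps + audio_kbps / 1000)
    return total_megabits / 8  # 8 bits per byte


def max_video_mbps(size_limit_mb: float, duration_s: float, audio_kbps: float = 128) -> float:
    """Highest video bitrate that keeps a clip of this length under a size target."""
    return size_limit_mb * 8 / duration_s - audio_kbps / 1000


for seconds in (15, 30):
    for mbps in (10, 20):
        print(f"{seconds}s @ {mbps} Mbps ~ {estimated_size_mb(seconds, mbps):.1f} MB")
print(f"50 MB target for a 30s clip -> video <= {max_video_mbps(50, 30):.1f} Mbps")
```

By this estimate, a 30-second clip at 20 Mbps comes to about 75 MB. To keep that clip under 50 MB, stay at roughly 13 Mbps or below.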

These settings (1080×1920, 30/60 fps, high AA, MP4) ensure a sharp, platform-ready Skibidi video that stands out on social media.

How do I use Sequencer to create short meme scenes with MetaHuman?

Here’s a step-by-step on using Sequencer for this purpose:

- Create a Level Sequence: In your Unreal project, go to the Content Browser and click Add > Cinematics > Level Sequence. This creates a new Sequencer asset. Open it to access an empty timeline and viewport, the foundation of your meme scene.

- Add Your MetaHuman to Sequencer: In the Sequencer window, click the “+ Track” button, choose Actor To Sequencer, and select your MetaHuman. It is added with sub-tracks for the body and face, where you can place existing animations or animate directly in Sequencer.

- Animate Movements and Expressions: There are a few ways to animate in Sequencer. Drop in animation clips for dances or falls, or keyframe custom poses with Control Rig. You can also apply facial poses from pose assets. This flexibility suits comedic animation.

- Add Cameras: For filmmaking, add a Cine Camera Actor to Sequencer. Position Cine Cameras for the framing you want, and create a Camera Cuts track to switch between shots. Animate the cameras for zooms or pans to get dynamic meme editing.

- Arrange the Timeline: Now sequence your story. Drag clips to align with audio beats, use snapping for frame-accurate placement, and structure scenes with clear cuts for tight, humorous pacing.

- Insert the Audio Track: Click + Track and add an Audio track with your file. Align actions with the waveform cues and fine-tune animation timing to the audio.

- Utilize Sequencer’s Tools: Sequencer has a few handy timing tools. Select and move multiple keys to retime a segment, and adjust a clip’s play rate to speed it up or slow it down. Add markers as beat references for precise comedic timing.

- Preview Frequently: Press the spacebar or the play button to preview the sequence in the viewport. Watch real-time playback to iterate on animations, and adjust keyframes where timing is off. Scrub the timeline to check individual poses.

- Finalize and Render: Once it looks good, you’ve essentially directed a tiny movie inside Unreal. Render it with Movie Render Queue for quality, or capture in real time for simple scenes. Tweak until the joke lands perfectly.
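When a clip needs to fill an exact number of beats, the play rate to set on its animation section is the clip’s natural length divided by the length you want. At a constant tempo, the beat window lasts beats × 60 / BPM seconds. Below is a minimal sketch under that constant-tempo assumption; the 2.4-second dance move and 120 BPM are example numbers.

```python
from __future__ import annotations


def play_rate_to_fit(anim_seconds: float, beats: int, bpm: float) -> float:
    """Play rate that stretches or squeezes an animation to span `beats` beats."""
    target_seconds = beats * 60.0 / bpm
    return anim_seconds / target_seconds


def section_frames(beats: int, bpm: float, fps: int) -> int:
    """Length of that beat window on the Sequencer timeline, in whole frames."""
    return round(beats * 60.0 / bpm * fps)


# Example numbers: a 2.4 s move squeezed into 2 beats at 120 BPM.
print(play_rate_to_fit(2.4, beats=2, bpm=120))  # > 1.0 means faster than normal
print(section_frames(beats=2, bpm=120, fps=30))
```

A rate above 1.0 speeds the clip up and a rate below 1.0 slows it down. Large changes in either direction can look unnatural, which may suit the meme style anyway.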

Sequencer acts as a powerful video editing and animation suite, enabling precise, comedic meme scenes with MetaHumans in Unreal Engine 5.

Can I export Skibidi MetaHuman clips for use on TikTok or YouTube Shorts?

Yes, you can export your MetaHuman meme clips from Unreal and post them on TikTok, YouTube Shorts, Instagram Reels, or any platform. The process involves rendering the video and then transferring it to your device or uploading directly. We’ve touched on render settings, but here we’ll focus on making sure the export is social-media-ready:

- Render to a Common Video Format: The end goal is an MP4 file (H.264 codec), because TikTok and YouTube both accept it. Convert PNG sequences to MP4 using a video editor, keeping the 1080×1920 (9:16) resolution so TikTok doesn’t pillarbox or crop the video.

- Keep the Video Short: By design, Shorts and TikToks are short (TikTok allows longer uploads now, but the algorithm favors shorter, punchy clips). Stay within the YouTube Shorts limit (now up to 3 minutes, previously 60 seconds), and aim for around 20 seconds for impact. Trim in a video editor or in TikTok’s interface.

- Transfer to Mobile (if needed): If you rendered the video on a PC and post to TikTok from your phone, you’ll need to get the video onto the phone. Copy it via USB or a cloud service like Google Drive. Avoid messaging apps, which recompress video, so the file keeps its quality.

- Upload via Web or Desktop (optional): TikTok and Instagram now allow uploads from a PC browser. Use TikTok’s web uploader or YouTube’s interface, and include #Shorts to help YouTube classify the video as a Short. Keep the vertical format for consistency.

- Vertical Formatting Check on Platforms: When uploading, preview how the video looks. You can add TikTok sounds or effects after upload, but keep the original audio unless you’re using a trending sound. Adjust volume levels so the audio stays clear.

- Captions/Hashtags: Use the platform’s tools to add captions or text overlays if you didn’t already burn them into the video. Add captions like “Skibidi in Unreal Engine?!”, use hashtags like #skibiditoilet and #unrealengine, and choose an eye-catching thumbnail to draw clicks.

- Quality Preservation: TikTok compresses videos but tends to preserve quality if the upload is good. Export at the correct resolution to avoid rescaling, upload over a stable Wi-Fi connection, and test with a private post to check quality.

- Test on a Throwaway: If you are very particular, upload the video as private or unlisted first to see how it looks after the platform processes it. Adjust your export settings if necessary; a 1080p MP4 generally gives the best results.

- One more thought: If you aim to post on multiple platforms, avoid visible platform watermarks. Upload a clean original render to each platform instead of re-uploading a downloaded, watermarked TikTok, since platforms may downrank watermarked content.

Exporting for TikTok/Shorts involves rendering a vertical MP4 with proper audio and uploading it directly or transferring to a mobile device. Unreal’s high-quality output ensures standout visuals, enhancing meme visibility and engagement.

How do I animate multiple MetaHumans in sync for meme content?

Animating multiple MetaHumans in sync for meme content, such as group dances or comedic interactions, is achievable in Unreal Engine using Sequencer to coordinate animations. By applying the same animation asset or retargeting mocap, characters can move in unison, ideal for humorous synchronized dances. Copying keyframes or using group constraints ensures precise interactions, like high-fives, while micro-offsets add natural variation. Sequencer’s timeline allows meticulous alignment of actions, and Take Recorder enables real-time puppeteering for dynamic scenes. Performance considerations are minimal for small groups, but LODs help with larger crowds, ensuring smooth rendering for meme videos.

Here are tips to handle multiple MetaHumans:

- Use the Same Animation Asset or Mocap on All: The simplest way to sync movements is to literally give every character the same animation. Apply an identical dance animation in Sequencer, or retarget the same mocap to multiple characters, for perfect lockstep. Offset the tracks if you want an echo effect.

- Animation Blueprints for Sync: If you plan to reuse the setup, an Animation Blueprint can drive both characters with the same logic, for example by triggering the same montage on each. For one-off scenes, copying tracks in Sequencer is simpler.

- Copy-Paste Keyframes: If you manually animated one MetaHuman with Control Rig, you can copy those keyframes and paste them onto another character’s Control Rig. This replicates detailed animation efficiently as long as the rig hierarchies match. Adjust afterward if you want slight variations.

- Use Group Constraints for Interaction: If the characters interact, animate them in tandem. Pose them for contact moments like high-fives at key frames, and use Attach tracks or socket attachments where one character holds another. Refine by hand so the interaction looks physically believable.

- Synchronizing Actions: For lip-sync conversations or dances with different moves, use an external timing reference like a metronome or dope sheet. Audio clicks or Sequencer markers let you align jumps and poses to beats so timing stays consistent across characters.

- Stagger for Natural Feel: If you want the characters almost in sync but not robotically so, introduce micro-offsets. For example, delay Character B’s animation by 0.2 seconds (6 frames at 30 fps). Longer delays create comedic mimicry. Either way, the variation avoids robotic uniformity.

- Multiple Face Animations: If both characters talk or sing together, each needs its own lip sync. Record face animations separately or together, or copy one animation for unison speech. Align everything to the same audio track.

- Performance Consideration: More characters mean heavier renders. Two or three MetaHumans usually have minimal impact, but use LODs for distant MetaHumans in crowds to keep rendering smooth.

- Using Take Recorder: Take Recorder is a handy Unreal feature for recording animation by possessing characters. Puppet one character while the others play back, then iterate on timing in the editor.

- Example use-case: Suppose your meme is two MetaHumans doing a “Skibidi Toilet vs Cameraman” dance battle. Animate the toilet-head’s moves first, then keyframe the Cameraman’s responses to line up with its poses. Preview the interaction in Sequencer and iterate until the comedic timing works.
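The micro-offset tip converts seconds into frames, and rounding can bite at lower frame rates. The helper below computes staggered start frames for several characters. The character count, base frame, and 0.2-second step are illustrative assumptions.

```python
from __future__ import annotations


def staggered_starts(base_frame: int, n_characters: int, offset_seconds: float,
                     fps: int) -> list[int]:
    """Start frame for each character, each delayed by the same offset."""
    step = round(offset_seconds * fps)  # snap the delay to the nearest whole frame
    return [base_frame + i * step for i in range(n_characters)]


print(staggered_starts(base_frame=100, n_characters=3, offset_seconds=0.2, fps=30))
print(staggered_starts(base_frame=100, n_characters=3, offset_seconds=0.2, fps=24))
```

At 30 fps, 0.2 seconds is exactly 6 frames. At 24 fps it is 4.8 frames, which rounds to 5 (about 0.21 seconds). That difference is too small to notice, but it explains why offsets may not match the seconds you typed.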

To sync multiple MetaHumans, use Sequencer to align identical or complementary animations, ensuring comedic timing for group performances.

Are there templates or projects that help with Skibidi-style content creation?

While there isn’t an official “Skibidi Toilet template” project, there are resources and community projects that can give you a head start in creating similar content:

- MetaHuman Sample Projects: Epic Games has released sample projects that include MetaHumans with pre-made animations and setups. Open one, replace its dialogue with your meme content, and study how its lighting and Sequencer tracks are built; it is a quick way to kick-start a MetaHuman meme project.

- ALS (Advanced Locomotion System): This popular free character template provides a robust character controller with running, jumping, and many other animations. It is overkill for pure cinematics, but it is useful if you want to play a MetaHuman interactively and capture the resulting movement.

- Community Remakes and Fandom Assets: The Skibidi Toilet meme is so popular that fans have created 3D models of its characters. Search Sketchfab or TurboSquid for toilet or camera-head assets, import them, and attach MetaHuman heads where needed. This saves modeling time; check each model’s license before using it.

- City and Environment Templates: Skibidi Toilet episodes take place in urban environments. Epic’s City Sample or the Downtown West pack provides ready-made streets; add destroyed props or bathroom fixtures to give the scene its meme context.

- Animation Templates: There are community animation sets for meme-ish behaviors. Look for dance or gesture packs on the Marketplace (now Fab) or meme projects shared on the forums, then apply the animations to your MetaHumans to speed up setup.

- Blender and Mixamo: Although neither is an Unreal template, both are quick sources of motion. Mixamo offers ready-made dancing animations, and meme rigs built in Blender can be exported to Unreal; either way, retarget the animation onto the MetaHuman skeleton (for example with Unreal’s IK Retargeter) before use.

- Machinima Communities: Source Filmmaker and Garry’s Mod communities have long histories of meme animation, and Skibidi Toilet itself began in SFM. Borrow staging and timing ideas from SFM Skibidi assets, then recreate the Half-Life-style characters in Unreal using MetaHumans for the heads.

- Pre-built Meme Characters: On Sketchfab or CGTrader, search for “meme character” or specific items such as “TV head model”. Ready-made TV-Man or speaker-head models save character-creation time and make the parody more recognizable.

- Unreal Learning Kits: Epic provides learning projects such as the Lyra Starter Game for gameplay and the Animation Starter Pack for basic movements. Retarget the Starter Pack animations to MetaHumans for quick actions, and study Lyra’s structure to speed up project setup.

- Cine Tracer / Sequencer templates: Cine Tracer, by Matt Workman, is a virtual cinematography tool built on Unreal Engine, with pre-lit environments for planning shots. Use it to block out camera angles and lighting quickly, then build the final scene with MetaHumans in your UE5 project.

- Tutorial Projects: Some YouTube tutorials come with downloadable .uproject files, and Epic’s facial animation projects can be adapted too. Starting from a working, shared setup is often faster than building from scratch.
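Retargeting Mixamo or Starter Pack motion onto a MetaHuman works by matching bone chains rather than one-to-one bone pairs, since, for example, Mixamo’s three spine bones must drive the five spine bones of the MetaHuman body skeleton. The table below is a hypothetical illustration in plain Python (Unreal’s IK Retargeter stores chains in IK Rig assets, not a dict); bone names assume Mixamo’s default `mixamorig:` prefix and the UE5 mannequin/MetaHuman naming, so verify them against your own files. The helper reports any chain bone missing from either skeleton before you start retargeting.

```python
# Hypothetical chain table: chain name -> (Mixamo source bones, MetaHuman target bones).
RETARGET_CHAINS: dict[str, tuple[list[str], list[str]]] = {
    "Pelvis": (["mixamorig:Hips"], ["pelvis"]),
    "Spine": (["mixamorig:Spine", "mixamorig:Spine1", "mixamorig:Spine2"],
              ["spine_01", "spine_02", "spine_03", "spine_04", "spine_05"]),
    "Neck": (["mixamorig:Neck"], ["neck_01", "neck_02"]),
    "Head": (["mixamorig:Head"], ["head"]),
    "LeftArm": (["mixamorig:LeftArm", "mixamorig:LeftForeArm", "mixamorig:LeftHand"],
                ["upperarm_l", "lowerarm_l", "hand_l"]),
    "RightArm": (["mixamorig:RightArm", "mixamorig:RightForeArm", "mixamorig:RightHand"],
                 ["upperarm_r", "lowerarm_r", "hand_r"]),
    "LeftLeg": (["mixamorig:LeftUpLeg", "mixamorig:LeftLeg", "mixamorig:LeftFoot",
                 "mixamorig:LeftToeBase"], ["thigh_l", "calf_l", "foot_l", "ball_l"]),
    "RightLeg": (["mixamorig:RightUpLeg", "mixamorig:RightLeg", "mixamorig:RightFoot",
                  "mixamorig:RightToeBase"], ["thigh_r", "calf_r", "foot_r", "ball_r"]),
}

def missing_bones(chains, source_bones, target_bones):
    """List (chain, side, bone) for every chain bone absent from the given skeletons."""
    source, target = set(source_bones), set(target_bones)
    problems = []
    for chain, (src_chain, tgt_chain) in chains.items():
        problems += [(chain, "source", b) for b in src_chain if b not in source]
        problems += [(chain, "target", b) for b in tgt_chain if b not in target]
    return problems

# In a real project these lists would come from your imported skeletons.
mixamo_bones = [b for src, _ in RETARGET_CHAINS.values() for b in src]
metahuman_bones = [b for _, tgt in RETARGET_CHAINS.values() for b in tgt]
print(missing_bones(RETARGET_CHAINS, mixamo_bones, metahuman_bones))  # []
```

Checking names up front is cheap insurance: a single renamed or missing bone is a common reason a retargeted dance comes out with a frozen limb.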

Using free city environments, animation sets, and fan-made Skibidi models accelerates meme creation, allowing focus on comedic timing and editing.

How do I make Metahuman characters more cartoonish or stylized for meme animation?

MetaHumans are realistic, but cartoonish stylization enhances meme comedy through exaggerated animations, modified proportions, or stylized rendering. In MetaHuman Creator, adjust features like larger eyes or colorful outfits for a caricature effect. Post-process effects like toon shading or filters create a non-realistic look, while oversized props add humor. Custom mesh tweaks in Blender or physics-based exaggerations further stylize characters. These methods balance realism with absurdity, priming viewers for humor and aligning with meme aesthetics.

Here are a few ways to stylize MetaHumans:

- Exaggerate via Animation: The first method doesn’t change the model at all; instead, push the animation beyond realistic bounds with oversized facial expressions and motions. A realistic face pulling wildly unrealistic expressions is funny in itself, which makes this the simplest stylization approach.

- Modify MetaHuman in Creator: MetaHuman Creator offers a range of face shapes and body types. Push features toward caricature, such as larger eyes or a smaller jaw, and choose loud hair colors or outfits. For stronger caricature proportions, try scaling the head up. The result stays plausible but reads as exaggerated.

- Custom Mesh Alteration: For advanced users, export a MetaHuman’s mesh to a DCC such as Maya or Blender and sculpt exaggerated features like larger eyes or a wider mouth. Bringing the sculpt back while keeping the rig working requires a dedicated workflow, such as Epic’s Mesh to MetaHuman (which fits a MetaHuman rig to a custom head mesh) or a third-party morphing plugin.

- Use Post-Process Effects:

  - Toon Shading: Apply a cel-shading post process to add outlines and flat colors; toon-shading materials are available on the Marketplace. Rendering a MetaHuman like a 2D cartoon adds comic style, and switching the filter on mid-scene can itself be a gag.

  - Filters: Even simple color grading can give a “meme aesthetic”. Oversaturate for a vibrant cartoon feel, or add heavy bloom and pixelation to mimic deep-fried meme images.

- Camera FOV and Lens Distortion: A wide field of view lets the camera sit close to the MetaHuman, which bulges facial features, especially near the frame edges. A fish-eye lens effect, or an animated camera warp, exaggerates faces even further for a meme-style look.

- Swap Parts or Props: A hacky but fun way to cartoonify is to attach comically oversized props: googly eyes, a cartoon mask, a giant hand, or an emoji mesh in place of the head, hiding the original parts if needed. These make quick visual gags.

- Physics-based Exaggeration: This is more about motion, but ragdoll or physics forces can briefly fling or stretch a character. Blending Physical Animation with keyframed motion gives floppy, cartoonish impacts, and for true squash-and-stretch you can animate bone scale on the impact frames.

- Alternate Art Styles: If you truly want a non-realistic character, you might not use a MetaHuman for it at all. Import a chibi or cartoon model to stand alongside your MetaHumans, or swap a MetaHuman’s skin material for flat shading and cartoon textures.

- Future MetaHuman Stylization: Epic has hinted at more stylized MetaHumans in the future. Until new stylized presets arrive, the methods above are the practical route, so keep an eye on each MetaHuman release.
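Squash-and-stretch is usually done with volume preservation: when a part stretches along one axis it thins along the other two, so it looks elastic rather than inflated. A minimal sketch of that math in plain Python; the impact-curve values are made up, and applying the result assumes your rig lets you key scale on the bone or control in question (for example in Control Rig).

```python
import math

def squash_stretch_scale(stretch: float) -> tuple[float, float, float]:
    """(x, y, z) scale that stretches along z while keeping volume (x*y*z) at 1."""
    if stretch <= 0:
        raise ValueError("stretch must be positive")
    side = 1.0 / math.sqrt(stretch)  # thin out sideways as the part lengthens
    return (side, side, stretch)

# Hypothetical impact curve, one value per frame: squash on landing, overshoot, settle.
impact_curve = [1.0, 0.6, 1.3, 0.9, 1.0]
keys = [squash_stretch_scale(s) for s in impact_curve]
for frame, (x, y, z) in enumerate(keys):
    print(f"frame {frame}: scale=({x:.2f}, {y:.2f}, {z:.2f})")
```

A stretch of 4 gives `(0.5, 0.5, 4.0)`: the part is four times longer and half as wide on each side, so its volume is unchanged.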

To cartoonify a MetaHuman, exaggerate motions, tweak proportions, or apply stylized rendering, creating a humorous, meme-ready caricature.
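Most cel shaders come down to one step: quantizing a smooth lighting value into a few flat bands. The sketch below shows only that banding math in plain Python; in Unreal the same idea would be built in a material (the Floor node performs the same rounding), and how you obtain the lighting term depends on the toon-shading setup you choose.

```python
def toon_band(n_dot_l: float, bands: int = 3) -> float:
    """Snap a diffuse lighting term to one of `bands` flat levels between 0 and 1."""
    if bands < 2:
        raise ValueError("need at least two bands")
    x = min(max(n_dot_l, 0.0), 1.0)          # clamp, like saturate() in a shader
    level = min(int(x * bands), bands - 1)   # which band the value falls into
    return level / (bands - 1)               # evenly spaced flat shades

print([toon_band(v) for v in (0.1, 0.5, 0.9)])  # [0.0, 0.5, 1.0]
```

Fewer bands read as more cartoonish; two bands give a stark comic-book look, while three or four keep some sense of form on a MetaHuman face.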

What lighting and camera setups work best for meme videos in Unreal Engine?

Lighting and camera setups for meme videos in Unreal Engine aim to ensure clarity on small screens while amplifying comedy through stylized or parodic effects. Bright, even lighting with 3-point setups highlights MetaHuman expressions, while dramatic or colored lighting adds humor, like underlighting for an evil look. Camera techniques, such as wide-angle lenses or snap zooms, exaggerate features and mimic meme editing styles. Sequencer enables dynamic camera moves and reaction shots, enhancing comedic timing. Testing exports on phones ensures visual gags remain effective despite compression.

Here are some tips on lighting and camera setups that work well:

- Bright, Even Lighting for Clarity: If your priority is that viewers on small phone screens instantly see what’s happening, a bright, evenly lit scene is safest. Use key and fill lights to keep expressions visible and a back light to separate the character from the background, much like the ring-light look common on TikTok.

- Cartoonish/Dramatic Lighting for Effect: Sometimes stereotypical “dramatic” lighting is part of the joke. Underlight a face for an evil or unhinged expression, or use bright high-key lighting ironically, such as a heavenly glow on a mundane moment. Exaggerated lighting in a silly situation heightens the humor.

- Colored Lighting and Neon Vibes: Many Skibidi videos feature a chaotic rainbow of police lights, explosions, and similar effects. Flashing red and blue lights, with their colors animated in Sequencer, add energy and match Skibidi’s chaotic style.

- Background vs Foreground Separation: Make sure your character pops from the background. A rim light outlines the character and depth of field blurs the background, focusing attention on the MetaHuman and improving readability on small screens.

- Camera Angles for Comedy: Camera choice matters in comedy. A wide-angle lens close to the face distorts features for a GoPro-style look, while wider shots capture the environment for physical comedy.

- Camera Motion: Quick whip pans and snap zooms are common in meme edits. Animate them in Sequencer, and add a shaky handheld feel with camera shake or noise to amplify the punchline.

- Multiple Cameras for Reaction Shots: In memes, reaction shots are gold. Use wide shots to establish the scene, then cut to close-ups of shocked expressions; showing several characters’ reactions sharpens comedic timing.

- Parody Cinematic Styles: If your meme references a known format, mimic its camera style: static shots for a newscast parody, Dutch angles for anime-style drama, or filmic moves for mock-epic moments. Matching the format helps viewers recognize the joke.

- High Frame Rate Camera for Slow-mo: Memes often use slow motion for emphasis. Render slow-motion segments at a higher frame rate than your playback rate, or use time dilation to slow the action so you get extra in-between frames, and consider dramatic lighting to sell the moment.

- Environment Lighting: Outdoors, Unreal’s Sky Atmosphere and sun provide realistic lighting, and adjusting the sun angle can create comic shadows. Indoors, use practical lights for believability, adding invisible fill lights wherever faces need more clarity.

- Avoiding Overly Dark or Overexposed Shots: Social media apps compress uploads heavily, and dark footage often comes out blocky and artifacted. Check the histogram for balanced exposure, avoid crushed shadows and clipped highlights, and make sure facial details stay clear.

- Use Emphasis Lighting: A spotlight works like theatrical emphasis. Animate one to switch on at the punchline and highlight the character, directing the viewer’s eye to the key moment.
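The lens and slow-motion advice above both reduce to simple arithmetic. Unreal’s Cine Camera exposes a focal length and a filmback (sensor) width; the sketch below, assuming an ideal pinhole camera without lens distortion and a 36 mm sensor width chosen purely for illustration, converts focal length to horizontal field of view and computes how much slower a high-frame-rate render plays back.

```python
import math

def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

def slowdown_factor(render_fps: float, playback_fps: float) -> float:
    """How many times slower footage plays when rendered at one rate and played at another."""
    return render_fps / playback_fps

for focal in (12, 18, 35, 85):  # shorter lens = wider view = more distortion up close
    print(f"{focal} mm on a 36 mm sensor: {horizontal_fov_deg(36, focal):.1f} degrees")
print(slowdown_factor(120, 30))  # 4.0
```

An 18 mm lens on a 36 mm-wide sensor gives exactly a 90-degree view, wide enough to get right up to a MetaHuman’s face; rendering at 120 fps and playing at 30 fps slows the moment four times.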

Use well-lit setups and dynamic camera work to highlight comedy or parody styles, testing on phones to ensure gags read well.
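Before uploading, you can check exported frames for crushed shadows and clipped highlights, the two problems that compression makes worse. A minimal sketch in plain Python, assuming 8-bit RGB pixel tuples and Rec. 709 luma coefficients; the 5 and 250 thresholds are arbitrary starting points, not a standard.

```python
def rec709_luma(r: float, g: float, b: float) -> float:
    """Luma from gamma-encoded 0-255 channels using Rec. 709 coefficients."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def clipping_report(pixels, low: float = 5, high: float = 250) -> dict[str, float]:
    """Fraction of pixels with luma at or below `low` (crushed) or at or above `high` (clipped)."""
    lumas = [rec709_luma(*p) for p in pixels]
    if not lumas:
        raise ValueError("no pixels")
    n = len(lumas)
    return {
        "crushed_shadows": sum(v <= low for v in lumas) / n,
        "clipped_highlights": sum(v >= high for v in lumas) / n,
    }

frame = [(0, 0, 0), (2, 3, 1), (120, 110, 100), (255, 255, 255)]
print(clipping_report(frame))  # {'crushed_shadows': 0.5, 'clipped_highlights': 0.25}
```

To run it on a real render, one option is Pillow: `Image.open(path).convert("RGB").getdata()` yields `(r, g, b)` tuples you can pass straight in. If large fractions come back, especially on the character’s face, brighten or rebalance the shot before exporting.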

Can I use face tracking or webcam input to drive Skibidi-style expressions in Metahuman?

Yes, with some caveats. Here are options:

- Webcam Face Tracking via AI: Community-made solutions use an ordinary webcam for facial capture. Tools built on MediaPipe or OpenCV track facial landmarks, and the data is piped into Live Link in Unreal to animate MetaHumans in real time. This is the most accessible form of facial capture.

- Android Device Face Capture: Some creators have tried Android phone cameras, using ARCore’s basic face mesh data and sending it to Unreal to animate a MetaHuman. The result is less nuanced than ARKit-based capture, but it enables facial tracking on Android.

- VR Headset Tracking: Some VR setups can capture facial movement. The Vive Facial Tracker, for instance, tracks the lower face, and its data can be mapped to avatars in Unreal, combining head and mouth tracking.

- Kinect or Depth Camera: The old Kinect V2 had some face-tracking capability, and depth cameras such as Intel RealSense can also supply facial data to UE5 through plugins. Hardware availability is the main limitation.

- Simpler: Manual real-time puppeteering: If “webcam input” really means controlling expressions without keyframing, you can drive them by hand. Map expressions to the Remote Control app or MIDI sliders and trigger them while watching yourself on a webcam. It is less automated but gives precise control.

- Phone and PC Apps (non-iPhone): Third-party software for Android or PC can also do facial motion capture. Faceware, for example, provides high-quality tracking and integrates with Unreal through a plugin; it is expensive for hobbyists, but the results are professional grade.

- Limitations and Setup: The webcam/MediaPipe route takes setup time and calibration. Its 468 face landmarks must be mapped to the MetaHuman’s blendshapes, and it captures broad expressions while missing subtleties. For cartoonish meme animation, broad is usually enough.

- Example of use: Say you want to lip-sync your MetaHuman to your own voice but have no iPhone. Track your mouth via webcam while you speak, combine the result with audio-driven visemes, and animate blinks manually if needed. The result is passable lip sync.

- Combining with mocap: If you use a mocap suit for the body and want face capture without an iPhone, record body and face simultaneously using multiple Live Link sources. It is technically complex but feasible, and yields full-body meme performances.

- Always Clean Up: Even the best webcam tracking usually needs cleanup or exaggeration afterwards. Bake the live capture into Sequencer, then edit the curves to refine or push expressions; treat the capture as a first pass.
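MediaPipe’s Face Landmarker can output ARKit-style blendshape scores (names such as `jawOpen` and `eyeBlinkLeft`) alongside its landmarks, which is often easier than mapping raw landmarks yourself. Before streaming those scores to Unreal, it helps to exaggerate the broad expressions a webcam captures and smooth out jitter. The sketch below assumes you already have one dict of scores per frame: it does not call MediaPipe, the PascalCase renaming assumes your Live Link receiver expects Live Link Face-style curve names, and the transport into Unreal (OSC, UDP, or a plugin) is left out.

```python
from typing import Dict, Optional

def to_live_link_name(mediapipe_name: str) -> str:
    """'jawOpen' -> 'JawOpen' (assumption: receiver expects ARKit-style PascalCase names)."""
    return mediapipe_name[:1].upper() + mediapipe_name[1:]

class CurveFilter:
    """Exaggerate and smooth per-frame blendshape scores before sending them to Unreal."""

    def __init__(self, alpha: float = 0.5, gains: Optional[Dict[str, float]] = None):
        self.alpha = alpha          # 0..1: higher follows the webcam faster, lower is smoother
        self.gains = gains or {}    # per-curve exaggeration, e.g. {"jawOpen": 1.8}
        self.state: Dict[str, float] = {}

    def update(self, scores: Dict[str, float]) -> Dict[str, float]:
        out = {}
        for name, raw in scores.items():
            # Exaggerate, then clamp back into the 0..1 range blendshapes expect.
            target = min(max(raw * self.gains.get(name, 1.0), 0.0), 1.0)
            prev = self.state.get(name, target)
            smoothed = prev + self.alpha * (target - prev)  # exponential moving average
            self.state[name] = smoothed
            out[to_live_link_name(name)] = smoothed
        return out

f = CurveFilter(alpha=0.5, gains={"jawOpen": 2.0})
first = f.update({"jawOpen": 0.3, "eyeBlinkLeft": 0.8})
second = f.update({"jawOpen": 0.0, "eyeBlinkLeft": 0.8})
print(first)
print(second)
```

The gain doubles the jaw opening for a more cartoonish read, and the smoothing means a sudden drop to zero only halves the value on the next frame. Tune `alpha` per curve if blinks start to look sluggish.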

Webcam or Android face tracking via AI or apps can animate MetaHuman expressions, with community solutions offering accessible alternatives to ARKit.

Where can I find tutorials for meme content creation using Metahuman?

To learn more and get better at creating meme content with MetaHumans, you’ll want to tap into both official resources and the community tutorials. Here are some great places to find guidance, tips, and examples:

- Unreal Engine Official Documentation & Learning Portal: Epic’s official docs have a MetaHuman section with step-by-step guides on animation, Live Link, and Control Rig, and the learning portal offers free online video courses on cinematic techniques you can apply to memes.

- Epic Developer Community Tutorials: The Unreal Engine community site hosts both user-made and official tutorials. Search for MetaHuman animation guides, watch Unreal Engine’s YouTube channel for Sequencer tips, and learn from projects the community shares.

- YouTube (Community Creators): YouTube is arguably the best source for niche tutorials. Search for terms like “MetaHuman TikTok tutorial” or “funny animation”, check channels such as JSFILMZ or William Faucher, and watch short-film guides to learn comedic timing.

- Forums and Reddit: The Unreal Engine Forums are the place for specific questions; post in the MetaHumans or Animation sections. Subreddits such as r/unrealengine or r/metahumans are also good places to find solutions, for example to lip-sync problems.

- Discord Communities: Unreal Engine Discord servers, such as Unreal Slackers, offer real-time help from artists and developers; ask there about MetaHuman meme techniques, mocap, and animation.

- Tutorial for Face/Body capture: If you plan to do mocap, search for tutorials specific to your capture method. Rokoko’s YouTube channel has MetaHuman guides, and many walkthroughs cover the Live Link Face setup.

- Blender to Unreal meme creation: Some content creators share how they make memes in Blender or Source Filmmaker. SFM Skibidi breakdowns are useful for timing, and the staging and comedic principles transfer directly to Unreal.

- Epic’s Sample Projects: Downloading samples such as Lyra Starter Game or City Sample can be educational. Reverse-engineer how City Sample sets up its MetaHuman-based characters and driving, reuse its city as a meme environment, and study its material and Blueprint setups.

- One-off guides on Medium/Blogs: Developers occasionally write blog posts about their experiences. Searching for phrases like “viral TikTok with Unreal” turns up virtual production tips and workflow shortcuts from individual creators.

- Stack Exchange (Unreal questions): For a very specific issue, Game Development Stack Exchange and Stack Overflow have Unreal Engine tags where you may find answers on Sequencer sync problems, render pipeline issues, and other niche technical topics.

Official Unreal resources, YouTube, forums, and community projects provide tutorials for MetaHuman meme creation, covering animation, lighting, and comedic timing.

What are the challenges of combining Metahuman with internet meme formats like Skibidi?

While using MetaHumans in meme videos is powerful, there are a few challenges and caveats to be aware of:

- Uncanny Valley vs. Absurdity: MetaHumans look very real, so stiff animation risks an eerie rather than funny feel. Push the absurdity to match Skibidi’s lowbrow humor, or stylize the character, so the realism serves the comedy instead of landing in the uncanny valley.

- Technical Overhead and Time: Creating a meme video with MetaHumans in UE5 is far more involved than filming on a phone. It requires a decent PC and Unreal knowledge, and a trend can fade before you finish. Templates and mocap help streamline the workflow enough to keep up.

- Learning Curve: For those new to Unreal Engine, Sequencer and Control Rig take time to learn, which can be daunting for creators without a 3D background and makes quick gags harder. Tutorials help flatten the curve.

- Hardware Requirements: MetaHumans are heavy, with high polygon counts and realistic shaders. Lower-end PCs struggle with complex scenes, and slow iteration makes it hard to react quickly; using LODs while editing helps. This is a real barrier compared with phone videos.

- File Sizes and Exports: The content you produce will be large. Project files can run to multiple gigabytes, and high-quality renders upload slowly from a phone, so plan storage and bandwidth before sharing.

- IP and Copyright Considerations: Using MetaHumans and Unreal is fine, but meme formats can carry other people’s IP. Skibidi audio may trigger copyright flags, and fan-made models need appropriate rights. On TikTok, using the platform’s licensed sounds helps avoid monetization issues.

- Maintaining Meme Authenticity: High-end tools risk overshooting the raw charm of meme videos; overly polished visuals can feel like a short film rather than a meme. Add some roughness and mimic meme editing styles to keep a relatable DIY feel.

- Time Sync and Audio in Engine: Unreal’s Sequencer has quirks, and audio sync issues can delay posting. Set frame rates to suit each platform and test renders thoroughly; understanding the pipeline pays off.

- Audience Reception: MetaHumans make a video stand out, which can draw “terrifying yet funny” comments rather than typical meme reactions. Embracing that niche as a 3D meme creator helps shape viewer expectations.

- Updates and Software Changes: Relying on one piece of software means dealing with its updates. An Epic update can break a workflow, and debugging in the middle of a trend is tough, so either lock your engine version for a project or be ready to adapt quickly.
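The file-size and hardware challenges are easy to estimate before committing to settings. A back-of-the-envelope sketch in plain Python; the bitrate, frame rate, and seconds-per-frame values are placeholders rather than measured figures, and real files vary with the encoder and audio track.

```python
def file_size_mb(bitrate_mbps: float, seconds: float) -> float:
    """Approximate size in megabytes (10**6 bytes): megabits per second * seconds / 8."""
    return bitrate_mbps * seconds / 8

def render_minutes(seconds: float, fps: float, seconds_per_frame: float) -> float:
    """Total render time in minutes for a clip rendered frame by frame."""
    return seconds * fps * seconds_per_frame / 60

# Placeholder numbers: a 15-second clip, 20 Mbps export, 30 fps, 10 s per rendered frame.
print(file_size_mb(20, 15))        # 37.5
print(render_minutes(15, 30, 10))  # 75.0
```

Even a short clip is 450 frames at 30 fps, so per-frame render cost dominates: halving the seconds per frame (lower sample counts, fewer MetaHumans in shot) saves far more time than trimming a second of footage.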

Challenges include balancing realism with meme absurdity, managing technical overhead, and maintaining authenticity, but streamlined workflows yield unique, standout content.

FAQ Questions and Answers

- Is MetaHuman creation and use free?

Yes. MetaHuman Creator is free to use with an Epic Games account, and you can use MetaHumans in Unreal Engine for free. Note that the original MetaHuman license required MetaHumans to be used in Unreal-based projects; Epic has since relaxed those terms, so check the current license if you plan to use them elsewhere. For making videos, even monetized YouTube videos, it’s completely free and allowed.

- Do I need a powerful computer to make MetaHuman meme videos?

It helps, but you don’t need a top-of-the-line machine for short clips. A mid-range gaming PC (say, a GTX 1660 or RTX 3060 GPU with 16 GB RAM) can handle a MetaHuman in a simple environment. You might experience some lag while editing, but it’s manageable. Rendering will be slower on lower specs, but still feasible for a 15-second video. If your PC is very old or low-end, use lower quality settings while working and be patient with renders.

- Can I do this if I have zero Unreal Engine experience?

Absolutely, but expect to spend some time learning. Start with basic Unreal tutorials (navigating the viewport, using the timeline), then focus on Sequencer and MetaHumans. Epic’s official YouTube channel and documentation are great for beginners. You don’t need to know game programming; just concentrate on the cinematic tools. Many newcomers have learned Unreal just to make animated content or memes, so it’s doable.

- How long does it take to create a meme video in UE5?

Once you’re up to speed, a simple 15-second meme can take a day or two of work, or even just a few hours, depending on complexity. That includes setting up characters, animating (faster with mocap or existing animations), and rendering. Your first few will take longer while you learn, perhaps a week of tinkering for the very first attempt. With practice and a well-oiled workflow, going from idea to final video can take only a few hours.

- Can I use existing meme audio (like a TikTok sound) in my Unreal video?

Yes. You can import any audio into Unreal and sync your animation to it, but if you plan to post the video online, make sure the audio won’t get you in trouble. For a popular TikTok sound, adding it through the TikTok app is usually fine. On YouTube, be cautious with copyrighted music, even meme remixes. In Unreal, import the sound as a WAV file, Unreal’s standard audio import format, and you’ll have full control over timing.

- My MetaHuman’s mouth isn’t syncing perfectly to audio. What can I do?

Lip sync usually needs some tweaking. Make sure the face animation matches the phonemes. If you use MetaHuman Animator (iPhone capture), try recapturing with more pronounced mouth movement, or check that your audio starts exactly when the animation starts. If you animate manually, fine-tune with Unreal’s Face Control Rig. Also check that your sequence frame rate matches your render frame rate, since a mismatch can cause slight desync. You can always adjust timing by nudging keys a frame or two; for precise sync, some animators deliberately lead the animation 1-2 frames ahead of the sound so it looks correct visually.

- My render looks different from the viewport (or has glitches). How do I fix that?

Make sure you’re rendering with Movie Render Queue (MRQ) and adequate settings. Common differences come from motion blur or anti-aliasing behaving differently in the viewport and the render. To fix this, set them explicitly in MRQ: turn on motion blur if desired, and increase the Temporal Sample Count for smoother results. Flickering can come from ray tracing; try increasing samples or disabling ray tracing for that render. Also review MRQ’s Game Overrides setting, which controls the game mode and quality overrides used while rendering. If hair looks odd, update to the latest engine version or disable the groom’s hair simulation for the short render, since hair can simulate differently when time is not real-time.

- Can I mix MetaHumans with other characters or elements (like 2D animation or green screen)?

Yes, you can composite anything. For example, render your MetaHuman scene against a green background (by placing a green unlit plane behind the character), then overlay it on live-action or other meme footage in a video editor. Or go the other way: import an image or video as a texture in Unreal and put it on a plane behind the MetaHuman. Mixing media can produce very funny results, like a MetaHuman in a SpongeBob cartoon scene. Just keep the framing consistent and make sure you have permission for any external media you use.

- Are there any legal issues using MetaHumans to represent real people or celebrities in memes?

MetaHumans are fictional characters you create, so using them in memes is fine. If you deliberately sculpt one to look like a real celebrity and use it in a derogatory way, that could raise likeness-rights issues, as with any portrayal of a real person. A generic character poses no such issue, but because MetaHumans are so lifelike, avoid inadvertently defaming someone who happens to look similar. For Skibidi-specific content, fan works are widespread, but the creator has enforced copyright against some fan channels, so frame your video clearly as parody or fan tribute; this supports a fair-use argument but does not guarantee protection.

- How can I improve the virality of my Unreal-made meme?

Focus on the content first: timing, humor, relatability. Then use platform tricks: trending music (perhaps layered quietly in the background), captions (many people start watching without sound), and relevant hashtags (#animation #MetaHuman #Skibidi, etc.). A high-quality 3D meme will attract attention, but it still has to land the joke. Post when your target audience is active, and engage with comments; questions like “How did you make this?!” are a chance to drive more interaction and mention that you used Unreal Engine. In short, treat it like any other content: quality plus savvy posting gives you the best chance of going viral.

Conclusion

Combining “Skibidi Brainrot” memes with MetaHumans in Unreal Engine 5 offers immense creative potential, blending viral culture with advanced technology. This guide explained Skibidi’s internet appeal and showed that MetaHumans can recreate its style with UE5’s tools: animating with mocap or keyframes, syncing to meme music, and exporting for TikTok or YouTube. Advanced techniques covered include managing multiple MetaHumans, using templates, and stylizing characters for meme aesthetics, along with lighting and camera techniques, like snap zooms, that enhance humor.

The guide also addressed challenges like the learning curve and hardware demands, with ways to balance creativity against technical constraints. The key is embracing absurdity: with these skills you become the director of your own digital content, able to parody trends or create original skits as internet culture evolves. Experiment freely; the next viral trend could be yours, made with MetaHumans in UE5.

Now go make the internet laugh (or cringe) – using MetaHuman!

